Function Point Analysis - The Emery Scale


Well Hello programming chums

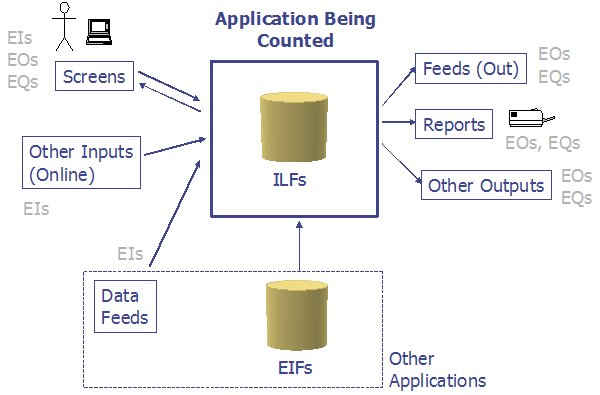

At the European CLA 2015 summit, Sacha Emery presented "Actor and benefits of different frameworks", where he described his journey through various design choices when applied to a standard set of requirements. This segued nicely into various topics that were being discussed throughout the summit, and I also selfishly hijacked it to promote one of my interests.

I'm after finding some way of measuring the complexity of a system. Ideally I would like this to be something tangible, like function points.

Data Functions:

- Internal logical files
- External interface files

Transactional Functions:

- External Inputs
- External Outputs
- External Inquiries
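To make the five component types concrete, here is a minimal sketch of how an unadjusted function point count is usually totalled. The weights are the standard IFPUG low/average/high values rather than anything from this post, and the function name and example component list are made up for illustration.

```python
# Standard IFPUG weights per component type: (low, average, high) complexity.
WEIGHTS = {
    "ILF": (7, 10, 15),  # Internal logical files
    "EIF": (5, 7, 10),   # External interface files
    "EI": (3, 4, 6),     # External inputs
    "EO": (4, 5, 7),     # External outputs
    "EQ": (3, 4, 6),     # External inquiries
}
LEVELS = {"low": 0, "average": 1, "high": 2}


def unadjusted_function_points(components):
    """Sum the weights of (kind, level) pairs, e.g. ("EI", "low")."""
    return sum(WEIGHTS[kind][LEVELS[level]] for kind, level in components)


# Hypothetical system: the component list is invented for this example.
example = [
    ("ILF", "low"),
    ("EIF", "average"),
    ("EI", "low"),
    ("EI", "high"),
    ("EO", "average"),
    ("EQ", "low"),
]
total = unadjusted_function_points(example)
print(total)  # 7 + 7 + 3 + 6 + 5 + 3 = 31
```

The arithmetic is the easy part; the hard part is classifying what counts as each component in the first place.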

The complex problem is how to identify these items within a LabVIEW Block Diagram (I haven't given up on this).

One of the advantages of function points is that you can get them from requirements and use them for estimation. My primary interest is not estimation though; I want a common measure of the complexity of a project so we can better assess and discuss our design choices. The disadvantage is that it is hard work and takes a lot of time to undertake a study.

So how does Sacha's presentation link to this? Like all good test engineers, I like my processes to be calibrated, and I think this might be the perfect tool to do this. Here's how we think it will work (and I say we because this is based on conversations with Jack Dunaway, Sacha and various other luminaries).

1. We have a common set of requirements.
2. We apply a framework/design/architecture to provide a solution.
3. We tag all the project items that are original items.
4. We analyze all the items that are in the problem domain (i.e. separate the solution from the architecture).
5. We apply a change/addition in requirements.
6. We analyze the additional items that have been generated to satisfy the change.

This should give us several numbers:

- Items in Basic Architecture (A)
- Items to Solve the Problem (B)
- Items to Make an Addition (C)*

And this is what we call the Emery scale.
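As a rough sketch of the bookkeeping, assuming each project item has already been tagged by hand, the three numbers fall out of a simple tally. The tag names, item names and the `emery_scale` function are hypothetical, invented for this example.

```python
from collections import Counter


def emery_scale(items):
    """Tally tagged project items into the three numbers A, B and C.

    items: iterable of (item_name, tag) pairs, where tag is one of
    "architecture", "problem" or "addition" (hypothetical tag names).
    """
    counts = Counter(tag for _, tag in items)
    return {
        "A": counts["architecture"],  # items in basic architecture
        "B": counts["problem"],       # items to solve the problem
        "C": counts["addition"],      # items to make an addition
    }


# Made-up project items, purely for illustration.
project = [
    ("Message Queue.lvlib", "architecture"),
    ("Launcher.vi", "architecture"),
    ("Read Sensor.vi", "problem"),
    ("Scale Reading.vi", "problem"),
    ("Log To File.vi", "problem"),
    ("Alarm Threshold.vi", "addition"),
]
scale = emery_scale(project)
print(scale)  # {'A': 2, 'B': 3, 'C': 1}
```

Running the same tally over solutions built with different frameworks against the same requirements is what would make the numbers comparable.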

The preconception is that generally the code to solve the problem should be pretty repeatable/similar.

So, dumping Function Points for the time being and going back to a simpler idea (care of Mr Dunaway), we should tag the architectural parts and count the nodes using block diagram scripting. As Chris Relf has stated, there is some good data to be had from LOC (lines of code) comparisons in similar domains.

And what use is all this work? For one, it should give us an estimate of the number of VIs our design will have on a given target (the ratio of B:C(#requirements) should give us a size). This is an essential bit of design information.
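Here is a sketch of what could be done with the node counts once they exist. It assumes some scripting step (not shown here) has already produced a node count per VI, and that the architectural VIs have been tagged; the VI names, numbers and the `nodes_by_category` function are all invented for illustration.

```python
def nodes_by_category(vi_nodes, architectural):
    """Split total node counts between architecture and problem-domain code.

    vi_nodes: dict mapping VI name -> node count (assumed to be exported
    by a separate block diagram scripting tool).
    architectural: set of VI names tagged as part of the architecture.
    """
    totals = {"architecture": 0, "problem": 0}
    for vi, node_count in vi_nodes.items():
        key = "architecture" if vi in architectural else "problem"
        totals[key] += node_count
    return totals


# Hypothetical export, purely for illustration.
vi_nodes = {
    "Main.vi": 120,
    "Message Queue.vi": 45,
    "Actor Core.vi": 80,
    "Log Temperature.vi": 60,
}
architectural = {"Message Queue.vi", "Actor Core.vi"}

totals = nodes_by_category(vi_nodes, architectural)
print(totals)  # {'architecture': 125, 'problem': 180}
```

Counting nodes rather than items gives a LOC-like measure, so large and small VIs are not weighted equally.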

It's early stages and a long-term project. It could be part of my CS thesis if I was doing one...

This is only one potential benefit of the kind of baselining that Sacha has started (from an educational perspective, seeing the same problem solved with different frameworks will be invaluable).

Thoughts appreciated

Love Steve

*A late addition to give us some concept of how a change affects the project

