Preallocate array memory without initializing it

Solved! 11-07-2019 05:21 AM

Wow thanks. Very useful.

One thing that maybe I haven't understood:

A Functional Global on the other hand, is not by wire. It's a VI, and there's nothing to refer to it. As a consequence, you can't reuse the FG for different purposes. If you want to reuse it, you need to save a copy (which is usually a bad thing).

Do you mean that it is a bad thing to create a preallocated clone of the FGV and forward its reference wire?

11-07-2019 05:38 AM

@EMCCi wrote:

Wow thanks. Very useful.

One thing that maybe I haven't understood:

A Functional Global on the other hand, is not by wire. It's a VI, and there's nothing to refer to it. As a consequence, you can't reuse the FG for different purposes. If you want to reuse it, you need to save a copy (which is usually a bad thing).

Do you mean that it is a bad thing to create a preallocated clone of the FGV and forward its reference wire?

That would work, technically.

But it's sooo complicated (to make and to work with), compared to a wire\value, or even a wire\ref, solution.

When the main stops, you either a) have no more clones->no more data->no more debugging, or b) have dangling clones that are difficult to close.

11-07-2019 05:53 AM

Another analogy is that by-value is having the data on a piece of paper in your hand, while with by-reference the data is written on a whiteboard somewhere and you know where that whiteboard is, and can go look at it when you want (as can other people who know that whiteboard).

11-07-2019 06:15 AM

Ah, just remembered this...

Even if I want a singleton, I usually try to make it by wire!

This is very inconvenient: you need to pass the wire through all the code. Why would you want to do this?

Well, it forces me to think about coupling. Or decoupling, actually. If at some point I have 30 modules that are potentially reusable, I don't like to find out I've put singletons into other modules. If you make, for instance, a log singleton, it simply does not belong inside a completely unrelated module A. Even if module A needs to log, the log singleton does not belong inside module A. Failing to decouple the two will eventually make one big clutter of code: code dedicated to that single application, and not reusable. Potentially, of course, but it tends to happen at least to some degree.

11-07-2019 12:48 PM

I misunderstood some things before, maybe I still misunderstand some things. Plunging ahead anyway...

1. The "real-time" aspect of this app isn't very demanding. Accumulating an array of 86 values once a second for an hour (3600 seconds) is pretty trivial.

2. You state that you then can flush this buffer and do some kind of 1 hour averaging analysis that you store for some further historical trending purpose. Again, no biggie.

3. You then kinda lost me with the following:

Then also I have to store the analyzed data. For example, storing the 1h averages from 1 year ago. This will be a 87600×86 buffer that when the first year is reached, the oldest value has to be removed from one extreme and the new added at the other one.

First, there are 24*365=8760 hours in a year, not 87600, so you've overestimated your buffer size needs by 10x. Second, this sounds like the kind of thing you only need to handle once per hour. Again, I don't see why performance needs to be such a big concern.

Third, you describe this as though the program needs to build up this "1 year of hourly averages" buffer in memory by running continuously for a year or more. That seems like a very unsafe approach. While an actual database would be better, you could pretty easily handle this via something as simple as ASCII files.

I would imagine laying out a combo of file naming convention and folder structure first. The "real-time" part of your program would store average data once per hour. At program startup, I'd go find the relevant files and fill my lossy circular buffer with the most recent 1 year of hourly data. An hour later, I'd replace the oldest with my newest. Etc.
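As a rough text-language sketch of that "fill at startup, then replace the oldest" idea (Python, not LabVIEW): a `collections.deque` with `maxlen` behaves like a lossy circular buffer. The `history` list below is a hypothetical stand-in for rows read back from files; the file layout itself is not shown.

```python
from collections import deque

HOURS_PER_YEAR = 24 * 365   # 8760 rows of hourly averages
CHANNELS = 86

def make_year_buffer(stored_rows):
    """Seed a lossy buffer with the most recent year of hourly rows."""
    buf = deque(maxlen=HOURS_PER_YEAR)
    buf.extend(stored_rows)  # if more than a year is supplied, only the newest rows survive
    return buf

# Hypothetical stand-in for rows loaded from files at program startup.
history = [[float(h)] * CHANNELS for h in range(HOURS_PER_YEAR + 10)]
year = make_year_buffer(history)

# An hour later: the newest row goes in, the oldest drops out automatically.
year.append([9999.0] * CHANNELS)
```

The buffer never grows past one year of rows, and a restart only needs the stored files to rebuild it.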

On another note, I very much agree with the following comments from wiebe about your use of VI server and cloned FGV's.

@EMCCi wrote:

Do you mean that it is a bad thing to create a preallocated clone of the FGV and forward its reference wire?

wiebe@CARYA wrote:

That would work, technically... But it's sooo complicated (to make and to work with), compared to a wire\value, or even a wire\ref, solution.

It just seems like complexity without corresponding payoff. It sounds like you need no more than 2 distinct buffers -- one to accumulate every second for up to an hour, one to update every hour to hold exactly 1 year worth of hourly averages. From where I sit, it seems both could be handled pretty directly with queues without the FGV layer.

If you're really intent on managing 2 distinct buffers with 1 common set of code, you might take this as an opportunity to try LVOOP. What you've coded to handle reentrant clones with VI Server is more complicated than what it'd take to make a class-based implementation. Much of the wiring would probably look just about the same with object wires in place of the VI refs.

-Kevin P

11-08-2019 08:14 AM - edited 11-08-2019 08:15 AM

Hello everybody again. First of all thanks everybody for your answers.

<context>

Then, a little more context: yes, our application isn't very demanding. But don't be so literal, I have only shown an example. We have 5 buffers: 1) 1s period / 12 min range; 2) 2min / 1day; 3) 15min / 1 week; 4) 1h / 1month; 12h / 1year. So right now the biggest buffer will be the temporary buffer that stores the incoming 1-second data for 12 h, which is then flushed and averaged to create a new point for the year buffer. But this is only for now; if everything goes well, we can increase the resolution and range of the buffers. It's not a closed project with fixed requirements.

On the analysis side, we obviously compute averages, but also histograms and percentiles. Again, if everything goes well, this will grow in the near future, so we try to develop the code with this in mind.

The buffer code is also used in other applications where performance matters. But why are we concerned about performance here, if we receive one packet every second from each machine? Because when we upgrade the analysis code to include new calculations, we have to reprocess the old raw data (stored in a DB) to obtain the new results. We store the raw data precisely for this: to be able to upgrade the analysis code and recompute the processed data since the beginning of the application. Right now we have almost 100M data buckets (1 year of acquisition from 2/3 machines), so reprocessing all of it is a heavy job, and that's one of the reasons why we try to optimize. It is also why we are trying to avoid the queue approach (the current implementation) in the new version: it returns the data in the "wrong format", and we lose time on a useless conversion, because the data is inherently a 2D array.

Then, why do we have these buffers in RAM? One of the destinations of this data is a web display, and latency is lower from RAM than from the DB. We also want to avoid creating a DB for each new buffer and writing both the raw and the processed data.

</context>

<question>

I have uploaded my progress on the circular buffer code (hope no missing VI now). It works good for me and faster than older code based on queues. I haven't used the in place element structure nor DVR because I think that isn't needed to avoid memory reallocation but I want your opinion. I have keeped the preallocated clone FGV scheme, because it was the template that I already had and because maybe the analysis could be integrated inside the buffer code and make some type of action engine with methods over the buffer data (sort, mean, histogram, percentiles, power spectrum...).

I have some doubts on how to process the buffered data without making copies of it. When I do the read or flush operations, I create o copy of the data that starts on the right index with the right length. I don't know if this could be done without making a data copy.

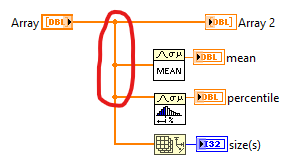

Also for the processing, does this forks create a data copy? If yes, how we could avoid it?

In my mind, you don't need to create a copy of the data calculate the mean or the size of the array, but I don't know how it's managed. Could help implement the processing code inside the "buffer action engine" to avoid the data copy?

</question>

Thanks for your time,

EMCCi

11-08-2019 09:46 AM

@EMCCi wrote:

When I do the read or flush operations, I create a copy of the data that starts at the right index with the right length. I don't know if this could be done without making a data copy.

Getting an array subset (right index, right length) does not copy data.

Most likely, it will create a (sub)array object (internally, you won't be able to tell). That's basically a header that contains subset info and a pointer to the original data. Reverse, transpose, and other functions do this too. All to avoid copies. Of course, once the data is modified somewhere, a copy will be made anyway.

This is very convenient if you don't care too much. It can be hard to understand though. Benchmarking a transpose on a large 2D array will show it can transpose very very fast. But in practice, the price comes later, for instance when autoindexing the transposed array in a for loop.
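For readers more used to text languages, here is a loose analogy in Python of "a header with subset info and a pointer to the original data". It is only an analogy and says nothing about LabVIEW's internals. In particular, a Python `memoryview` keeps sharing the data after a write, whereas LabVIEW would make a copy at that point.

```python
from array import array

data = array("d", range(10))   # one contiguous buffer of doubles
view = memoryview(data)[3:7]   # subset (start 3, length 4): no element data copied
copy = data[3:7]               # slicing the array itself allocates a new array

data[3] = 99.0                 # modify the original buffer

seen_by_view = view[0]         # the view points at the original data
seen_by_copy = copy[0]         # the copy kept the old value
```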

I'd make a benchmark test VI that uses all the functionality in a realistic manner. Then you can make changes and, somewhat objectively, know what is efficient and what is not. Somewhat objectively, because saving, restarting, and making changes seem to ruin the objectivity quite a bit. If you want to know for sure, make changes, restart LabVIEW, dismiss the first run, then run a few hundred times and take the average.
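The same recipe (dismiss the first run, repeat many times, average) can be sketched in a text language. This is a Python illustration of the method only; `benchmark` and `read_subset` are hypothetical names, and the timings say nothing about LabVIEW.

```python
import time

def benchmark(func, runs=200):
    """Return the average run time of func in seconds, ignoring one warm-up call."""
    func()                                # first run is dismissed
    start = time.perf_counter()
    for _ in range(runs):
        func()
    return (time.perf_counter() - start) / runs

def read_subset():
    """Hypothetical workload: take a subset from a larger array."""
    data = list(range(100_000))
    return data[1000:5000]

average_seconds = benchmark(read_subset)
print(f"average per run: {average_seconds * 1e6:.1f} us")
```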

11-08-2019 09:56 AM

@EMCCi wrote:

In my mind, you don't need to create a copy of the data to calculate the mean or the size of the array, but I don't know how it's managed. Would it help to implement the processing code inside the "buffer action engine" to avoid the data copy?

Probably.

If you get the data from the FGV, a copy will probably be unavoidable. Processing inside it will help.

However, the more you put in the FGV, the more time you waste waiting for the FGV to finish other functions. A given instance of the FGV cannot execute twice at the same time; callers will stall until it's done. So you won't be able to add data to the FGV until it is done processing. That might haunt you.

I guess that is unavoidable: you either have copies and parallel processing, or 1 set of data and forced synchronisation.

The more functions you add, the more attractive a class becomes. Then you'd have more control over when the data is copied and when not. As long as the wire is in sequence, you won't get a copy. If you want a copy, split the wire and you'll have a 'snapshot'. Simply put all shift registers in the private data, and make a VI for each function.
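A rough text-language sketch of that class idea, in Python rather than LVOOP: the private fields play the role of the shift registers, and each method plays the role of one member VI. `RingBuffer`, `write`, and `column_mean` are hypothetical names chosen for illustration.

```python
class RingBuffer:
    """Fixed-capacity buffer; storage is allocated once and overwritten in place."""

    def __init__(self, capacity, channels):
        # Preallocated storage (the "shift register" data of the action engine).
        self._rows = [[0.0] * channels for _ in range(capacity)]
        self._capacity = capacity
        self._next = 0    # index the next write goes to
        self._count = 0   # number of valid rows so far

    def write(self, row):
        self._rows[self._next][:] = row   # overwrite an existing row in place
        self._next = (self._next + 1) % self._capacity
        self._count = min(self._count + 1, self._capacity)

    def column_mean(self, channel):
        """Processing lives next to the data, so no buffer copy is handed out."""
        if self._count == 0:
            raise ValueError("buffer is empty")
        total = sum(self._rows[i][channel] for i in range(self._count))
        return total / self._count

# Two independent buffers are simply two objects of the same class.
seconds = RingBuffer(capacity=3, channels=2)
hours = RingBuffer(capacity=2, channels=2)
for row in ([1.0, 10.0], [2.0, 20.0], [3.0, 30.0], [4.0, 40.0]):
    seconds.write(row)    # the fourth write overwrites the oldest row
hours.write([seconds.column_mean(0), seconds.column_mean(1)])
```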

11-08-2019 10:10 AM - edited 11-08-2019 10:16 AM

I still can't shake the feeling that the problems you *need* to solve aren't quite the same as the ones you're *aiming* to solve. Granted, that's easy to say from over here in the cheap seats.

I have a lot of scattered thoughts and comments, here goes.

1. You mentioned having 5 buffers and then listed 4. Their periods and ranges don't really mesh together cleanly, i.e., the range from one does not become the period for another. This complicates things significantly, and may not offer enough payback to be worthwhile.

2. You also stated that this isn't a "closed project with fixed requirements." So it seems to me that if you need the ability to construct somewhat arbitrary groupings of period and range, you'll need to start by planning to store ALL raw data at 1-second intervals in perpetuity.

All the business of setting up the right set of buffers will depend on what periods and ranges are asked for. So to be fully flexible, the program will probably need access to all raw data ever.

3. This is especially true as you now reveal the much-expanded scope of the data analysis. It's not just hourly averages for a year at a time. It's also histograms, percentile calcs, and other not-yet-thought-of methods of analysis.

For some kinds of analysis (like averages), you could store (for example) only the *result* from averaging the 3600 samples each hour, then later average the 8760 hourly averages for a valid yearly average.

Other kinds of analysis simply won't allow for that kind of simplification. This includes *any* kind of future analysis you don't know about yet, and thus aren't saving intermediate results for.

4. At some point, someone's gotta take a big picture view on this thing and accept some bounds and constraints. You can do some buffering to provide fast results for web display. But you'll have to limit the choice of which results can be presented more-or-less instantaneously.

If you're going to support arbitrary future usage, users must accept that some kinds of analysis will take some time to perform.

5. Having said all that, the desire to maintain multiple distinct buffers *does* make more sense of your cloned FGV (a.k.a. "Action Engine"). The AE structure may be helpful as you put data processing functions inside the AE to prevent making copies of the data buffer it holds.

FWIW, the same would hold true with an LVOOP implementation. The AE shift register corresponds to the private data of the class. Each Action case corresponds to a class member function that can access and operate on the class data. Separate buffers arise by simply dropping multiple objects of that class down onto your diagram. Identifying the distinct buffers (such as during debug) becomes much more straightforward -- just follow the object wire.
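To make point 3 above concrete, here is a small Python sketch with made-up numbers (three "hours" of four samples each). With equally sized chunks, the mean of the per-chunk means matches the overall mean, but the same shortcut fails for the median, which stands in here for percentile-style analysis.

```python
from statistics import mean, median

# Hypothetical data: 3 "hours" with 4 samples each.
hours = [[0, 0, 0, 8], [4, 4, 4, 4], [0, 0, 0, 12]]
all_samples = [sample for hour in hours for sample in hour]

# Averages can be combined from stored intermediate results...
mean_of_means = mean([mean(hour) for hour in hours])
overall_mean = mean(all_samples)

# ...but medians (and percentiles in general) cannot.
median_of_medians = median([median(hour) for hour in hours])
overall_median = median(all_samples)

print(mean_of_means, overall_mean)        # these agree
print(median_of_medians, overall_median)  # these differ
```

Note that the mean shortcut relies on every chunk holding the same number of samples; with unequal chunks, each stored average would need its sample count as a weight.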

-Kevin P

11-08-2019 10:22 AM

@EMCCi wrote:

Then, why do we have these buffers in RAM? One of the destinations of this data is a web display, and latency is lower from RAM than from the DB. We also want to avoid creating a DB for each new buffer and writing both the raw and the processed data.

Just as an aside, if you were asking me how to design your application, rather than how to design a buffer, I would have already brought up the idea of doing analysis in a database (I often use SQLite, but also MySQL or PostgreSQL) and only bringing over reduced (averaged/decimated) subsets for display (one can't see more than about 5000 points on a graph anyway). Note that your database could store pre-calculated averages at various timespans.
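A minimal sketch of what "doing the analysis in the database" could look like, using Python's built-in `sqlite3` module and an in-memory database. The table name `raw`, its columns, and the synthetic data are assumptions for illustration, not the poster's actual schema.

```python
import sqlite3

con = sqlite3.connect(":memory:")
# Hypothetical schema: one row per 1-second sample per channel.
con.execute("CREATE TABLE raw (ts INTEGER, channel INTEGER, value REAL)")

# Two hours of synthetic 1-second samples for channel 0.
rows = [(t, 0, float(t % 10)) for t in range(7200)]
con.executemany("INSERT INTO raw VALUES (?, ?, ?)", rows)

# Let the database reduce the data; only the hourly averages come back.
hourly = con.execute(
    "SELECT ts / 3600 AS hour, AVG(value) FROM raw "
    "WHERE channel = ? GROUP BY hour ORDER BY hour",
    (0,),
).fetchall()
print(hourly)
```

Only the reduced result crosses over to the application, which fits the point about displaying a few thousand points at most.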