Preallocate array memory without initializing it

Solved! 11-08-2019 10:40 AM


Hello everybody,

Getting an array subset (right index, right length) does not copy data.

Most likely, it will create a (sub)array object (internally, you won't be able to tell). That's basically a header that contains subset info and a pointer to the original data. Reverse, transpose, and other functions do this too. All to avoid copies. Of course, once the data is modified somewhere, a copy will be made anyway.

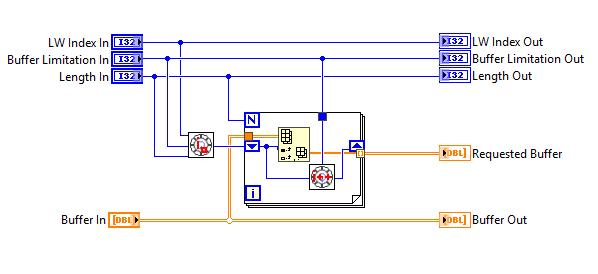

That's very interesting. How could I get the array subset of a circular array without making a copy? For example, if you have an array of size = 10 where the content starts at index = 8 and has a length = 5, the data wraps past the end of the array, so you can't get the desired data with a single subset. I have done it like this, but I don't know if it can be done better:
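To make the wrap-around problem concrete, here is a minimal Python sketch (not LabVIEW, and not the code from the original post). The helper names `circular_segments` and `read_circular` are hypothetical. It shows that a wrapped read needs two separate pieces, which is why a single subset cannot return it without a copy.

```python
def circular_segments(capacity, start, length):
    """Return the (offset, count) pieces that cover a read of `length`
    elements beginning at `start` in a circular buffer of `capacity`."""
    if not 0 <= start < capacity or not 0 <= length <= capacity:
        raise ValueError("start or length out of range")
    first = min(length, capacity - start)  # elements before the physical end
    segments = [(start, first)]
    if length > first:                     # the rest wraps to index 0
        segments.append((0, length - first))
    return segments


def read_circular(buffer, start, length):
    """Copy the logical subset out of the buffer, oldest element first."""
    out = []
    for offset, count in circular_segments(len(buffer), start, length):
        out.extend(buffer[offset:offset + count])
    return out


buffer = list(range(10))
print(circular_segments(10, 8, 5))  # [(8, 2), (0, 3)]
print(read_circular(buffer, 8, 5))  # [8, 9, 0, 1, 2]
```

With size 10, start 8, and length 5, the data lives in indices 8 to 9 and 0 to 2, so joining the two pieces forces a new allocation.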

1. You mentioned having 5 buffers and then listed 4. Their periods and ranges don't really mesh together cleanly, i.e., the range from one does not become the period for another. This complicates things significantly, and may not offer enough payback to be worthwhile.

There are 5; I only left out the number of the fifth. It would be easier if the range of one buffer were the period of the next, but we think this setup is the best for interpreting the results. Plots with periods of 1 day or 1 week have too low a resolution.

2. You also stated that this isn't a "closed project with fixed requirements." So it seems to me that if you need the ability to construct somewhat arbitrary groupings of period and range, you'll need to start by planning to store ALL raw data at 1-second intervals in perpetuity.

All the business of setting up the right set of buffers will depend on what periods and ranges are asked for. So to be fully flexible, the program will probably need access to all raw data ever.

That's what we are doing, and that's why we need performance: to reprocess all the raw data. The same goes for point 4. Not all data is already calculated in RAM. If the user wants a weekly buffer that is not the most recent one, it will have to be processed.

I know nothing about LVOOP. I don't know how much time it would take to learn or how much the code could improve, so it's difficult to decide to go for it now without knowing the payoff. Maybe in the next version.

Just as an aside, if you were asking me how to design your application, rather than how to design a buffer, I would have already brought up the idea of doing analysis in a database (I often use SQLite, but also MySQL or PostgreSQL) and only bringing over reduced (averaged/decimated) subsets for display (one can't see more than about 5000 points on a graph anyway). Note that your database could store pre-calculated averages at various timespans

Probably. But we know very little about databases and MySQL, just the bare minimum to write to one from LabVIEW and recover the data. We write the data with a timestamp index column and the flattened data as binary in the other column.

Thanks for your time! You are helping a lot!

EMCCi

11-08-2019 11:31 AM


How could I get the array subset of a circular array without making a copy?

Once upon a time I thought I was being clever with the following technique. It costs you having to double your buffer size and write time. The payback is instant subarrays with no reconstruction at read time. How those trade off is situation-dependent.

Here comes the technique. Let's suppose you *need* 3600 rows and 86 columns. Make a buffer with 7200 rows and 86 columns. Each time you receive a new row, write it to both row # i and also to row # (i+3600). This is the wasteful part, the writing and storage.

When it comes time to *read*, you can directly extract a contiguous 2D subarray if you start your read from the 1st half of the rows (rows 0 - 3599).

Dunno if that'll help you, but it's one way to *try* to optimize read speed, albeit at the expense of storage space.
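The double-write idea can be sketched in a few lines of Python. This is an illustration under assumptions, not the LabVIEW implementation: the class name `MirroredRingBuffer` and its methods are hypothetical, and a Python list slice is itself a shallow copy, so the sketch only demonstrates that one contiguous index range always covers the valid data.

```python
class MirroredRingBuffer:
    """Ring buffer of `rows` rows stored twice, in 2 * rows physical rows."""

    def __init__(self, rows, cols):
        self.rows = rows
        self.data = [[0.0] * cols for _ in range(2 * rows)]
        self.next = 0    # next write slot, always in the first half
        self.count = 0   # number of valid rows, at most `rows`

    def write(self, row):
        i = self.next
        self.data[i] = list(row)              # write to row i ...
        self.data[i + self.rows] = list(row)  # ... and to row i + rows
        self.next = (i + 1) % self.rows
        self.count = min(self.count + 1, self.rows)

    def read(self):
        """Return the valid rows, oldest first, as one contiguous range."""
        # Once full, the oldest row sits at `next` in the first half, and
        # the mirrored second half supplies the rows that would have wrapped.
        start = self.next if self.count == self.rows else 0
        return self.data[start:start + self.count]


buf = MirroredRingBuffer(rows=3, cols=2)
for k in range(5):
    buf.write([k, k])
print(buf.read())  # [[2, 2], [3, 3], [4, 4]]
```

The read always starts in the first half of the rows, so the requested range never runs past the end of the physical buffer.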

On a separate note, it seems one of your most frequent file access needs is to select and extract arbitrary chunks of data, identified according to a specific range of timestamps. This is a very natural database function. It is not a natural function for a set of binary files.

I suspect that the process of populating buffers from file data is presently your most significant performance limiter. Learning to use some SQL derivative for your data storage and lookup is almost certainly going to pay off.
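As a rough illustration of the timestamp-range lookup, here is a small sketch using Python's built-in `sqlite3` module. The table name `samples`, the column names, and the helper `read_range` are assumptions made for the example, and the payload bytes merely stand in for flattened binary data.

```python
import sqlite3

conn = sqlite3.connect(":memory:")
# Hypothetical schema: an integer timestamp key plus one binary blob per row.
conn.execute("CREATE TABLE samples (ts INTEGER PRIMARY KEY, payload BLOB)")

# Ten fake one-second samples with placeholder payloads.
rows = [(t, bytes([t % 256])) for t in range(1000, 1010)]
conn.executemany("INSERT INTO samples (ts, payload) VALUES (?, ?)", rows)
conn.commit()


def read_range(conn, t_start, t_end):
    """Return (ts, payload) rows with t_start <= ts <= t_end, oldest first."""
    cur = conn.execute(
        "SELECT ts, payload FROM samples WHERE ts BETWEEN ? AND ? ORDER BY ts",
        (t_start, t_end),
    )
    return cur.fetchall()


selected = read_range(conn, 1003, 1006)
print([ts for ts, _ in selected])  # [1003, 1004, 1005, 1006]
```

Because the timestamp is the primary key, the database can locate the range through its index instead of scanning every stored row.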

-Kevin P

11-09-2019 04:02 AM


I'm thinking about an algorithm that would rotate the rows of the 2D array before the read, using the In Place Element Structure and Swap Values. If we rotate the rows until the oldest row sits at index 0, then with the length we could get the valid data with Array Subset, without duplicating data.

Is there an algorithm to rotate the rows of a 2D array by swapping them? I'm trying to figure it out myself but haven't succeeded yet.
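One well-known way to rotate a sequence in place using only swaps is the three-reversal technique. The Python sketch below is only an illustration of that general algorithm; the function names are hypothetical, and it is not a LabVIEW implementation with the In Place Element Structure.

```python
def reverse_rows(rows, lo, hi):
    """Reverse rows[lo..hi] (inclusive) in place by swapping end pairs."""
    while lo < hi:
        rows[lo], rows[hi] = rows[hi], rows[lo]
        lo += 1
        hi -= 1


def rotate_rows_left(rows, k):
    """Rotate in place so the row at index k ends up at index 0."""
    n = len(rows)
    if n == 0:
        return
    k %= n
    reverse_rows(rows, 0, k - 1)  # reverse the first k rows
    reverse_rows(rows, k, n - 1)  # reverse the remaining rows
    reverse_rows(rows, 0, n - 1)  # reverse everything


table = [[0], [1], [2], [3], [4]]
rotate_rows_left(table, 2)
print(table)  # [[2], [3], [4], [0], [1]]
```

This needs no second array, but it still performs roughly one swap per row, so every row is moved on each rotation.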

11-09-2019 05:39 AM


I think rotating the array is going to add at least as much overhead as just copying into a new array.

11-09-2019 01:54 PM - edited 11-09-2019 01:54 PM


I have implemented your solution, Kevin P. It works really well.

The buffer code now runs 5 times faster on average than the queue-based code for reading, writing, and operating on the data.

The general code also runs a lot faster because of the absence of repeated memory reallocation. This is what I was looking for. It seems that learning to program fast in LabVIEW means learning how to avoid data duplication and memory allocation.

You are all invited to a virtual beer 😛

Thanks for your time, best regards,

EMCCi

11-11-2019 03:13 AM


@EMCCi wrote:

It seems that learning to program fast in LabVIEW means learning how to avoid data duplication and memory allocation.

Don't forget algorithms. They're not really the problem in your situation, but good algorithms are key to efficiency. Redesigning an algorithm from O(N) to O(log N) makes all the difference.
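A small Python sketch of that kind of redesign, using a sorted timestamp list as an assumed example: finding the first sample at or after a given time can be done with a linear scan, which is O(N), or with a binary search, which is O(log N). The function names are hypothetical; `bisect_left` comes from Python's standard library.

```python
from bisect import bisect_left


def linear_find(timestamps, target):
    """O(N): scan from the start for the first timestamp >= target."""
    for i, ts in enumerate(timestamps):
        if ts >= target:
            return i
    return len(timestamps)


def binary_find(timestamps, target):
    """O(log N): binary search; requires `timestamps` to be sorted."""
    return bisect_left(timestamps, target)


timestamps = list(range(0, 200_000, 2))  # sorted, one sample every 2 units
target = 150_001
print(linear_find(timestamps, target) == binary_find(timestamps, target))  # True
```

Both functions return the same index, but the scan inspects tens of thousands of entries here while the binary search needs only about 17 comparisons for 100,000 entries.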
