Interpolate Pixel

04-17-2024 01:03 PM

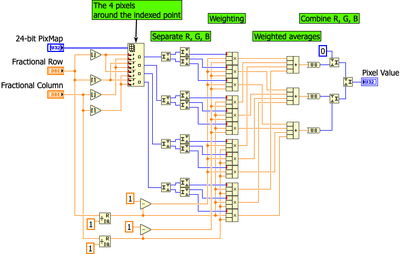

Sometimes I need to interpolate pixels. This is what I came up with.

Any suggestions for improvement?
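For reference, here is a minimal C sketch of single-point bilinear interpolation. It assumes a row-major, single-channel float image; for RGB you would run it once per channel. The name `bilinear_at` and the clamp-to-edge policy are assumptions of this sketch and do not describe the posted VI. Width and height are independent, so non-square images work without changes.

```c
#include <stddef.h>

/* Hypothetical helper: bilinear interpolation of one sample at the
 * fractional position (x, y) in a row-major image of size width x height.
 * Out-of-range coordinates are clamped, so edge pixels are replicated. */
static float bilinear_at(const float *img, size_t width, size_t height,
                         float x, float y)
{
    /* Clamp to [0, width-1] x [0, height-1]. */
    if (x < 0.0f) x = 0.0f;
    if (y < 0.0f) y = 0.0f;
    if (x > (float)(width - 1)) x = (float)(width - 1);
    if (y > (float)(height - 1)) y = (float)(height - 1);

    size_t x0 = (size_t)x, y0 = (size_t)y;         /* top-left neighbour  */
    size_t x1 = (x0 + 1 < width) ? x0 + 1 : x0;     /* right neighbour     */
    size_t y1 = (y0 + 1 < height) ? y0 + 1 : y0;    /* bottom neighbour    */
    float fx = x - (float)x0, fy = y - (float)y0;  /* fractional offsets  */

    /* Interpolate along x on both rows, then along y between the rows. */
    float top    = img[y0 * width + x0] * (1.0f - fx) + img[y0 * width + x1] * fx;
    float bottom = img[y1 * width + x0] * (1.0f - fx) + img[y1 * width + x1] * fx;
    return top * (1.0f - fy) + bottom * fy;
}
```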

04-17-2024 02:44 PM - edited 04-17-2024 03:36 PM

@paul_a_cardinale wrote:

Sometimes I need to interpolate pixels. This is what I came up with.

Any suggestions for improvement?

Improvement in terms of performance, simplification, or something else?

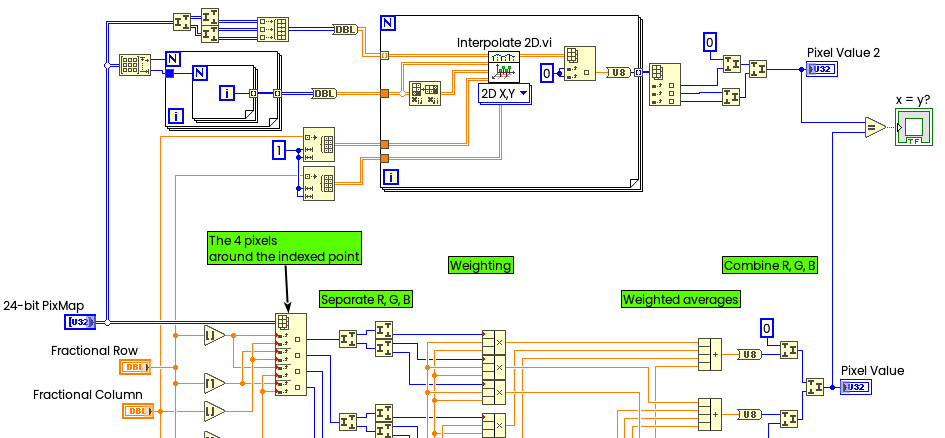

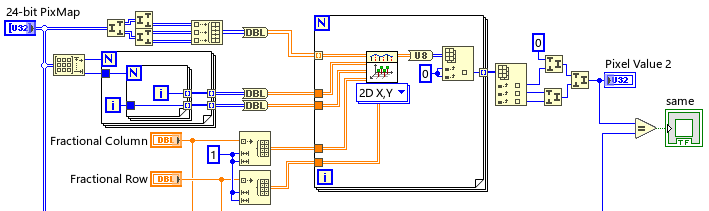

I would suggest something like this:

It should give you almost the same result (up to rounding), but I'm not sure about non-square images (too late to think), so please check for bugs; this is just an idea.

Update:

I guess for non-square images it should be modified like this:

04-17-2024 08:18 PM

@Andrey_Dmitriev wrote:

I would like to suggest something like that:

Runs much much slower than mine.

04-17-2024 11:33 PM - edited 04-17-2024 11:39 PM

@paul_a_cardinale wrote:

Runs much much slower than mine.

Yes, for sure, because this interpolation is not highly optimized (just take a look inside the SubVI). The only advantages here are less code and, in addition, the four interpolation methods offered by Interpolate 2D, including bicubic and spline (I assumed that you had perhaps reinvented the wheel).

From a pure performance point of view, you can probably get a little faster if you wrap the whole code into a DLL. But in this use case (a "single pixel" interpolation), I would not expect a dramatic improvement, because the overhead of the DLL call will eat up most of the gain. It only makes sense if you have many calls in a loop, as in resampling or remapping a large image; then moving that whole loop into a DLL will definitely give you a performance boost. But usually, if you're working with images, you already have library functions such as IMAQ Resample, OpenCV Remap, or similar ones from Intel IPP.
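To illustrate the call-overhead argument, here is a minimal C sketch that moves the whole per-pixel loop behind a single exported call. The function name `resize_bilinear_u8`, the 8-bit grayscale format, and the corner-aligned mapping are all assumptions for illustration. The caller owns both buffers, so nothing is copied; from LabVIEW, arrays like these would typically be passed to a Call Library Function Node as array data pointers.

```c
#include <stdint.h>

#ifdef _WIN32
#define EXPORT __declspec(dllexport)
#else
#define EXPORT
#endif

/* Hypothetical exported function: resizes a row-major 8-bit grayscale image
 * with bilinear interpolation in one call. src and dst are caller-owned. */
EXPORT void resize_bilinear_u8(const uint8_t *src, int sw, int sh,
                               uint8_t *dst, int dw, int dh)
{
    /* Corner-aligned mapping: dst (0,0) -> src (0,0), dst (dw-1,dh-1) -> src (sw-1,sh-1). */
    float sx = (dw > 1) ? (float)(sw - 1) / (float)(dw - 1) : 0.0f;
    float sy = (dh > 1) ? (float)(sh - 1) / (float)(dh - 1) : 0.0f;

    for (int y = 0; y < dh; ++y) {
        float fy = y * sy;
        int y0 = (int)fy;
        int y1 = (y0 + 1 < sh) ? y0 + 1 : y0;
        float wy = fy - (float)y0;                 /* vertical weight */
        for (int x = 0; x < dw; ++x) {
            float fx = x * sx;
            int x0 = (int)fx;
            int x1 = (x0 + 1 < sw) ? x0 + 1 : x0;
            float wx = fx - (float)x0;             /* horizontal weight */
            float top = src[y0 * sw + x0] * (1.0f - wx) + src[y0 * sw + x1] * wx;
            float bot = src[y1 * sw + x0] * (1.0f - wx) + src[y1 * sw + x1] * wx;
            float v = top * (1.0f - wy) + bot * wy;
            dst[y * dw + x] = (uint8_t)(v + 0.5f); /* round to nearest */
        }
    }
}
```

Built into a shared library, one call processes the whole image, so whatever the per-call overhead is, it is paid once per image instead of once per pixel.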

If I were to attack this problem, I would probably try a round with AVX2 (for example, based on Bilinear-Interpolation-SIMD /_bilinear.c, which you would need to adapt to your RGB case, of course). But again, this only makes sense if you need it for the whole image (which, ideally, should be properly aligned in memory, as VDM does), and I don't know your high-level requirements, that is, what final goal you need to achieve with 2D interpolation...

04-18-2024 12:03 AM - edited 04-18-2024 03:03 AM

Looks loosely based on this old code. I doubt you would need that much orange. I'll try a few things.

04-18-2024 03:31 AM

@Andrey_Dmitriev wrote:

From a pure performance point of view, you can probably get a little bit faster if you wrap the whole code into a DLL. [...]

Why would wrapping code in a DLL make it faster?

Making a DLL from your LabVIEW code will surely not make any difference.

Making a DLL from Python code will make things a lot slower.

If you mean rewriting it in C and making a DLL out of that, maybe it will be a tiny bit faster, but not by much, unless you can get the C DLL to use fancy SSE.

LabVIEW's compiler does create pretty fast code nowadays.

As for copying the data, there doesn't need to be a copy. You can pass a pointer to the DLL... That's what IMAQ does, and what I would do if I called an OpenCV function.

04-18-2024 03:37 AM - edited 04-18-2024 03:40 AM

EDIT: Not sure if you want to get 1 interpolated value from a pixel map, or interpolate the entire image. If it's the entire image, read on.

For larger images, you can use a 2D FFT to resize (interpolate or decimate).

The trick there is that the amplitude needs to be adjusted by the resize factor.

I think this might be faster, but only beyond some image size. The interpolation will be different, definitely not linear. Maybe better, maybe worse.

Another benefit is that you can resize by any factor. After all, interpolating between pixels doesn't result in 2X the width/height; it actually resizes each dimension to (x-1)*2+1...

I'd have to dig it up if you're interested.
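The (x-1)*2+1 size comes from inserting one interpolated sample between each pair of neighbours, because the last pixel has no right-hand neighbour to interpolate towards. Here is a minimal C sketch for one row, using hypothetical helper names. A 4-pixel row becomes 7 pixels.

```c
#include <stddef.h>

/* Length after inserting one midpoint between every pair of neighbours. */
static size_t midpoint_upsampled_len(size_t n)
{
    return n == 0 ? 0 : (n - 1) * 2 + 1;
}

/* Writes the upsampled row into out, which must hold
 * midpoint_upsampled_len(n) elements. */
static void midpoint_upsample(const float *in, size_t n, float *out)
{
    for (size_t i = 0; i + 1 < n; ++i) {
        out[2 * i]     = in[i];                        /* original sample */
        out[2 * i + 1] = 0.5f * (in[i] + in[i + 1]);   /* linear midpoint */
    }
    if (n > 0)
        out[2 * (n - 1)] = in[n - 1];                  /* last sample     */
}
```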

04-18-2024 04:25 AM - edited 04-18-2024 05:11 AM

wiebe@CARYA wrote:

Why would wrapping code in a DLL make it faster?

Yes, this is exactly what I mean: rewriting the "bottleneck" code in pure C and compiling it into a DLL with the Intel (or Visual Studio) compiler. Of course, I did not mention Python, LabVIEW, C#, etc. A DLL is not a silver bullet, but it can sometimes help; it depends on the particular application and use case.

LabVIEW nowadays is much better than it was 20 years ago, no doubt, but it is still far (well, sometimes not so far) behind highly optimizing compilers like Intel's. This is also why, under the hood of the Vision Development Module, there are mostly DLL calls rather than pure LabVIEW code. If we are working with images and need the highest possible performance, LabVIEW arrays may not be the best choice, because LabVIEW's memory manager works behind the scenes and you don't have fine control over allocation, which high-performance computing usually relies on.

In image processing, line alignment helps performance. That is why IMAQ image lines are aligned to a 64-byte boundary, which suits computation up to AVX-512, and each image is also aligned to a page boundary (4096 bytes), which reduces page faults a little. Intel IPP uses the same default line alignment. By line alignment I mean, for example, that an 8-bit image 1000 pixels wide gets lines of 1024 bytes, leaving a 24-byte gap between the end of one line and the start of the next.

The area where LabVIEW is really good is parallel computation: you can easily get a parallelized For Loop, which gives real performance advantages on multi-core CPUs (you can do the same in C, of course, but with somewhat more "coding overhead").
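Here is the line-alignment arithmetic as a minimal C sketch. The helper names are hypothetical, and it uses C11 `aligned_alloc`; MSVC does not provide that function and would need `_aligned_malloc`/`_aligned_free` instead. With 64-byte alignment, a 1000-byte line rounds up to a 1024-byte stride, and pixel (x, y) of an 8-bit image lives at `p[y * stride + x]`.

```c
#include <stdint.h>
#include <stdlib.h>

/* Rounds a line length in bytes up to the next multiple of align
 * (align must be a power of two, e.g. 64). */
static size_t aligned_stride(size_t width_px, size_t bytes_per_px, size_t align)
{
    size_t line = width_px * bytes_per_px;
    return (line + align - 1) & ~(align - 1);
}

/* Allocates stride * height bytes starting on a 4096-byte page boundary.
 * C11 aligned_alloc expects the size to be a multiple of the alignment,
 * so the total is rounded up too. Release with free(). */
static uint8_t *alloc_image(size_t stride, size_t height)
{
    const size_t page = 4096;
    size_t bytes = (stride * height + page - 1) & ~(page - 1);
    return aligned_alloc(page, bytes);
}
```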

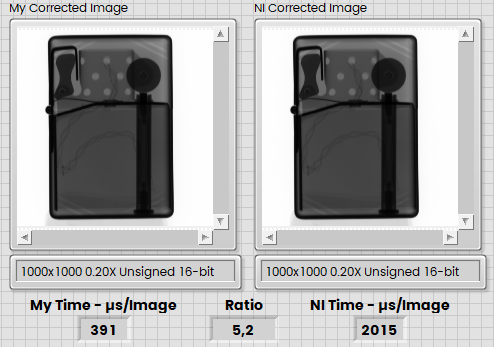

As an example from the Vision forum, I can give you this one: Flat Field Correction, where I got a three- to fivefold performance boost compared to IMAQ (and got rid of some unexpected behavior at the same time) just by porting the algorithm to AVX. I used NI CVI, whose compiler is also far behind Intel's because it is based on the ancient Clang 3.3, but with AVX intrinsics the quality of code generation matters much less; it is almost like programming everything in pure assembler:
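For context, the textbook flat-field correction is out = (raw - dark) * mean(flat - dark) / (flat - dark). Below is a minimal scalar C sketch of that formula. It is not the IMAQ implementation or the AVX port described above, and the zero-divisor policy is an assumption of this sketch. Plain loops over contiguous float arrays like these are the kind of code a compiler can auto-vectorize; the AVX route instead writes the vector operations explicitly with intrinsics.

```c
#include <stddef.h>

/* Textbook flat-field correction over n contiguous pixels:
 *   out = (raw - dark) * mean(flat - dark) / (flat - dark)
 * Pixels where flat == dark are set to 0 (a policy chosen for this sketch). */
static void flat_field_correct(const float *raw, const float *dark,
                               const float *flat, float *out, size_t n)
{
    double sum = 0.0;
    for (size_t i = 0; i < n; ++i)
        sum += (double)flat[i] - (double)dark[i];
    float gain = n ? (float)(sum / (double)n) : 0.0f;   /* mean(flat - dark) */

    for (size_t i = 0; i < n; ++i) {
        float d = flat[i] - dark[i];
        out[i] = (d != 0.0f) ? (raw[i] - dark[i]) * gain / d : 0.0f;
    }
}
```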

Back to the topic: we need to know what exactly needs to be done with the images and how bilinear 2D interpolation is actually used on the whole image (resampling, remapping, or something else). Then a suitable ready-to-use library function from OpenCV, Intel IPP, or even the Vision Development Module (VDM) may give better performance without low-level coding.

But it's an interesting topic, I always enjoy such performance tuning exercises.

04-18-2024 05:33 AM

@Andrey_Dmitriev wrote:

As an example from Vision Forum I can give you this one: Flat Field Correction, where I've got three to five times performance boost compared to IMAQ [...] only by porting algorithm to AVX [...]

Well, that's not fair if you don't show the code 😉.

Turning debugging off helps a lot, apparently even after building a dll or exe, as debugging is not turned off for VIs automatically.

I think LV will use boundary offsets for memory allocation, and obviously the lack of control over memory copies is an almost direct result of the automatic parallel execution.

I did an OpenCV 'by wire' experiment (and presentation, not the best), and having an imaging library work by wire really worked for me. Of course, I probably dislike 'by reference' more than average.

04-18-2024 06:32 AM - edited 04-18-2024 06:34 AM

wiebe@CARYA wrote:

Well, that's not fair if you don't show the code 😉.

Unfortunately, you didn't read my message thoroughly; the link was provided:

Re: Strange deviations of the intensities with IMAQ Flat Field Correction VI.

It is also attached to this message (sorry for the off-topic), this time downgraded to LV2018 (64-bit only), with the full C code inside.