Why is indexing conditional tunnel 3x faster than shift registers

01-15-2017 03:11 PM

Why is indexing conditional tunnel 3x faster than shift registers?

It must have something to do with some kind of optimization code managing the array allocation, but why can't it do it in both cases?

Run attached example.

01-15-2017 03:20 PM

Things get really hard for the compiler when you go between diagram structures. So it is likely that NI has not found a good way to detect it and make the optimizations.

There are only two ways to tell somebody thanks: Kudos and Marked Solutions

Unofficial Forum Rules and Guidelines

"Not that we are sufficient in ourselves to claim anything as coming from us, but our sufficiency is from God" - 2 Corinthians 3:5

01-15-2017 06:14 PM

@jacemdom wrote:

Why is indexing conditional tunnel 3x faster than shift registers?

It must have something to do with some kind of optimization code managing the array allocation, but why can't it do it in both cases?

Run attached example.

What is really going to smoke your noodle is when you replace the case structure with a Select and see a 10x drop in performance.

"Should be" isn't "Is" -Jay

01-16-2017 01:39 PM - edited 01-16-2017 01:41 PM

I did more digging into the matter, and here is my conclusion:

Stay away from shift registers at all costs even in initialize/replace scenarios.

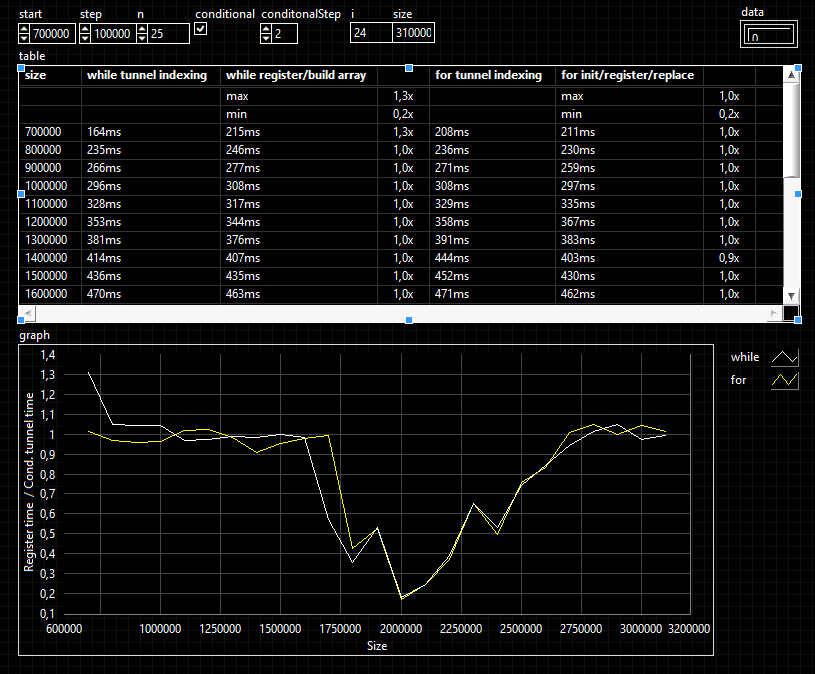

I made some interesting observations when running with non-conditional indexing: the While loop's predictive array-management algorithm looks like it is all over the place compared to the For loop's. Run the example and you will see it on the graph. It looks nearly impossible to correlate execution time with sample size. Instinct says that the higher the sample size, the higher the gain (or maybe the inverse), but nothing of the sort seems to be happening. Run the example with n = 100.

As for the reason behind the indexing tunnels being always faster than shift registers, I think I may have found a clue in the help file http://zone.ni.com/reference/en-XX/help/371361N-01/lvhowto/replace_sr_with_tunnel/

"Tunnels are the terminals that feed data into and out of structures. Replace shift registers with tunnels when you no longer need to transfer values from one loop iteration to the next."

It probably has something to do with making the data available for access inside the loop for shift registers, which is not necessary (or possible) for indexing tunnels.

I really was under the impression that shift registers would be faster in initialize/replace scenarios! But it kind of makes sense when you think about making the data available in the loop vs. not; there is probably some data copying/buffering/whatever going on. I did not evaluate the data usage, so I have no clue on this and am not going to do so, but if anyone wants to, please share your results.

01-16-2017 02:07 PM

@jacemdom wrote:

I really was under the impression that shift registers would be faster in initialize/replace scenarios!

I don't see any benchmark for the Initialize and Replace. If you actually initialize your array and replace inside of a loop, it should be the same underlying code as the FOR loop's indexing tunnel.

jacemdom wrote:

I made some interesting observations when running with non-conditional indexing: the While loop's predictive array-management algorithm looks like it is all over the place compared to the For loop's. Run the example and you will see it on the graph. It looks nearly impossible to correlate execution time with sample size. Instinct says that the higher the sample size, the higher the gain (or maybe the inverse), but nothing of the sort seems to be happening. Run the example with n = 100.

Of course. The reason is that the FOR loop knows how many times it will run, so it can preallocate the memory. With a While loop, the compiler does not have that convenience. So it allocates a small amount of memory and adds more when it runs out (it is halfway smart about how much it adds, in that it adds memory in chunks, but each addition is still a memory allocation). So the lesson here is to use FOR loops whenever possible.
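As a rough textual illustration of that difference (Python rather than LabVIEW; the chunk size of 1024 is a made-up number for the sketch, not what LabVIEW actually uses), here is a count of buffer allocations for a known versus an unknown iteration count:

```python
def allocations_known_count(n_iterations: int) -> int:
    """FOR-loop case: the iteration count is known, so one allocation suffices."""
    return 1 if n_iterations > 0 else 0


def allocations_chunked(n_iterations: int, chunk: int = 1024) -> int:
    """While-loop case: grow by a fixed chunk whenever the buffer is full.

    `chunk` is a hypothetical value chosen for illustration only.
    """
    capacity = 0
    allocations = 0
    for i in range(n_iterations):
        if i >= capacity:      # buffer is full: request another chunk
            capacity += chunk
            allocations += 1
    return allocations


print(allocations_known_count(100_000))  # single up-front allocation
print(allocations_chunked(100_000))      # one allocation per chunk filled
```

The point is only that the second count grows with the number of iterations while the first does not; the real allocator behaviour inside LabVIEW is not documented in this thread.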

01-16-2017 02:17 PM - edited 01-16-2017 02:21 PM

Your VIs are in 2016, which I do not have now, but I can help a bit.

The issue with the shift registers is most likely repeatedly reallocating memory. The way to avoid that issue is to create an array prior to the loop and use that as your starting point. The array should be sized to the max you would expect, generally the size of the array that is being indexed at the input.

With a pre-initialized array you only take the performance hit once, when it starts.

Inside the loop replace elements as you need.

After the loop completes, take what comes out of the SR and pass it to a "resize" array and pass it the actual size of the array (usually index plus 1).

Now what I described above should make the SR faster BUT not as fast as a conditional tunnel. NI optimized that method so it is a bit faster.
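Since the diagram cannot be pasted as text, here is a minimal Python sketch of the same three steps (preallocate to the worst case, replace elements inside the loop, trim afterwards). The function and parameter names are invented for the illustration, and a Python list slice makes a copy, so this mirrors the logic only, not LabVIEW's in-place behaviour:

```python
def filter_preallocated(values, keep):
    """Keep elements for which keep(x) is true, using a preallocated buffer."""
    buffer = [None] * len(values)  # allocate once, sized to the worst case
    count = 0                      # number of slots actually filled
    for x in values:
        if keep(x):
            buffer[count] = x      # replace an element; the buffer never grows
            count += 1
    return buffer[:count]          # trim to the actual size after the loop


print(filter_preallocated([3, 8, 1, 9, 4], lambda x: x > 3))  # [8, 9, 4]
```

Here `count` plays the role of "index plus 1": it is the number of elements that were actually written.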

And looking back...

The performance hit was involved in repeatedly reallocating memory.

I will see if I can find a good tag for you to chase down and edit this message if I find one.

Edit:

This is my tag for "LabVIEW_Performance". In the related tags you will find other performance-related tags such as...

LabVIEW_Array_Performance

Inplaceness-algorithm

LabVIEW_Memory and other related tags. Look at the tag cloud (Related Tags) to explore the various threads I have tagged related to performance.

Ben

01-16-2017 02:21 PM

@Ben wrote:

After the loop completes, take what comes out of the SR and pass it to a "resize" array and pass it the actual size of the array (usually index plus 1).

Array Subset is the other common way to reduce the array.

01-16-2017 02:23 PM - edited 01-16-2017 02:26 PM

@crossrulz wrote:

@Ben wrote:

After the loop completes, take what comes out of the SR and pass it to a "resize" array and pass it the actual size of the array (usually index plus 1).

Array Subset is the other common way to reduce the array.

I seem to recall Christian indicating the "reshape" can operate in-place. I figure I cannot go TOO wrong emulating Christian.

Edit:

The original Q asked "why?"

The reason is "repeatedly reallocating memory" (calls to the OS) AND because NI optimized the operation. In the first version of the conditional tunnels it was not optimized.

Ben

01-16-2017 02:37 PM

Just to be clearer, I am quite aware of the basic While/For loop advantages and disadvantages, as well as the many different optimal ways of managing arrays.

I was mainly concerned about why LabVIEW can't optimize an initialized array/replace all elements to be as fast as an indexing tunnel. If you look at my example code, the For loop part is comparing exactly this: indexing tunnel vs. initialized array/replace all elements. I am also a bit curious about how the While loop array resizing algorithm actually works.

Thanks to all who contributed, this is and was not really an issue for me, more of an ongoing learning LabVIEW experience that started 22 years ago 🙂

For those curious, I have attached a LV12 version of the code used to explore the issue. Don't forget that this is not production grade code 🙂

01-17-2017 04:20 AM - edited 01-17-2017 04:39 AM

jacemdom - you got parts of the shift-register based solution wrong (and had only put it into the for-loop version). I would also turn off debugging when doing such tests. Attached is a revised version (backsaved to LV2012).

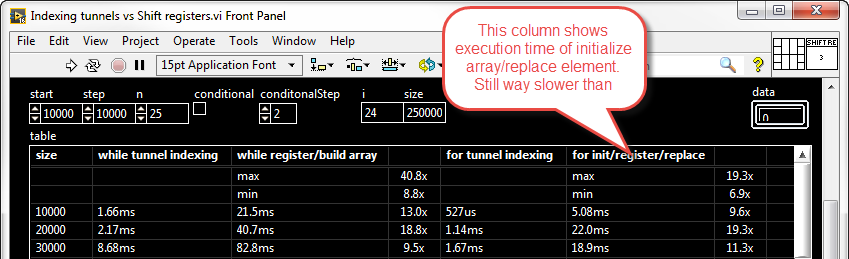

Preallocating the array at its maximum size, then resizing it to the resulting number of elements, makes the shift-register based solution more comparable to the auto-indexing function. With large arrays and the conditional factor low, it is even faster (but uses more memory than needed, which can also partially explain why the conditional has an edge, but not fully...to really understand why the auto-indexing function mostly wins requires more under the hood knowledge than I have...). Here is one example test:

As you can see, I have turned on conditional (that's what we are interested in here anyway) and have ramped up the array size. This is also with the corrected code, of course.

The conditional auto-indexing has an algorithm that resizes the array "intelligently" (that is, it got such in its second revision; at first it too was a "stupid" build array solution), typically with increasing step sizes. I'm not sure of the exact implementation, but one approach is to double the size every time it needs a resize, so when the array hits a limit at 4 elements it will go to 8, at 8 it will jump to 16, etc., perhaps with a max (if knowable) as a cap on the resizing. This way even very large arrays will not be resized that many times, and if the resulting array turns out to not be that large the algorithm will not have wasted much memory (at worst by a factor of 2). We could of course implement a similar approach with shift registers (to save some memory when the result is less than half of the max), but not as efficiently as the native LabVIEW code.

AristosQueue for example can probably reveal more about the way this is actually implemented in the conditional auto-indexer(?). I remember I discussed the lack of speed with him when the first version of it came out (too late to fix it until the next major version though back then).
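To put numbers on the doubling idea, here is a small Python sketch that counts how many resizes a doubling buffer needs. It is only an illustration of the strategy described above, not LabVIEW's actual implementation; the starting capacity of 4 is taken from the 4, 8, 16 example and the function name is made up:

```python
def doubling_growth(n_elements: int, initial_capacity: int = 4):
    """Return (resize_count, final_capacity) when capacity doubles on overflow."""
    capacity = initial_capacity
    resizes = 0
    for size in range(1, n_elements + 1):
        if size > capacity:    # buffer is full: double it
            capacity *= 2
            resizes += 1
    return resizes, capacity


for n in (5, 1_000, 1_000_000):
    resizes, capacity = doubling_growth(n)
    print(n, resizes, capacity)
```

For a million elements this takes 18 resizes and ends with a capacity of 1,048,576, so the number of resizes grows only logarithmically and the final buffer is less than twice the size actually needed.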