
Calculate the time difference between two time stamps?

09-05-2015 04:33 PM


Thank you Steve,

I know very well how I could build myself a better timestamp library. But, to put it in harsh words, I just don't understand why I am forced to do such an easy and boring task myself when my company pays NI a yearly license fee for the software. I am not the one who devised, enforced and decided to support (?) a 128-bit timestamp format in my own language, although I appreciate its generality. By generality I mean its fitness to accommodate very large time spans (e.g. geological eras, millions of years and more) and very short ones (e.g. decay times of subatomic particles, millionths of a second and less), which I think is why it has been made so wide.

The 128-bit timestamp is a waste of space for 99% of applications, but at least it should have the advantage of being useful in any application. But... for the poor guys who need to work in the fields of nuclear fusion, subatomic particle physics, GPS systems, telecommunication systems, PTP synchronization, to cite a few, the fractional part of the timestamp (nanoseconds and below) is much more relevant than the integer part. Working with that part is, in my opinion, not well supported.

Let's take your thoughts further and do the math a bit more precisely. The timestamp "2015/09/05 21:10:40.315" corresponds to 3524325040 + 5813522596330733568*2^{-64} [s]. A double stores 52 bits in the mantissa, which, together with the implicit leading bit, gives 53 significant binary digits. As log10(2) is about 0.30103, a double holds between 15 and 16 significant decimal digits. The seconds value alone (3524325040) takes about 10 of these, so only about 6 remain for the fractional part. That is probably OK for microsecond resolution, but not for nanosecond resolution (we are roughly 3 decimal digits short). So much for storing the absolute timestamp in a double.
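The digit counting above can be checked by asking for the spacing between adjacent doubles near the timestamp's value. This is a minimal sketch, assuming IEEE 754 binary64 doubles (which is what Python's `float` is on common platforms); `math.ulp` requires Python 3.9 or later.

```python
import math

seconds = 3524325040.315            # the post's timestamp, stored as a double

spacing = math.ulp(seconds)         # gap to the next representable double
digits_total = 53 * math.log10(2)   # significant decimal digits in a double
digits_int = math.log10(seconds)    # digits consumed by the whole seconds
digits_frac = digits_total - digits_int

print(f"spacing near this value: {spacing:.3e} s")   # 4.768e-07 s
print(f"fractional digits left:  {digits_frac:.1f}")
print(seconds + 1e-9 == seconds)    # True: a 1 ns step is rounded away
```

The spacing is 2^-21 s (about 0.48 µs), so an absolute timestamp held in a double cannot resolve individual nanoseconds at all.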

When I compare two timestamps (which is why measurements are taken), I need their difference. One can expect the difference to be several orders of magnitude smaller than the absolute timestamps (and usually, the finer the resolution, the smaller this difference), so a double usually has enough precision to encode the difference with the right accuracy. However, note that if one first subtracts using 128-bit integer arithmetic (pardon, fixed-point fractional...) and then converts the result to double, one preserves the intrinsic precision of the time format. If instead one first converts the two timestamps and then subtracts them as doubles, one will get surprises, because all fractional digits beyond roughly the first 6 have been lost in the conversion.
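The two orders of operation can be compared directly by modeling the timestamp as a single 128-bit fixed-point integer in units of 2^-64 s. This is a sketch under that assumption; `pack`, `diff_subtract_first` and `diff_convert_first` are hypothetical helpers, not LabVIEW functions. Python integers are unbounded and `int / int` is correctly rounded, so each path rounds exactly where the comments say.

```python
TWO64 = 2**64

def pack(seconds: int, frac: int) -> int:
    """Hypothetical helper: whole seconds plus a 64-bit binary fraction,
    packed into one fixed-point integer in units of 2**-64 s."""
    assert 0 <= frac < TWO64
    return (seconds << 64) | frac

def diff_subtract_first(a: int, b: int) -> float:
    # Exact integer subtraction, then a single rounding to double.
    return (a - b) / TWO64

def diff_convert_first(a: int, b: int) -> float:
    # Round each absolute timestamp to double first, then subtract.
    return a / TWO64 - b / TWO64

t1 = pack(3524325040, 5813522596330733568)   # timestamp from the post
t2 = t1 + round(1e-9 * TWO64)                # about 1 ns later

print(diff_subtract_first(t2, t1))   # about 1e-09
print(diff_convert_first(t2, t1))    # 0.0: the nanosecond was rounded away
```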

So what?

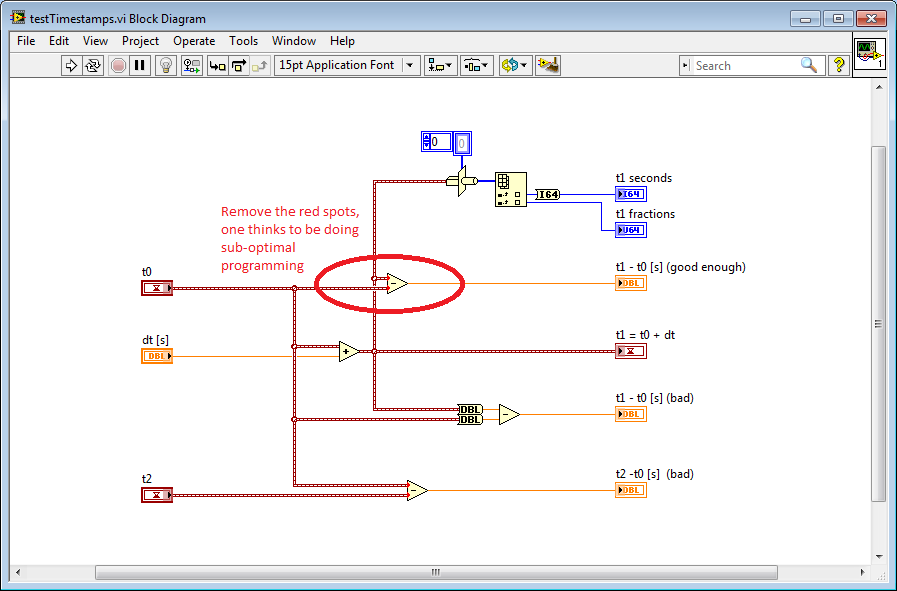

Well, what is LV doing when I subtract two timestamps and get a double? I don't know. Should I care? Yes. Should this be such an unconventional operation that it is marked with red dots at the inputs? No. Is it difficult to implement correctly? I would say it's a job for a smart 14-year-old student. Why was this not implemented when the 128-bit timestamp was first released? I do not understand. Why is it still not implemented in LV 2012? I don't know. I hope it actually is implemented correctly but not communicated to the user in the right way (avoiding the red dots on the subtraction would help). Is this bad advertisement for LabVIEW? Yes, at least for me. Are there applications where this may be relevant? All those that require timestamps with ns accuracy. The set is not empty. Is LV used in such applications? I don't know. I guess not yet, otherwise this would have been sorted out already.

I write this because I like the idea of the universal 128-bit timestamp and I would like to make it better.

Sincerely

09-05-2015 05:12 PM - edited 09-05-2015 05:14 PM


The red dot indicates a coercion. This doesn't mean the operation is unconventional; it simply indicates a mismatch in the data types. Most likely this means that the subtract function is converting the timestamp to a DBL and then doing the subtraction, in exactly the fashion you don't want it to. LV 2015 still does this.

I can understand why you don't like that. This has never been an issue for me, but I understand why it would be an issue for someone working with absolute times at the ns level. Personally, I only went down to the µs level and used relative time (an integer number of µs), but that doesn't mean this shouldn't be supported.

As Hooovahh pointed out, the correct place to put such a request would be the idea exchange, where people can comment and vote on it. You can phrase it as a request to modify the subtract function to support timestamps properly (by subtracting before converting) and explain the logic. You should probably also verify that this is really how it behaves. As far as I can tell from your description, NI has done everything correctly except adding explicit timestamp support to the implementation of the subtract function (and presumably other math operations).

From a practical standpoint, I would still go with the home made subtraction if that's what you need. As programmers, it sometimes falls to us to do some work to compensate for problems or things that don't work like we want. It's possible that you can also do this by changing the output type of the subtract primitive to extended or fixed-point, but since the coercion seems to happen at the input, it seems unlikely that this would help. You could possibly convert the inputs to extended or fixed point, but I'm not sure LV has the data types you need and it would still require adding that to the code.

___________________

Try to take over the world!

09-05-2015 05:26 PM


Hi tst,

thank you for your comment. I see that this topic is still of interest, much more than I would have expected. I do not agree with you on many points but I can understand the time difference type issue.

> Timestamps are by definition a data type encoding absolute time

OK, but why, in your opinion, did they choose 64 bits for the fractional part if I can't use it? This could be solved by allowing the timestamp to represent absolute or relative values, exactly as the double type can. When representing a relative value, the timestamp would represent seconds (using the fixed-point fractional form...).

> When you calculate the difference between two absolute times, you get relative time, which is by definition not a timestamp (and some of the absurdities of this have been pointed out)

Time differences are what matters. Absolute time is just a time difference with respect to a time known as the epoch. The epoch of LV time is 1904 Jan 1st 00:00:00 UTC. I don't see more absurdity in subtracting times than in subtracting lengths.
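To make "absolute time is a difference from the epoch" concrete, here is a minimal sketch that adds a seconds count to the 1904 epoch stated above. `lv_seconds_to_utc` is a hypothetical helper, and it ignores leap seconds, as Python's `datetime` does. Applied to the seconds value quoted earlier in the thread, it gives 19:10:40 UTC, two hours before the 21:10:40 in the post, which presumably means that value was displayed in a UTC+2 local time zone.

```python
from datetime import datetime, timedelta, timezone

# LabVIEW's epoch, as stated in the post.
LV_EPOCH = datetime(1904, 1, 1, tzinfo=timezone.utc)

def lv_seconds_to_utc(seconds: float) -> datetime:
    """Hypothetical helper: interpret a seconds count as an offset from the 1904 epoch."""
    return LV_EPOCH + timedelta(seconds=seconds)

print(lv_seconds_to_utc(3524325040))   # 2015-09-05 19:10:40+00:00
```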

> not as integer, as you say, but as a fixed point fractional value, which can also be looked at as two integers

OK, sorry for the loose wording. Can you explain the practical difference between a 128-bit fixed-point fractional value and a 128-bit integer?

> As for calculating a difference in ns, I haven't personally had the need, and I expect that the majority of users don't either.

This doesn't mean that there are no users that need it.

> I haven't done the calculation or testing, but I would expect the DBL data type to easily hold ns values as long as your seconds aren't too large.

See the calculations in my previous post. The seconds field is actually too large for some applications. Again: why did NI choose a 128-bit timestamp if I do not have the infrastructure to exploit it?

> In any case, it should be easy enough for you to write a very simple VI which will take the timestamp, type cast it into a fixed point value or a cluster of two integers, do the subtraction on those and output the result in any format you wish, something I expect your namesake would have done in his sleep

Of course. Why pay a license fee if one does not get such a fundamental thing out of the box?

I think a compromise for the time difference type would be to make it isomorphic to a double (this would still waste 78 of the 128 bits of the timestamp in the worst case, but I admit it would probably not be easy to find an application where this actually matters). Taking the difference of two timestamps to get a double should be allowed without a warning and should be done by subtracting as 128-bit integers first and then converting to double.
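The proposed "subtract first, convert once" rule can be sketched with the timestamp split into two integers, as suggested in the quoted reply. This assumes a signed seconds count plus an unsigned 64-bit fraction in units of 2^-64 s; `subtract_timestamps` is a hypothetical helper, not a LabVIEW API. The explicit borrow mirrors what you would do with fixed-width integer fields; because Python integers are unbounded, the recombined value equals a plain 128-bit integer subtraction.

```python
TWO64 = 1 << 64

def subtract_timestamps(a: tuple[int, int], b: tuple[int, int]) -> float:
    """Hypothetical helper. a and b are (seconds, fraction) pairs: a signed
    seconds count and an unsigned 64-bit fraction in units of 2**-64 s.
    Subtract exactly first, then convert to a double once."""
    sec = a[0] - b[0]
    frac = a[1] - b[1]
    if frac < 0:            # borrow one second, as with fixed-width integer fields
        frac += TWO64
        sec -= 1
    # Recombine into one exact integer and divide once, so only one rounding occurs.
    return (sec * TWO64 + frac) / TWO64

later = (3524325041, 0)
earlier = (3524325040, TWO64 - 18446744074)   # about 1 ns before `later`
print(subtract_timestamps(later, earlier))    # about 1e-09
print(subtract_timestamps(earlier, later))    # about -1e-09
```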

Sincerely,

09-05-2015 07:35 PM


@alanturing wrote:

OK, but why, in your opinion, did they choose 64 bits for the fractional part if I can't use it?

The question isn't why they chose 64 bits (that's a fairly natural choice considering the possible values and that you would generally want to align the data type to a nice size), but rather why they didn't get the math primitives to work (again, assuming they didn't). I have no idea what the answer is, but I could certainly think of a few: they didn't realize this would be a problem; they decided doing all of the relevant math code for all of the possible combinations would take too much work for the gain; they wanted to but didn't get around to it, and it was left on the shelf due to lack of general interest. Take your pick.

Time differences are what matters. Absolute time is just a time difference with respect to a time known as the epoch. The epoch of LV time is 1904 Jan 1st 00:00:00 UTC. I don't see more absurdity in subtracting times than in subtracting lengths.

The absurdities are in things like 2015-2014=1904, as pointed out above. Unless there was a new relative time data type, as you suggest, that doesn't make sense, and I don't think there's anything wrong with DBL seconds for representing relative time. It seems to fit pretty much every application. If there are applications where it doesn't, they can be done with special code.
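Python's `datetime` module keeps the two concepts as separate types (`datetime` for an absolute time, `timedelta` for a difference), which makes the 2015-2014=1904 absurdity easy to reproduce: force the difference back into an absolute time relative to the 1904 epoch and you land in 1904. A small illustration, using the 1904 epoch quoted earlier in the thread:

```python
from datetime import datetime, timezone

LV_EPOCH = datetime(1904, 1, 1, tzinfo=timezone.utc)

a = datetime(2015, 1, 1, tzinfo=timezone.utc)
b = datetime(2014, 1, 1, tzinfo=timezone.utc)

diff = a - b                      # a relative time: timedelta(days=365)
misread = LV_EPOCH + diff         # the same value forced back into a timestamp

print(diff.days)                  # 365
print(misread)                    # 1904-12-31 00:00:00+00:00
```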

Can you explain the practical difference between a 128-bit fixed point fractional value and a 128-bit integer?

One is an integer and the other isn't? There may not be practical differences, but I really haven't gone through the trouble of analyzing the bits, since it's not worth it even if you do need the functionality.
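For what it's worth, the practical difference is small: a fixed-point value with 64 fractional bits is stored as a plain integer with an implied scale of 2^-64 s, so addition and subtraction are bit-for-bit identical to integer arithmetic, and the scale only matters for multiplication, division and conversion. A minimal sketch, assuming a Q64.64 layout; the values are made up for illustration.

```python
SCALE = 1 << 64                  # one second, in units of 2**-64 s (Q64.64)

a = 3 * SCALE + SCALE // 2       # 3.5 s
b = 1 * SCALE + SCALE // 4       # 1.25 s

# Addition and subtraction: exactly the same operations as on plain integers.
diff = a - b                     # 2.25 s
total = a + b                    # 4.75 s

# Multiplication: the raw product carries the scale twice, so shift it back out.
product = (a * b) >> 64          # 4.375 (s²)

print(diff / SCALE, total / SCALE, product / SCALE)   # 2.25 4.75 4.375
```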

> As for calculating a difference in ns, I haven't personally had the need, and I expect that the majority of users don't either.

This doesn't mean that there are no users that need it.

Agreed, hence the suggestion to post to the idea exchange. That's still my suggestion, but I'm also practical, so I prefer having more immediate solutions than waiting for new versions if possible.

Of course. Why pay a license fee if one does not get such a fundamental thing out of the box?

Because not everyone considers it as fundamental as you do? Sure, logically the suggestion of supporting the timestamp natively makes sense, but I never had the need for it from the perspective you're suggesting and I expect the vast vast majority of users don't either. That doesn't mean it's a bad idea, but it's certainly not critical, and there is an easy alternative.


09-05-2015 08:17 PM


I may have missed this, so I apologize if I am repeating an already covered issue, but I expect a large part of the reason a DBL is used is compatibility when upgrading old code. Before NI introduced the timestamp, time was always treated as a DBL. If we subtracted two timestamps (DBLs), we got a DBL.

If NI had changed the representation, there would have been a large risk of upgrade failures for old code. Perhaps NI should have made a different version of the Get Time in Seconds primitive (similar to how they have handled some other primitive changes in the past), so that old code used the old primitive and new code used the timestamp, but they chose not to.

As tst has said, I doubt your need is shared among a large LabVIEW population, so it will not be a high priority to NI. The amount of time already spent on this topic could have been spent writing code that does exactly what you want/wish for.

09-08-2015 05:13 AM


Sorry guys,

how about a simple test?

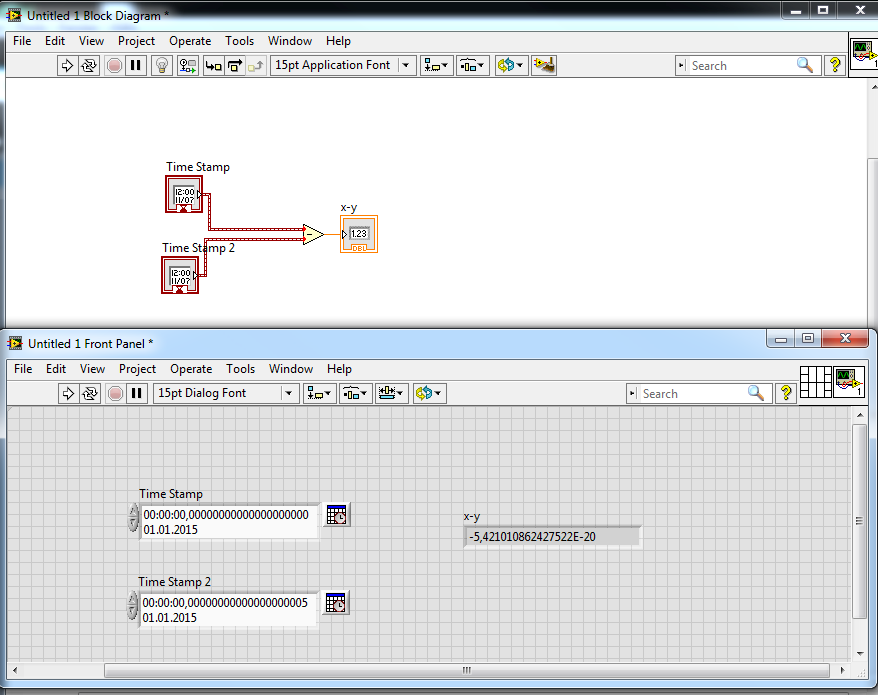

It takes longer to write this answer than to put two timestamp controls, a Subtract, and a DBL indicator (Create Indicator) on a VI 😉

Change the display properties of the timestamps to show 20 digits of fractional seconds (the resolution is about 5E-20 s) and 16(?) digits for the double.
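The "about 5E-20" figure is one LSB of the 64-bit fraction, 2^-64 s. A short sketch, modeling the timestamp as a 128-bit integer in units of 2^-64 s (an assumption of this illustration), shows that even a one-LSB difference survives when the subtraction is done before the conversion to double:

```python
TWO64 = 1 << 64

resolution = 1 / TWO64          # one LSB of the 64-bit fraction, in seconds
print(resolution)               # 5.421010862427522e-20

# Two raw 128-bit values one LSB apart (seconds value taken from earlier in the thread).
t1 = 3524325040 * TWO64
t2 = t1 + 1
print((t2 - t1) / TWO64)        # subtract first: 5.421010862427522e-20
```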

LabVIEW seems to be smart enough 😄

For a time difference, a DBL is fine for now; my colleagues work hard to get timing uncertainty down to 10E-18 😄 😄

Henrik

LV since v3.1

“ground” is a convenient fantasy

'˙˙˙˙uıɐƃɐ lɐıp puɐ °06 ǝuoɥd ɹnoʎ uɹnʇ ǝsɐǝld 'ʎɹɐuıƃɐɯı sı pǝlɐıp ǝʌɐɥ noʎ ɹǝqɯnu ǝɥʇ'

09-08-2015 08:49 AM


Hi all,

I posted the topic on the idea exchange as suggested.

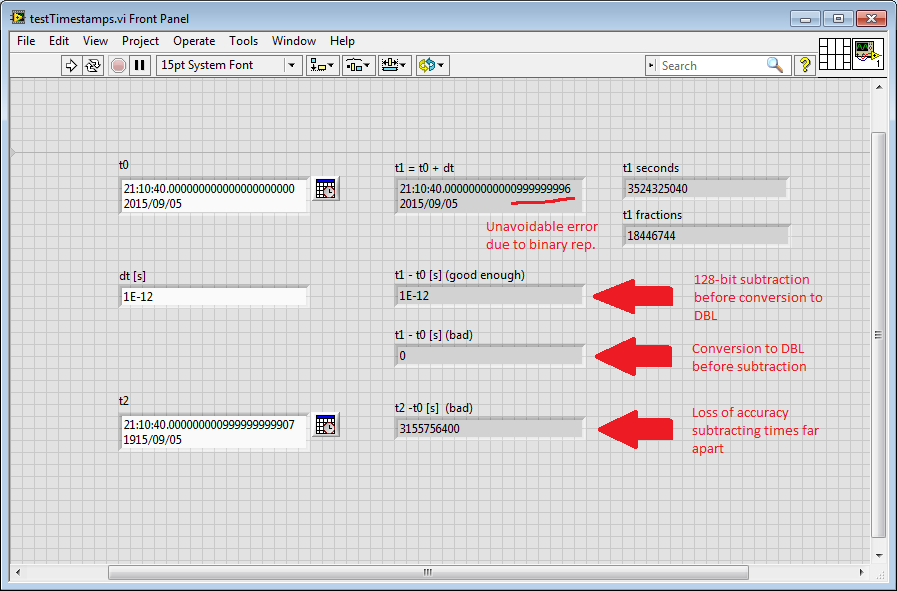

To close the topic: I did my own tests and discovered that the subtraction of two timestamps is currently done by subtracting the 128-bit numbers first.

Sincerely,

09-08-2015 10:06 AM - edited 09-08-2015 10:07 AM


My thought on the matter is that it's very rare to need ns accuracy whilst also subtracting large differences in absolute time: if you're measuring something on the order of days/months/years (where the integer part of the difference becomes substantial), are a few ns going to make a difference? Whilst I can't speak for all application areas, you generally stay within a certain time magnitude (e.g. s, ms, µs, ns), and in that case you'd probably be better off using relative time (perhaps maintaining a start offset) with a floating-point representation (and it all depends on your clock source anyway!).
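A minimal sketch of the relative-time approach: keep one start reference and express each later time as an integer offset from it, converting only the small offset to floating point. It uses Python's `time.monotonic_ns()` as a stand-in clock; its actual resolution depends on the platform, which echoes the point about the clock source.

```python
import time

# One reference point for the whole measurement; everything after it is an offset.
start_ns = time.monotonic_ns()

total = sum(range(100_000))        # stand-in for the activity being timed

# Integer nanosecond offset from the start: exact, no floating-point rounding.
elapsed_ns = time.monotonic_ns() - start_ns

# Converting only the small offset to a double keeps ns-level detail.
elapsed_s = elapsed_ns / 1e9
print(f"elapsed: {elapsed_ns} ns ({elapsed_s:.9f} s)")
```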

Interesting topic though - good that a simple test shows that it does indeed work for very small time differences (as long as you know not to convert to a DBL first).

I do agree that the coercion dots on the subtraction are perhaps misleading: if you were to remove the coercion by adding a 'To DBL' conversion, you would potentially lose accuracy!

09-08-2015 10:59 AM


So the bottom line is that everything is actually working correctly, except for the display of the coercion dots?

*sigh*


09-08-2015 12:37 PM - edited 09-08-2015 12:38 PM


I did a similar test, and found out some interesting things.

--- In a TimeStamp control, you cannot enter a YEAR earlier than 1600.

--- In a TimeStamp control, you cannot enter a YEAR later than 3000.

Which brings up the question about the move to the I128 format.

2^63 seconds = 9.223372e18 s ≈ 2562047788015215 hours ≈ 292271023045.3 years.

So why the limit to 1600..3000, when the format is capable of ~300 billion years?
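The range arithmetic can be checked in a few lines, assuming (as the use of 2^63 implies) that the seconds part is a signed 64-bit integer, and using Julian years of 365.25 days:

```python
seconds = 2**63                          # magnitude limit of a signed 64-bit seconds field
hours = seconds // 3600
years = seconds / (365.25 * 24 * 3600)   # Julian years of 365.25 days

print(hours)              # 2562047788015215
print(f"{years:.1f}")     # 292271023045.3
```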

I realize that the past is complicated by Julian/Gregorian issues and odd leap seconds and misc. stuff. But still...

Culverson Software - Elegant software that is a pleasure to use.

Culverson.com

Blog for (mostly LabVIEW) programmers: Tips And Tricks