View Ideas...

Labels

-

Analysis & Computation

305 -

Development & API

2 -

Development Tools

1 -

Execution & Performance

1,027 -

Feed management

1 -

HW Connectivity

115 -

Installation & Upgrade

267 -

Networking Communications

183 -

Package creation

1 -

Package distribution

1 -

Third party integration & APIs

290 -

UI & Usability

5,456 -

VeriStand

1

Idea Statuses

- New 3,058

- Under Consideration 4

- In Development 4

- In Beta 0

- Declined 2,640

- Duplicate 714

- Completed 336

- Already Implemented 114

- Archived 0

Turn on suggestions

Auto-suggest helps you quickly narrow down your search results by suggesting possible matches as you type.

Showing results for

Options

- Subscribe to RSS Feed

- Mark as New

- Mark as Read

- Bookmark

- Subscribe

- Printer Friendly Page

- Report to a Moderator

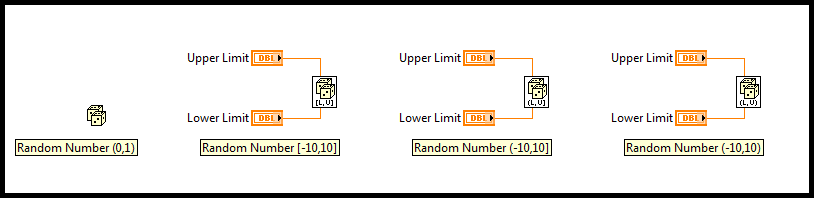

Random number function with option of including/excluding the endpoints

Submitted by moderator1983 on 02-12-2014 10:06 AM

14 Comments

Status: Declined

I have seen the existing request to improve the Random Number function. Going further, I would like it to have an option to include or exclude the endpoints, just like the In Range and Coerce function.
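LabVIEW code is graphical, so the requested behavior is easier to show as text in another language. Below is a minimal Python sketch, not NI's implementation. The helper names `random_int` and `random_float` and the Boolean flags `include_lower` and `include_upper` are hypothetical, modeled loosely on the include/exclude options the post cites for In Range and Coerce. An excluded integer endpoint is handled by moving that bound inward by one; an excluded float endpoint is handled by redrawing whenever a sample lands exactly on it.

```python
import math
import random


def random_int(lo, hi, include_lower=True, include_upper=True, rng=random):
    """Hypothetical helper: random integer between lo and hi honoring the endpoint flags."""
    # Move each bound inward by one when that endpoint is excluded.
    low = lo if include_lower else lo + 1
    high = hi if include_upper else hi - 1
    if low > high:
        raise ValueError("empty range after applying endpoint flags")
    # randint() is inclusive on both ends.
    return rng.randint(low, high)


def random_float(lo, hi, include_lower=True, include_upper=True, rng=random):
    """Hypothetical helper: random float between lo and hi; excluded endpoints never appear."""
    if not lo < hi:
        raise ValueError("lo must be less than hi")
    # With both ends excluded, there must be at least one float strictly between them.
    if not include_lower and not include_upper and math.nextafter(lo, hi) == hi:
        raise ValueError("no float lies strictly between lo and hi")
    while True:
        # uniform() computes lo + (hi - lo) * random(); hi can appear only through rounding.
        x = rng.uniform(lo, hi)
        # Redraw if the sample is exactly an excluded endpoint.
        if (x == lo and not include_lower) or (x == hi and not include_upper):
            continue
        return x


if __name__ == "__main__":
    r = random.Random(42)
    print(random_int(1, 6, include_upper=False, rng=r))            # one of 1..5
    print(random_float(0.0, 1.0, include_lower=False, rng=r))      # in (0.0, 1.0]
```

For integers the flags change which values can occur at all. For floats, landing exactly on an endpoint is extremely unlikely, so the flags mainly serve as a guarantee that an excluded endpoint is never returned.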

National Instruments will not be implementing this idea. See the idea discussion thread for more information.