myAlexa: Voice Controlled Robot Hand to Interpret Sign Language

Artificial intelligence keeps getting smarter, and how dangerous it might become has been a major concern for people over the last few years. But what if it could be used for the benefit of deaf people?

I integrated Amazon Alexa into LabVIEW for the first time in order to control a robot hand that converts speech into sign language. It can display numbers, letters and sequences of letters (words). With a robot hand that has more motors to control the elbow and wrist, this could be extended to convert full sentences into sign language, making it much easier to communicate with people experiencing hearing difficulties. To understand more, check out my video.

A Bit About the Developer

My name is Nour Elghazawy, and at the time I did this project I was doing my Industrial Placement at National Instruments. I am studying Mechatronics Engineering at the University of Manchester, and I finished this project in one week. Working on the project improved my LabVIEW skills, which made it easier to become a Certified LabVIEW Developer. It also improved my skills with other technologies such as Python and JSON. If you have any questions or feedback, feel free to reach out on LinkedIn.

Why this project?

Speech detection can be a very useful addition to your project, from a personal assistant to a robotic system or even a smart home. Implementing a whole artificial intelligence platform from scratch can take a long time, and even then the quality of the system will depend on the number of samples used to train the algorithm. I therefore built this proof of concept, which integrates Amazon Alexa's existing speech detection algorithm into LabVIEW. This allows you to control ANYTHING in LabVIEW just with your voice!

This was demonstrated with a voice-controlled robot hand that converts speech into sign language, which can be a first step towards allowing all of us to communicate in sign language with deaf people.

System Summary

Intents and slots are used on Alexa to interpret what you say. For example, to open finger number 1 there is an intent called robotfingerstate. This intent has utterances, which are the phrases you would say to Alexa, and the utterances contain slots, which are variables inside an utterance. An utterance can be "Open finger number one", which on the Amazon cloud is written as "{State} finger number {Number}", where {State} and {Number} are slots. State can be either Open or Close, and Number identifies which finger is being used: 1, 2, 3, 4 or 5. So you can say "open finger number 1", "close finger number 1", "open finger number 2" and so on. All of these utterances trigger the same intent.

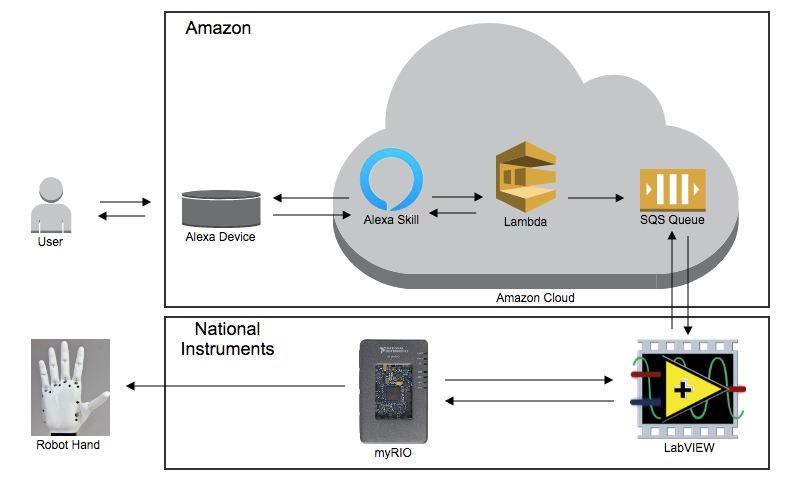
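To make the relationship between one utterance template and the many phrases it covers more concrete, here is a minimal Python sketch. It only illustrates the idea; it is not how Alexa matches speech internally, and the names `TEMPLATE`, `SLOT_VALUES` and `expand_utterances` are made up for this example.

```python
from itertools import product

# Sample utterance with two slots, as described in the text.
TEMPLATE = "{State} finger number {Number}"

# Slot values taken from the description: two states, five fingers.
SLOT_VALUES = {
    "State": ["open", "close"],
    "Number": ["1", "2", "3", "4", "5"],
}


def expand_utterances(template, slot_values):
    """Return every concrete phrase the template covers for the given slot values."""
    names = list(slot_values)
    phrases = []
    # Iterate over every combination of slot values (2 states x 5 fingers).
    for combo in product(*(slot_values[name] for name in names)):
        phrases.append(template.format(**dict(zip(names, combo))))
    return phrases


if __name__ == "__main__":
    for phrase in expand_utterances(TEMPLATE, SLOT_VALUES):
        print(phrase)
```

A single template therefore stands in for ten spoken commands, and every one of them reaches the same intent with different slot values.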

If I say anything that corresponds to an intent, my Lambda function is triggered. Lambda is like the brain on the Amazon side: it knows which intent triggered it and puts a message in the SQS queue based on the intent and slots. For example, if the user says "open finger number 1", the message will be onefingerstate,open,1.
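The attached Python file is the actual Lambda code; the sketch below is only a rough illustration of the idea, under a few assumptions. The slot names `State` and `Number` come from the utterance above, the message prefix is simply taken from the intent name in the request, and `send` is a hypothetical hook standing in for the SQS call so that the example runs without AWS.

```python
def build_message(event):
    """Turn an Alexa IntentRequest into 'intent,state,number'; return None otherwise."""
    request = event.get("request", {})
    if request.get("type") != "IntentRequest":
        return None
    intent = request["intent"]
    slots = intent.get("slots", {})
    # Slot names are assumptions based on the "{State} finger number {Number}" utterance.
    state = slots.get("State", {}).get("value") or ""
    number = slots.get("Number", {}).get("value") or ""
    return ",".join([intent["name"].lower(), state.lower(), number])


def lambda_handler(event, context, send=None):
    """Lambda entry point. `send` is a hypothetical hook that delivers the message.

    In a real deployment it could wrap boto3, for example:
        sqs = boto3.client("sqs")
        send = lambda body: sqs.send_message(QueueUrl=QUEUE_URL, MessageBody=body)
    """
    message = build_message(event)
    if message is not None and send is not None:
        send(message)
    speech = "Done" if message else "Welcome to robot hand"
    # Minimal Alexa response that keeps the session open for the next command.
    return {
        "version": "1.0",
        "response": {
            "outputSpeech": {"type": "PlainText", "text": speech},
            "shouldEndSession": False,
        },
    }


if __name__ == "__main__":
    sample_event = {
        "request": {
            "type": "IntentRequest",
            "intent": {
                "name": "onefingerstate",
                "slots": {
                    "State": {"name": "State", "value": "open"},
                    "Number": {"name": "Number", "value": "1"},
                },
            },
        }
    }
    sent = []
    lambda_handler(sample_event, None, send=sent.append)
    print(sent)
```

Passing the sender in as a parameter is just a convenience for trying the logic locally; the intent name in the sample event is chosen to match the example message above.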

The LabVIEW application uses a Queued Message Handler architecture. It reads the message from the SQS queue, decodes it and updates a Network Published Shared Variable on the real-time target of the myRIO, which controls the motors of the hand.
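The decoding itself happens in LabVIEW block diagrams, which cannot be shown as text, so the following Python sketch only mirrors the logic of the decode step. The `FingerCommand` type, the validation rules and the list of five booleans standing in for the shared variable are all assumptions made for illustration.

```python
from dataclasses import dataclass


@dataclass
class FingerCommand:
    intent: str
    state: str   # "open" or "close"
    finger: int  # 1 to 5


def decode_message(body):
    """Parse 'intent,state,number' into a FingerCommand; raise ValueError if malformed."""
    parts = [part.strip() for part in body.split(",")]
    if len(parts) != 3:
        raise ValueError(f"expected 3 comma-separated fields, got {len(parts)}")
    intent, state, number = parts
    state = state.lower()
    if state not in ("open", "close"):
        raise ValueError(f"unknown state: {state!r}")
    finger = int(number)  # raises ValueError if the field is not a number
    if not 1 <= finger <= 5:
        raise ValueError(f"finger out of range: {finger}")
    return FingerCommand(intent, state, finger)


def apply_command(command, finger_states):
    """Update a list of five booleans (True = open) that stands in for the shared variable."""
    finger_states[command.finger - 1] = command.state == "open"
    return finger_states


if __name__ == "__main__":
    states = [False] * 5
    print(apply_command(decode_message("onefingerstate,open,1"), states))
```

Rejecting malformed messages before they reach the motors keeps a bad queue entry from moving the hand unexpectedly.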

Hardware

- myRIO

- 3D Printed Robot Hand with 5 H-King HK15298 motors

- Power Supply. The one I used has a maximum of 3 A, which isn't ideal for the torque needed to drive the 5 motors simultaneously, so a power supply with a higher current rating would be better.

- Amazon Echo Dot. It is optional, however: you can send the commands from the Alexa Developer Console instead.

Software

How to use the code?

- Create an AWS account by following the steps in "Create An AWS Account" in this document.

- Get your Access Key ID and your AWS Secret Key by following the steps in the section "SETUP SECURITY AND ACCESS CREDENTIALS" in the same document.

- Create a Standard Amazon SQS queue. This queue will carry the messages from our Lambda function to LabVIEW. Follow the steps in the "CREATE QUEUE IN AWS SQS" section in the same document, and record the ARN and name of the queue.

Create Lambda Function:

- Go to AWS Management Console

- Log in with the AWS account you created earlier, and under AWS services search for Lambda.

- Once you are in the AWS Lambda Service press on Create Function.

- Choose Author From Scratch, then specify LabVIEW as your function name and Python 3.6 as your runtime.

- Under Permissions Expand "Choose or create an execution role".

- Choose "Use an existing role".

- Now under Existing role choose lambda_basic_execution.

- Press Create Function.

- Under Function code, remove any code in lambda_function.py, then copy and paste the contents of the Python Code text file in the attachment.

- In the code, specify your Region and the Access Key and Secret Key you obtained earlier.

- In line 6, change queue_url to the ARN you recorded when creating the SQS queue.

- In line 8, change QueueName to the queue name you recorded when creating the SQS queue.

- Specify lambda_function.lambda_handler as the name of the Handler and Save.

Create Alexa Skill

- First Create an Alexa Developer Account.

- In the Alexa Developer Console press on Create Skill.

- Specify a name like Robot_Hand, choose a custom skill and "Provision your own", then press Create Skill at the top right.

- Choose the Start from scratch template.

- In the JSON Editor delete everything in the editor. Copy and paste what's in the JSON code text file attached. Then Save Model.

- Choose Endpoint, select AWS Lambda ARN and record your Alexa Skill ID (you will use it in your Lambda function).

- Now Go back to your Lambda function created previously to link it with this skill.

- In the Designer tab, choose Alexa Skills Kit. This says that you want the skill to be able to trigger this function.

- In the Skill ID field, enter your Alexa Skill ID, then press Add and Save.

- At the top right of the page you should see the ARN of your Lambda function. Record it; you will need it when you fill in the Default Region in the Alexa Developer Console.

- Go back to the Endpoint tab of your Alexa Developer Console.

- Put your Lambda ARN in the Default Region field, then save the endpoint.

- Go to Invocation Name. This is how you will open your Alexa project; for instance, if it is "robot hand", you will say "Alexa, open robot hand".

- At the top, press Save Model and then Build Model.

- From the top, go to the Test window and choose "Development" instead of "Off" next to "Test is disabled for this skill".
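The attached JSON file is the model to paste into the JSON Editor. For readers who want a feel for what such a model contains, here is a hedged sketch that builds a much smaller one in Python and prints it as JSON. Only the robotfingerstate intent, its two slots and the sample utterance come from the description above; the custom slot type name `FINGER_STATE`, the choice of `AMAZON.NUMBER` for the finger number and the default invocation name are assumptions.

```python
import json


def build_interaction_model(invocation_name="robot hand"):
    """Return a minimal Alexa interaction model as a Python dictionary."""
    return {
        "interactionModel": {
            "languageModel": {
                "invocationName": invocation_name,
                "intents": [
                    {
                        "name": "robotfingerstate",
                        "slots": [
                            # FINGER_STATE is a hypothetical custom slot type.
                            {"name": "State", "type": "FINGER_STATE"},
                            {"name": "Number", "type": "AMAZON.NUMBER"},
                        ],
                        "samples": ["{State} finger number {Number}"],
                    },
                    # Built-in intents that custom skills normally include.
                    {"name": "AMAZON.CancelIntent", "samples": []},
                    {"name": "AMAZON.HelpIntent", "samples": []},
                    {"name": "AMAZON.StopIntent", "samples": []},
                ],
                "types": [
                    {
                        "name": "FINGER_STATE",
                        "values": [
                            {"name": {"value": "open"}},
                            {"name": {"value": "close"}},
                        ],
                    }
                ],
            }
        }
    }


if __name__ == "__main__":
    print(json.dumps(build_interaction_model(), indent=2))
```

The invocation name in the model is what you say after "Alexa, open", so it should match the value set under Invocation Name.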

Now go to LabVIEW and open the Project attached

- In the main VI under the PC target, enter your credentials and the SQS queue name.

- Run the RT VI. (If you are just looking to connect Alexa to LabVIEW, remove all Network Published Shared Variables from the project.)

- Run the PC VI.

- In the Test window of the Alexa Developer Console, enter the instruction "Open robot hand" to open your project.

- Enter the instruction "Display number one".

- See the message in your PC VI.