S.M.A.R.T - Smartcamera based Maneuverable Autonomous Robotic Tank - for Student Design Competition 2013

Contact Information

University: Technion - Israel Institute of Technology

Team Members: Daniel Alon and Aviad Dahan (B.Sc in July 2013)

Faculty Advisers: Oren Rosen and Kobi Kohai

Email Address: danielalon1991@gmail.com or roseno@tx.technion.ac.il or kohai@ee.technion.ac.il or aviad.d@gmail.com

Submission Language: English

Project Information

Title: S.M.A.R.T - Smartcamera based Maneuverable Autonomous Robotic Tank

Description:

This project was carried out during our B.Sc. studies in Electrical Engineering, in the Control, Robotics and Machine Learning Laboratory (CRML) at the Technion - I.I.T.

The main goal of the project was to rapidly design and develop a semi-autonomous robotic tank that uses a smart camera as its vision system, built with NI software and hardware.

Products

NI software and hardware:

Software:

- NI LabVIEW 2012 - with the FPGA, Real-Time, Vision, and Robotics modules.

- NI Vision Assistant 2011.

Hardware:

- Controller - NI sbRIO-9631.

- Camera - NI Smart Camera 1742.

Other software and hardware:

- Robotics Connection Traxster II chassis.

- TRENDnet TEW-431BRP router.

- EDIMAX EW-7318Ug wireless adapter.

- Android or iOS based smartphone equipped with the TouchOSC application.

- PC

The Challenge

In this project, the goal was to develop a semi-autonomous robotic tank, from the drawing board to a working prototype, based on NI hardware and software, including an NI smart camera.

A project such as this one is a great challenge, since it requires both hardware and software development skills, together with multidisciplinary knowledge in electrical and electronics engineering.

The greatest challenge of all was integrating the different elements of the tank into one larger, properly working system.

The Solution

To begin the project, a Traxster II motorized tank was chosen as the chassis of the robotic tank.

The robot is equipped with a National Instruments Single-Board RIO 9631 controller. This controller runs the code that was written for the movement and control parts of the project.

The camera chosen for this project is the National Instruments Smart Camera 1742, which has a built-in processor and runs the vision and image processing algorithms.

The remote control is implemented with a smartphone running the Android or iOS operating system. Data is transferred using the OSC protocol over Wi-Fi. The data contains the readings of the smartphone accelerometers, which are translated into movement parameters such as power and steering angle and then drive the robot.
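The translation from accelerometer readings to movement parameters could look roughly like the Python sketch below. It is not the LabVIEW code used in the project. It assumes the accelerometer arrives as a single OSC message carrying three float32 values; the address `/accxyz`, the axis assignment, the dead zone and the 45 degree steering range are all assumptions made for illustration.

```python
import struct

MAX_STEERING_DEG = 45.0  # assumed steering range, not from the project
DEAD_ZONE = 0.1          # assumed: small tilts are ignored


def _read_padded_string(data, offset):
    """Read a null-terminated OSC string padded to a multiple of 4 bytes."""
    end = data.index(b"\x00", offset)
    text = data[offset:end].decode("ascii")
    next_offset = (end + 4) & ~3  # skip the terminator and the padding
    return text, next_offset


def parse_osc_floats(packet):
    """Parse an OSC message whose arguments are all float32 values."""
    address, offset = _read_padded_string(packet, 0)
    type_tags, offset = _read_padded_string(packet, offset)
    if not type_tags.startswith(",") or set(type_tags[1:]) - {"f"}:
        raise ValueError("only float arguments are handled in this sketch")
    count = len(type_tags) - 1
    values = struct.unpack_from(">%df" % count, packet, offset)
    return address, list(values)


def clamp(value, low=-1.0, high=1.0):
    return max(low, min(high, value))


def apply_dead_zone(value, dead_zone=DEAD_ZONE):
    return 0.0 if abs(value) < dead_zone else value


def tilt_to_command(acc_x, acc_y):
    """Map tilt to (power, steering angle in degrees).

    Assumed mapping: forward/backward tilt (acc_y) sets the power,
    left/right tilt (acc_x) sets the steering angle.
    """
    power = apply_dead_zone(clamp(acc_y))
    steering_deg = apply_dead_zone(clamp(acc_x)) * MAX_STEERING_DEG
    return power, steering_deg


def command_to_tracks(power, steering_deg):
    """Convert (power, steering) into left/right track power in [-1, 1]."""
    turn = steering_deg / MAX_STEERING_DEG
    return clamp(power + turn), clamp(power - turn)


# Example: a hand-built packet standing in for what the phone would send.
packet = b"/accxyz\x00" + b",fff\x00\x00\x00\x00" + struct.pack(">fff", 0.5, -0.25, 1.0)
address, (acc_x, acc_y, acc_z) = parse_osc_floats(packet)
left, right = command_to_tracks(*tilt_to_command(acc_x, acc_y))
```

Mixing power and steering into two track powers is the usual way to steer a tracked (differential drive) chassis; the actual mixing used on the Traxster II is described in the project book, not here.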

The image processing algorithm in this project is an ellipse detection algorithm, programmed to run at a real-time frame rate and to find the target, a black circle on a white board, in various positions and at various angles. (A circle viewed at an angle appears as an ellipse, which is why ellipses rather than circles are detected.)
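To give a feel for what detecting an ellipse involves, here is a minimal Python sketch that fits an ellipse to the dark pixels of a grayscale image using image moments. This is a swapped-in textbook technique, not the NI Vision algorithm that runs on the smart camera; the function names, the threshold of 128 and the synthetic test image are all assumptions for illustration.

```python
import math


def fit_ellipse_to_dark_blob(image, threshold=128):
    """Fit an ellipse to all pixels darker than `threshold`.

    `image` is a list of rows of grayscale values (0 = black, 255 = white).
    Returns (cx, cy, semi_major, semi_minor, fill_ratio) or None.
    A fill_ratio close to 1 means the blob fills the fitted ellipse,
    i.e. the blob is plausibly elliptical.
    """
    pixels = [(x, y) for y, row in enumerate(image)
              for x, value in enumerate(row) if value < threshold]
    n = len(pixels)
    if n == 0:
        return None

    # Centroid of the dark pixels.
    cx = sum(x for x, _ in pixels) / n
    cy = sum(y for _, y in pixels) / n

    # Second-order central moments (the covariance of pixel positions).
    mxx = sum((x - cx) ** 2 for x, _ in pixels) / n
    myy = sum((y - cy) ** 2 for _, y in pixels) / n
    mxy = sum((x - cx) * (y - cy) for x, y in pixels) / n

    # Eigenvalues of the 2x2 covariance matrix.
    half_trace = (mxx + myy) / 2
    spread = math.sqrt(((mxx - myy) / 2) ** 2 + mxy ** 2)
    lam_major = half_trace + spread
    lam_minor = max(half_trace - spread, 0.0)

    # For a filled ellipse, the variance along an axis is (semi-axis)^2 / 4.
    semi_major = 2 * math.sqrt(lam_major)
    semi_minor = 2 * math.sqrt(lam_minor)

    ellipse_area = math.pi * semi_major * semi_minor
    fill_ratio = n / ellipse_area if ellipse_area > 0 else 0.0
    return cx, cy, semi_major, semi_minor, fill_ratio


def make_test_image(width=80, height=60, cx=40, cy=30, a=20, b=10):
    """Synthetic white image with a filled black axis-aligned ellipse."""
    return [[0 if ((x - cx) / a) ** 2 + ((y - cy) / b) ** 2 <= 1 else 255
             for x in range(width)] for y in range(height)]


result = fit_ellipse_to_dark_blob(make_test_image())
```

The fitted centre is what a tracking loop would compare against the image centre. A single-blob fit like this only works when the target is the only dark object in view, which is one reason a real detector is considerably more involved.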

The autonomous tracking algorithm was designed using a Scan-Lock-Act method. First, the robot scans its surroundings, looking for a target with the ellipse detection algorithm while rotating in place, up to a maximum scanning angle of 360 degrees. When a target is detected, the robot locks onto it by bringing it to the center of its field of view. Once the target is locked, the robot acts and moves towards it. Any interruption during one of these steps causes the algorithm to fall back to one of the prior steps.
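The Scan-Lock-Act logic can be pictured as a small state machine. The Python sketch below is only an illustration of that idea, not the project's LabVIEW implementation: the command names, the centering tolerance, and the choice to stop after a full 360 degree scan without a target are assumptions.

```python
from enum import Enum


class State(Enum):
    SCAN = "scan"
    LOCK = "lock"
    ACT = "act"


MAX_SCAN_DEG = 360.0     # maximum scanning angle, from the description above
CENTER_TOLERANCE = 0.1   # assumed: how close to center counts as "locked"


def step(state, target_offset, scanned_deg=0.0):
    """Advance the tracker by one camera frame.

    target_offset: None if no target is visible, otherwise the horizontal
    offset of the target from the image center, normalized to [-1, 1].
    scanned_deg: how far the robot has rotated in the current scan.
    Returns (next_state, command), where command is a hypothetical
    motion request for the drive controller.
    """
    if target_offset is None:
        # Target missing or lost: fall back to (or stay in) scanning.
        if state is State.SCAN and scanned_deg >= MAX_SCAN_DEG:
            return State.SCAN, "stop"  # assumed behavior after a full turn
        return State.SCAN, "rotate"

    if state is State.SCAN:
        # Target found: stop rotating and start centering it.
        return State.LOCK, "stop"

    if abs(target_offset) > CENTER_TOLERANCE:
        # Target visible but off center: (re)center it. From ACT this is
        # the fall back to the previous step.
        command = "turn_right" if target_offset > 0 else "turn_left"
        return State.LOCK, command

    # Target centered: drive towards it.
    return State.ACT, "forward"
```

For example, calling `step(State.ACT, None)` models losing the target while driving and sends the robot back to scanning, while `step(State.ACT, -0.3)` models the target drifting off center and sends it back to locking.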

The project achieved its goal of rapidly designing and implementing a working prototype (in 8 months) using NI hardware and software development tools, thanks to the ease of implementation and the flawless communication between NI software and hardware.

Future development possibilities:

- Follow-up project at the CRML lab - smartphone based robot for indoor mapping and navigation.

- Durability improvement - handling and working properly in rougher terrain.

- Improving the tracking algorithm - making it more efficient using gradient descent (steepest descent), for example.

- Reducing power consumption.

- Military applications - for example, a robot for carrying injured soldiers.

Detailed information can be found in the project book, attached to this post.

The project poster is attached as well.

The final result:

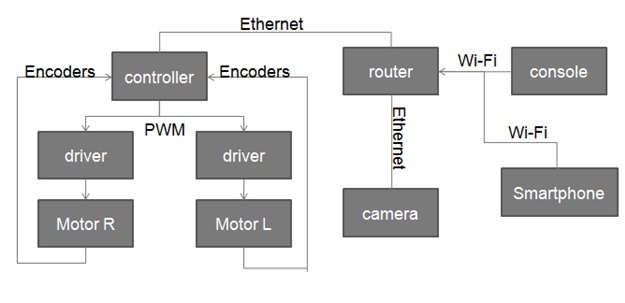

The block diagram of the project:

A demonstration video of the autonomous target tracking and movement: