Video transmission from one computer to another computer over TCP

Solved! 07-15-2022 09:53 AM - edited 07-15-2022 09:54 AM

@billko wrote:

See, that is the beauty of having a standard header with the length (which you calculate before sending) of the rest of the message. You read the header - which is a known length - parse the length field, and read that many more bytes. You can get fancy and add some kind of checksum if you are paranoid about data integrity, too.

If I am using TCP, I would skip adding any type of checksum or CRC to my protocol. TCP already handles that for you at a lower level. Why add yet more checks on the data that don't really provide any additional value? There are better methods for confirming data validity, such as an MD5 hash. But that is used more to ensure you got the correct data itself, not to detect whether it was corrupted during transport: you check it against a known expected value that you already have. Let TCP detect whether there were any issues during transmission.
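To illustrate the distinction drawn above, here is a minimal Python sketch (not LabVIEW, and not code from this thread) of checking received data against an MD5 digest that the receiver already knows. The function name `matches_expected_md5` and the sample payload are made up for illustration.

```python
import hashlib
import hmac


def matches_expected_md5(data: bytes, expected_hex: str) -> bool:
    """Return True if the MD5 digest of `data` equals the known expected digest."""
    actual_hex = hashlib.md5(data).hexdigest()
    # compare_digest compares the two hex strings in constant time.
    return hmac.compare_digest(actual_hex, expected_hex.lower())


# Hypothetical example: the expected digest was obtained ahead of time,
# separately from the data itself.
payload = b"frame data"
expected = hashlib.md5(payload).hexdigest()

print(matches_expected_md5(payload, expected))         # True
print(matches_expected_md5(payload + b"!", expected))  # False
```

This answers "is this the data I expected?", which is a different question from "did the bytes survive the trip?", and the latter is the one TCP already covers.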

Mark Yedinak

Certified LabVIEW Architect

LabVIEW Champion

"Does anyone know where the love of God goes when the waves turn the minutes to hours?"

Wreck of the Edmund Fitzgerald - Gordon Lightfoot

07-15-2022 11:11 AM - edited 07-15-2022 11:12 AM

@Mark_Yedinak wrote:

Ok, so I see the basic issue. Create a new typedef and call it "Message Header". This typedef should contain the message ID and length. Modify the message typedef to use the new header typedef. Make sure that the order of the cluster is that the message header cluster is the first element of the cluster and the data field is the next item in the cluster. In the receive message VI replace the typedef wired to the unflatten from string to use the new header typedef. This should correct the issue that you are seeing.

Note: I am not at a computer where I can update the code for this post. If you still have issues, I can post an update later. The current client code would need to be reworked to support auto-reconnect.

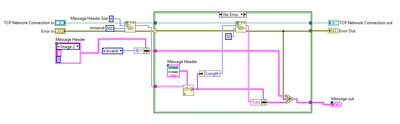

I guess you meant something like this, right?

I replaced the previous typedef with the newly created "Message Header" typedef everywhere.

But the problem is still not solved; I keep getting the same error as before.

07-15-2022 11:35 AM

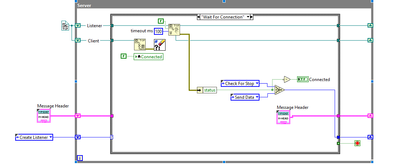

No, you did not do as I suggested. I said to create a new typedef for the message header. The message typedef is updated to include the header typedef and the data.

See the attached code.
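For readers without LabVIEW at hand, the nesting described above can be sketched in Python roughly as follows. This is not the attached code: the field widths (2-byte message ID, 4-byte length), the big-endian byte order, and all class and constant names are assumptions chosen for illustration.

```python
import struct
from dataclasses import dataclass

# Hypothetical field widths: 2-byte message ID, 4-byte payload length,
# both big-endian ("network" byte order).
HEADER_FORMAT = ">HI"
HEADER_SIZE = struct.calcsize(HEADER_FORMAT)  # 6 bytes


@dataclass
class MessageHeader:
    message_id: int
    length: int

    def pack(self) -> bytes:
        return struct.pack(HEADER_FORMAT, self.message_id, self.length)

    @classmethod
    def unpack(cls, raw: bytes) -> "MessageHeader":
        message_id, length = struct.unpack(HEADER_FORMAT, raw[:HEADER_SIZE])
        return cls(message_id, length)


@dataclass
class Message:
    header: MessageHeader  # the header is the first element
    data: bytes            # the data field follows it

    @classmethod
    def build(cls, message_id: int, data: bytes) -> "Message":
        # The length is calculated from the data before sending.
        return cls(MessageHeader(message_id, len(data)), data)

    def pack(self) -> bytes:
        return self.header.pack() + self.data
```

The point of the separate header type is that the receiver can decode the fixed-size header on its own before it knows anything about the data that follows.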

Mark Yedinak

07-15-2022 11:57 AM - edited 07-15-2022 11:57 AM

My main point was that it was so easy to develop a protocol with a simple header that takes all the guesswork out of reading and decoding a message. However, I forgot that TCP has that kind of robustness stuff built in. That's the whole idea behind TCP.
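A rough Python sketch of that read pattern, assuming the header is nothing more than a 4-byte big-endian length (the helper names `recv_exact`, `send_message`, and `recv_message` are made up here, not taken from the thread's code):

```python
import socket
import struct

LENGTH_FORMAT = ">I"  # assumed: 4-byte big-endian payload length
LENGTH_SIZE = struct.calcsize(LENGTH_FORMAT)


def recv_exact(sock: socket.socket, count: int) -> bytes:
    """Read exactly `count` bytes; TCP may deliver them in several pieces."""
    chunks = []
    remaining = count
    while remaining > 0:
        chunk = sock.recv(remaining)
        if not chunk:
            raise ConnectionError("connection closed mid-message")
        chunks.append(chunk)
        remaining -= len(chunk)
    return b"".join(chunks)


def send_message(sock: socket.socket, payload: bytes) -> None:
    """Send the length header followed by the payload."""
    sock.sendall(struct.pack(LENGTH_FORMAT, len(payload)) + payload)


def recv_message(sock: socket.socket) -> bytes:
    """Read the fixed-size header, parse the length, then read that many bytes."""
    (length,) = struct.unpack(LENGTH_FORMAT, recv_exact(sock, LENGTH_SIZE))
    return recv_exact(sock, length)
```

Because the receiver always knows how many bytes to ask for next, message boundaries never have to be guessed from timing or delimiters.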

(Mid-Level minion.)

My support system ensures that I don't look totally incompetent.

Proud to say that I've progressed beyond knowing just enough to be dangerous. I now know enough to know that I have no clue about anything at all.

Humble author of the CLAD Nugget.

07-15-2022 12:19 PM

I understood your point completely. I have just seen so many protocols built on TCP that include an additional CRC, and I always wonder why it was added when the underlying protocol takes care of that for you. However, once you have a defined header, it is easy to extend the protocol as needed. For instance, a source ID and/or destination ID can be useful when implementing a publish/subscribe type protocol. A message ID is also useful (I should really change my header definition to call the existing field the message type); it can be used in an asynchronous protocol to track missing responses at the application layer.
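As a sketch of how such an extended header and response tracking might look, here is a small Python example. The header layout, the field widths, and the `PendingRequests` class are all hypothetical; nothing here comes from the code posted in this thread.

```python
import struct
import time

# Hypothetical layout: source ID, destination ID, message type (2 bytes each),
# then message ID and payload length (4 bytes each), all big-endian.
EXT_HEADER = struct.Struct(">HHHII")


def pack_header(source_id, dest_id, msg_type, message_id, length) -> bytes:
    return EXT_HEADER.pack(source_id, dest_id, msg_type, message_id, length)


class PendingRequests:
    """Track which message IDs have been sent but not yet answered."""

    def __init__(self, timeout_s: float):
        self.timeout_s = timeout_s
        self._sent_at = {}  # message_id -> time the request was sent

    def sent(self, message_id: int, now: float = None) -> None:
        self._sent_at[message_id] = time.monotonic() if now is None else now

    def response_received(self, message_id: int) -> None:
        self._sent_at.pop(message_id, None)

    def missing(self, now: float = None) -> list:
        """Return message IDs whose response is overdue."""
        now = time.monotonic() if now is None else now
        return sorted(
            mid for mid, t in self._sent_at.items() if now - t > self.timeout_s
        )
```

The sender would call `sent()` for each request, `response_received()` when a reply carrying the same message ID arrives, and poll `missing()` periodically to decide what to retry or report.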

Anyway, the whole point of this discussion is that when sending data between applications/tasks in your system it is a good idea to have some level of an application layer protocol.

Mark Yedinak

07-15-2022 01:26 PM

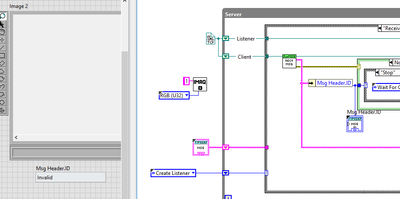

Of course I didn't understand correctly.

Now I am encountering another interesting error.

What could cause an error like the one shown to occur here?

Do you think everything is okay here?

07-15-2022 01:59 PM

Don't get me wrong - I super-appreciated the reminder! 🙂

(Mid-Level minion.)

07-15-2022 09:43 PM

Here is an updated client and server. I removed the image code so I could test it. There were a few minor issues with encoding and decoding the data. Depending on the size of your image data, I am not sure how fast you will be able to transfer it. I updated the client so it will auto-reconnect. The server will handle one client. This is the basic concept; it is not what I would put into a production system, and more would need to be done to make it robust. If you need a fairly decent frame rate for your image data, I would not handle both images on a single connection. I would have separate tasks handle each image individually.
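The attached client is a LabVIEW VI, so it cannot be shown inline. As a rough illustration of the auto-reconnect idea only, here is a Python sketch. The function `connect_with_retry`, its parameters, and the fixed retry delay are assumptions for this example and do not describe how the attached VI is built.

```python
import socket
import time


def connect_with_retry(host, port, retry_delay_s=1.0, max_attempts=None,
                       connect=socket.create_connection, sleep=time.sleep):
    """Keep trying to connect until it succeeds or max_attempts is reached.

    `connect` and `sleep` are injectable so the loop can be tested
    without a real network.
    """
    attempt = 0
    while True:
        attempt += 1
        try:
            return connect((host, port))
        except OSError:
            # Give up only if a limit was set and has been reached.
            if max_attempts is not None and attempt >= max_attempts:
                raise
            sleep(retry_delay_s)
```

In a client loop, any send or receive error would be handled by closing the socket and calling `connect_with_retry` again before resuming.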

Mark Yedinak

07-16-2022 01:40 AM

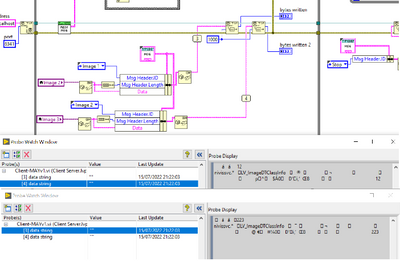

The example seems to work fine. Why did you stop sending the data length?

Right now I guess I'll have to fix a few issues.

I am seeing a few of the problems you anticipated above.

- There is some slowdown in video reception and transmission. The video is not fluid.

- I can't change the data transfer rate. Both the sent and the received data are slow.

PS: I believe it should be faster than this, because data transferred faster in my previous few tests.

- What do you mean by handling the images on separate connections so that the video transfer is fast?

By the way, I must say that your help has been very informative.

07-16-2022 10:52 AM

I removed the length because Flatten To String adds it automatically. That was one issue in the previous example: the length was being added twice, which threw off the protocol. Letting Flatten To String handle the length was sufficient for the protocol to work.
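The effect of a doubled length can be reproduced outside LabVIEW. The following Python sketch emulates a flatten step that prepends a 4-byte big-endian length, which is an assumption about the wire format made for this illustration; `flatten_string` and `unflatten_string` are stand-ins, not LabVIEW functions.

```python
import struct


def flatten_string(data: bytes) -> bytes:
    """Emulate a flatten step that prepends a 4-byte big-endian length (assumed)."""
    return struct.pack(">i", len(data)) + data


def unflatten_string(raw: bytes) -> bytes:
    """Read the 4-byte length, then return that many bytes after it."""
    (length,) = struct.unpack(">i", raw[:4])
    return raw[4:4 + length]


payload = b"image bytes"

# Correct: the flatten step supplies the only length prefix.
once = flatten_string(payload)

# Bug: a manual length field is added on top of the automatic one.
twice = struct.pack(">i", len(payload)) + flatten_string(payload)

print(unflatten_string(once))   # b'image bytes'
print(unflatten_string(twice))  # prefix bytes leak into the data, tail is cut off
```

With the doubled prefix the receiver reads the right number of bytes from the wrong offset, so every later message on the stream is misaligned as well.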

I don't know exactly how large your video data is, although I know you said 640x480. For a color image this can be over 1 MB of data. If you handle both images in the same data stream, you have to transmit and process over 2 MB of data for each frame. TCP will split this data into many segments. When sending the simulated 500 KB buffers, I noticed the application was having trouble keeping up. There were zero-window messages in the TCP stream, which means the receiver was not removing data from its buffer fast enough. TCP handles this, but it does mean the maximum throughput has been reached. If you handle each image in its own TCP stream, you effectively cut the data per stream in half, giving each stream a better chance of keeping up. The application would then have to support multiple streams, either by using multiple servers, by using a server architecture that handles multiple clients, or by establishing a separate connection for each image stream. You have never said what frame rate you are trying to achieve.
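The numbers above can be checked with a short Python calculation. It assumes uncompressed 32-bit pixels (4 bytes each), which is a guess, and since no frame rate has been stated the 10 and 30 fps values are purely hypothetical.

```python
def frame_bytes(width: int, height: int, bytes_per_pixel: int) -> int:
    """Size of one uncompressed image in bytes."""
    return width * height * bytes_per_pixel


def required_mbit_per_s(bytes_per_frame: int, frames_per_second: float) -> float:
    """Raw payload bandwidth in megabits per second (protocol overhead ignored)."""
    return bytes_per_frame * 8 * frames_per_second / 1_000_000


one_image = frame_bytes(640, 480, 4)  # 1,228,800 bytes, assuming 32-bit pixels
both_images = 2 * one_image           # 2,457,600 bytes per frame pair

for fps in (10, 30):  # hypothetical frame rates
    print(
        f"{fps} fps: both images on one stream "
        f"{required_mbit_per_s(both_images, fps):.1f} Mbit/s, "
        f"one image per stream {required_mbit_per_s(one_image, fps):.1f} Mbit/s"
    )
```

Under these assumptions a single stream carrying both images needs roughly 197 Mbit/s at 10 fps and 590 Mbit/s at 30 fps, so splitting the images across two streams halves what each connection has to sustain.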

Mark Yedinak
