TDMS Defrag Locks up LabVIEW

04-09-2010 11:53 AM

TDMS defrag locks up LabVIEW (including the shared variable engine) until the defrag is done.

I'm using LabVIEW 2009. Tested on WinXP (2GB RAM, dual core) and Win2008 Server (8GB RAM, dual core).

The shared variable reading VI is set to time-critical priority. The TDMS write/defrag VI is set to the "Other 1" thread.

I also tried making the TDMS write/defrag a separate exe, but it still locks up my shared variable reading exe!

The lockup time is proportional to the TDMS file size. For a 1 GB file, the lockup can last 40 to 60 seconds.

I need to defrag from time to time so that other users on the local network can read the TDMS file during the test.

The TDMS file is big, up to 2 GB. The data is an array of doubles.
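As a rough worked example of the figures above, the following Python sketch extrapolates the lockup time to other file sizes. It assumes the scaling is strictly linear, which the post only implies by "proportional"; the function name `estimate_lockup_s` and its parameters are made up for illustration.

```python
def estimate_lockup_s(file_size_gb, ref_size_gb=1.0, ref_lockup_s=(40.0, 60.0)):
    """Scale the reported (low, high) lockup range linearly with file size.

    Assumption: lockup time is directly proportional to file size,
    anchored at the reported 40 to 60 s for a 1 GB file.
    """
    scale = file_size_gb / ref_size_gb
    low, high = ref_lockup_s
    return (low * scale, high * scale)


if __name__ == "__main__":
    # Largest file size mentioned in the post.
    print(estimate_lockup_s(2.0))
```

Under that assumption, a 2 GB file would block for roughly 80 to 120 seconds per defrag.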

Has anyone run into this problem before? Is there a workaround?

TIA.

04-09-2010 12:04 PM

Hi George,

I don't have a solution but I have some thoughts.

This sounds like it could be a memory management issue, since LV will run in the low 2 GB of virtual memory unless you invoke the 3 GB switch. TDMS, from what I know, does its thing by mapping the TDMS file to memory. Since a defrag of a TDMS file amounts to remapping the file to blocks on disk, I suspect that the OS memory manager is involved.

Closely related to that scenario is the case where the TC (time-critical) code is getting swapped out due to running in virtual memory, so...

Is LV using more than the available physical memory?

The Desktop Execution Trace Toolkit may shed some light on what you are seeing.

If you figure this out, could you please post back? It is only a matter of time until I see the same thing.

Thank you,

Ben

04-12-2010 10:04 AM

Ben,

Thanks for the reply.

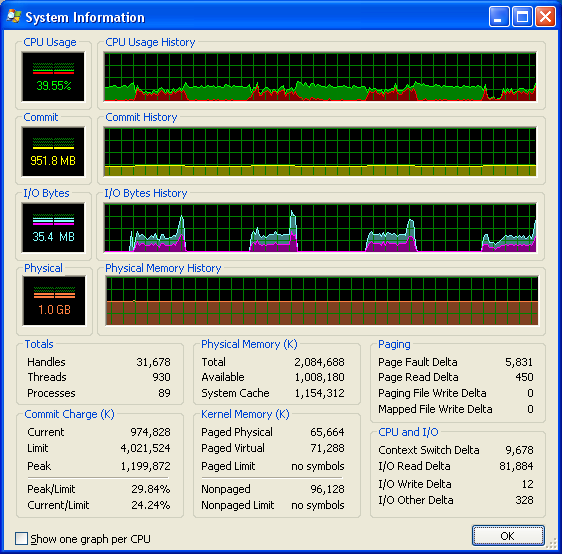

Attached is the memory usage on my laptop. The periodic peaks are the defragmentation times.

I also tried just closing and re-opening the TDMS file without defragmenting. The lockup time is 100 to 200 ms. That means I'm still losing data.

04-12-2010 10:19 AM

Hi George,

I am going to request that an NI engineer get involved, but my final thoughts are focused on the high kernel-mode times (red on CPU Usage). The kernel handles all memory management functions, and if the delay is indeed the memory manager, then this may end up being a disk I/O bottleneck where the only solution is to move to a solid-state drive that can handle the I/O demands.

If you look, you will also see your CPU usage dropping off slightly during the defrag, implying the code is in a page-fault wait state while the memory manager rearranges the furniture.

Like I said, I'm pinging for support.

Ben

04-12-2010 10:44 AM

Is Windows Server 2008 a 64-bit operating system?

If not, you have 3.5 GB available on the server.

Can you increase the cache size of the file system to about 4 GB?

04-12-2010 01:20 PM

Thanks, guys. I contacted NI support before posting. The AE passed the info to R&D.

The server is a 64-bit system. I don't know how to change the cache size on the server. I'm not familiar with that OS.

04-12-2010 01:46 PM

George,

the way the TDMS functions are implemented, they lock each other, but they wouldn't directly be locking anything else in the same process. Does your application access TDMS from multiple areas in parallel? That way, one TDMS function could keep another one from executing. Any function that has a data dependency on the locked function would have to wait until the function that has caused the lock is done.
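The locking behaviour described above can be illustrated with a minimal Python sketch. This is not LabVIEW code and not the actual TDMS implementation; it simply assumes a single lock, here called `tdms_lock` (a hypothetical name), that every TDMS-like call must hold. A long "defrag" holds the lock, a "write" that needs the same lock has to wait, and unrelated work that never touches the lock runs in the meantime.

```python
import threading


def run_demo():
    tdms_lock = threading.Lock()        # hypothetical lock shared by all TDMS-like calls
    events = []                         # order in which things happened
    events_guard = threading.Lock()     # protects the events list
    defrag_started = threading.Event()
    unrelated_done = threading.Event()

    def log(msg):
        with events_guard:
            events.append(msg)

    def defrag():
        # Long-running operation that holds the shared lock throughout.
        with tdms_lock:
            log("defrag start")
            defrag_started.set()
            # Keep holding the lock until the unrelated work has finished,
            # to show that code not using the lock is not blocked.
            unrelated_done.wait(timeout=5)
            log("defrag end")

    def tdms_write():
        # Needs the same lock, so it waits until defrag() releases it.
        defrag_started.wait(timeout=5)
        with tdms_lock:
            log("write")

    def unrelated_work():
        # Takes no TDMS lock, so it runs while defrag() is still busy.
        defrag_started.wait(timeout=5)
        log("unrelated")
        unrelated_done.set()

    threads = [threading.Thread(target=f) for f in (defrag, tdms_write, unrelated_work)]
    for t in threads:
        t.start()
    for t in threads:
        t.join()
    return events


if __name__ == "__main__":
    print(run_demo())
```

In this model the write only proceeds after the defrag ends, while the unrelated work completes during the defrag. If code that never calls a TDMS function also stalls, as reported for the shared variable reader, the cause would have to lie outside this kind of lock.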

A few questions regarding fragmentation ...

To what extent are your files fragmented (the size of your tdms_index file should be a good indicator for that)?

Why is it fragmented that way? Are you only writing arrays of doubles, or are you writing other data, too? Do the arrays vary in size?

Have you tried anything to reduce fragmentation, e.g. the NI_MinimumBufferSize feature?

Herbert

04-12-2010 02:28 PM

Herbert,

Thanks for the reply.

In my application, one VI does the open, write, close, defrag, and re-open, running on Server 2008.

I have other applications running on the local network that read the file in read-only mode.

The index file is about 150 KB after defragmenting. I defrag every minute. I can defrag less frequently, but I have to update the index file so that other applications can read the latest data.

The array size is fixed during the test, but may be different for the next test. I write properties at the beginning and the end, and only write the array in between.

I've tried setting NI_MinimumBufferSize to 10,000 (is this bytes?). I set it as a property of the file.

I also tried to avoid the defrag and just close and re-open to update the index file, but I'm still losing data (the shared variable read stops).

And at the end of the test, the file size is about 1.3 GB. The defrag causes LabVIEW to hang, and the TDMS file is corrupted when I kill the process.

04-12-2010 02:43 PM

George,

if the issue you are trying to fix is that the reading application can see only the data that was in the file by the time the application opened the file, maybe you can fix that within the reading application. The writing application will keep the index file up to date all the time, so all it might take is to move the close/re-open from the writing to the reading app in order to refresh the information the reading app is looking at.
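The idea of refreshing on the reader side can be sketched in Python as follows. This is only an analogy: a plain binary file of doubles stands in for the TDMS file, and TDMS index handling and file-sharing rules are not modelled. The helper names (`append_doubles`, `read_new_doubles`) are hypothetical. The writer only appends; the reader re-opens the file on each poll and picks up whatever was added since its last offset.

```python
import os
import struct
import tempfile


def append_doubles(path, values):
    # Writer side: append values as little-endian doubles.
    with open(path, "ab") as f:
        f.write(struct.pack(f"<{len(values)}d", *values))


def read_new_doubles(path, offset):
    # Reader side: open read-only, read everything after `offset`, then close.
    # Re-opening on every call is what refreshes the reader's view of the file.
    with open(path, "rb") as f:
        f.seek(offset)
        raw = f.read()
    usable = len(raw) - len(raw) % 8   # ignore a partially written value
    values = list(struct.unpack(f"<{usable // 8}d", raw[:usable]))
    return values, offset + usable


def demo():
    fd, path = tempfile.mkstemp(suffix=".bin")
    os.close(fd)
    try:
        append_doubles(path, [1.0, 2.0])
        first, offset = read_new_doubles(path, 0)
        append_doubles(path, [3.0])                       # writer keeps going
        second, offset = read_new_doubles(path, offset)   # reader re-opens
        return first, second, offset
    finally:
        os.remove(path)


if __name__ == "__main__":
    print(demo())
```

The design point is that the writer never has to close or defragment just to make new data visible; the cost of refreshing moves to the reader, which is not time critical.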

The NI_MinimumBufferSize property might still be helpful, but it needs to be set on every channel. It won't work on a file or group (I know ... sorry). You can use different values for each channel. The property value is the number of data values (based on the channel data type) that will be collected in memory for this channel before LabVIEW writes it to disk. This of course might cause a longer delay between acquiring data and that data being visible for the reading application.
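To make the effect of a per-channel minimum buffer size concrete, here is a toy Python model. It is not the LabVIEW implementation: the class `BufferedChannel` and the rule "flush as soon as the buffer reaches the threshold" are assumptions made for illustration. It counts how many separate chunks end up on disk, which is a rough proxy for fragmentation.

```python
class BufferedChannel:
    """Toy model of a channel with a minimum buffer size (in values, not bytes)."""

    def __init__(self, min_buffer_size):
        self.min_buffer_size = min_buffer_size
        self.pending = []     # values held in memory, not yet visible to readers
        self.segments = []    # chunks that have been "written to disk"

    def write(self, values):
        self.pending.extend(values)
        # Assumed rule: flush once enough values have accumulated.
        if len(self.pending) >= self.min_buffer_size:
            self.flush()

    def flush(self):
        if self.pending:
            self.segments.append(list(self.pending))
            self.pending.clear()


def count_segments(min_buffer_size, chunk, n_writes):
    ch = BufferedChannel(min_buffer_size)
    for _ in range(n_writes):
        ch.write([0.0] * chunk)
    ch.flush()  # closing the file writes out whatever is left
    return len(ch.segments)


if __name__ == "__main__":
    print(count_segments(1, 100, 1000))       # effectively unbuffered
    print(count_segments(10_000, 100, 1000))  # buffer of 10,000 values
```

With 1,000 writes of 100 values each, the unbuffered case produces 1,000 chunks and the 10,000-value buffer produces 10. The trade-off is the one mentioned above: up to 10,000 values per channel sit in memory before a reader can see them.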

Hope that helps,

Herbert

04-12-2010 03:34 PM

Herbert,

Thanks for the reply.

The problem started when I realized it took 20 seconds to open a TDMS file, not including reading the file. The file size was ~100 MB, and the index file was ~5 MB. There were 3 properties for each channel.

After the defrag, the file size was reduced to 40 MB and the index file to ~1 MB. The opening time dropped to < 1 second.

If the file size is 500 MB before the defrag, I can't defrag it on my laptop; I get a memory full error. That's why I'm avoiding setting properties for each channel.

The reading applications cannot be allowed to close the file, otherwise the writing application can't write.

The reading applications open the TDMS file in read-only mode and can't update the index file. The writing application does update the index file.

Not being able to read the latest data isn't the big problem. The big problem is that the reading applications open the file far too slowly without a defrag, and data is lost with a defrag.