TCP Read - problem parsing data

Solved! 09-19-2019 09:26 PM

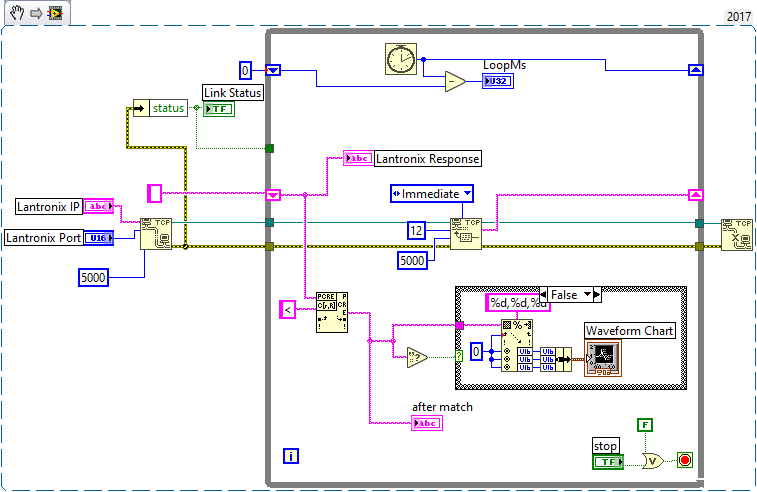

I am trying to read a TCP packet, parse the data, and plot it.

The incoming data is in the format "<123,456,78". The character '<' is the start marker, and there is no CR or LF after the string. The data string is sent once every 100 ms.

I have two issues. First, there is an occasional packet drop. While this is not a major issue, as the data pertains to temperature, I would still like to handle it properly because the plot shows it as a spike to zero. Can this be eliminated if I first get the data packet size and then feed that into the TCP Read?

Second, Scan from String always returns an error, even if I invoke it only for a valid data string. What's wrong?

LabVIEW to Automate Hydraulic Test rigs.

09-20-2019 02:57 AM - edited 09-20-2019 03:08 AM

You are making the classic parsing error: assuming that your program is slow and magical enough to call TCP Read in Immediate mode only when a complete message has arrived. If the interval between messages is long and the message itself is very short, the chance of that is very high, but never 100%.

Imagine TCP Read executes at the moment the other side is sending data and only reads the first byte (or the first few bytes). TCP Read in Immediate mode will return ... indeed, immediately, as soon as it sees at least one byte in the incoming buffer! Your parser concludes "Hurray, I got a message" and passes it to the Scan from String function, which concludes "wtf, I didn't get enough data" and throws an error.

Now the solution seems obvious: use the error indication of the Scan from String function to determine whether the message was valid, and if it wasn't, just put the string in the shift register anyhow to wait for the next round, then prepend it to the next received message.

EXCEPT: what happens when you call TCP Read when all but the last one or two characters of a message like <123,134,145 have been received?

Exactly: Scan from String will be happy and decode it into 123, 134, 1 and present it to you as a valid measurement sequence and you are still in trouble.
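To make the truncation case concrete, here is a minimal Python sketch. It is not LabVIEW code: `scan_frame` and its regular expression are hypothetical stand-ins for Scan from String with a three-integer format, assumed only for illustration.

```python
import re

# Hypothetical stand-in for Scan from String: three integers after a '<'.
FRAME = re.compile(r"<(\d+),(\d+),(\d+)")

def scan_frame(text):
    """Return the three integers found in text, or None if nothing matches."""
    m = FRAME.search(text)
    return tuple(int(g) for g in m.groups()) if m else None

full = "<123,134,145"
print(scan_frame(full[:1]))    # only "<" has arrived: no match, so None
print(scan_frame(full[:-2]))   # "<123,134,1": parses "successfully", last value wrong
print(scan_frame(full))        # the complete message
```

The second call is the dangerous one: the parser reports success, so an error flag alone cannot tell a complete message from a truncated one.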

There is only one fairly foolproof way to avoid that: if you got any valid data containing your start marker, you need to do a second TCP Read with a small timeout (significantly shorter than 100 ms) and repeat that until you do not receive a string with a start marker. The entire string, starting from the last start marker that occurred, you then pass to Scan from String, and whatever this function doesn't consume, if anything, you put in the shift register for the next round. What I don't like about such a solution is its inherent reliance on timing. It assumes that the sender will never be changed to send messages more often than every 100 ms, or it all falls apart. Generally, timing fixes such as delays in protocol handling are a sure way to get into trouble later on. But in this case, with such a stupid protocol, I can't think of anything else.
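A rough Python sketch of this idle-gap scheme, under stated assumptions: `read_burst` and `last_frame` are hypothetical helper names, the 20 ms idle timeout is an arbitrary value well below the 100 ms message interval, and a plain blocking socket stands in for LabVIEW's TCP Read. It simplifies the loop to "keep reading until the line goes quiet".

```python
import socket

def read_burst(sock, idle_timeout=0.02, first_timeout=1.0, bufsize=64):
    """Collect bytes until the line has been quiet for idle_timeout seconds."""
    chunks = []
    sock.settimeout(first_timeout)      # wait longer for the first bytes
    while True:
        try:
            data = sock.recv(bufsize)
        except socket.timeout:
            break                       # silence: treat it as end of message
        if not data:
            break                       # peer closed the connection
        chunks.append(data)
        sock.settimeout(idle_timeout)   # follow-up reads only wait for the gap
    return b"".join(chunks)

def last_frame(burst, start=b"<"):
    """Return the text from the last start marker onward, or None."""
    pos = burst.rfind(start)
    return burst[pos:].decode("ascii") if pos >= 0 else None
```

As the post notes, this only works while the sender's interval stays comfortably longer than `idle_timeout`; the timing assumption is baked into the framing.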

This all may sound complicated, and in fact it is, but that's what you get from a stupid protocol like this that has neither a fixed size NOR a termination marker. The only possible termination marker left is the fact that after the end of the message there won't be more characters for almost 100 ms. The usual excuse that it doesn't need any protocol characteristics because it was only meant to be displayed in a terminal also falls totally flat on its face: try to display such a string in a terminal without at least a <CR> and/or <LF> at the end of the string. Guaranteed headache for anyone trying to make any sense of the numeric gibberish on the terminal screen.

09-20-2019 04:05 AM

@Rolfk

That was a detailed explanation of what's happening and what ought to happen. Thanks.

Yes, the termination marker is something I have been wanting in the stream. I can then analyse the incoming stream to make sure that the start and end markers are present, and only then pass it to Scan from String to stop it from calling me names.

I do agree that a packet with fixed length and start/end markers is ideal. But then the world is never ideal, and so requires people like us to make it so... ha ha!

09-20-2019 10:02 AM

@Rolfk

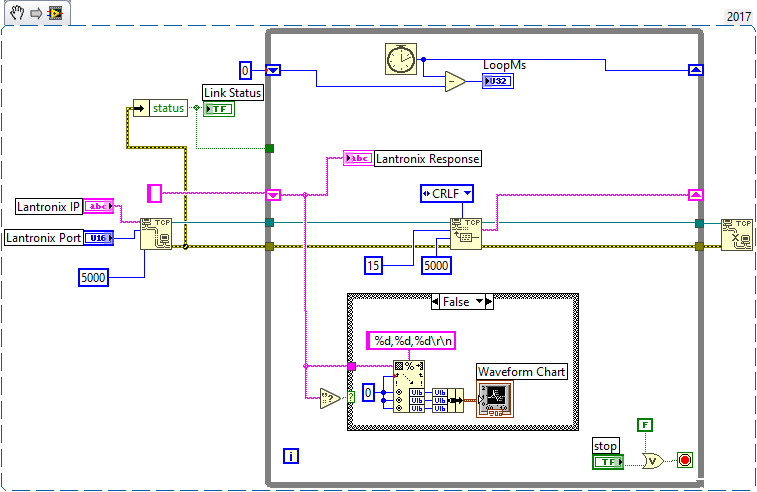

Thanks for the analysis of the problem; it helped open up new avenues. I now have a solid TCP Read. Two things helped:

1. Increasing the serial baud rate of the device server (serial-to-TCP converter) from 9600 to 115200 bps.

2. Adding a CRLF to the end of the data string and using that as a delimiter in the TCP Read.

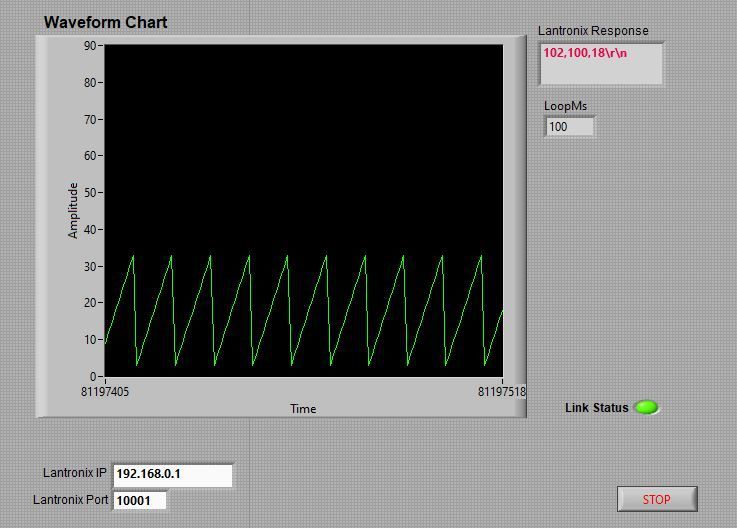

Worked great... it has been running for almost an hour without a single dropped packet. To observe this, I sent a sawtooth waveform and plotted it; you can see the plot in the screenshots, along with the code that worked!
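For readers outside LabVIEW, the same CRLF-delimited approach can be sketched in Python. This is not the block diagram from the screenshots: `split_frames` and `parse_frame` are hypothetical helpers, and the chunks in the loop simulate TCP reads that cut messages at arbitrary points.

```python
def split_frames(buffer, delimiter=b"\r\n"):
    """Split buffer into complete frames; return (frames, leftover bytes)."""
    *frames, rest = buffer.split(delimiter)
    return frames, rest

def parse_frame(frame, start=b"<"):
    """Parse b'<123,456,78' into a list of ints; return None if malformed."""
    pos = frame.rfind(start)
    if pos < 0:
        return None
    try:
        text = frame[pos + 1:].decode("ascii")
        return [int(field) for field in text.split(",")]
    except ValueError:              # also covers UnicodeDecodeError
        return None

buffer = b""
values = []
# Simulated TCP reads that split messages in awkward places.
for chunk in (b"<123,4", b"56,78\r\n<1", b"24,457,79\r\n<12"):
    buffer += chunk
    frames, buffer = split_frames(buffer)
    values.extend(parse_frame(f) for f in frames)

print(values)   # [[123, 456, 78], [124, 457, 79]]
print(buffer)   # b'<12' stays buffered until its CRLF arrives
```

Because a frame is only parsed once its terminator has been seen, a partial read can no longer be mistaken for a complete message, and no timing assumption is needed.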

09-20-2019 10:23 AM

Since you are now using a termination character sequence, you really should set the Bytes To Read to be more than any message could possibly be. I would bump it up to more like 50, just to be safe. The read will still end when the CR LF sequence is read, but this gives you a lot better chance of not being caught off guard when a message comes in that is longer than you expect (maybe 20 characters instead of 12).
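The effect of a too-small read limit can be illustrated with a small Python emulation. `read_line` is a hypothetical helper that only loosely mimics a delimiter-terminated read with a byte cap; it is not LabVIEW's implementation, and the 17-byte message is made up for the example.

```python
import io

def read_line(stream, max_bytes, delimiter=b"\r\n"):
    """Read from stream until delimiter is seen or max_bytes have been read."""
    data = bytearray()
    while len(data) < max_bytes and not data.endswith(delimiter):
        byte = stream.read(1)
        if not byte:
            break                   # no more data available
        data += byte
    return bytes(data)

message = b"<1234,5678,9012\r\n"    # 17 bytes, longer than the usual 12

short = io.BytesIO(message)
print(read_line(short, 12))         # b'<1234,5678,9'  cut off before the CRLF
print(read_line(short, 12))         # b'012\r\n'       tail looks like a new message
print(read_line(io.BytesIO(message), 50))   # the whole line, CRLF included
```

With the limit at 12 the longer message is split into two bogus reads; with a generous limit the terminator alone decides where the read ends.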

There are only two ways to tell somebody thanks: Kudos and Marked Solutions

Unofficial Forum Rules and Guidelines

"Not that we are sufficient in ourselves to claim anything as coming from us, but our sufficiency is from God" - 2 Corinthians 3:5

09-20-2019 10:43 AM

@crossrulz wrote:

Since you are now using a termination character sequence, you really should set the Bytes To Read to be more than any message could possibly be. I would bump it up to more like 50, just to be safe. The read will still end when the CR LF sequence is read, but this gives you a lot better chance of not being caught off guard when a message comes in that is longer than you expect (maybe 20 characters instead of 12).

Done. Thanks for the reminder!

09-20-2019 11:06 AM

I'm curious, why would changing the BAUD rate on the device have any effect on the TCP communications?

Mark Yedinak

Certified LabVIEW Architect

LabVIEW Champion

"Does anyone know where the love of God goes when the waves turn the minutes to hours?"

Wreck of the Edmund Fitzgerald - Gordon Lightfoot

09-20-2019 11:47 AM

I understand your point.

Let me say what I think...

The window available for sending roughly 12 bytes is 100 ms, and it could get shorter in future if the client wants a faster logging rate, say 20 ms.

At 9600 baud we need about 1.04 ms to send each byte, so it takes about 13 ms to send all 12 bytes. Given the various latencies, I think it's better to keep this time as short as practical, and to be ready for a faster rate if the need arises.
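The arithmetic behind these figures can be checked with a few lines of Python. The 10 bits per byte is an assumption (8N1 framing: one start bit, eight data bits, one stop bit), which matches the 1.04 ms per byte quoted above; `frame_time_ms` is a hypothetical helper name.

```python
def frame_time_ms(n_bytes, baud, bits_per_byte=10):
    """Time on the serial wire in ms; 10 bits per byte assumes 8N1 framing."""
    return n_bytes * bits_per_byte / baud * 1000.0

for baud in (9600, 115200):
    print(f"{baud:>6} baud: {frame_time_ms(12, baud):.2f} ms for 12 bytes")
```

Under that assumption, 12 bytes take 12.5 ms at 9600 baud and roughly 1 ms at 115200 baud, so the faster rate shortens the period during which a read can catch a half-sent message.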

09-20-2019 11:51 AM

@MogaRaghu wrote:

I understand your point.

Let me say what I think...

The window available for sending roughly 12 bytes is 100 ms, and it could get shorter in future if the client wants a faster logging rate, say 20 ms.

At 9600 baud we need about 1.04 ms to send each byte, so it takes about 13 ms to send all 12 bytes. Given the various latencies, I think it's better to keep this time as short as practical, and to be ready for a faster rate if the need arises.

But your communications are over the network, not serial. So why would the BAUD rate affect the timing of a network connection? I fully understand how BAUD rate affects serial communications. In fact, I have classes for connections where I dynamically calculate timeout values for serial communications based on the BAUD rate and the size of the data. I still see no relationship between serial settings and network communications.

Mark Yedinak

09-20-2019 01:03 PM - edited 09-20-2019 01:09 PM

As I see it, increasing the baud rate did shrink the window in which this problem could occur with the original code. But that's not a fix, as it could still occur. The real fix is of course to add proper termination signaling, as you have done. With that change, increasing the baud rate has no effect other than increasing the power consumption. And yes, it may be helpful when the client wants faster updates, but honestly, how fast can you possibly want environmental values to update? These values won't typically change much over the course of a second, so sampling them more than once or twice a second is likely overkill.