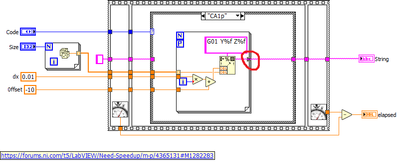

Need Speedup

Solved! 04-08-2024 07:56 AM

04-08-2024 08:07 AM

@Yamaeda wrote:

Making a preallocated string and replacing content might be a good (and old school) alternative.

Wait wait wait I'm one of the younger ones here! *shrivels up*

Still the de facto way anytime you do embedded C 😉
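For readers who want the idea in text form, here is a minimal sketch of the preallocate-and-replace approach. It is written in Python only because LabVIEW diagrams cannot be pasted as text; the function name `fill_preallocated`, the `width` parameter, and the one-value-per-line layout are assumptions for illustration, not the code from the thread.

```python
def fill_preallocated(values, width=8):
    """Write each integer into a fixed-width slot of one preallocated buffer.

    Hypothetical layout: one right-aligned number per line.
    """
    line_len = width + 1  # field plus trailing newline
    buf = bytearray(b" " * (line_len * len(values)))  # allocated once, up front
    for i, v in enumerate(values):
        field = f"{v:>{width}d}".encode("ascii")
        if len(field) != width:
            # A value wider than the slot would change the buffer size.
            raise ValueError(f"{v} does not fit in {width} characters")
        start = i * line_len
        buf[start:start + width] = field  # same-length overwrite, no resize
        buf[start + width] = 0x0A         # newline byte
    return buf.decode("ascii")
```

The buffer never grows, which is the point of the technique, but every field has to fit the slot chosen in advance.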

04-08-2024 08:39 AM

wiebe@CARYA wrote:

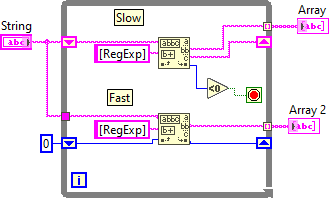

The format into string might also be slow.

Format Into String probably parses the format string on each iteration. Although the format string can't change in this example, I don't think the compiler swaps in a specialized function just because the format string is a constant.

There's a chance, though, that concatenating a string from constants and more primitive functions is slower than parsing the format string.

This could be benchmarked, but for me, replacing Format Into String with more primitive functions has usually made things faster...

Of course, Format Into String is easier to read...
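The benchmark mentioned above could look roughly like this. It is a Python analogy, not a LabVIEW measurement, so the timings say nothing about Format Into String itself; the format `"%d;%.3f\n"` and the function names are made up for illustration, since the real format string is only visible in the screenshot.

```python
import timeit


def with_format(i, x):
    # One call that has to interpret the format string each time.
    return "%d;%.3f\n" % (i, x)


def with_concat(i, x):
    # Same result assembled from constants and simpler conversions.
    return str(i) + ";" + format(x, ".3f") + "\n"


def benchmark(n=100_000):
    """Return (seconds for format, seconds for concatenation) over n calls."""
    t_fmt = timeit.timeit(lambda: with_format(42, 3.14159), number=n)
    t_cat = timeit.timeit(lambda: with_concat(42, 3.14159), number=n)
    return t_fmt, t_cat


if __name__ == "__main__":
    t_fmt, t_cat = benchmark()
    print(f"format: {t_fmt:.4f} s, concat: {t_cat:.4f} s")
```

Checking first that both variants return the same string, and only then comparing times, keeps the benchmark honest.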

04-08-2024 08:52 AM

@IlluminatedG wrote:

@Yamaeda wrote:

Making a preallocated string and replacing content might be a good (and old school) alternative.

Wait wait wait I'm one of the younger ones here! *shrivels up*

Still the de facto way anytime you do embedded C 😉

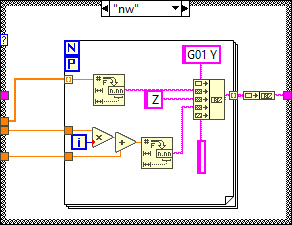

Sadly, pre-allocating the entire string requires a shift register, and you'll lose parallel execution of the for loop.

If you can pre-allocate all lines, you might gain a little, but that seems a corner case to me.

With the pre-allocation, you lose flexibility. The numbers aren't fixed size, as a negative number has an extra '-'. That doesn't have to be a problem, but the result won't be 100% the same.
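A small sketch of why the result differs, again in Python as a stand-in for the diagram. `free_width` and `fixed_width` are hypothetical helpers, and the one-number-per-line layout is an assumption.

```python
def free_width(values):
    # Each number takes exactly as many characters as it needs,
    # so a negative value produces a longer line.
    return "".join(f"{v}\n" for v in values)


def fixed_width(values, width=6):
    # Every number is padded to the same width so that it fits
    # a slot that was allocated in advance.
    return "".join(f"{v:>{width}d}\n" for v in values)


if __name__ == "__main__":
    data = [5, -5]
    print(repr(free_width(data)))
    print(repr(fixed_width(data, width=3)))
```

The padded version has predictable line lengths, which is what pre-allocation needs, but its text is not byte-for-byte the same as the unpadded output.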

04-08-2024 10:13 AM

wiebe@CARYA wrote:

Sadly, pre-allocating the entire string requires a shift register, and you'll lose parallel execution of the for loop.

If you can pre-allocate all lines, you might gain a little, but that seems a corner case to me.

With the pre-allocation, you lose flexibility. The numbers aren't fixed size, as a negative number has an extra '-'. That doesn't have to be a problem, but the result won't be 100% the same.

True, I haven't used it, I was just spouting ideas. In this case (always with strings?) it seemed to benefit a good amount from parallelization.

In a similar problem, I remember having a VI that searched for some string and sent the rest of the text back through a shift register until everything was parsed, and it was _slow_. Simply passing the index of the match through the shift register instead, and using it as the offset for later searches, made it 10x faster: no memory management and no string copies.
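The difference between the two variants, sketched in Python since the original VI isn't shown. Both function names are hypothetical; the first mimics passing the remaining string around, the second passes only an offset.

```python
def find_all_by_slicing(text, token):
    """Slow variant: carry the unparsed remainder from one pass to the next."""
    if not token:
        raise ValueError("token must not be empty")
    positions = []
    consumed = 0  # characters already cut off the front
    rest = text
    while True:
        idx = rest.find(token)
        if idx < 0:
            return positions
        positions.append(consumed + idx)
        consumed += idx + len(token)
        rest = rest[idx + len(token):]  # copies the whole remainder every time


def find_all_by_offset(text, token):
    """Fast variant: carry only the offset and search the original string."""
    if not token:
        raise ValueError("token must not be empty")
    positions = []
    offset = 0
    while True:
        idx = text.find(token, offset)  # no copy, search starts at offset
        if idx < 0:
            return positions
        positions.append(idx)
        offset = idx + len(token)
```

Both return the same match positions; the offset version just avoids allocating and copying a new string on every iteration.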

04-12-2024 10:37 AM

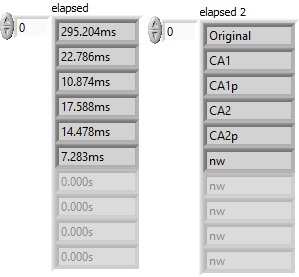

As a side note: Starting with LV 2021, the Concatenating tunnel mode works with strings.

04-12-2024 11:49 AM

@paul_cardinale wrote:

As a side note: Starting with LV 2021, the Concatenating tunnel mode works with strings.

WHY O WHY don't they tell us stuff like that??

04-12-2024 12:26 PM

wiebe@CARYA wrote:

@paul_cardinale wrote:

As a side note: Starting with LV 2021, the Concatenating tunnel mode works with strings.

WHY O WHY don't they tell us stuff like that??

I assumed that, because the down-converted code was broken and a concatenating string tunnel would have fixed it. 😄

(Apparently, they did not bother implementing a down-conversion substitution, as they did for the IPE, for example.)

04-12-2024 01:45 PM

@altenbach wrote:

wiebe@CARYA wrote:

@paul_cardinale wrote:

As a side note: Starting with LV 2021, the Concatenating tunnel mode works with strings.

WHY O WHY don't they tell us stuff like that??

I assumed that, because the down-converted code was broken and a concatenating string tunnel would have fixed it. 😄

(Apparently, they did not bother implementing a down-conversion substitution, as they did for the IPE, for example.)

I suppose concatenating the string in the tunnel is faster? I'd guess building an array and concatenating that takes an extra step.

04-12-2024 03:05 PM

wiebe@CARYA wrote:

@altenbach wrote:

wiebe@CARYA wrote:

@paul_cardinale wrote:

As a side note: Starting with LV 2021, the Concatenating tunnel mode works with strings.

WHY O WHY don't they tell us stuff like that??

I assumed that, because the down-converted code was broken and a concatenating string tunnel would have fixed it. 😄

(Apparently, they did not bother implementing a down-conversion substitution, as they did for the IPE, for example.)

I suppose concatenating the string in the tunnel is faster? I'd guess building an array and concatenating that takes an extra step.

My (wild) guess is that the compiler will probably create nearly identical machine code after all optimization. 😄