Let’s make Machine Learning easy with scikit-learn on LabVIEW

01-21-2022 05:16 AM

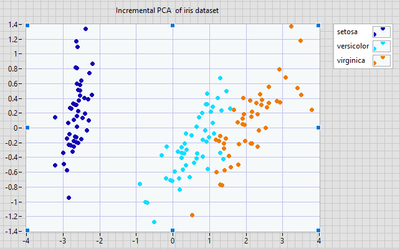

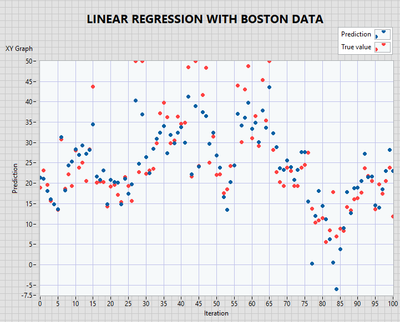

The TDF team (Technologies de France) is proud to offer the scikit-learn library adapted for LabVIEW as a free, open-source download.

LabVIEW developers can now use our library for free as a simple and efficient tool for predictive data analysis, accessible to everybody and reusable in various contexts.

Like the well-known scikit-learn Python library it is adapted from, it features various classification, regression and clustering algorithms, including support vector machines, random forests, gradient boosting, k-means and DBSCAN, and is designed to interoperate with the Python numerical and scientific libraries NumPy and SciPy.
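For readers who have not used the Python library this toolkit is adapted from, here is a minimal sketch, in Python scikit-learn, of a few of the algorithms named above. It shows the Python API only. The VI names and wiring of the LabVIEW adaptation are not described in this post, so nothing here should be read as that toolkit's interface. The toy data and all parameter values are assumptions chosen for illustration.

```python
import numpy as np
from sklearn.cluster import DBSCAN, KMeans
from sklearn.ensemble import RandomForestClassifier
from sklearn.svm import SVC

# Toy data (made up for illustration): two well-separated 2-D groups of points.
rng = np.random.default_rng(0)
group_a = rng.normal(loc=[0.0, 0.0], scale=0.3, size=(50, 2))
group_b = rng.normal(loc=[5.0, 5.0], scale=0.3, size=(50, 2))
X = np.vstack([group_a, group_b])
y = np.array([0] * 50 + [1] * 50)

# Classification: each estimator exposes the same fit / predict interface.
svm_pred = SVC(kernel="rbf").fit(X, y).predict(X)
forest_pred = (
    RandomForestClassifier(n_estimators=20, random_state=0).fit(X, y).predict(X)
)

# Clustering: groups are found from X alone, without the labels y.
kmeans_labels = KMeans(n_clusters=2, n_init=10, random_state=0).fit_predict(X)
dbscan_labels = DBSCAN(eps=1.0, min_samples=5).fit_predict(X)

print("SVM training accuracy:", (svm_pred == y).mean())
print("Random forest training accuracy:", (forest_pred == y).mean())
print("k-means cluster ids:", sorted(set(kmeans_labels.tolist())))
print("DBSCAN cluster ids (-1 would mean noise):", sorted(set(dbscan_labels.tolist())))
```

The point of the sketch is the uniform fit / predict pattern: swapping one algorithm for another mostly means changing the estimator, which is what makes the library reusable across contexts.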

Coming soon: our team is working on the « HAIBAL Project », a deep learning library written in native LabVIEW and fully compatible with CUDA and NI FPGA.

But why deprive ourselves of the power of ALL FPGA boards? There is no reason to, which is why we are working on our own compiler to make HAIBAL fully compatible with all Xilinx and Intel Altera FPGA boards.

HAIBAL will offer more than 100 different layers, 22 initializers, 15 activation types, 7 optimizers and 17 losses.

As we like the AI products from Facebook and Google, we will of course make HAIBAL natively and fully compatible with PyTorch and Keras.

The sources are available now, for free, on our GitHub: https://www.technologies-france.com/?page_id=487

Youssef Menjour

Graiphic

LabVIEW architect passionate about robotics and deep learning.

Follow us on LinkedIn

linkedin.com/company/graiphic

Follow us on YouTube

youtube.com/@graiphic

02-08-2022 03:28 AM

I hope you will upgrade the library to LabVIEW 2021, and move from *.vip files to *.vipd files.

02-08-2022 03:42 AM - edited 02-08-2022 03:44 AM

We will make a complete update in May.

As it's a fully open-source project, I rely on interns from engineering schools to work on it.

You have to be a little patient.

Currently my resources are working on the HAIBAL project, a complete deep learning library in native LabVIEW that is totally compatible with Keras and TensorFlow.

The Ngene library is too expensive, too light, and not modular enough.

Here is our status:

- Over 100 different types of layers

- 22 initializers

- 15 types of activations

- 17 native losses

- 7 optimizers

You can also build your own architecture, as in Keras or PyTorch.

Youssef Menjour
