LabVIEW: receiving data from the serial port

04-03-2019 09:41 AM

Hi everyone!

I have a small test related to receiving data from a computer USB port.

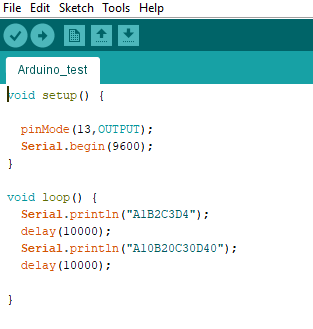

I use an Arduino and programmatically transmit a sequence of strings to the computer via the serial port.

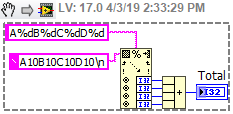

The strings are "A1B2C3D4" and "A10B20C30D40". After transmitting the first string, I wait 10 s and then transmit the next one; the process can be seen in the picture below.

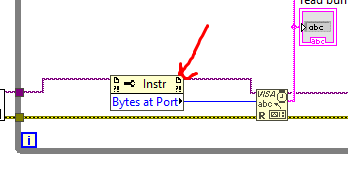

I created a VI to read these strings, split out the numbers, and calculate their sum.

The problem is that after receiving the first string, "A1B2C3D4", the VI calculates the sum (10) and then keeps recalculating that same sum until the 10 s have elapsed and the next string ("A10B20C30D40") arrives. How can I calculate the sum only once after a string is received, then wait until a new string arrives (here after 10 seconds), calculate its sum once, and wait again?

In this example I can adjust the interval at which the Arduino sends strings to the serial port, but in my real application the strings can arrive at any interval. So is there a way to process each string exactly once and then wait for the next one?

04-03-2019 11:49 AM

The VISA Read function is set to wait for either 10 seconds or 16 bytes (characters in this case). What I'm guessing is happening is that it is waiting for both strings before processing anything and flushing the second string. Why don't you try to use the "Number of Bytes at Port" property node to define the number of bytes you want to read and it will only read one full message at a time.

Incidentally, the timeout on your VISA Read is being left at the default 10 seconds. The time delay between messages from your Arduino is set to the same amount of time. Maybe make the Read timeout slightly longer than the delay between messages (maybe 1 second longer?) so you don't miss any messages.

04-03-2019 01:33 PM - edited 04-03-2019 01:34 PM

@User002 wrote:

Why don't you try to use the "Number of Bytes at Port" property node to define the number of bytes you want to read and it will only read one full message at a time.

NO NO NO.

Bytes at Port is the wrong thing to use 99% of the time, and certainly the wrong thing to use here!

To the original poster, why are you flushing the receive buffer in your code? If there is data in there from the second string, you've wiped it away.

What is good about your code:

1. Your Arduino uses Println which means it is putting a line feed character at the end of every write. That is a good termination character.

2. Your LabVIEW code has the Serial Configure. Though you don't have those terminals wired, the defaults of Termination Character Enabled and a termination character of Line Feed match what your Arduino code is doing.

3. You are trying to read 16 bytes, which is longer than each of the 2 strings you are sending, so the VISA Read will finish once it receives the termination character. However, I'd consider making that number larger than 16, perhaps even 1000, in case you change your code and wind up sending strings longer than 16 characters.

What is bad about your code:

1. You have a continuous while loop because of the False constant wired to the stop terminal. The only way to stop your VI is to hit the abort button. That means the VISA Close will never execute leaving your serial port open.

2. You have a VISA Flush Buffer. You should never need that in the course of good operation. If you use it, it should perhaps be just one time before the while loop starts.

3. Your delay in the Arduino code is 10 seconds. The default VISA timeout is 10 seconds. Pretty much the same. That means your VISA Read is going to time out right about the same time your Arduino is getting ready to send the next message. Try wiring up a larger timeout value to the VISA configure subVI.

4. There is a lot going on to parse your message, though it seems like it probably works. If you look at regular expressions, there is a way to separate numbers from characters in a fraction of the block diagram space. If you know the format is always A#B#C#D#, you can do it in one node with a Scan From String. It would look like this.
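For readers following along in a text language, the same idea (pull the numbers out of an A#B#C#D# message and add them) can be sketched in Python. This is only an illustration of the regular-expression approach, not the LabVIEW diagram; the function name `sum_fields` is made up for this example.

```python
import re


def sum_fields(message: str) -> int:
    """Sum every run of digits in a message such as 'A1B2C3D4'."""
    numbers = re.findall(r"\d+", message)   # e.g. ['1', '2', '3', '4']
    return sum(int(n) for n in numbers)


print(sum_fields("A1B2C3D4"))      # 10
print(sum_fields("A10B20C30D40"))  # 100
```

Because the pattern only looks for digit runs, a trailing carriage return or line feed left on the string does not affect the result.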

04-03-2019 01:46 PM - edited 04-03-2019 01:47 PM

I don't have LabVIEW on this computer, but since des938 was kind enough to post a picture of part of your VI, I have some suggestions.

Delete the Bytes at Port. You don't need it, since you are using the println command in your sketch:

println()

Description

Prints data to the serial port as human-readable ASCII text followed by a carriage return character (ASCII 13, or '\r') and a newline character (ASCII 10, or '\n'). This command takes the same forms as Serial.print().

So just set your Configure Serial Port to use a termination character (defaults to true) and set the character to newline. Also set the timeout value to greater than your expected loop rate. This is set up outside your loop. Then, inside your while loop, set the VISA Read to more bytes than you ever expect. It will stop reading when one of three conditions is fulfilled: it times out, it reads the number of bytes you specified, or it sees a newline character. Do your processing in the loop and then the loop will start again. Don't forget to close the VISA session outside the loop, and make sure that you don't have the Arduino's serial monitor open at the same time you are running your VI.

PS of course Ravens beat me to it.
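The loop described above can be sketched in Python as a rough analogue. The assumptions here are mine: an in-memory `io.BytesIO` stands in for the serial port, its `readline()` plays the role of a read that ends at the newline, and `process_lines` is a made-up name. The point is that the processing runs once per complete line, and an empty read triggers no processing at all.

```python
import io
import re


def process_lines(port):
    """Yield (text, sum) once per complete line read from `port`."""
    while True:
        raw = port.readline()        # returns at the newline, like a terminated read
        if not raw:
            # Nothing arrived. This sketch stops; a real loop would simply
            # try again without repeating the previous calculation.
            break
        text = raw.decode("ascii").strip()   # drop the '\r\n' added by println
        if text:
            total = sum(int(n) for n in re.findall(r"\d+", text))
            yield text, total


# Stand-in for the two messages the Arduino sends.
fake_port = io.BytesIO(b"A1B2C3D4\r\nA10B20C30D40\r\n")
results = list(process_lines(fake_port))
print(results)
```

Each message appears in `results` exactly once, however long the gap between messages is, because the calculation sits behind the read rather than running on stale data.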

04-03-2019 02:01 PM

@RavensFan wrote:

NO NO NO.

Bytes at Port is the wrong thing to use 99% of the time, and certainly the wrong thing to use here!

Just for my knowledge in the future, so I don't give people the wrong answer. I get why you are suggesting using the termination character (I did not notice the PrintLN() command, good catch), but is there something inherently wrong with using the Bytes at Port property for variable size messages?

04-03-2019 02:32 PM

Because everybody who ever tries to use it gets it wrong and has problems, probably because it is shown in the NI Serial example, which is actually a poor example of serial communication. Incomplete messages, 0 bytes at port, can't parse data properly.

I find 99% of the time (not a scientifically determined number) that you don't need Bytes at Port. A well-defined protocol with ASCII characters and a termination character doesn't need it. A well-defined binary protocol that gives some header bytes and a message length doesn't need it. Anything that is command/response with a defined message structure doesn't need it.

Bytes at Port is only useful for some sort of terminal program where you just want to throw up on the screen and append to any existing data coming from a device continually streaming data.

Even in this situation, it could have a use. If you have a small while loop that continually reads Bytes at Port to determine when a message starts (Bytes at Port > 0), then you can stop the while loop and proceed to a VISA Read where you read a specific number of bytes (not the value coming from Bytes at Port). It can be useful here, where there is a long gap between messages. You can wait for a byte to arrive, then when it does, read the 16 or 1000 bytes, relying on the termination character to end the message for you. The advantage is that the timeout doesn't start immediately. If you have a 10 second timeout, but the slow communication frequency means the message doesn't start until 9.5 seconds later, you would risk the read timing out if the actual message took longer than half a second to arrive. If you wait to read until you get the first byte, then the rest of the message should come quickly after that, and you can receive all the bytes without a timeout error.
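A rough Python sketch of that "wait for the first byte, then do a terminated read" pattern is below. Everything in it is hypothetical: `FakePort`, `bytes_waiting`, and `read_until` are invented stand-ins for this example and are not the API of VISA or of any real serial library.

```python
import time


class FakePort:
    """Hypothetical stand-in for a serial port; data 'arrives' at ready_at."""

    def __init__(self, data: bytes, ready_at: float):
        self._data = data
        self._ready_at = ready_at    # time.monotonic() value when data shows up

    def bytes_waiting(self) -> int:
        return len(self._data) if time.monotonic() >= self._ready_at else 0

    def read_until(self, terminator: bytes, max_bytes: int) -> bytes:
        end = self._data.find(terminator)
        end = len(self._data) if end < 0 else end + len(terminator)
        end = min(end, max_bytes)
        chunk, self._data = self._data[:end], self._data[end:]
        return chunk


def wait_then_read(port, poll_s: float = 0.01, max_bytes: int = 1000) -> bytes:
    # Poll only to detect that a message has started...
    while port.bytes_waiting() == 0:
        time.sleep(poll_s)
    # ...then ask for a fixed maximum and let the terminator end the read.
    # The polled byte count is deliberately NOT used as the read size.
    return port.read_until(b"\n", max_bytes)


port = FakePort(b"A1B2C3D4\r\n", ready_at=time.monotonic() + 0.05)
message = wait_then_read(port)
print(message)
```

The byte count is used purely as a "has anything started arriving?" flag, which is the one role the post above grants it.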

04-03-2019 03:03 PM

@User002 wrote:

@RavensFan wrote:...but is there something inherently wrong with using the Bytes at Port property for variable size messages?

As RavensFan mentioned it is often misused and is pretty much considered bad mojo by most of the contributors in this forum.

Where I have used it is when I am dealing with a protocol that transfers raw binary (e.g. CR may be part of the data stream) AND the messaging is not fixed size. In those cases I am often looking for a series of sync bytes, STX, EOT, etc.

Ben

04-04-2019 01:20 AM

Thanks, everyone. Maybe people have misunderstood my question. My problem is with reading the signal from the USB port connected to the Arduino. I only used the 10 s delay to show that the program, after reading the data, runs the calculation (here, the addition) multiple times even though only one string was sent. That is, after reading the string and calculating once, it keeps using the same data to calculate a few more times until it reads new data from the Arduino and calculates another result.

I would like someone to show me how to detect that a string has arrived, trigger the calculation, and display the result. Between the first calculation and the arrival of new data (about 10 seconds), I want the processing to stop until a new string arrives; then the program reads it, processes it, and waits again until new data is available.

In summary:

- How can LabVIEW know that a string has arrived from the serial port, for example through some kind of notification that a message is available?

- How can the calculation (in this small example, the addition and the display) run only when a string arrives? It should run once when notified that a new string has arrived, then stop and wait for the next notification, and keep repeating like that.

- Note that in this example I use the Arduino to send the strings to LabVIEW, so I can configure how many seconds pass between two strings. In my real problem this interval is not known, so the processing must be synchronized with the arrival of each new string.

My idea is that a notification that a string has arrived triggers the calculation; after the calculation has completed (only once), the program waits for the next notification. If anyone has another idea, please tell me. Thanks, everyone.

04-04-2019 07:46 AM

When you need something to keep happening while you are waiting for something else, you need parallel while loops.

Have one while loop handle the serial communication. Have another while loop do all the other stuff that you want to have happen even when you haven't received serial data. Then use a messaging scheme between the two loops so the serial loop can update the "always do stuff" loop when new data arrives. A notifier or a queue is a good way to pass data. The serial loop becomes the producer, the other loop the consumer, and you have a "producer/consumer" architecture. But you will likely want a small or zero timeout on the Dequeue Element function (if using queues) or Wait on Notification (if using a notifier) in the consumer loop so that the loop can continue if nothing new has arrived.
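As a text-language analogue of that architecture, here is a minimal Python sketch using `queue.Queue` and two threads. It is not LabVIEW code. The names `serial_loop` and `consumer_loop`, the `None` end marker, and the timing values are all assumptions made for illustration, and the "serial" side is simulated with a list of messages.

```python
import queue
import threading
import time


def serial_loop(messages, out_q):
    """Producer: stands in for the serial loop; enqueues each message once."""
    for msg in messages:
        time.sleep(0.05)          # pretend to block on the port until a line arrives
        out_q.put(msg)
    out_q.put(None)               # made-up end marker so the sketch can finish


def consumer_loop(in_q, poll_s=0.01):
    """Consumer: handles each message once, keeps running while none arrive."""
    handled, idle_ticks = [], 0
    while True:
        try:
            msg = in_q.get(timeout=poll_s)   # small timeout, like Dequeue Element
        except queue.Empty:
            idle_ticks += 1                  # the "always do stuff" work goes here
            continue
        if msg is None:
            return handled, idle_ticks
        handled.append(msg)                  # runs exactly once per message


q = queue.Queue()
producer = threading.Thread(
    target=serial_loop, args=(["A1B2C3D4", "A10B20C30D40"], q)
)
producer.start()
handled, idle_ticks = consumer_loop(q)
producer.join()
print(handled, idle_ticks)
```

The dequeue timeout is what lets the consumer keep doing other work between messages, while each message is still handled only once because it is removed from the queue when read.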

04-04-2019 08:47 AM

Thank you, but I don't know how to do it. Do you have any examples?