LabVIEW microcontroller communication

Solved! 09-14-2020 05:50 AM - edited 09-14-2020 06:00 AM

I have a system in which a CPU microcontroller controls a second microcontroller. The second microcontroller returns a digital value that represents either a voltage or a current. The LabVIEW program uses VISA Write to send an instruction code to the CPU microcontroller, asking it to fetch the digital value from the second microcontroller. There is then a 150 ms delay, after which VISA Read gets the value from the CPU microcontroller.

Why is this 150 ms delay needed? When I reduce it below 150 ms, the output I get is 0. My supervisor says the CPU microcontroller is fast enough that it needs 5-10 ms at most, so why does the value go to 0 below 150 ms?

My task is also to find out how long it takes to get stable readings from the second microcontroller. Currently the system takes 5 readings by repeating the sequence above. I was told to take many readings, first at 5 ms, then at 10 ms, and so on. But with any delay below 150 ms the output from the CPU microcontroller is always 0, and with any delay above 150 ms it is always the maximum stable value. Any idea what the problem is, and what I need to know to fix it?

09-14-2020 05:56 AM

Hi govindsankar,

@govindsankar wrote:

Any idea what the problem is, and what I need to know to fix it?

The problem is most probably buried in your software. It could be in LabVIEW, in the first microcontroller, or in the second microcontroller.

You need to debug all of them to find the core problem!

09-14-2020 07:02 AM

@govindsankar wrote:

Why is this 150 ms delay needed? When I reduce it below 150 ms, the output I get is 0.

This line makes me think you are using Bytes At Port, which you should never use to tell the VISA Read how many bytes to read. But we need a lot more information in order to help you out here. I guess you could start here: VIWeek 2020/Proper way to communicate over serial

1) What is the message format between the PC (LabVIEW code) and the CPU? I'm not referring to "RS-232" as that just defines the hardware. I am talking about how the data sent over the bus is interpreted. I have seen a very wide range of options here, so we need you to be specific.

2) You really need to share your code for us to be able to help debug it.

There are only two ways to tell somebody thanks: Kudos and Marked Solutions

Unofficial Forum Rules and Guidelines

"Not that we are sufficient in ourselves to claim anything as coming from us, but our sufficiency is from God" - 2 Corinthians 3:5

09-14-2020 07:27 AM

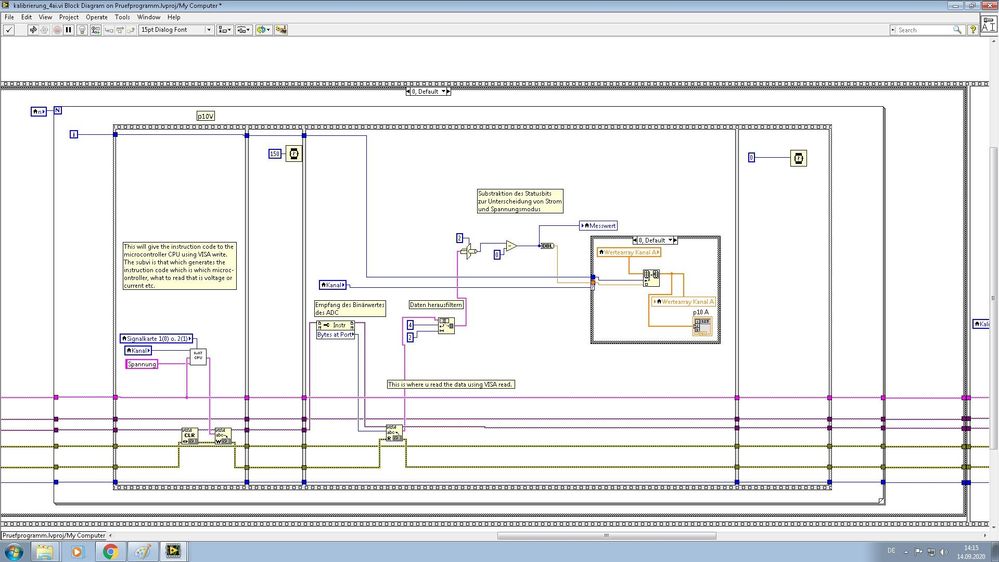

Yes, I am using Bytes At Port to set the number of bytes to read. The message sent from LabVIEW to the CPU microcontroller is 0701 0003 0000. 07 is the command code for getting the current digital value. 01 is the slot the microcontroller is in: the testing machine has two slots, 01 for the first and 02 for the second. 00 selects which channel of the microcontroller is being tested. 03 is the data bytes. 0000 tells the CPU microcontroller that we want the digital equivalent of the voltage. I have shared a screenshot of only part of the code because the whole code is too big.
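For readers following along in text form, the request described above can be sketched in Python. This is only an illustration of the field layout from the post: it assumes the message goes over the wire as 6 raw bytes (not as ASCII hex text), and the function name, parameter names, and the big-endian byte order of the last 16-bit field are assumptions, not something the thread confirms.

```python
import struct


def build_request(command: int, slot: int, channel: int,
                  data_field: int, value: int) -> bytes:
    """Pack the request described in the post into 6 raw bytes.

    Layout (names are hypothetical): four single-byte fields followed by
    one 16-bit field. Big-endian order for the last field is an assumption;
    it makes no difference for the value 0x0000 used here.
    """
    return struct.pack(">BBBBH", command, slot, channel, data_field, value)


# 07 = get current digital value, 01 = slot 1, 00 = channel,
# 03 = data-bytes field, 0000 = request the voltage equivalent
request = build_request(0x07, 0x01, 0x00, 0x03, 0x0000)
print(request.hex(" ", 2))  # 0701 0003 0000
```

Writing the fields out this way makes it easy to see that the request is exactly 6 bytes long.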

09-14-2020 07:52 AM

@govindsankar wrote:

Yes, I am using Bytes At Port to set the number of bytes to read. The message sent from LabVIEW to the CPU microcontroller is 0701 0003 0000. 07 is the command code for getting the current digital value. 01 is the slot the microcontroller is in: the testing machine has two slots, 01 for the first and 02 for the second. 00 selects which channel of the microcontroller is being tested. 03 is the data bytes. 0000 tells the CPU microcontroller that we want the digital equivalent of the voltage. I have shared a screenshot of only part of the code because the whole code is too big.

Am I to assume the data coming back is in the same format? Frankly, this protocol is kind of junk. A proper protocol needs a way to verify that you got the full message and that you are properly aligned with the message boundaries. This is typically done with a start byte at the start of the message (0x02 is common, as it is defined as the Start of Text character in ASCII) and a checksum or CRC at the end of the message. If you get a bad checksum, you throw away that data and start looking for the start byte again.
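As a rough sketch of the framing idea described here, the following Python wraps a payload in a start byte and a trailing checksum, then scans a byte stream for valid frames. The 0x02 start byte comes from the post; the one-byte sum-modulo-256 checksum, the fixed payload length, and all function names are assumptions chosen for illustration (a real protocol might use a CRC instead).

```python
from typing import List

STX = 0x02  # start byte (ASCII Start of Text), as suggested in the post


def checksum(payload: bytes) -> int:
    # Assumed checksum: sum of the payload bytes, modulo 256.
    return sum(payload) & 0xFF


def frame(payload: bytes) -> bytes:
    # STX + payload + checksum
    return bytes([STX]) + payload + bytes([checksum(payload)])


def extract_frames(buffer: bytes, payload_len: int) -> List[bytes]:
    """Return the payloads of all valid frames found in buffer."""
    frames = []
    frame_len = payload_len + 2  # start byte + payload + checksum
    i = 0
    while i + frame_len <= len(buffer):
        if buffer[i] != STX:
            i += 1  # not aligned yet: keep looking for the start byte
            continue
        payload = buffer[i + 1:i + 1 + payload_len]
        if buffer[i + 1 + payload_len] == checksum(payload):
            frames.append(payload)
            i += frame_len
        else:
            i += 1  # bad checksum: discard and search for the next start byte
    return frames


first = bytes.fromhex("070100030000")
second = bytes.fromhex("0701000301f4")  # made-up reply value
corrupted = bytearray(frame(second))
corrupted[5] ^= 0x01  # flip one bit inside the payload
stream = b"\xff\x10" + frame(first) + bytes(corrupted) + frame(second)
good = extract_frames(stream, payload_len=6)
```

Here `good` contains only the two intact payloads: the leading noise and the corrupted frame are skipped, and the receiver realigns on the next start byte.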

But if we assume the CPU only sends data back when data is requested, and always in this 6-byte format, we can get away with what you currently have. First, get rid of the Clear Buffer, as it should not be needed. Then get rid of the wait and the Bytes At Port. Instead, just wire a 6 to the VISA Read, since your message is 6 bytes long. And, finally, make sure you have the Termination Character turned OFF where you configure the serial port. If it is turned on, the VISA Read will not read the full message if a termination character (default is 0xA, line feed) just happens to be in your data.
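The difference between the two read strategies can be illustrated with a small simulation. This is not VISA code: `SimulatedPort` is a hypothetical stand-in with a simulated clock, and the 120 ms reply delay and the reply bytes are made-up numbers for illustration, since the real device timing is not known from the thread.

```python
class SimulatedPort:
    """Hypothetical stand-in for a serial port with a simulated clock.

    The whole reply becomes available reply_delay_ms after the request.
    """

    def __init__(self, reply: bytes, reply_delay_ms: int):
        self.reply = reply
        self.reply_delay_ms = reply_delay_ms
        self.now_ms = 0
        self.read_pos = 0

    def wait(self, ms: int) -> None:
        self.now_ms += ms  # advance simulated time, no real sleeping

    def bytes_at_port(self) -> int:
        arrived = len(self.reply) if self.now_ms >= self.reply_delay_ms else 0
        return arrived - self.read_pos

    def read(self, count: int, timeout_ms: int = 1000) -> bytes:
        # Block until `count` bytes are available or the timeout expires.
        waited = 0
        while self.bytes_at_port() < count and waited < timeout_ms:
            self.wait(1)
            waited += 1
        n = min(count, self.bytes_at_port())
        data = self.reply[self.read_pos:self.read_pos + n]
        self.read_pos += n
        return data


def read_with_bytes_at_port(port: SimulatedPort, delay_ms: int) -> bytes:
    # Original approach: fixed wait, then read whatever has arrived so far.
    port.wait(delay_ms)
    return port.read(port.bytes_at_port())


def read_fixed_length(port: SimulatedPort, length: int = 6) -> bytes:
    # Suggested approach: ask for the known message length and let the
    # read block until the bytes arrive (or the timeout expires).
    return port.read(length)


reply = bytes.fromhex("0701000301f4")  # made-up 6-byte reply
too_short = read_with_bytes_at_port(SimulatedPort(reply, 120), delay_ms=50)
long_enough = read_with_bytes_at_port(SimulatedPort(reply, 120), delay_ms=150)
fixed_port = SimulatedPort(reply, 120)
fixed = read_fixed_length(fixed_port)
```

In this model, `too_short` is empty because nothing has arrived after 50 ms, which matches the symptom of reading 0 with a short delay. `long_enough` and `fixed` both hold the full reply, but the fixed-length read returns as soon as the data is there (after 120 simulated ms) without needing a hand-tuned delay.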

09-15-2020 03:53 AM

Thank you very much. It worked and I got the output.