LabVIEW 2009 slow performance

05-20-2010 11:36 AM

My machine has .NET Framework 1.0, 2.0, 3.0, and 3.5 SP1 installed. The .NET frameworks are designed to be backwards-compatible, so if you link against something that was introduced with .NET 2.0, that same API is available in later versions.

I believe the specific test we did linked against the .NET 2.0 version of System.Data.SqlClient.

I'm really not very knowledgeable about .NET. We've had good results using it for things that LabVIEW doesn't support (such as ICMP "ping" and writing to the Windows Event Log), but those are not performance-critical. We looked into SqlClient only because we were having memory leaks with ADO under Vista and later. We found that it did work, but the performance was poor. The ADO memory leaks turned out to be Microsoft's fault, so after applying the suggested workaround we went back to ADO.

05-20-2010 12:23 PM

Rob,

Is the above problem related to ADO or ADO.NET? There are a few data-access options out there, and then there's LINQ, which I have not used. I use ADO.NET quite a bit on the server and client side under Visual Studio 2005. I'd be in big trouble without it.

Jared,

What are you talking about? I cannot find this menu anywhere.

This problem with time being all messed up went away after unplugging the network cable. This is only helpful in looking at the state of the project, since network variables sort of need a network to operate... If I reboot the machine after unplugging the network cable, the time problem goes away. Something is going on with the NIC driver and network "shared" variables. It's particularly awkward given that .NET DLLs are slow or broken, since that would be another path to sharing data that I cannot use.

Bob

05-20-2010 12:51 PM

The memory leak applied to ADO (only) in Vista and Windows Server 2008.

ADO and ADO.NET aren't related in any way other than name.

05-20-2010 12:56 PM

Thanks, Rob!

That's what I suspected. I've used ADO.NET for most database access here in the lab for the last three years. I don't think I want to give it up for current code unless I have to since I understand it much better than LINQ right now.

Bob

05-20-2010 03:53 PM - edited 05-20-2010 03:58 PM

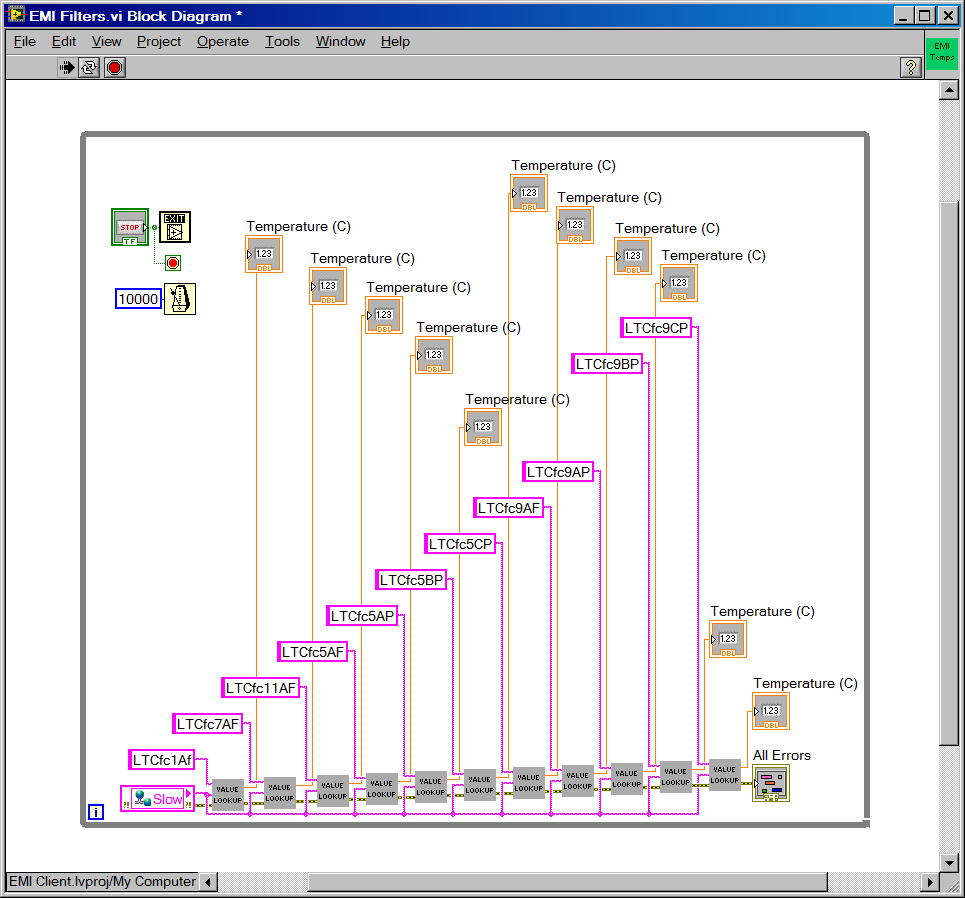

The revised vi:

Note that the clock error I saw on the Windows XP machine is also happening on the Vista PC. It only happens when the Ethernet cable is plugged in. The Vista machine is really a bog-standard, small-form-factor Intel chipset machine. Hardware manager identifies the chipset as G31/G33/P35 Express (likely the latter since the machine is so small). It has 2 GB RAM and an E4600 CPU. This needs to be addressed by NI. I need these machines to work, because I can't imagine having to revisit this purchase from a year ago because it is incompatible with LabVIEW. I'll get laughed out of my boss's office.

05-20-2010 04:32 PM

Bob,

You should open a support ticket with NI (see http://www.ni.com/support/) rather than wait for someone to pick up your issue on the forums.

-Rob

05-20-2010 04:38 PM

Rob,

I already have. This is under evaluation. This is the second incident of its kind for two versions of the software. I reported the issue last summer for LabVIEW 2009. The machine that is now running Vista was an XP machine when this was first discovered. It messed that machine up pretty good. Now I'm in the same place I was then. There is a roll-out of Windows 7 coming and I wanted to test with something close to that. Display rendering for Vista and Win 7 is similar. I have lots to fix if we ever upgrade these systems. There's nothing like doing the same work over and over again.

Bob

06-01-2010 03:10 PM

I have followed up with NI tech support on this issue, and have sent them a VI to test. They have confirmed that the Slow Save issue does indeed exist with LV2009, and that it is a function of the size of the VI. It is caused by a change in the compiler, which produces more efficient code but compiles more slowly. It seems that with LV2009 one needs to break larger VIs (perhaps > 500 kB on disk) into more SubVIs.

However, by accident, I discovered a workaround. While experimenting recently with my VI, eliminating blocks of code to see how this affects the save time, I discovered that if the VI has a broken arrow, a save takes only 3-5 sec, not 30 sec! It seems that as long as I maintain a broken arrow (e.g. by dropping an unused Arithmetic function somewhere on my BD), I can go ahead and code all day, saving every few minutes, and only lose 3-5 sec on each save. This seems to be independent of how drastic the code edit is. In between, I can temporarily delete the unused function to see if I get an unbroken arrow (but NOT issue a SAVE!), and then issue an Undo command to restore the unused function. Finally, when done, I can delete the unused function and do a final save and compile. NI thinks this is a good workaround.

FYI, here is the full response on this issue from NI:

"There are different kinds of unsaved changes (front panel item moved, BD item moved, VI needs to be recompiled). When the recompile flag is set LabVIEW has to recompile the source and save it within the VI. If a block diagram item simply moves, LabVIEW only has to save the BD and not recompile the code which goes much shorter. You can test this out by saving after simply moving a wire. You will see that this save will be dramatically faster. While this doesn't help alleviate the problem it should help understand where the longer time is coming from, the compile process.

I tried to see if this was caused by a bug in a particular part of code or the simple fact that our compile process takes longer. Because of the proportional delay based on how much I disabled I think it is safe to say that this is simply a product of compilation being slower. We have added many compiler settings that are aimed to improve the runtime performance.

Because we are looking for more optimizations it is logical to assume that the compile time will be longer. Because of the way LabVIEW works we need to compile the code when we save. The decision was made that a sacrifice of save speed (compilation) was okay if it meant faster runtime performance. I have presented all of this to R&D and they have reluctantly confirmed everything. I can understand why you are affected by this and I have filed a Corrective Action Request (Bug Report) on your behalf which is #225473."
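
For anyone trying to picture the behavior NI describes, here is a rough Python sketch of the save logic as I understand it. This is only an illustrative model, not LabVIEW's actual implementation: the names `Dirty`, `ViModel`, `move_diagram_item`, and `edit_logic` are made up for the example, and the idea that a broken VI skips compilation on save is an assumption inferred from the broken-arrow workaround above.

```python
from dataclasses import dataclass
from enum import Flag, auto
from typing import List


class Dirty(Flag):
    """Hypothetical kinds of unsaved change, following NI's description."""
    NONE = 0
    FRONT_PANEL = auto()    # front panel item moved
    BLOCK_DIAGRAM = auto()  # block diagram item moved
    RECOMPILE = auto()      # VI needs to be recompiled


@dataclass
class ViModel:
    """Toy model of a VI; not how LabVIEW stores anything internally."""
    dirty: Dirty = Dirty.NONE
    broken: bool = False  # True while the run arrow is broken

    def move_diagram_item(self) -> None:
        # Cosmetic change: only the diagram has to be written again.
        self.dirty |= Dirty.BLOCK_DIAGRAM

    def edit_logic(self) -> None:
        # Functional change: diagram changes and the recompile flag is set.
        self.dirty |= Dirty.BLOCK_DIAGRAM | Dirty.RECOMPILE

    def save(self) -> List[str]:
        """Return the steps this save performs, in order."""
        steps: List[str] = []
        pending = Dirty.RECOMPILE in self.dirty
        # Assumption: a broken VI cannot be compiled, so the slow step is
        # skipped and the recompile flag simply stays set for a later save.
        if pending and not self.broken:
            steps.append("compile")
            pending = False
        steps.append("write")
        self.dirty = Dirty.RECOMPILE if pending else Dirty.NONE
        return steps


if __name__ == "__main__":
    vi = ViModel()
    vi.move_diagram_item()
    print(vi.save())   # cosmetic edit: fast save
    vi.edit_logic()
    print(vi.save())   # functional edit: slow save
    vi.broken = True
    vi.edit_logic()
    print(vi.save())   # broken arrow: compile deferred
```

In this model the expensive "compile" step only appears when the recompile flag is set and the VI is runnable, which would match both the fast save after moving a wire and the fast saves while the arrow is broken.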

06-01-2010 03:51 PM

If compilation delay is the issue then why do binary applications take so long to start up? Why does the environment compile code first rather than wait for attempted execution of new code after editing to compile code opened from a project file (like most IDEs out there)?

I'm sure this is interesting, but it does not affect the issues I've raised at all. If the compiler is that much less efficient, then why was it released like this?

Bob Taylor

08-29-2010 12:56 PM

Unfortunately, the problem still seems to be present in LV2010.

With somewhat larger VIs, compilation on an up-to-date PC can easily increase to 30 sec per save.

If it really is only because of the compiler optimization settings, maybe an optional switch for the optimization level would be a solution. For many applications the optimization is not that important; however, waiting 30 sec for every save of a VI really slows coding down (at least if one saves often to prevent data loss...).