
LV2019 Maps vs. Variant Attributes: Performance?

06-04-2019 11:07 AM

I would like to know whether I should port my existing code from variant attributes to the new LabVIEW 2019 maps. My code is quite performance-sensitive.

How do the maps perform relative to the variant attributes?

Is it the same algorithm implementation in the background?

I am especially interested in the case where the values are big clusters (of dynamic size) and the keys are either strings or integers.

06-04-2019 11:23 AM

I know that Christian Altenbach is pretty familiar with Maps and could probably answer your question. However, you'd do the entire Community a great service if you made a copy of your code, replaced the Variant Attributes with Maps, and then ran some timing tests. There's nothing like "real data" in a "real-world implementation". My guess is that you will find two things:

- Programming your application is considerably easier using Maps.

- Speed will be comparable between the two implementations.

As far as speed goes, I'd be surprised if Maps were significantly slower. I would not be surprised to find them faster, but I don't have direct experience. However, the first point is also important: it doesn't matter how fast the code runs if it doesn't do what you think you told it to do, so code transparency and ease of use may be worth a loss of a few percent in speed...

Bob Schor

06-04-2019 12:06 PM - edited 06-04-2019 01:12 PM

They are quite comparable, so don't expect a huge performance improvement. I will post my slides and example code once they are cleaned up and in the public LabVIEW 2019 version. Hopefully ready by the end of this week.

Sets and Maps use standard libraries, and NI did a great job on the G implementation. The main advantage is that basically any datatype is allowed and there are no reserved values. (For variant attributes, the name (= key) must be a string, and an empty string is not allowed.) On the other hand, a set is basically a map with only a key and no value. Use a set if you only use the variant attribute name and ignore the value.
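Since a LabVIEW diagram can't be shown as text, here is a rough Python analogy of the "attribute names as a set" idiom versus a real set. `VariantAttributeStore` and the helper functions are hypothetical names; the store only mimics the restriction described above (non-empty string names), not LabVIEW's actual implementation. Python sets, like LabVIEW sets, accept non-string keys directly (in Python, any hashable type).

```python
class VariantAttributeStore:
    """Hypothetical stand-in for variant attributes: a string-keyed dict that
    rejects non-string and empty-string names, mirroring the restriction in
    the post. (A plain Python dict would accept "" as a key.)"""

    def __init__(self):
        self._attrs = {}

    def set_attribute(self, name, value=None):
        if not isinstance(name, str) or name == "":
            raise ValueError("attribute name must be a non-empty string")
        self._attrs[name] = value

    def has_attribute(self, name):
        return name in self._attrs


def unique_ids_via_attributes(ids):
    """Old idiom: use attribute names as a set and ignore the values.
    Integer keys must first be converted to strings."""
    store = VariantAttributeStore()
    for i in ids:
        store.set_attribute(str(i))  # the stored value is never used
    return store


def unique_ids_via_set(ids):
    """Set idiom: keys keep their own type, no dummy value, no conversion."""
    return set(ids)
```

The set version drops both the string conversion and the unused value, which is the "map with only a key" point made above.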

Of course the code is so much cleaner with sets and maps!

If the current code is working well, there is no real reason to upgrade, but upgrading is typically very easy because the functions are nearly pin-compatible. How big are your collections?

I would do a quick branch of your current code and do some benchmarking to compare the two versions.

06-06-2019 12:47 PM

OK, I installed LabVIEW 2019 and redid all my benchmarking from the talk on a better machine (more cores, bigger CPU cache, etc.), and the map code is now consistently faster than the variant solution on the lotto example, by a few percent (2 to 3%). Still not worth bothering. I'll do a few more tests.

(For the talk, I had an old dual core laptop as only testbed).

06-06-2019 01:39 PM - edited 09-20-2020 01:06 PM

@altenbach wrote:

And the map code is now consistently faster by a few % ...

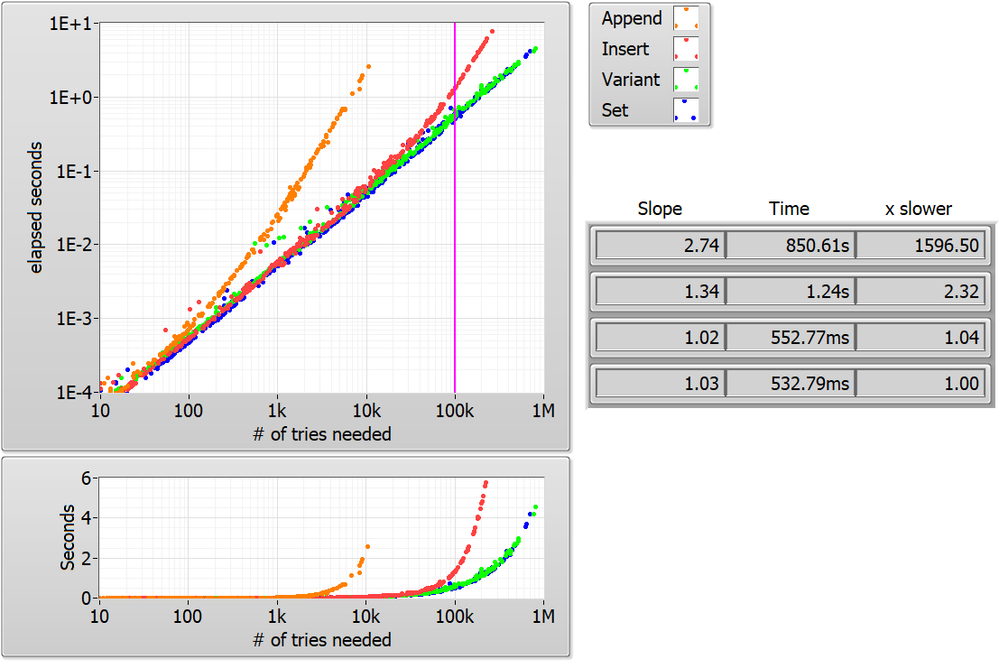

Here is a typical benchmark result. Note that the Set results (blue) form the lower boundary at all x-values, but variant attributes are about the same (within noise).

The code generates random lotto numbers and returns the number of tries and the time needed until it generates a lotto number that was already generated during the same run. The collection of numbers to be compared grows linearly, and the code returns surprisingly fast. The table shows the time and slope at 100k, i.e., where the cursor is. The slope for each curve is calculated from a quadratic polynomial fit (least absolute residual, to ignore outliers) to the log-log data.
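A minimal Python sketch of the duplicate-search loop described above, with a Python `set` standing in for the LabVIEW set. The ticket format (k distinct numbers out of 1..n, 6 of 49 by default) and the function name `tries_until_repeat` are assumptions for illustration; the post does not say which lotto format the benchmark uses.

```python
import random
import time


def tries_until_repeat(k=6, n=49, rng=None):
    """Draw hypothetical k-of-n lotto tickets until one repeats an earlier draw.

    Returns (tries, elapsed_seconds). The set of already-seen tickets makes
    each duplicate check a hash lookup, so the cost per try stays roughly
    constant while the collection grows by one entry per try.
    """
    if rng is None:
        rng = random.Random()
    seen = set()
    tries = 0
    start = time.perf_counter()
    while True:
        # Sorting makes the ticket order-independent, so it can serve as a key.
        ticket = tuple(sorted(rng.sample(range(1, n + 1), k)))
        tries += 1
        if ticket in seen:
            return tries, time.perf_counter() - start
        seen.add(ticket)
```

Swapping the set for a dict (map) keyed by the ticket, or for a string-keyed store, gives the other variants to compare on the same random draws.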

Note that if we divide the elapsed time by the number of tries, sets and variants are basically flat (slope ≈ 0), i.e., there is almost no penalty with collection size. 😉
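The slope figures can be sketched as follows: fit a quadratic to (log10 x, log10 y) and take its derivative at the cursor position. This sketch swaps in an ordinary least-squares fit for the least-absolute-residual fit used in the post, so unlike the original it does not suppress outliers. The function names are hypothetical, and at least three distinct x-values are needed.

```python
import math


def _solve3(a, b):
    """Solve a 3x3 linear system a @ x = b by Gaussian elimination with pivoting."""
    m = [row[:] + [bi] for row, bi in zip(a, b)]
    n = 3
    for col in range(n):
        pivot = max(range(col, n), key=lambda r: abs(m[r][col]))
        m[col], m[pivot] = m[pivot], m[col]
        for r in range(col + 1, n):
            f = m[r][col] / m[col][col]
            for c in range(col, n + 1):
                m[r][c] -= f * m[col][c]
    x = [0.0] * n
    for r in range(n - 1, -1, -1):
        s = m[r][n] - sum(m[r][c] * x[c] for c in range(r + 1, n))
        x[r] = s / m[r][r]
    return x


def loglog_slope_at(xs, ys, x0):
    """Fit log10(y) = c0 + c1*u + c2*u**2 with u = log10(x) by least squares,
    then return the local slope d log10(y) / d log10(x) at x0."""
    us = [math.log10(x) for x in xs]
    vs = [math.log10(y) for y in ys]
    # Normal equations: sums of u**k (k = 0..4) and u**k * v (k = 0..2).
    su = [sum(u ** k for u in us) for k in range(5)]
    suv = [sum((u ** k) * v for u, v in zip(us, vs)) for k in range(3)]
    a = [[su[i + j] for j in range(3)] for i in range(3)]
    _c0, c1, c2 = _solve3(a, suv)
    return c1 + 2 * c2 * math.log10(x0)
```

A slope near 1 for total time means time grows linearly with size; a slope near 0 for time divided by tries corresponds to the "basically flat" per-try cost mentioned above.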

06-08-2019 03:30 PM - edited 06-09-2019 01:06 PM

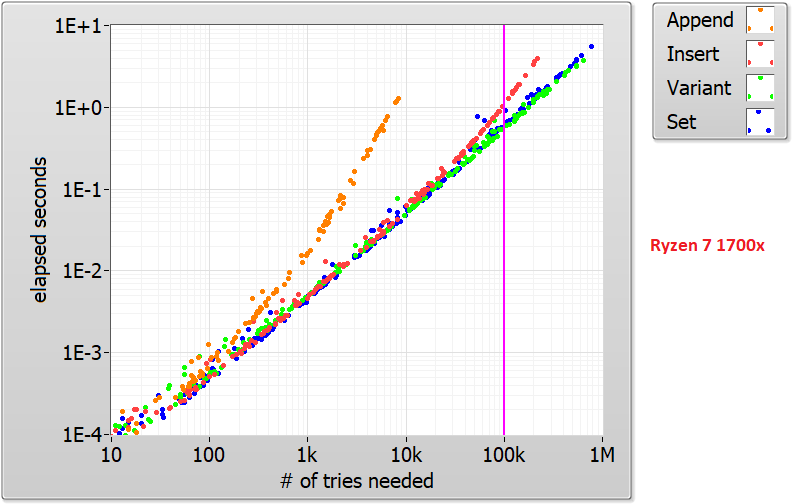

Variant attribute and Set performance does vary with CPU and probably depends in a complicated way on, e.g., cache sizes and other things. For all practical purposes, they are the same.

In contrast to the above Dual Xeon E5-2787W, the 8 core AMD Ryzen 1700x slightly prefers variant attributes at very large sizes.

12-15-2019 06:30 PM - edited 12-15-2019 07:25 PM

One penalty of the Variant approach is the need to flatten and unflatten the data.
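As a loose Python analogy of that penalty, the sketch below stores a cluster-like value either natively or serialized with `pickle`, so every read of the serialized form pays a deserialization cost. `pickle` only stands in for LabVIEW's data-to-variant conversion; it is not what LabVIEW does internally, and the helper names are hypothetical.

```python
import pickle
import timeit


def put_flattened(table, key, value):
    # "Flatten": serialize the value to bytes before storing it.
    table[key] = pickle.dumps(value)


def get_flattened(table, key):
    # "Unflatten": deserialize the bytes back into an object on every read.
    return pickle.loads(table[key])


def compare_read_cost(value, repeats=10_000):
    """Time repeated reads of one entry stored natively vs. stored flattened."""
    native = {"k": value}
    flat = {}
    put_flattened(flat, "k", value)
    t_native = timeit.timeit(lambda: native["k"], number=repeats)
    t_flat = timeit.timeit(lambda: get_flattened(flat, "k"), number=repeats)
    return t_native, t_flat
```

With large values of dynamic size, like the big clusters in the original question, the per-read conversion cost grows with the value size, while the native lookup does not copy the value at all.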

Is it possible to get your code posted somewhere so I can try it out?

BTW, for those interested in the presentation Christian did on Maps and Sets, here's the video:

https://www.youtube.com/watch?v=5L9tqv3a5TU&feature=youtu.be

Thanks.

12-15-2019 07:03 PM - edited 12-15-2019 07:31 PM

@MichaelAivaliotis wrote:

However, is it possible to get your code posted somewhere so I can try it out?

Sorry, I was trying to polish it up a little for distribution and got side-tracked. I'll try to dig it out and post it.

All code variations operate on the same raw data, so everything is included. But yes, to determine duplicates, the data is irrelevant in this particular test.

12-15-2019 07:36 PM - edited 12-16-2019 01:44 AM

OK, here's my latest version of the above benchmark code. I changed it to dynamically stop testing any algorithm once its accumulated time exceeds 10 s (a reasonable default, but changeable on the diagram).

See how far you get. It probably needs a little bit more documentation and tweaks.
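The dynamic stop rule described in this post can be sketched in Python as follows: each algorithm keeps running at increasing sizes until its accumulated time exceeds a budget, after which it is skipped. The function name `run_with_budget` and its parameters are hypothetical; the actual benchmark is a LabVIEW VI.

```python
import time


def run_with_budget(algorithms, sizes, budget_s=10.0):
    """Time each algorithm at each size, skipping any algorithm whose
    accumulated run time already exceeds budget_s seconds.

    algorithms: dict mapping a name to a callable taking the problem size.
    Returns a dict mapping each name to a list of (size, seconds) results.
    """
    spent = {name: 0.0 for name in algorithms}
    results = {name: [] for name in algorithms}
    for size in sizes:
        for name, fn in algorithms.items():
            if spent[name] > budget_s:
                continue  # over budget: stop testing this algorithm
            t0 = time.perf_counter()
            fn(size)
            dt = time.perf_counter() - t0
            spent[name] += dt
            results[name].append((size, dt))
    return results
```

Because the budget is per algorithm, slow variants drop out early while fast ones continue to larger sizes, so the curves simply end at different x-values.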