Improving AI Sample Rate and Code Performance

11-21-2017 12:58 PM (edited 11-21-2017 01:15 PM)

Hey all,

I'm running into an interesting issue that has had me stumped for a few days. I have a very busy GUI where I'm reading in 17 analog voltages (via an NI 9205) and plotting them in real time to a handful of waveform charts (after a conversion factor is applied), as well as controlling various actuators and heaters, writing to an SPI device, etc. Let's just focus on the 17 analog voltages.

15 of these can be read at pretty low sample rates (even 2 Hz is probably sufficient); however, the remaining two signals need to be read and plotted much faster. Each of these two signals is linked to a high-performance solenoid valve, and to guarantee that I catch the 7 ms spike to 12 V in the waveform, these signals need to be read and plotted at 200 Hz at least.
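The 200 Hz figure follows from a simple sampling argument: if the sample period 1/f_s is longer than the pulse width w, a single pulse is caught with probability w·f_s; once 1/f_s ≤ w, at least one sample always lands inside it. A minimal Python sketch, assuming the pulse arrives at a random phase relative to the sample clock and is flat enough that any sample inside it reads near 12 V:

```python
def capture_probability(pulse_width_s: float, sample_rate_hz: float) -> float:
    """Chance that at least one sample lands inside a single pulse.

    Assumes the pulse starts at a random phase relative to the sample clock.
    If the sample period is no longer than the pulse, capture is certain.
    """
    period_s = 1.0 / sample_rate_hz
    return min(1.0, pulse_width_s / period_s)


def min_guaranteed_rate(pulse_width_s: float) -> float:
    """Smallest sample rate whose period does not exceed the pulse width."""
    return 1.0 / pulse_width_s


if __name__ == "__main__":
    pulse_s = 0.007  # 7 ms solenoid pulse, from the post
    for rate in (2, 10, 50, 200):
        print(f"{rate:>4} Hz: {capture_probability(pulse_s, rate):.0%} chance per pulse")
    print(f"guaranteed capture at >= {min_guaranteed_rate(pulse_s):.1f} Hz")
```

For a 7 ms pulse this gives 7% at 10 Hz and a floor of about 143 Hz for guaranteed capture, so 200 Hz (a 5 ms period) leaves some margin.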

As shown in the attached screen grab, I've dedicated a case structure to the 15 low-speed signals, which lets them be downsampled while the two high-speed signals run at the designated rate (in the screenshot, ALL signals are running at 10 Hz just to make it easier to see what's going on). When I run the code with the high-speed signals at 200 Hz and the low-speed signals at 2 Hz, a clear lag starts to emerge between the absolute-time labels on the x-axis of the waveform plots and the real-time clock on my computer. This lag accrues at about 7 seconds per minute.
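One plausible reading of the 7 s/min figure, assuming the x-axis time is derived from the samples themselves (t0 + n·dt, as in a LabVIEW waveform) and that the loop simply cannot process samples as fast as the hardware produces them: the plotted time then advances only 53 s for every 60 s of wall-clock time. The sketch below turns that observation into an implied average loop iteration time; the default of one sample per loop iteration is an assumption.

```python
def achieved_fraction(lag_s: float, elapsed_s: float) -> float:
    """Fraction of wall-clock time by which the plotted time axis advanced."""
    return (elapsed_s - lag_s) / elapsed_s


def iteration_budget(sample_rate_hz: float, samples_per_read: int = 1) -> float:
    """Time one loop iteration may take if it must keep pace with the hardware."""
    return samples_per_read / sample_rate_hz


def implied_iteration_time(sample_rate_hz: float, lag_s: float, elapsed_s: float,
                           samples_per_read: int = 1) -> float:
    """Average iteration time implied by an observed lag.

    Assumes the x-axis shows t0 + n*dt for the n samples processed so far, so
    the plot drifts behind the wall clock whenever fewer than sample_rate_hz
    samples are processed per second.
    """
    processed_per_s = sample_rate_hz * achieved_fraction(lag_s, elapsed_s)
    iterations_per_s = processed_per_s / samples_per_read
    return 1.0 / iterations_per_s


if __name__ == "__main__":
    # Numbers from the post: 200 Hz high-speed channels, ~7 s of lag per minute.
    budget = iteration_budget(200.0)
    actual = implied_iteration_time(200.0, lag_s=7.0, elapsed_s=60.0)
    print(f"budget {budget * 1e3:.2f} ms, implied {actual * 1e3:.2f} ms per iteration")
```

Under those assumptions the loop averages roughly 5.7 ms per iteration against a 5 ms budget, so a shortfall of well under a millisecond per iteration is enough to produce the observed drift.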

I made a separate test VI that does nothing but read and plot the two high-speed signals, and it has no issues whatsoever plotting at up to 2500 Hz without lag. On the other hand, in the primary code I can run everything (all 17 signals) at 10 Hz, also without lag. But as soon as I ramp the two high-speed signals up to even 50 Hz, I think LabVIEW has to compensate by slowing down time (i.e., the source of the lag I'm seeing).

At this point, I think this is purely a code performance issue, due to how busy the GUI is in general and how much computational power it takes to plot waveforms at high rates. Any suggestions on how I can bring the two high-speed signals up to the required 200 Hz without losing the ability to plot in real time? I realize that LabVIEW isn't known for being a "fast" program, but there must be some way around this.

Perhaps I could do something by manually controlling buffer sizes? That said, I'm not very familiar with editing buffers.

It's worth noting that our technology (including this VI) is proprietary, so unfortunately I cannot post the .vi or the front panel, but I will post snippets of the code. Thanks for the help!

Tags: lag, NI9205, samplerate

11-21-2017 01:40 PM

Hmm. We routinely sample 16 channels at 1 kHz on a PXI system that is probably less capable than your cRIO setup, and stream the data to disk. I'm a little concerned about the nature of the "high-speed" signal, which you describe as a 12 V spike that is 7 ms wide. That makes me think of a trigger pulse, and of the desire to "generate an interrupt" (or "throw a LabVIEW Event") when it happens.

If you are getting bogged down by what seem to be fairly modest data rates, and are reluctant to show code (and please, no pictures: just isolate the code in a sub-VI and attach that; we really need to examine and maybe even test the code, and squinting at static pictures only wastes time), I wonder if you are stuffing everything into a single loop, so that the code runs at the rate of the slowest sequence of functions, ignoring LabVIEW's ability to run multiple operations in parallel. Please tell me that the plotting/graphing routines are not in the same loop as the acquisition (DAQmx) code, and please tell me that you are using "real" DAQmx, not the Dreaded DAQ Assistant ...

Bob Schor

11-21-2017 02:21 PM

Hey Bob_Schor, thanks for the reply:

1. I am using cDAQ for our setup, if that makes a difference (I don't know much about cRIO). From what I understand, comparing cDAQ to PXI is like comparing a tricycle to a Formula 1 car (in terms of data acquisition capability), because PXI is designed to handle hundreds or even thousands of high-speed signals at once.

2. To clarify, I am using a simple digital write to control the pulse sent to trigger the solenoid. To ensure that the valve is actually switching, I'd like to see that distinct 12 V spike appear on the waveform. The issue is that the pulse is only 7 ms long. For example, at a 10 Hz sampling rate, I only have a 7% chance of actually seeing that pulse.

3. Fair point. Here is a pared-down version of my VI that includes only the primary AI/DO operations. Let me know if this is sufficient. Sorry, I didn't mean to break an unwritten law of the LabVIEW forums by posting a photo.

4. Yes, I am using DAQmx, not the DAQ Assistant.

5. Yes, I am reading the signals and writing at the same time. However, it seems to me that there is no way around this? Since I want to write to my waveform plots in real time, wouldn't those need to happen in continuous, back-to-back fashion?

Thanks!

11-21-2017 08:44 PM

@PFour wrote:

Hey Bob_Schor, thanks for the reply:

5. Yes, I am reading the signals and writing at the same time. However, it seems to me that there is no way around this? Since I want to write to my waveform plots in real time, wouldn't those need to happen in continuous, back-to-back fashion?

One way around this is the Producer/Consumer design pattern. You set up two loops: the Producer, which only acquires data, so it runs at a rate fixed only by the timing of the acquisition process (generally governed by hardware timers, and hence very accurate), and a Consumer loop that gets the data passed to it by the Producer and "does something with it". One usually passes data from Producer to Consumer on a Queue (or a Channel Wire alternative), which trades "time" for "space" and provides buffering, so that as long as the Consumer "consumes", on average, faster than the Producer "produces", the Producer never slows down, and all the data is acquired and processed without introducing delays.

Once you have all the data, you might find that you need to "consume" it selectively. For example, if you are acquiring points at 1 kHz, you might have trouble displaying 1000 points/second continuously, but if you averaged 10 points at a time, you'd cut the number of points to display by a factor of 10, leaving only 100 points/second to display. And if you displayed every other average, the Consumer loop would only have to display 50 points/second, which should be a piece of cake.
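The same pattern in a text language, as a minimal Python sketch: `queue.Queue` plays the role of LabVIEW's Obtain Queue/Enqueue Element/Dequeue Element, and the "acquisition" is a hypothetical stand-in that generates numbered samples instead of calling DAQmx. In a real system the producer would be paced by the hardware sample clock; here it runs as fast as it can, which makes the point that it never waits on the slower consumer.

```python
import queue
import threading
import time

SENTINEL = None  # tells the consumer that the producer has finished


def producer(q: queue.Queue, n_chunks: int, chunk_size: int) -> None:
    """Acquisition loop stand-in: only acquires and enqueues, never displays."""
    for i in range(n_chunks):
        # Hypothetical acquisition; in LabVIEW this would be a DAQmx Read.
        chunk = [float(i * chunk_size + k) for k in range(chunk_size)]
        q.put(chunk)  # unbounded queue, so this never blocks on the consumer
    q.put(SENTINEL)


def consumer(q: queue.Queue, results: list) -> None:
    """Display/processing loop stand-in: dequeues and 'does something'."""
    while True:
        chunk = q.get()  # waits until the producer has sent data
        if chunk is SENTINEL:
            break
        time.sleep(0.001)  # pretend plotting is comparatively slow
        results.extend(chunk)


def run(n_chunks: int = 20, chunk_size: int = 10) -> list:
    q: queue.Queue = queue.Queue()
    results: list = []
    t_cons = threading.Thread(target=consumer, args=(q, results))
    t_prod = threading.Thread(target=producer, args=(q, n_chunks, chunk_size))
    t_cons.start()
    t_prod.start()
    t_prod.join()
    t_cons.join()
    return results


if __name__ == "__main__":
    data = run()
    print(len(data), "samples consumed, in order:",
          data == [float(x) for x in range(len(data))])
```

Because the queue is unbounded, a consumer that is slower on average would let the backlog (and memory use) grow without limit, which is why the Consumer has to keep up on average.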
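The 1000 → 100 → 50 points/s arithmetic can be sketched in Python as block averaging followed by keeping every other average; the function names are illustrative only, not LabVIEW functions.

```python
def block_average(samples, n):
    """Mean of each non-overlapping block of n samples; a short tail is dropped."""
    usable = len(samples) - len(samples) % n
    return [sum(samples[i:i + n]) / n for i in range(0, usable, n)]


def keep_every_other(values):
    """Simple decimation by 2: keep elements 0, 2, 4, ..."""
    return values[::2]


if __name__ == "__main__":
    one_second = [float(k) for k in range(1000)]  # 1 s of data at 1 kHz
    averaged = block_average(one_second, 10)      # 100 points/s
    displayed = keep_every_other(averaged)        # 50 points/s
    print(len(one_second), len(averaged), len(displayed))
```

One caveat for this thread's use case: averaging is also a crude low-pass filter, so a 7 ms spike can be smeared across block boundaries and reduced in height. If the goal is to see the spike, keeping the per-block maximum (or min/max pairs) instead of the mean preserves it on the display while still cutting the point count.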

Divide and Conquer. Take advantage of LabVIEW's ability to do parallel processing by having parallel loops doing things "simultaneously".

Bob Schor

11-21-2017 09:25 PM

Some thoughts:

- You have property nodes on the block diagram; they may force everything to run in the UI thread (slow), though I'm not completely sure.

- You are using a Formula Node/MathScript node. I'm not sure whether the formulas can be evaluated in parallel.

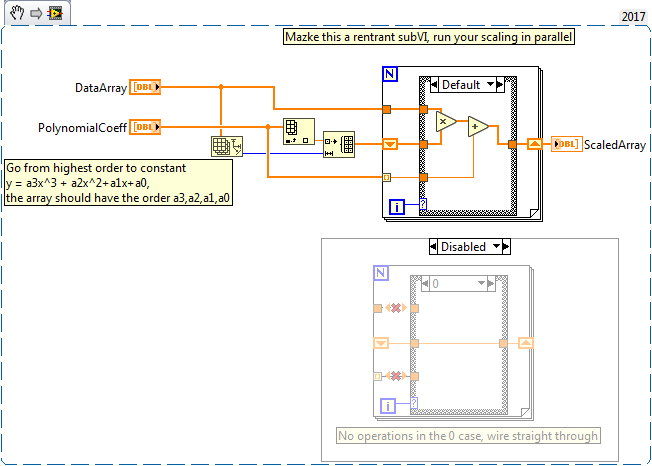

Break your scaling down into a reentrant subVI, as in the image above.
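In a text language, the closest equivalent is a pure scaling function (no shared state, like a reentrant subVI) called once per channel, so the calls are free to run concurrently. A minimal Python sketch, assuming a linear gain/offset calibration purely for illustration; the `Calibration` type and its values are hypothetical, since the VI's actual conversion factors are not shown.

```python
from concurrent.futures import ThreadPoolExecutor
from dataclasses import dataclass


@dataclass(frozen=True)
class Calibration:
    """Hypothetical linear calibration: engineering_value = gain * volts + offset."""
    gain: float
    offset: float


def scale(volts: list, cal: Calibration) -> list:
    # Pure function with no shared state: each call is independent, which is
    # the property that lets a reentrant subVI run one copy per channel.
    return [cal.gain * v + cal.offset for v in volts]


def scale_all(channels: list, cals: list) -> list:
    # One independent call per channel; pool.map returns results in input order.
    with ThreadPoolExecutor() as pool:
        return list(pool.map(scale, channels, cals))


if __name__ == "__main__":
    channels = [[0.0, 1.0], [2.0, 4.0]]
    cals = [Calibration(2.0, 0.5), Calibration(10.0, -1.0)]
    print(scale_all(channels, cals))
```

CPython's global interpreter lock means the thread pool here demonstrates independence rather than speed; LabVIEW, by contrast, can schedule separate reentrant subVI instances on separate cores.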

mcduff

11-21-2017 09:52 PM

One more thing: from your picture, it looks like you are reading 1 point at a time. Read 20 points at a time so that your loop runs at 10 Hz for a 200 Hz sampling rate, or 50 points at a time, etc.
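The arithmetic behind reading in chunks: the loop only has to iterate sample_rate / samples_per_read times per second, so any fixed per-iteration cost is paid far less often. A minimal Python cost model, where the overhead and per-sample figures are hypothetical placeholders rather than measured DAQmx or LabVIEW numbers:

```python
def loop_rate_hz(sample_rate_hz: float, samples_per_read: int) -> float:
    """Loop iterations per second needed to keep up with the hardware clock."""
    return sample_rate_hz / samples_per_read


def keeps_up(sample_rate_hz: float, samples_per_read: int,
             overhead_s: float, per_sample_s: float) -> bool:
    """True if one iteration finishes within its time budget.

    overhead_s models a fixed per-iteration cost (read call, chart update,
    property nodes); per_sample_s models cost that scales with data size.
    Both are hypothetical inputs, not measured figures.
    """
    budget_s = samples_per_read / sample_rate_hz
    cost_s = overhead_s + samples_per_read * per_sample_s
    return cost_s <= budget_s


if __name__ == "__main__":
    for n in (1, 20, 50):
        print(n, "samples/read:", loop_rate_hz(200.0, n), "Hz loop,",
              "keeps up" if keeps_up(200.0, n, 0.006, 1e-5) else "falls behind")
```

In LabVIEW the chunk size is the "number of samples per channel" input of an N-samples instance of DAQmx Read; the model simply shows why a fixed cost that sinks a 1-sample-per-iteration loop becomes negligible at 20 samples per iteration.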

mcduff

11-27-2017 11:36 AM

Bob_Schor,

It certainly makes sense why that would speed things up. I'll give it a shot using the Obtain Queue function. Also, regarding your second paragraph, I think I already have a functional downsampling system in place that avoids processing every single data point from the low-speed channels. Thanks

-PFour

11-27-2017 12:50 PM

Thanks for taking the time, mcduff,

I think I understand what you're suggesting. In the image you attached, you're breaking each calibration into a handful of parallel tasks. Is this because you think that will be more effective, since the Formula Node might not be performing its calculations in parallel?

However, I believe that the Formula Node does run operations in parallel. Please take a look at the quick-and-dirty VI I've attached. When I run it with Highlight Execution turned on, you can see that each output is produced at the same time, rather than alternating back and forth. I'm not sure whether this is reasonable proof that the node runs in parallel, though.

Cheers,

-PFour