How to convert cluster containing bytes into array of bits?

Solved! 08-10-2022 06:44 AM - edited 08-10-2022 07:31 AM

I have a cluster that contains the following fields:

Preamble (4 bytes) | SYNC Word 1 (2 bytes) | SYNC Word 2 (2 bytes) | Packet Counter (4 bytes) | Payload

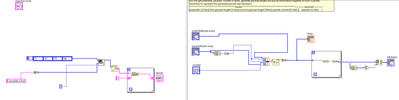

I would like to convert this cluster into a bit stream. I am attaching a screenshot of the VI block diagram.

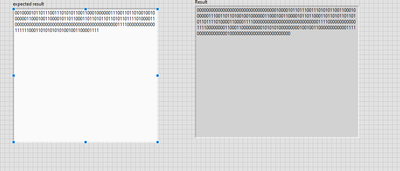

When I run the program, the result shown on the front panel is completely different from what I expected.

Could you let me know what I might be doing wrong here?

Thank you.

Edit 1: Sorry for being so vague. I tried to find the source of the problem and noticed that some unwanted information is added after the Flatten To String and String To Byte Array conversions. The problem lies in one of those two steps, but I don't know which one or why.

08-10-2022 07:39 AM - edited 08-10-2022 07:40 AM

1. Your Preamble is an array of 16-bit values. Is that a 2 element array or is it supposed to be 4 U8 values?

2. You are putting your payload before the packet counter.

3. One of the issues with the Flatten To String is that it will prepend the array size. There is a Boolean input to turn that off. However, that input is ignored for anything inside of a cluster.

4. Your conversion to a Boolean string can be greatly simplified by using Reverse 1D Array and Format Into String inside a loop.
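Points 2 and 3 can be illustrated outside LabVIEW. The following Python sketch is not LabVIEW code: it assumes big-endian byte order and a 4-byte array-size prefix (the behaviour described in point 3), and the helper names and field values are made up for illustration.

```python
import struct

def flatten_array_with_length(data: bytes) -> bytes:
    """Mimic a flatten step that prepends the array size as a big-endian 32-bit integer."""
    return struct.pack(">i", len(data)) + data

def build_packet(preamble: bytes, sync1: int, sync2: int, counter: int, payload: bytes) -> bytes:
    """Concatenate the fields in the intended order, with no size prefixes."""
    # Order: preamble | SYNC word 1 | SYNC word 2 | packet counter | payload
    return preamble + struct.pack(">HHI", sync1, sync2, counter) + payload

def bytes_to_bit_string(data: bytes) -> str:
    """Render each byte as eight ASCII '0'/'1' characters, most significant bit first."""
    return "".join(format(b, "08b") for b in data)

# Hypothetical example values, not taken from the original VI
preamble = bytes([0xAA, 0xAA, 0xAA, 0xAA])
packet = build_packet(preamble, 0x1234, 0x5678, 1, b"\x0F")

# The size prefix shows up as four extra bytes in front of the data.
print(flatten_array_with_length(preamble).hex())  # 00000004aaaaaaaa
print(packet.hex())
print(bytes_to_bit_string(b"\x0F\x80"))  # 0000111110000000
```

Building the byte array field by field avoids the extra size bytes, which is one plausible source of the "unwanted information" mentioned in the question.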

There are only two ways to tell somebody thanks: Kudos and Marked Solutions

Unofficial Forum Rules and Guidelines

"Not that we are sufficient in ourselves to claim anything as coming from us, but our sufficiency is from God" - 2 Corinthians 3:5

08-10-2022 09:53 AM

Hi @crossrulz,

Thank you very much for responding.

A few quick questions, though:

1. As you can see in the screenshot in the question, the sub VI I created tells me that the input is 16-bit, whereas in the main VI the array is specified as U8. How do I correct this? How do I make the sub VI read the input as an array of 4 U8 values instead of an array of 2 U16 values, as it does now?

2. Yeah..... my bad!!

3. Why did you use Reverse 1D Array there? Is it because the LSB and the MSB get interchanged somewhere? Where does that happen?

4. If I want to use clusters instead of Build Array, how would you suggest doing that?

Thanks again.

Warm Regards,

08-10-2022 10:20 AM

@labview_wannabe wrote:

A few quick questions, though:

1. As you can see in the screenshot in the question, the sub VI I created tells me that the input is 16-bit, whereas in the main VI the array is specified as U8. How do I correct this? How do I make the sub VI read the input as an array of 4 U8 values instead of an array of 2 U16 values, as it does now?

2. Yeah..... my bad!!

3. Why did you use Reverse 1D Array there? Is it because the LSB and the MSB get interchanged somewhere? Where does that happen?

4. If I want to use clusters instead of Build Array, how would you suggest doing that?

1. Just right-click on the control and change the representation to U8.

3. I reversed the array because of the way you were building the string in the FOR loop of your main VI.

4. Why do you have to use a cluster? You will have to have an array of bytes of some sort (an array of U8 and a string are literally the same format under the hood) in order to do the ASCII conversion.
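As a rough illustration of answers 1 and 4 in Python rather than LabVIEW: two 16-bit values and four 8-bit values can describe the same four bytes, and a byte array and a string carry the same data. Big-endian byte order and the example values are assumptions.

```python
import struct

# Two 16-bit values and four 8-bit values describe the same four bytes
# (big-endian byte order is assumed here).
as_u16 = struct.pack(">2H", 0xAAAA, 0xAAAA)
as_u8 = bytes([0xAA, 0xAA, 0xAA, 0xAA])
print(as_u16 == as_u8)  # True

# A byte array and a string hold the same data; only the interpretation differs.
raw = "AB".encode("ascii")
print(list(raw))  # [65, 66]
print(bytes([65, 66]).decode("ascii"))  # AB
```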

08-11-2022 03:28 AM

One last question:

How do I convert the string at the end into an array of bits? Is it string --> U8 --> Num_to_array --> Bool_to_(0,1)? Or is there a smarter way of doing it?

08-11-2022 05:04 AM

@labview_wannabe wrote:

How do I convert the string at the end into an array of bits? Is it string --> U8 --> Num_to_array --> Bool_to_(0,1)? Or is there a smarter way of doing it?

I skipped that part and just took the byte array straight to your ASCII Binary format. So I guess the real question is what format do you actually need the data to be in. Be extremely specific or we will likely be talking past each other.

08-11-2022 08:08 AM

@crossrulz wrote:

@labview_wannabe wrote:

How do I convert the string at the end into an array of bits? Is it string --> U8 --> Num_to_array --> Bool_to_(0,1)? Or is there a smarter way of doing it?

I skipped that part and just took the byte array straight to your ASCII Binary format. So I guess the real question is what format do you actually need the data to be in. Be extremely specific or we will likely be talking past each other.

Hi,

So I need something like a bitstream in I8 format. If I have a string of the form "10001", then the corresponding bitstream must be in the form 10001. Further simulations (modulation, etc.) are then run on these bitstreams.
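One way to read this requirement is "one signed 8-bit integer per character". A minimal Python sketch under that assumption (the function name is hypothetical, and this is not LabVIEW code):

```python
from array import array

def bit_string_to_i8(bits: str) -> array:
    """Convert a string of '0'/'1' characters to an array of signed 8-bit integers."""
    if any(c not in "01" for c in bits):
        raise ValueError("expected only '0' and '1' characters")
    # ord('0') is 48, so subtracting it maps '0' -> 0 and '1' -> 1.
    return array("b", (ord(c) - ord("0") for c in bits))

bits = bit_string_to_i8("10001")
print(list(bits))  # [1, 0, 0, 0, 1]
```

The same idea would carry over to a byte array: take the ASCII code of each character and subtract 48.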

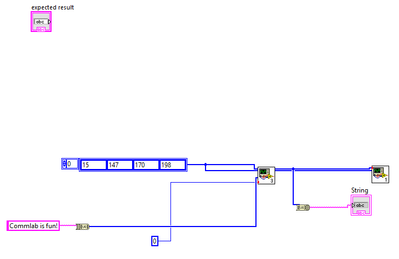

I tried to do it on my own. The main file is

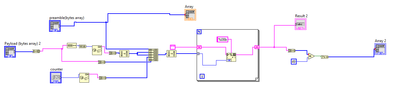

And the sub VI is

When I run the main VI, I get string output as

I have verified that the string in the sub VI is accurate, so some encoding error must be happening during the byte-to-string conversion.

Also, if I need to verify the bits at the receiver and parse the bitstream, how do I do it? I know that I need to do autocorrelation and then peak detection, but how do I parse the stream after that? That was the main reason I wanted to use a cluster: it made it easier to parse the bitstream into individual fields. With just a byte array, how do I do that?
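A rough Python sketch of the parsing idea, assuming the field widths from the original question (32-bit preamble, two 16-bit SYNC words, 32-bit packet counter) and that everything after the counter is payload. The sliding match count is a simple stand-in for the correlation and peak detection step, and all names and values are hypothetical.

```python
SYNC_BITS = 16
COUNTER_BITS = 32

def find_preamble(bits, preamble):
    """Return the start index where the window best matches the known preamble."""
    best_index, best_score = -1, -1
    for start in range(len(bits) - len(preamble) + 1):
        window = bits[start:start + len(preamble)]
        score = sum(1 for a, b in zip(window, preamble) if a == b)
        if score > best_score:  # strict '>' keeps the earliest best match
            best_index, best_score = start, score
    return best_index

def bits_to_int(bits):
    """Interpret a list of 0/1 values as an unsigned integer, MSB first."""
    value = 0
    for bit in bits:
        value = (value << 1) | bit
    return value

def int_to_bits(value, width):
    """Expand an unsigned integer into a list of 0/1 values, MSB first."""
    return [(value >> shift) & 1 for shift in range(width - 1, -1, -1)]

def parse_packet(bits, preamble):
    """Locate the preamble, then slice the remaining fields by fixed bit offsets."""
    pos = find_preamble(bits, preamble) + len(preamble)
    sync1 = bits_to_int(bits[pos:pos + SYNC_BITS]); pos += SYNC_BITS
    sync2 = bits_to_int(bits[pos:pos + SYNC_BITS]); pos += SYNC_BITS
    counter = bits_to_int(bits[pos:pos + COUNTER_BITS]); pos += COUNTER_BITS
    return {"sync1": sync1, "sync2": sync2, "counter": counter, "payload": bits[pos:]}

# Hypothetical stream: three stray bits, then one packet
preamble = int_to_bits(0xAAAAAAAA, 32)
stream = ([0, 0, 0] + preamble + int_to_bits(0x1234, 16)
          + int_to_bits(0x5678, 16) + int_to_bits(7, 32) + [1, 0, 1, 1])
fields = parse_packet(stream, preamble)
print(fields["counter"])  # 7
```

Because the fields have fixed widths, a flat bit or byte array can be split by offset once the start of the packet is known, so a cluster is not strictly required for parsing.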

08-11-2022 08:31 AM

08-11-2022 08:53 AM

@GerdW wrote:

Hi wannabe,

why don't you attach the VIs? We cannot edit/debug/run images of code in LabVIEW!

Why are there so many coercion dots?

Here are the VI files. I think the coercion dots are mainly due to type mismatches. My bad.

08-12-2022 06:43 AM

Hi Gerd,

The first file, lab1_commlabs_is_fun.vi, is the main VI. The other two are sub VIs. packet_formatter.vi combines all the byte arrays into a single array of bytes and then converts it into bits. I am trying to read this bitstream as a string in the main VI, which is where I am getting errors.

In packet_parser.vi I am trying to parse the bit array into individual fields: I want to detect the preamble and then extract the payload. Here too, I am not sure how to proceed. I did try to implement the detection myself but, as you can see, I made a mistake somewhere.

If you need any other information, please let me know. It is my first time using LabVIEW, so if you want me to raise this as a separate issue, please let me know.

Warm Regards,