How to Optimize Camera Speed (fps)

08-11-2019 09:19 PM

Here's a little Tutorial on using the Producer/Consumer Design Pattern to acquire a Selfie AVI using the WebCam built into your (well, my) Laptop. If I open MAX and look at the Devices and Interfaces, I can see an Integrated WebCam that MAX gave some generic name -- I changed it to "WebCam" so I'd know what to call it in my code.

A Producer/Consumer Design Pattern needs three things -- a Producer Loop that "creates" data, a Consumer Loop that "accepts" the data and does something with it (like display it or save it to disk), and a means of asynchronous communication to get the data from Producer to Consumer. The traditional way to do this is to use a Queue, but in LabVIEW 2016, NI released the Asynchronous Channel Wire, and I became a Channel Wire Enthusiast.

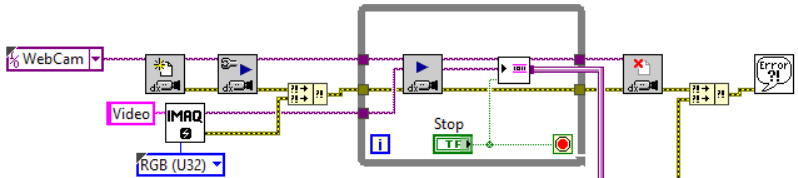

Here is the Producer. Note that this is not a Snippet -- the Snippet comes later.

The initial code sets up a Grab situation, using a fixed Image Buffer (here called "Video"). Note that since our WebCam saves color images, the Image is set to be RGB (U32). Inside the Producer Loop, we grab a frame (no timing is needed -- the Camera takes 30 frames per second) and hand it off to a Stream Channel Writer (see next paragraph). This continues until the Stop button is pushed, at which point the Camera is Closed and the program ends.

The rectangular VI that accepts the Image Out from the IMAQ Grab2 function is a Stream Channel Writer. Note the "pipe" output, and notice that it sits "on top of" the While Frame, indicating that the data flows right out of the Loop, essentially ignoring the Rules of Data Flow -- as the data is "produced" by the Grab function, it is "streamed" out of the Producer Loop via the Stream Channel. The Stream Channel VI has an additional input, Last Element?, that also gets the Stop signal and sends it along to the Consumer, shown here:

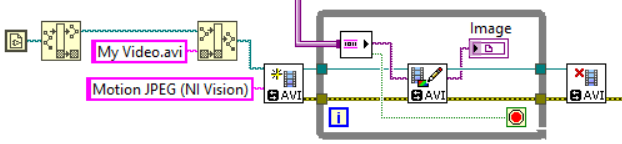

The Consumer starts by opening a file, My Video.avi, to hold an AVI Video file. The IMAQ AVI2 Create function has an input for the Video Codec -- I got the name of the native NI Motion JPEG Codec by running IMAQ AVI2 Get Codec Names. Inside the Consumer Loop is a Stream Channel Reader, which waits for an Image to be sent to its Writer and then produces it as its output. We write it to the AVI file and display it on an Image display. When the Reader's Last Element? output returns True (because the Producer wrote True to it), we stop the Consumer and close the AVI.
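For anyone who thinks more easily in text code, here is a rough Python analogue of the same data flow. It is only a sketch of the pattern, not LabVIEW or IMAQdx code: a `queue.Queue` stands in for the Stream Channel Wire, the "frames" are placeholder strings, and each write carries the frame together with a Last Element? flag, so the Consumer stops exactly when the Producer says it is done.

```python
import queue
import threading
from typing import List, Optional, Tuple

Element = Tuple[Optional[str], bool]  # (frame, Last Element?)


def producer(channel: "queue.Queue[Element]", frames: List[str]) -> None:
    """Stand-in for the Producer Loop: 'grab' each frame and write it to the
    channel along with a Last Element? flag (True on the final write)."""
    if not frames:
        channel.put((None, True))  # nothing grabbed, but still tell the Consumer to stop
        return
    for i, frame in enumerate(frames):
        channel.put((frame, i == len(frames) - 1))


def consumer(channel: "queue.Queue[Element]", saved: List[str]) -> None:
    """Stand-in for the Consumer Loop: read until Last Element? is True."""
    while True:
        frame, last = channel.get()  # blocks until the Producer writes
        if frame is not None:
            saved.append(frame)      # stands in for "write to AVI and display"
        if last:
            break                    # the sentinel: stop the loop, close the AVI


def run(frames: List[str]) -> List[str]:
    channel: "queue.Queue[Element]" = queue.Queue()  # unbounded FIFO
    saved: List[str] = []
    loops = [threading.Thread(target=consumer, args=(channel, saved)),
             threading.Thread(target=producer, args=(channel, frames))]
    for t in loops:
        t.start()
    for t in loops:
        t.join()
    return saved


print(run(["frame 0", "frame 1", "frame 2"]))  # ['frame 0', 'frame 1', 'frame 2']
```

Because the queue is FIFO and the flag travels with the data, the Consumer sees every frame, in order, before it stops.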

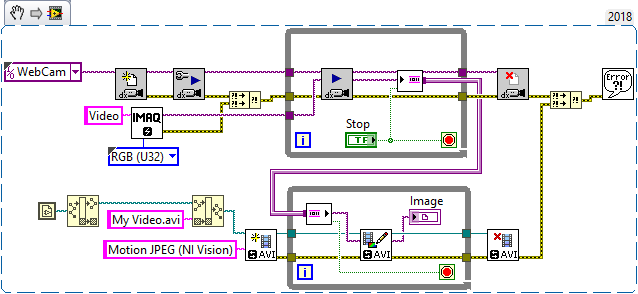

Here is the whole thing as a Snippet:

This routine has no problem keeping up with the Camera. The Producer Loop runs at exactly the speed of the Camera, so if the Camera can output 30 fps, then the loop will run at 30 Hz. Basically nothing else happens inside the loop except to pass the address of the Image Buffer to the Consumer via the Stream Channel Wire, which probably takes a few nanoseconds. Similarly, the Consumer loop has no problem writing an AVI file at 30 fps and displaying it at that rate.
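A quick way to put numbers on "keeping up": the camera sets the Producer's loop period, so the Consumer's average work per image (write plus display) has to fit inside one frame period. This small sketch computes that budget; the `work_ms` figures you would feed it are hypothetical and should come from timing your own Consumer.

```python
def frame_period_ms(fps: float) -> float:
    """Time between frames: the average time each loop iteration may take."""
    return 1000.0 / fps


def consumer_keeps_up(fps: float, work_ms: float) -> bool:
    """True if the Consumer's average per-image work (write + display) fits in
    one frame period. Occasional slow iterations can still be absorbed by the
    channel, but a consistently slower average means the backlog keeps growing."""
    return work_ms <= frame_period_ms(fps)


print(round(frame_period_ms(30), 1))  # 33.3
print(round(frame_period_ms(35), 1))  # 28.6
```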

Give it a try. If you, instead, want to write a series of PNGs, you should be able to adapt the Consumer loop to write a series of sequentially-numbered files, one per image, as well.
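If you go the series-of-PNGs route, zero-padded numbers keep the files sorted in acquisition order (without padding, "frame_10" sorts before "frame_2"). A minimal sketch of the naming step; the folder, the "frame" prefix, and the five-digit width are hypothetical choices.

```python
from pathlib import Path


def png_path(folder: Path, index: int, prefix: str = "frame", digits: int = 5) -> Path:
    """Zero-padded, sequentially numbered name, so the files sort in
    acquisition order: frame_00000.png, frame_00001.png, ..."""
    return folder / f"{prefix}_{index:0{digits}d}.png"


print(png_path(Path("My Images"), 42).name)  # frame_00042.png
```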

Bob Schor

08-14-2019 03:58 PM

Thank you, I have tried to create the code with my own file directory attached. For some reason the AVI is not being saved inside of the folder path. The folders are being created but nothing is saving inside of them. I'll attach the program here as well.

I have also begun working with the AOI controls to increase frame rate.

To confirm, I should be able to pull individual frames from the AVI to be processed later? I ask because I have not been able to play with it yet and maybe you're familiar with it. I'm still writing out code and trying to fix the save problem. I have likely misunderstood the channel writer.

Thank you for taking the time to offer advice.

08-14-2019 04:16 PM - edited 08-14-2019 04:37 PM

Fixed it. Program works great with saving. Going to have to play with the frames for processing.

I am noticing that it runs for 3 seconds but records 1 second of data. There's also corruption in the videos. Is there something missing here?

08-15-2019 01:58 AM

What kind of camera are you using? Network or USB? Try lowering the frame rate / image size and see if that helps. If it does, your hard disk may not be able to keep up with the data flow.
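To check whether the disk could be the bottleneck, a back-of-the-envelope data rate helps. The sketch below uses 640 x 480, 4 bytes per pixel (RGB U32), and 30 fps, figures that appear elsewhere in this thread; substitute your own camera's width, height, pixel format, and frame rate. This is the uncompressed rate; a codec such as Motion JPEG writes less to disk but costs CPU time per frame.

```python
def raw_data_rate_mb_s(width: int, height: int, bytes_per_pixel: int, fps: float) -> float:
    """Uncompressed image data produced per second, in megabytes (1 MB = 10**6 bytes)."""
    return width * height * bytes_per_pixel * fps / 1e6


# 640 x 480, 4 bytes per pixel (RGB U32), 30 fps
print(raw_data_rate_mb_s(640, 480, 4, 30))  # 36.864
```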

08-15-2019 01:09 PM

I am using an acA2440-35um Basler camera with a USB-3 connection. I need to first be able to reach the 30 fps that the camera soft-caps at without changing the AOI controls. By lowering the resolution I can achieve up to 136 fps, but I would like to be able to hit the soft cap at the regular resolution first. Once I can do that, the lab may purchase a higher-speed camera, knowing that the program can still achieve the faster camera's soft-cap speed. In a way, I'd like the program to be solid enough that swapping out the camera would require only a very small adjustment to the code, if any.

The ultimate goal of this (which will sound crazy) is to run the program for up to a month and only capture/save images when a particle is detected. That may be impossibly hard, or I may have to do something else for this, but that's research for ya.

Thank you

08-20-2019 10:08 AM

@JohnnyDoe771 wrote:

The ultimate goal of this (which will sound crazy) is to run the program for up to a month and only capture/save images when a particle is detected. That may be impossibly hard, or I may have to do something else for this, but that's research for ya.

The Good News is that your "ultimate goal" has a few novel parts, but is (a not-too-difficult) modification to the Producer/Consumer design I mentioned earlier.

What you want is two independent routines running, one dedicated to getting the Camera to take (and save in its internal buffers) Images while monitoring (and responding to) a "Go" signal that means "Start saving". This is the Producer, of course. As it has to be doing multiple things depending on external (and internal) signals, I like to code these as variants on a Queued Message Handler. Since I'm a Channel Wire "nut", I use Messenger Channels instead of Queues, and call this a Channel Message Handler (CMH).

The Consumer is another CMH dedicated to acquiring Images and saving them in named AVI files. It has "States" such as "Open AVI", "Save Frame", and "Close AVI".

So here's the basic idea: The Producer goes along, initializing your Camera, setting it up, setting up the (DAQmx?) code that will acquire the "Go" signal, and then starting to acquire Images at the Frame Rate you select. However, until it gets the "Go" signal, it doesn't send anything to the Consumer.

When it gets the "Go" signal, it first sends the Consumer the Open AVI message (with whatever parameters you need to build the file), and sets a flag for itself to start sending Images (but see below for a possibly "better way") to the Consumer. You probably have some criteria for how much data to send ("save 5 seconds" or "save until you get a "Stop" signal), at which point you send the Consumer the Close AVI Message.
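Here is a minimal sketch of that Producer-side bookkeeping, assuming a "save N frames" criterion (for example, 5 seconds at 30 fps is 150 frames). The Go signal is modelled as one boolean per grabbed frame in place of the DAQmx input, the messages are plain tuples rather than Messenger Channel messages, and the file names are hypothetical.

```python
from typing import Any, List, Optional, Tuple

Message = Tuple[str, Optional[Any]]


def producer_messages(go_signal: List[bool], save_frames: int) -> List[Message]:
    """One entry of go_signal per grabbed frame. Frames are only forwarded while
    a recording is active; a rising edge on Go opens a new AVI, and the AVI is
    closed after save_frames frames (a "save N seconds" criterion)."""
    if save_frames < 1:
        raise ValueError("save_frames must be at least 1")
    messages: List[Message] = []
    remaining = 0        # frames still to send for the current recording
    previous_go = False
    file_count = 0
    for frame_number, go in enumerate(go_signal):
        if go and not previous_go and remaining == 0:
            file_count += 1
            messages.append(("Open AVI", f"event_{file_count:03d}.avi"))
            remaining = save_frames
        if remaining > 0:
            messages.append(("Save Frame", frame_number))
            remaining -= 1
            if remaining == 0:
                messages.append(("Close AVI", None))
        previous_go = go
    if remaining > 0:                       # acquisition ended mid-recording
        messages.append(("Close AVI", None))
    return messages
```

Until Go rises, the Producer sends nothing at all, which matches the description above.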

So here's some Advanced IMAQdx. The Camera has user-configurable buffers, allowing it to save in computer memory not only the current Image, but also the N-1 preceding Images. This gives you a little "wiggle room". For one thing, you can save images that were acquired before the Trigger signal -- if the Trigger occurred at Buffer 1000, and you are taking 30 frames/sec, then Buffer 970 holds the Image one second before the Trigger arrived. When you configure a Grab, you can specify the number of buffers (the default is 5, but you'll want more!).
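The buffer arithmetic can be sketched in a few lines. This assumes the driver keeps only the newest N images in a ring of N slots and overwrites the oldest; the function names are illustrative and are not IMAQdx functions.

```python
def ring_slot(buffer_number: int, num_buffers: int) -> int:
    """Slot in the ring that holds a given (ever-increasing) buffer number."""
    return buffer_number % num_buffers


def pretrigger_buffer(trigger_buffer: int, fps: float, seconds_before: float) -> int:
    """Buffer number acquired seconds_before the trigger."""
    return trigger_buffer - round(fps * seconds_before)


def still_in_ring(buffer_number: int, newest_buffer: int, num_buffers: int) -> bool:
    """A buffer survives only while it is among the newest num_buffers images."""
    return newest_buffer - num_buffers < buffer_number <= newest_buffer


start = pretrigger_buffer(1000, fps=30, seconds_before=1.0)
print(start)                           # 970
print(still_in_ring(start, 1000, 5))   # False: the default 5 buffers are not enough
print(still_in_ring(start, 1000, 60))  # True
```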

If you tell the Consumer the Buffer Number at the time the Trigger occurred, the Producer doesn't really need to tell the Consumer to save Images (saving a trivial amount of communication time) -- the Consumer can ask the Camera for the number of the Current Buffer, and can simply save "older" Buffers as fast as it can until it "catches up" to the Current Buffer (at which point it can "go to sleep" for 1/10 of a second). When the Producer finally says "I'm done", the Consumer asks the Camera for its Buffer Number, which becomes the "Last Buffer to Save", after which the Consumer closes the AVI.
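A minimal sketch of that catch-up loop, assuming three hypothetical callables: `current_buffer` (asking the Camera for its Current Buffer number), `save` (fetching that buffer and writing it to the AVI), and `producer_done` (the Producer's "I'm done"). None of these are real IMAQdx calls; they only show the control flow.

```python
import time
from typing import Callable


def catch_up(first_buffer: int,
             current_buffer: Callable[[], int],
             save: Callable[[int], None],
             producer_done: Callable[[], bool],
             nap: Callable[[], None] = lambda: time.sleep(0.1)) -> int:
    """Save buffers first_buffer, first_buffer + 1, ... as fast as possible until
    caught up with the camera, nap when caught up, and once the Producer says
    "I'm done", save through the camera's buffer number at that moment.
    Returns that Last Buffer to Save."""
    next_buffer = first_buffer
    last_to_save = None
    while True:
        if last_to_save is None and producer_done():
            last_to_save = current_buffer()       # the "Last Buffer to Save"
        newest = current_buffer() if last_to_save is None else last_to_save
        while next_buffer <= newest:              # save the "older" buffers
            save(next_buffer)
            next_buffer += 1
        if last_to_save is not None:
            return last_to_save                   # caller closes the AVI next
        nap()                                     # caught up: sleep for 1/10 s
```

Passing `nap` as a parameter keeps the 1/10-second sleep as the default while letting a test substitute a fake camera that advances on each nap.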

I know this may sound a little complicated, but it is definitely doable, and works rather nicely. We routinely use this technique to monitor animal behavior for 6-10 "stations" consisting of a camera and a "Go" sensor, with the code running on a 7-8 year old Pentium Dual CPU PC (I'm sure it would run better and faster on a modern PC).

Bob Schor

08-20-2019 02:50 PM

Thank you,

I can try implementing that into my code. The problem for now is that it doesn't seem to be sending all of the buffer images out to the consumer loop as it should.

Can you also please provide an example of the trigger>capture method you mentioned? I'm working on learning channel writers and took out the previous queues that may have been slowing the consumer loop down. The producer is operating at 35 fps, but the consumer can't keep up. I saw mention of bottlenecking and may have to get an SSD. Your computer is an older model, as you mentioned, so I am unsure why mine is operating slower.

Do you have a separate sensor for the "Go" function or is it that your camera detects something and begins a short burst of film? Ideally, I'd like for the program to notice when a particle has entered the frame and begin taking images for 2-4 seconds, and then go into a "sleep" mode until another particle comes along.

How does the consumer ask the camera for the buffer number? Before, I had a queue set up that sent data to the consumer loop, but it made no difference in time.

I'll attach the JPG code that I am using without the queues. I know there's some compression going on but if the images are within the previous buffer, should they still save, just a second delayed?

I am open to using AVI instead of JPG, but have experienced the AVIs not saving past 1 second if I run the code for 3 seconds.

The elapsed-time indicators I have now are just so I know how long the code is running, so I can compare that to the images saved: images saved / seconds run = saved images per second. It is currently saving images at 18 images per second.

For one run I received 65 images saved with a 3 seconds run time, which should be 105 images. The loss of the 40 images is what really concerns me. The camera operates at 35 fps and the producer loop is showing that it's there, just not transferring the information.

I have code that uses a boolean to initiate the processing later, but I've completely separated that code out to work with the image saving speed only.

08-20-2019 04:51 PM

How are you stopping the attached code? I don't have IMAQdx, but your producer loop runs forever, it has a false wired to the while loop. Stopping with the abort button, you will always lose data. You should stop the producer with a control. The consumer should then finish processing the data before you destroy the queue/channel.
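A small Python illustration of why a Stop control beats the Abort button, under the same loose analogy as before (a `queue.Queue` for the channel, a `threading.Event` as a hypothetical stand-in for the front-panel Stop button). The Producer reads the Stop control once per iteration and sends it along as Last Element?; the Consumer, deliberately slower here, keeps working through the backlog and only returns after it has handled the last element. Aborting instead would discard whatever was still waiting in the channel.

```python
import queue
import threading
import time
from typing import List, Tuple


def run_until_stopped(run_time_s: float) -> Tuple[int, int]:
    channel: "queue.Queue[Tuple[int, bool]]" = queue.Queue()
    stop = threading.Event()            # stands in for the front-panel Stop button
    produced: List[int] = []
    saved: List[int] = []

    def producer() -> None:
        i = 0
        while True:
            last = stop.is_set()        # read the Stop control once per iteration
            produced.append(i)
            channel.put((i, last))      # Last Element? travels with the data
            if last:
                return
            i += 1
            time.sleep(0.001)           # stand-in for waiting on the next frame

    def consumer() -> None:
        while True:
            frame, last = channel.get()
            time.sleep(0.002)           # slower than the producer: a backlog builds
            saved.append(frame)
            if last:
                return                  # only now is it safe to release the channel

    loops = [threading.Thread(target=producer), threading.Thread(target=consumer)]
    for t in loops:
        t.start()
    time.sleep(run_time_s)
    stop.set()                          # "press Stop"
    for t in loops:
        t.join()
    return len(produced), len(saved)


print(run_until_stopped(0.05))  # the two counts are always equal
```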

mcduff

08-20-2019 09:02 PM

@mcduff wrote:

How are you stopping the attached code? I don't have IMAQdx, but your producer loop runs forever, it has a false wired to the while loop. Stopping with the abort button, you will always lose data. You should stop the producer with a control. The consumer should then finish processing the data before you destroy the queue/channel.

mcduff

I also noticed that you had a False constant (instead of a Stop button) wired to the Producer's Stop (and the Stream's Last Element? line), but was too foolish to point this out to you! Put the Stop button back -- this will stop only the Producer, but since you wired Last Element?, when this gets to the Consumer, it will (also) stop the Consumer, as nice an illustration of a "Sentinel" as I've seen.

But I bet you're still losing frames. Time to talk about Buffers. When Grab is configured, the IMAQdx driver allocates memory to hold Image Data in a structure called a Ring Buffer. The default is to allocate space for 5 images -- as they are acquired, they are put in slot 0, 1, 2, 3, 4, 0, 1, 2, 3, 4, 0, ... and are numbered 0 .. N (always increasing). Let's imagine you're saving images and you pass the Image (meaning a pointer to the Buffer) to your Consumer. You'll pass Buffer 0, 1, 2, 3, 4, 0, etc. However, if the Consumer is a little bit slow at times, it might "lag" so much that when it is passed Buffer 2, the camera might have just saved Image 7 in there, and you'll miss five of them.

The solution is to allocate more than enough buffers (start with 30, but don't be afraid of using 60 -- memory is cheap). But where do you specify the buffers? Open the Low-Level IMAQdx functions and use IMAQdx Configure Acquisition.
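To see the effect of the buffer count, here is a small simulation, assuming a simple model where image k lands in slot k % N and the camera never waits for the Consumer. `camera_at[k]` is the newest image the camera has written at the moment the Consumer fetches image k; the stall pattern in the example is made up for illustration.

```python
from typing import List


def frames_lost(camera_at: List[int], num_buffers: int) -> int:
    """camera_at[k] is the newest image number the camera has written at the
    moment the Consumer fetches image k (non-decreasing, and >= k). Image k
    is stored in slot k % num_buffers; it is lost if a newer image has
    reused that slot before the Consumer gets there."""
    slots = [-1] * num_buffers
    written = -1
    lost = 0
    for k, newest in enumerate(camera_at):
        if newest < k or newest < written:
            raise ValueError("camera position must be >= k and non-decreasing")
        while written < newest:                  # the camera keeps filling the ring
            written += 1
            slots[written % num_buffers] = written
        if slots[k % num_buffers] != k:          # overwritten by a newer image
            lost += 1
    return lost


# The Consumer stalls at image 3 while the camera races ahead to image 9,
# then works through the backlog.
camera_at = [0, 1, 2, 9, 9, 9, 9, 9, 9, 9]
print(frames_lost(camera_at, 5))   # 2  (images 3 and 4 were overwritten)
print(frames_lost(camera_at, 30))  # 0
```

The same stall that costs two images with the default 5 buffers costs nothing with 30.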

Here are some answers to several of your other questions:

- How do you "detect" a signal? If your goal is to maximize saving of Images for later off-line analysis, you might not want to be doing on-line analysis to see if the Images contain a "Start looking at me" signal! I'm not saying you couldn't do Image Analysis to decide when to start saving images for later Image Analysis, but it would be easier if you had a "gross detector" that could quickly (and crudely) say "Hey, Dude, Something Happened!".

- You want a QMH/CMH as the Video "Consumer" (that gets "instructions" from the Producer and responds by making an AVI). Here are some example States:

- Init Video, gets the IMAQdx Reference to the Camera so it can look at Camera Properties.

- Init AVI, called when it's time to start the AVI. Using the Video reference, it uses a Property Node to get the current Buffer Number. If you want to save images from before the trigger event, use earlier buffer numbers (another reason for lots of buffers).

- Save AVI saves the current Buffer's Image (gets Image from IMAQdx, writes to AVI, and calls itself if it hasn't yet "caught up" to the Camera's current buffer).

- Close AVI gets the current Camera Buffer, saves all remaining buffers up to that one, then closes the AVI, ready for another Init AVI.

- Exit AVI is sent by the Producer as its Exit signal, to stop the Consumer.
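The States above can be sketched as a small message handler. This is a Python model, not a CMH or IMAQdx code: a `deque` stands in for the Messenger Channel, `FakeCamera` is a hypothetical object with a `current_buffer()` method in place of the IMAQdx reference and Property Node, and an "AVI" is just the list of buffer numbers written to it. A real CMH would block waiting for the next message instead of returning when none are pending.

```python
from collections import deque


class VideoConsumer:
    """Hypothetical message handler for the video Consumer."""

    def __init__(self):
        self.messages = deque()   # stands in for the Messenger Channel / queue
        self.camera = None
        self.avi = None           # the open AVI (list of buffer numbers), or None
        self.next_buffer = 0
        self.finished_avis = []
        self.running = True

    def send(self, state, data=None):
        self.messages.append((state, data))

    def run(self):
        """Handle messages until none are pending or Exit AVI arrives."""
        handlers = {"Init Video": self.init_video, "Init AVI": self.init_avi,
                    "Save AVI": self.save_avi, "Close AVI": self.close_avi,
                    "Exit AVI": self.exit_avi}
        while self.running and self.messages:
            state, data = self.messages.popleft()
            handlers[state](data)

    def init_video(self, camera):
        self.camera = camera                       # keep the camera reference

    def init_avi(self, frames_before_trigger):
        self.avi = []
        # Start before the current buffer to include pre-trigger images.
        self.next_buffer = self.camera.current_buffer() - frames_before_trigger
        self.send("Save AVI")

    def save_avi(self, _=None):
        if self.avi is None:
            return                                 # AVI already closed
        if self.next_buffer <= self.camera.current_buffer():
            self.avi.append(self.next_buffer)      # fetch one image, write it
            self.next_buffer += 1
            self.send("Save AVI")                  # not caught up: call itself again

    def close_avi(self, _=None):
        if self.avi is None:
            return
        last = self.camera.current_buffer()        # save everything up to here
        self.avi.extend(range(self.next_buffer, last + 1))
        self.finished_avis.append(self.avi)
        self.avi = None                            # ready for another Init AVI

    def exit_avi(self, _=None):
        self.running = False


class FakeCamera:
    def __init__(self, newest):
        self.newest = newest

    def current_buffer(self):
        return self.newest


camera = FakeCamera(100)
c = VideoConsumer()
c.send("Init Video", camera)
c.send("Init AVI", 10)        # include 10 pre-trigger buffers
c.run()                       # saves buffers 90..100, then runs out of messages
camera.newest = 110
c.send("Close AVI")
c.send("Exit AVI")
c.run()
print(c.finished_avis[0][0], c.finished_avis[0][-1])  # 90 110
```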

In my experience (modest speeds, modest image sizes, modest number of buffers), I haven't had a problem saving color AVIs of 640 x 480 at 30 fps (from 7-10 cameras running simultaneously, but saving 3-10-second AVIs at 4-10 per hour for 2 hours).

Bob Schor

08-21-2019 10:23 AM

Hi Bob,

did you see this....?

A post by The Father of LabVIEW himself!

Ben