Display value

08-08-2014 09:38 AM - edited 08-08-2014 09:40 AM

Hello,

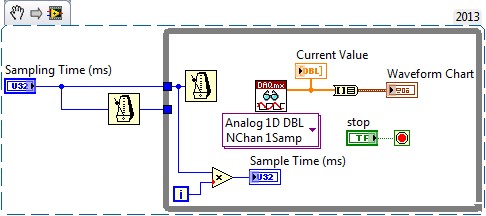

I'm kind of new to LabVIEW. Right now I have a chart as an output, but I need to read off values while I am running my experiment, and it's hard to read them from the graph. Can I add a display that continuously shows the current sensor value?

Thank you very much!

Henk

08-08-2014 09:50 AM

Henk,

your screenshot shows a VI block diagram, which already displays data continuously on a chart. As the chart keeps a history, everything is in the chart (and in the file you append to).

My guess is that you are struggling with the chart's history length, which defaults to 1024 points. You can increase it by right-clicking the chart and selecting "History Length".

Please note that this will increase the memory consumption of your VI, so there are limits to how long the history can be.
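A chart's history behaves roughly like a fixed-length circular buffer: once it is full, each new point pushes out the oldest one, and a longer history costs proportionally more memory. The Python sketch below is only an analogy (LabVIEW is graphical, and `ChartHistory` is a hypothetical name, not a LabVIEW API); the default of 1024 mirrors the default history length mentioned above.

```python
from collections import deque


class ChartHistory:
    """Fixed-length history: once full, each new point evicts the oldest."""

    def __init__(self, history_length: int = 1024) -> None:
        # A deque with maxlen silently drops the oldest item when full.
        # Memory use grows with history_length, hence the practical limit.
        self._points = deque(maxlen=history_length)

    def append(self, value: float) -> None:
        self._points.append(value)

    def values(self) -> list:
        return list(self._points)

    def latest(self) -> float:
        # The most recent point, i.e. what a single numeric indicator would show.
        return self._points[-1]


# Usage: a tiny history of 3 points keeps only the last 3 samples.
hist = ChartHistory(history_length=3)
for v in [1.0, 2.0, 3.0, 4.0]:
    hist.append(v)
print(hist.values())  # [2.0, 3.0, 4.0]
print(hist.latest())  # 4.0
```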

Norbert

----------------------------------------------------------------------------------------------------

CEO: What exactly is stopping us from doing this?

Expert: Geometry

Marketing Manager: Just ignore it.

08-08-2014 09:50 AM

You use an indicator.

This is a very, very basic question.

You really need to learn about LabVIEW by looking at some online LabVIEW tutorials.

LabVIEW Introduction Course - Three Hours

LabVIEW Introduction Course - Six Hours

08-08-2014 12:35 PM

Depending on your sampling rate, you could simply display the most recent sample in an indicator. Note that you can choose to display it with "reduced precision", which can "filter out" some digitization noise. For example, if your signal ranges over ±10 V and you display 0 or 1 decimal places, the millivolt-level variation will not show up on the indicator.
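In LabVIEW this is just a numeric indicator wired to the current sample, with its display format set to fewer digits; the format changes only what is shown, not the value itself. As a text-language analogy, the Python sketch below (the `display_value` helper and the readings are hypothetical) rounds a reading to one decimal place, so a few millivolts of noise around 3.2 V all display the same.

```python
def display_value(sample_volts: float, decimals: int = 1) -> str:
    """Format a reading the way a reduced-precision numeric indicator might."""
    # Rounding to `decimals` places hides variation smaller than the last digit shown.
    return f"{sample_volts:.{decimals}f} V"


# Hypothetical readings: a ~3.2 V signal with a few millivolts of noise.
readings = [3.2013, 3.1987, 3.2042, 3.1961]
shown = [display_value(r) for r in readings]
print(shown)                        # every entry is '3.2 V'
print(display_value(readings[-1]))  # the "most recent sample" indicator
```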

I've attached a snippet showing what I mean, and also illustrating a better way (I think) of "clocking" this loop. It uses "Wait until next multiple" instead of a simple "Wait". The problem with "Wait" is it adds additional time to the loop, whereas "Wait until next multiple" will only wait "as long as needed". Note that there is an additional single "Wait" outside the loop -- you need this to get the clock on the right "multiple" tick. Finally, instead of computing the current sample time by subtracting two other timers, you can reconstruct it by multiplying the loop index by the Sample Time (as shown).
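The snippet itself is a LabVIEW image that is not reproduced here. A rough Python sketch of the same pacing idea follows, under these assumptions: `time.monotonic()` stands in for LabVIEW's millisecond timer, `read_sample` is a hypothetical acquisition callback, and, unlike a LabVIEW loop where the wait can run in parallel, Python runs the wait after the work. The sample time is reconstructed as index × period, which is only valid as long as no tick is missed.

```python
import time


def run_clocked_loop(n_samples, period_s, read_sample):
    """Acquire n_samples, pacing each iteration to the next multiple of period_s."""

    def sleep_until_next_multiple():
        # Analogous to "Wait Until Next ms Multiple": block until the clock
        # reaches the next whole multiple of period_s.
        now = time.monotonic()
        next_tick = (now // period_s + 1) * period_s
        time.sleep(max(0.0, next_tick - now))

    # One wait before the loop, so the first iteration starts on a tick.
    sleep_until_next_multiple()
    samples = []
    for i in range(n_samples):
        value = read_sample()                  # the loop's actual work
        samples.append((i * period_s, value))  # reconstructed sample time
        sleep_until_next_multiple()            # runs in series after the work
    return samples


# Usage: a fake sensor that just counts, sampled every 20 ms.
counter = iter(range(100))
data = run_clocked_loop(5, 0.02, lambda: next(counter))
for t, v in data:
    print(f"{t:.2f} s -> {v}")
```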

BS

08-08-2014 12:47 PM

@Bob_Schor wrote:

The problem with "Wait" is it adds additional time to the loop, whereas "Wait until next multiple" will only wait "as long as needed". Note that there is an additional single "Wait" outside the loop -- you need this to get the clock on the right "multiple" tick.

Not true. The regular Wait doesn't add any time to the rest of the code's execution unless you happen to wire it so that it executes in series. A Wait Until Next could cause it to wait twice as long.

Let's say the wait time is 100 msec.

| Rest of code | Wait | Total iteration time of loop |
|---|---|---|
| 10 msec | 100 msec | 100 msec |
| 100 msec | 100 msec | 100 msec |
| 120 msec | 100 msec | 120 msec |

| Rest of code | Wait Until Next | Total iteration time |
|---|---|---|
| 10 msec | 100 msec | ?? 10-100 msec on the first iteration (unknown; depends on the current multiple of the PC clock), 100 msec after that |
| 100 msec | 100 msec | ?? 100-200 msec on the first iteration (unknown), 100 msec after that |
| 120 msec | 100 msec | ?? 120-200 msec on the first iteration (unknown), 200 msec after that |

The Wait Until Next case would need a lot of testing under different timing scenarios to prove out that table. Overall, though, its timing is much more unpredictable, since it depends directly on the current value of the PC clock: the wait finishes only when the clock reaches a multiple of the requested time.
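One way to reason about the table without hardware is an idealized model on an integer millisecond clock. The Python sketch below is not LabVIEW's implementation; it assumes the multiple-wait returns at the first multiple strictly after the moment it is called, ignores jitter and scheduling, and distinguishes a Wait Until Next running in parallel with the code from one running after it (`iteration_times` and the mode names are hypothetical). Under this model the "200 msec after that" row corresponds to the wait executing in series after 120 ms of code; with the wait truly in parallel the model gives 120 ms. The 100 ms-of-code row is a tie whose outcome depends on exactly where the code finishes relative to a tick, so it is left out of the checks.

```python
def iteration_times(code_ms, period_ms, start_ms, n_iter, mode):
    """Idealized loop iteration times on an integer millisecond clock.

    mode:
      "wait"          - Wait (ms) running in parallel with the code
      "next_parallel" - Wait Until Next ms Multiple in parallel with the code
      "next_series"   - Wait Until Next ms Multiple started after the code
    """

    def next_multiple(t):
        # Assumption: first multiple of period_ms strictly after time t.
        return (t // period_ms + 1) * period_ms

    t = start_ms
    times = []
    for _ in range(n_iter):
        if mode == "wait":
            end = t + max(code_ms, period_ms)
        elif mode == "next_parallel":
            end = max(t + code_ms, next_multiple(t))
        elif mode == "next_series":
            end = next_multiple(t + code_ms)
        else:
            raise ValueError(f"unknown mode: {mode}")
        times.append(end - t)
        t = end  # the next iteration starts when this one ends
    return times


# The loop starts 30 ms past a tick, so the first iteration is "unknown" in the table.
for code in (10, 120):
    for mode in ("wait", "next_parallel", "next_series"):
        print(code, mode, iteration_times(code, 100, 30, 3, mode))
```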

08-08-2014 01:16 PM

Your points are (mostly) well taken. In the code from the original post, the Wait was not running in parallel; it was wired through a sequence frame (which, admittedly, would not cost much time in the given case). Also, I not only placed the initial Wait Until Next outside the loop, I also mentioned why it is important.

If the Wait is truly running "in parallel" inside the loop-to-be-timed, and if you "know" that the loop will finish before the Wait expires, then it seems to me that using Wait and using Wait Until Next Multiple are entirely equivalent (provided you do the additional Wait Until Next Multiple outside the While loop). If this is not the case, then you have a choice of which is "least bad": having samples exactly evenly spaced at N ms, but with possibly one (or more) samples "missing" (because you waited two ticks of a "multiple" clock), or having an "elastic" time base where additional delays of unknown length are injected. My preference would be for the former, as it might be easier to detect a "missing" sample than to detect random "drifts" in the data points ...
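The argument that a missing sample is easier to detect than drift can be illustrated with a small check on logged timestamps. This Python sketch assumes timestamps in milliseconds from a loop meant to fire on multiples of the period; `find_missing_ticks` is a hypothetical helper that snaps each timestamp to its nearest tick and reports empty ticks. A skipped tick shows up immediately, while an "elastic" series that drifts a few milliseconds per iteration passes the same check unnoticed.

```python
def find_missing_ticks(timestamps_ms, period_ms):
    """Return the grid times (multiples of period_ms) that have no sample.

    Assumes each sample was meant to land on a multiple of period_ms;
    each timestamp is snapped to its nearest tick.
    """
    if not timestamps_ms:
        return []
    ticks = {round(t / period_ms) for t in timestamps_ms}
    return [k * period_ms for k in range(min(ticks), max(ticks) + 1)
            if k not in ticks]


# Evenly spaced samples with one tick skipped: the gap is easy to spot.
regular = [0, 100, 200, 400, 500]
print(find_missing_ticks(regular, 100))  # [300]

# "Elastic" timing: every interval runs a few ms long, but each sample still
# snaps to a tick, so the accumulated drift is not flagged.
elastic = [0, 102, 205, 309, 414]
print(find_missing_ticks(elastic, 100))  # []
```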

BS