DAQmx TDMS data logging

Solved! 03-22-2021 04:53 AM

Hi,

I'm currently working on a project where I have to acquire an enormous amount of data, up to 2.4E9 samples.

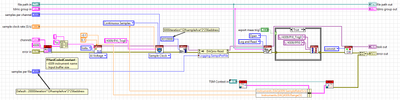

The DAQmx task is configured for continuous acquisition, with an external clock and a sample rate of up to 900 kS/s on 8 channels (one channel per ADC of the PXIe-4309 card).

DAQmx internal logging system is used to retrieve the samples.

A PXI 6570 digital card is used to trigger the PXI 4309 acquisition (PXI_Trig0 trigger line).

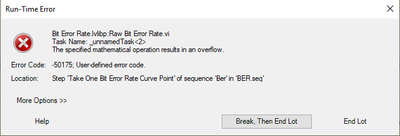

This strategy seems to work up to 245 760 kS per channel. But if I increase that number, I get the following error on the output of the DAQmx Read VI:

Has anyone already faced this issue?

Is the chosen strategy appropriate?

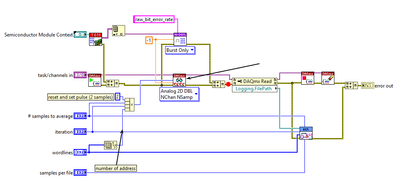

The only mathematical operation I can think of is the conversion from raw data format to DBL, which is done internally by the logging mechanism. So I tried changing from analog -> multiple channels -> 2D DBL to the Raw I32 datatype.

But I get another error:

Nicolas

03-22-2021 07:10 AM

The problem seems to be that I set the number of samples per channel too high. My guess is that some internal computation reaches the I32 limit: my number of samples per channel was 399 360 000, and multiplied by the number of channels (8) that overflows an I32.
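The arithmetic behind that guess can be checked in a few lines. This is only a sketch of the numbers quoted in this thread. It assumes the driver multiplies samples per channel by the channel count in a signed 32-bit integer, which is the poster's hypothesis and not documented NI-DAQmx behavior. The function names are made up for illustration.

```python
# Largest value a signed 32-bit integer (LabVIEW I32) can hold.
I32_MAX = 2**31 - 1  # 2_147_483_647


def total_samples(samples_per_channel: int, channels: int) -> int:
    """Total sample count across all channels (Python ints do not overflow)."""
    return samples_per_channel * channels


def fits_in_i32(samples_per_channel: int, channels: int) -> bool:
    """True if the total would still fit in a signed 32-bit integer."""
    return total_samples(samples_per_channel, channels) <= I32_MAX


def max_samples_per_channel(channels: int) -> int:
    """Largest samples-per-channel value whose total still fits in an I32."""
    return I32_MAX // channels


CHANNELS = 8  # channel count from the original post

for n in (245_760_000, 399_360_000):  # the working and the failing settings
    print(n, total_samples(n, CHANNELS), fits_in_i32(n, CHANNELS))

print("limit per channel:", max_samples_per_channel(CHANNELS))
```

With 8 channels, 245 760 000 samples per channel gives 1 966 080 000 in total, which fits. 399 360 000 gives 3 194 880 000, which does not. That matches the working and failing cases reported above.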

03-22-2021 07:47 AM (last edited on 11-06-2025 11:59 AM by Content Cleaner)

Maybe someone who's used DAQmx logging more than me (i.e., almost anyone who's used it in a full app, ever) will have more insight, but one thing I see that you might try is to reduce your buffer size considerably.

There should be no need for a 30 mega-sample buffer with a sample rate around 1 MHz. I would expect 100k to be big enough. But maybe you could start with something like 500k and work your way down.

I have no specific insight how such a humongous buffer would lead to those specific internal errors. I just see that it's far far larger than it should need to be, so it goes on my list of suspects to be checked out.

-Kevin P

[Edit: I just saw your followup after posting. Your i32 suspicion makes sense to me. An i32 can have a max positive value of about 2^31 which is just over 2 billion. A buffer pointer for 300 million samples/channel * 8 channels would need to reach a memory offset of 2.4 billion.

Perhaps a bigger buffer would be available with 64-bit LabVIEW, but I think your real solution is to make it *smaller* and let DAQmx handle the need to service the circular buffer "fast enough". The default buffer sizing DAQmx does for higher-speed continuous acquisition is often 1 second worth or less.]

03-22-2021 09:00 AM

Thanks for your answer.

I confirm the problem appears when the number of samples per channel × the number of channels is higher than the I32 max positive value.

I think I will decrease the number of samples per channel and call DAQmx Read multiple times in software, using a while loop.

I needed to change the default buffer size because, in continuous mode, the default size is the number of samples per channel, so the default buffer size was even higher than 30M samples.

In general, what is the recommendation for choosing the buffer size? Should I find the smallest buffer size that still allows reading faster than the buffer is overwritten?

03-22-2021 09:28 AM

Have a look at the shipping examples for continuous acquisition. It's normal and expected to use a loop that does a manageable-sized read each iteration when you configure for *continuous* acquisition. Looks like the examples default to a 1000 Hz sampling and reading 1000 samples at a time, so 1 second worth.

A more typical rule of thumb is to read 0.1 second worth. This is generally a very manageable rate to keep up with in software, while offering almost-imperceptible 0.1 second latency on data you present to the user. A good place to start for the trade-offs.

Like I said before, I'd bet you can set up your ~1 MHz sample rate and then read & process 100k samples per iteration. Also keep in mind that this might just be a real-time convenience operation -- TDMS logging is preserving all your original data so you can always come back to the file to do post processing if needed.
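To put numbers on the 0.1 second rule of thumb, here is a small sketch that sizes each read from the sample rate and counts the loop iterations needed for a given total. The 900 kS/s rate and the 399 360 000 samples per channel come from earlier in the thread. The helper names are hypothetical, and nothing here calls DAQmx.

```python
import math


def samples_per_read(sample_rate_hz: float, seconds_per_read: float = 0.1) -> int:
    """Samples per channel to request on each loop iteration."""
    return max(1, round(sample_rate_hz * seconds_per_read))


def reads_needed(total_samples_per_channel: int, chunk: int) -> int:
    """Loop iterations needed to cover the total (the last read may be partial)."""
    return math.ceil(total_samples_per_channel / chunk)


rate = 900_000                       # S/s per channel, from the original post
chunk = samples_per_read(rate)       # 0.1 s worth of data
loops = reads_needed(399_360_000, chunk)
print(chunk, loops)
```

At 900 kS/s this gives 90 000 samples per read and 4438 iterations for 399 360 000 samples per channel, so each individual read stays far below any 32-bit limit.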

-Kevin P

03-22-2021 09:34 AM

Not sure about your errors; they seem related to the digital part of your task.

Concerning the high-speed acquisition and buffer sizes: the procedure I use is a bit byzantine, but it seems to work and be stable for acquisitions lasting days to weeks.

1. Use unscaled data.

2. Make the number of points you acquire each update an even multiple of the disk sector size. I also like to make the number of points a multiple of both the disk sector size and the page size. (Not sure if this helps, but it does not hurt.)

3. Make the buffer size an even multiple of (2). I like to have a buffer that holds at least 2-4 seconds of data.

4. Do not display huge data arrays on the front panel; decimate your data before displaying.
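One possible way to turn the sizing rules in the list above into numbers is sketched below. Several things are assumptions, not part of the post: a 512-byte sector and a 4096-byte page, counting those sizes in samples rather than bytes, reading "even multiple" as "whole-number multiple", and a 0.1 s read with a 2 s buffer at the 900 kS/s rate from the original question. The function names are hypothetical.

```python
import math


def round_up_to_multiple(value: int, base: int) -> int:
    """Smallest multiple of base that is >= value."""
    return -(-value // base) * base


def plan_sizes(sample_rate_hz: int,
               seconds_per_read: float = 0.1,
               buffer_seconds: float = 2.0,
               sector_size: int = 512,    # assumed sector size
               page_size: int = 4096):    # assumed memory page size
    """Return (read_size, buffer_size) in samples per channel."""
    # A multiple of both sizes is a multiple of their least common multiple.
    alignment = sector_size * page_size // math.gcd(sector_size, page_size)
    read_size = round_up_to_multiple(round(sample_rate_hz * seconds_per_read), alignment)
    # The buffer is a whole number of reads covering at least buffer_seconds.
    buffer_size = round_up_to_multiple(round(sample_rate_hz * buffer_seconds), read_size)
    return read_size, buffer_size


read_size, buffer_size = plan_sizes(900_000)
print(read_size, buffer_size)
```

Under those assumptions the read size comes out at 90 112 samples (22 × 4096) and the buffer at 1 802 240 samples (20 reads), just over 2 seconds of data.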

mcduff

03-22-2021 01:23 PM

Thanks for the tips, both of you. I'm going to make a few changes to my buffer size and the number of samples per channel.

McDuff, it looks like with DAQmx logging the data is already saved as raw data.

"If you enable TDMS data logging, NI-DAQmx can directly transfer streaming data from the device buffer to the hard disk. NI-DAQmx improves performance and decreases disk space usage by writing raw data to the TDMS file and separately including scaling information for use when reading the TDMS file"

I don't know whether switching the DAQmx Read to unscaled data changes anything when logging is enabled, as it already takes 4 bytes/sample instead of 8 bytes. Have you ever tried both?

Concerning the error in my initial post, the error really is output on the error out cluster of DAQmx Read. I tried changing the samples per channel for the task and on the input of the DAQmx Read VI, and the error appears just after I32 max positive value / number of channels is reached.
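For a sense of scale on the 4 bytes/sample versus 8 bytes/sample point above, here is a quick estimate of the logged data volume. It uses the 2.4E9 total samples from the first post and the two sample sizes quoted in this post. It ignores TDMS headers and scaling metadata, so it is a lower bound, not an exact file size.

```python
def log_size_bytes(total_samples: int, bytes_per_sample: int) -> int:
    """Payload size only; TDMS metadata overhead is not included."""
    return total_samples * bytes_per_sample


TOTAL_SAMPLES = 2_400_000_000   # up to 2.4E9 samples, from the first post

raw_bytes = log_size_bytes(TOTAL_SAMPLES, 4)   # raw 32-bit samples
dbl_bytes = log_size_bytes(TOTAL_SAMPLES, 8)   # scaled DBL samples

print(raw_bytes / 1e9, "GB raw")
print(dbl_bytes / 1e9, "GB as DBL")
```

That is roughly 9.6 GB of raw payload against 19.2 GB if the same samples were stored as doubles.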

03-22-2021 02:21 PM

@nlefoulon wrote:

> Thanks for the tips, both of you. I'm going to make a few changes to my buffer size and the number of samples per channel.
>
> McDuff, it looks like with DAQmx logging the data is already saved as raw data.
>
> "If you enable TDMS data logging, NI-DAQmx can directly transfer streaming data from the device buffer to the hard disk. NI-DAQmx improves performance and decreases disk space usage by writing raw data to the TDMS file and separately including scaling information for use when reading the TDMS file"
>
> I don't know whether switching the DAQmx Read to unscaled data changes anything when logging is enabled, as it already takes 4 bytes/sample instead of 8 bytes. Have you ever tried both?
>
> Concerning the error in my initial post, the error really is output on the error out cluster of DAQmx Read. I tried changing the samples per channel for the task and on the input of the DAQmx Read VI, and the error appears just after I32 max positive value / number of channels is reached.

In your diagram you used the "Log and Read" mode. When it reads, it converts the data to double (scaled). Depending on your setup this could be okay. I typically do my scaling, calculations, and decimation in a different loop than my DAQ. That means I need to acquire the data in one loop and analyze, plot, etc. in another. That is two data copies. Depending on the number of channels and the sample rate, that may or may not be a problem.
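The two-loop arrangement described above is a producer/consumer pattern. LabVIEW is graphical, so the sketch below uses Python threads and a queue purely to illustrate the structure: one loop only acquires and hands off raw chunks, the other scales and decimates. The fake data source, the gain, and the decimation factor are all placeholders and not part of any NI API.

```python
import queue
import threading


def acquire(out_q: queue.Queue, n_chunks: int, chunk_size: int) -> None:
    """Producer: stands in for the DAQ loop; it only hands raw chunks on."""
    for i in range(n_chunks):
        raw = [i * chunk_size + k for k in range(chunk_size)]  # fake raw counts
        out_q.put(raw)
    out_q.put(None)  # sentinel: acquisition finished


def process(in_q: queue.Queue, gain: float, decimate: int, results: list) -> None:
    """Consumer: scales and decimates outside the acquisition loop."""
    while True:
        raw = in_q.get()
        if raw is None:
            break
        scaled = [gain * x for x in raw]      # scaling happens here, not in the DAQ loop
        results.append(scaled[::decimate])    # keep every decimate-th point for display


def run(n_chunks: int = 5, chunk_size: int = 1000,
        gain: float = 0.5, decimate: int = 100) -> list:
    q: queue.Queue = queue.Queue()
    results: list = []
    consumer = threading.Thread(target=process, args=(q, gain, decimate, results))
    consumer.start()
    acquire(q, n_chunks, chunk_size)
    consumer.join()
    return results


if __name__ == "__main__":
    out = run()
    print(len(out), len(out[0]))
```

The queue is where the second data copy mentioned above comes from: each chunk exists once in the acquisition loop and once more on its way to the processing loop.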

mcduff

See NI diagram below

03-22-2021 03:04 PM

Okay, that makes sense, thanks! That's a smart move; you reduce the risk of running into trouble during the acquisition.

03-17-2022 10:09 AM

This is an old topic, so let's see if I'm too late with my question.

I'm trying to use DAQmx Logging directly and I want the TDMS to save the RAW data and the scaling coefficients. But I don't see where I can tell DAQmx Logging to save the RAW data instead of the Doubles from my AI task.

Is there a settings node I have to find to switch between DAQmx Logging saving in doubles or RAW? DAQmx Configure Logging.vi does not have that option as an input...

Thanks for any help on this.

Henrik