Convert byte to integer, have trouble in type cluster

09-11-2019 12:03 PM

@GerdW wrote:

Hi feel,

you should ALWAYS show the display mode indicator on string constants, especially when you use data in hex display mode!

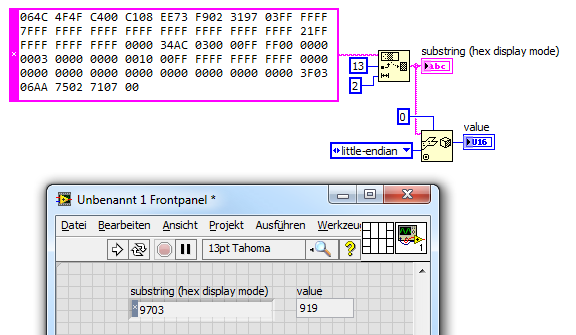

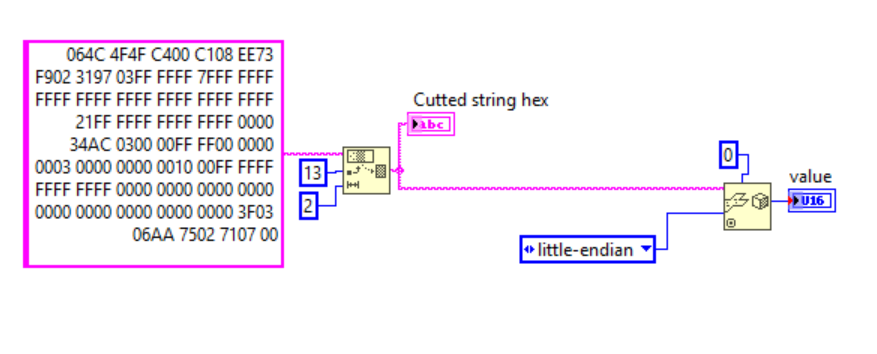

When you want the two bytes "97 03" from your (hex formatted) string you should get a substring starting at index 13 with a length of 2:

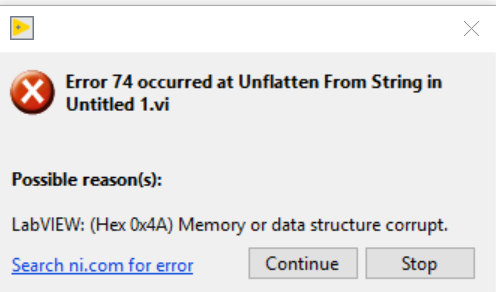
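As a text-language analogue of that String Subset step, here is a minimal Python sketch. The `substring` helper and the 16-byte packet are hypothetical, made up for illustration; only the offset (13) and length (2) come from the post. Once the string holds raw bytes rather than typed characters, offset 13 with length 2 returns exactly the two bytes 0x97 0x03.

```python
def substring(data: bytes, offset: int, length: int) -> bytes:
    """Mimic LabVIEW String Subset: `length` bytes starting at `offset`."""
    return data[offset:offset + length]


# Hypothetical 16-byte packet; only bytes 13 and 14 (0x97, 0x03) matter here.
packet = bytes(13) + bytes([0x97, 0x03]) + bytes(1)

field = substring(packet, 13, 2)
print(field.hex(" "))  # 97 03
```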

Thanks for your suggestion! It works, but an error popped up saying "Memory or data structure corrupt." I think this is the same problem described in the following link. Why don't you get the same error? I copied your code.

09-11-2019 12:45 PM

I suspect that you copied and pasted the data into the string constant, and what you are seeing is actually ASCII data, not the actual raw data string. In addition, you have a coercion dot on your indicator, which means that the type of the 0 constant wired into Unflatten From String is NOT a U16. I would also add that you probably want to use an I16, since temperatures can be negative.
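Both points can be seen in a short Python sketch. It is an illustration under stated assumptions, not LabVIEW code. The -5 reading is a made-up value. The `>` prefix selects big-endian, which is the default byte order of LabVIEW's Unflatten From String. If the protocol specifies little-endian, `<` would be the right choice instead. Pasting "97 03" as text gives five ASCII characters, not two bytes. A negative value decoded as unsigned wraps around to a large positive number.

```python
import struct

# Typed or pasted into a string constant in Normal display: five ASCII characters.
ascii_text = "97 03".encode("ascii")
# What a hex-display constant (or the instrument) actually holds: two raw bytes.
raw = bytes.fromhex("97 03")

print(len(ascii_text), ascii_text.hex(" "))  # 5 39 37 20 30 33
print(len(raw), raw.hex(" "))                # 2 97 03

# Hypothetical temperature of -5 stored as a big-endian 16-bit two's-complement value.
encoded = struct.pack(">h", -5)            # b'\xff\xfb'
as_u16 = struct.unpack(">H", encoded)[0]   # unsigned (U16): wraps to a large value
as_i16 = struct.unpack(">h", encoded)[0]   # signed (I16): recovers -5
print(as_u16, as_i16)                      # 65531 -5
```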

I'll still go back to my original suggestion that you use the larger cluster definition to retrieve most of the data. You do not have to decode all 99 bytes, but certainly the first 15 bytes provide useful information. You will probably end up using some of those other values down the road.

Mark Yedinak

Certified LabVIEW Architect

LabVIEW Champion

"Does anyone know where the love of God goes when the waves turn the minutes to hours?"

Wreck of the Edmund Fitzgerald - Gordon Lightfoot

09-11-2019 02:22 PM

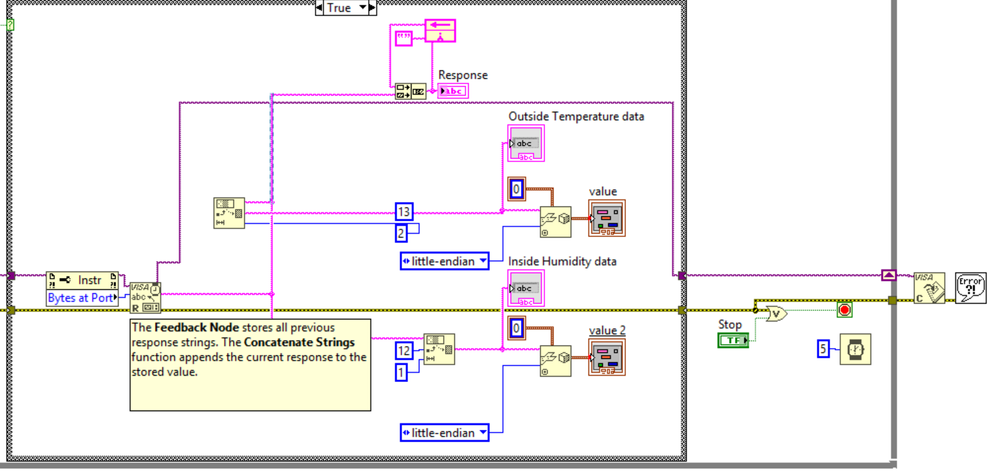

Mark,

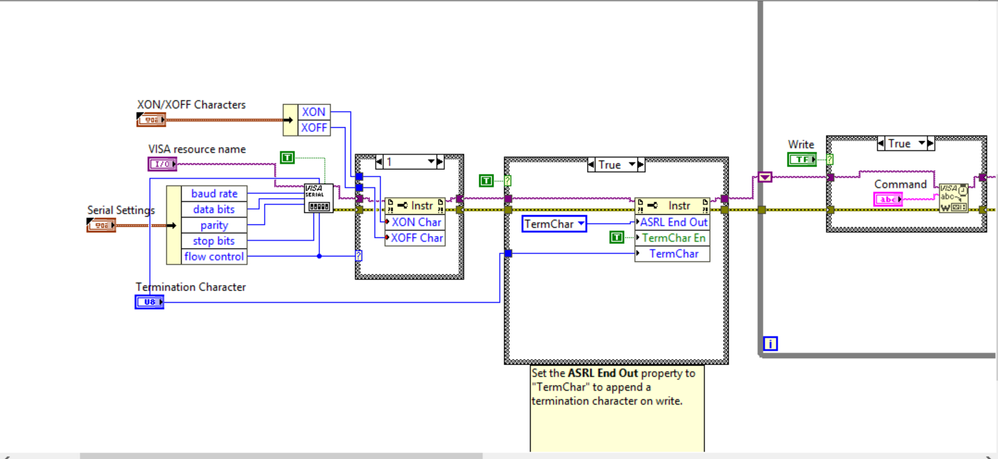

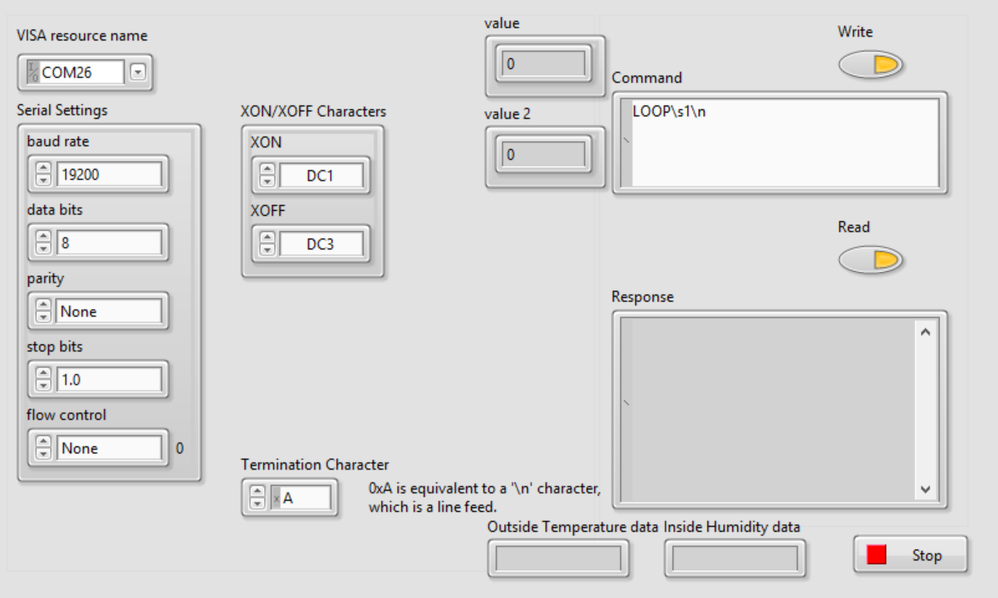

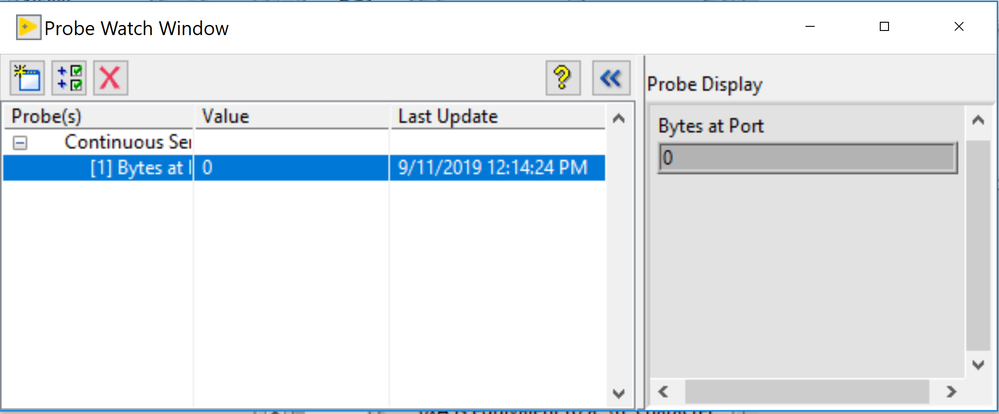

Thanks for your suggestions. After I fixed it, it worked. However, when I added this part to the Serial Continuous Read and Write example, it reported that there was no data to read. I checked Bytes at Port, and it is zero. The command control is in \n mode. I traced the I/O, and nothing looks wrong, but there is no data in the response. Do you know what's wrong?

09-11-2019 03:03 PM

09-11-2019 03:14 PM

First, I never use "Bytes at Port"; it is not reliable. I suspect your issue is a delay in the instrument's response. Bytes at Port returns the count at that moment. If you check it immediately after sending the command, the device has probably not had a chance to respond yet. Or you will get a count for a partial response because the device has not finished writing out the entire set of data. Your protocol should either define how many bytes a response contains or use a termination character. Use one of those to control your read.
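The timing problem is easier to see in a small Python simulation. `SimulatedPort` is a hypothetical stand-in written only for this example. It is not the VISA API and not a real driver. It models a device that starts answering a few polls after the command and then delivers data a few bytes at a time. The simulation shows three behaviors. Bytes at Port reads 0 immediately after the write. A read for the byte count the protocol defines succeeds. A read that asks for more bytes than the device will ever send, with no termination character, times out.

```python
from typing import Optional


class SimulatedPort:
    """Hypothetical stand-in for a serial port (no VISA, no hardware).

    After a command is written, the device starts replying `delay_ticks` polls
    later and delivers `bytes_per_tick` bytes per poll.
    """

    def __init__(self, reply: bytes, bytes_per_tick: int = 8, delay_ticks: int = 3):
        self.reply = reply
        self.bytes_per_tick = bytes_per_tick
        self.delay_ticks = delay_ticks
        self.tick = 0       # simulated time since the command was written
        self.consumed = 0   # bytes already returned by read()

    def _arrived(self) -> int:
        # Total reply bytes that have reached the input buffer so far.
        ticks = max(0, self.tick - self.delay_ticks)
        return min(len(self.reply), ticks * self.bytes_per_tick)

    def bytes_at_port(self) -> int:
        # Like Bytes at Port: a snapshot of what is buffered right now.
        return self._arrived() - self.consumed

    def read(self, count: int, termchar: Optional[bytes] = None,
             timeout_ticks: int = 100) -> bytes:
        """Wait until `count` bytes, or a single-byte `termchar`, has arrived;
        raise TimeoutError if neither happens within `timeout_ticks` polls."""
        for _ in range(timeout_ticks + 1):
            available = self.reply[self.consumed:self._arrived()]
            window = available[:count]
            if termchar is not None and termchar in window:
                end = window.index(termchar) + 1
                break
            if len(available) >= count:
                end = count
                break
            self.tick += 1
        else:
            raise TimeoutError("timeout expired before operation completed")
        self.consumed += end
        return available[:end]


reply = bytes(100)  # hypothetical 100-byte binary reply

port = SimulatedPort(reply)
print(port.bytes_at_port())  # 0: checked right after the write, nothing has arrived yet
print(len(port.read(100)))   # 100: read exactly the count the protocol defines

try:
    SimulatedPort(reply).read(1024, timeout_ticks=50)  # more than will ever arrive
except TimeoutError as err:
    print("read(1024) failed:", err)
```

In a real VISA session, the VISA timeout plays the role that `timeout_ticks` plays here.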

The other issue may be the command you are sending. Is the control set to "Normal Display" or to "'\' Codes Display"? If it is set to Normal Display, you are not actually sending the line feed character; you are sending the two characters '\' and 'n'. Make sure your control is set to '\' Codes Display so that you are truly sending the termination character.
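The byte-level difference between the two display modes can be shown in a few lines of Python. This is an analogy, not LabVIEW code. A `\n` typed in Normal Display becomes a backslash followed by the letter n. In '\' Codes Display, the same text becomes a single line feed byte.

```python
# Normal Display: typing \n produces two characters.
typed_in_normal_mode = b"\\n"        # backslash (0x5C) + 'n' (0x6E)
# '\' Codes Display: \n is interpreted as one line feed character.
interpreted_in_codes_mode = b"\n"    # 0x0A

print(typed_in_normal_mode.hex(" "))       # 5c 6e
print(interpreted_in_codes_mode.hex(" "))  # 0a
```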

09-11-2019 04:01 PM - edited 09-11-2019 04:04 PM

@GerdW wrote:

Hi feel,

using BytesAtPort is wrong 99.9% of the time. Especially when you enable the TermChar!

There are so many threads about serial port issues: read them...

Hi GerdW,

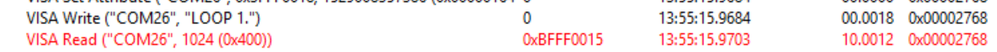

Thanks for your suggestions! I used BytesAtPort because when I used a fixed byte count, I got a timeout error. The byte count is 1024, so it should be enough.

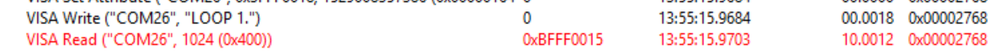

VISA: (Hex 0xBFFF0015) Timeout expired before operation completed.

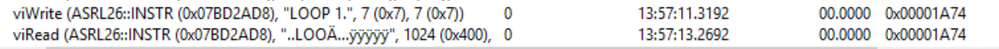

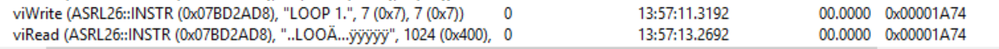

I traced the I/O both when running the code in LabVIEW and when testing in NI MAX. It worked well in NI MAX, and the configuration settings are the same.

09-11-2019 04:08 PM

Hi Mark,

Thanks for your suggestions! I checked the potential problems. I changed Bytes at Port to a fixed size of 1024, which is long enough. Then I added a 3000 ms wait in the read loop, but I still get a timeout error.

My control is in "\" mode. I checked it with the I/O trace. The commands I sent from LabVIEW and from the VISA test panel appear to be the same. The delay between write and read can be very short.

09-11-2019 06:14 PM

If you really want help, please post THE COMPLETE protocol definition. You are giving us bits and pieces and expecting us to know how to advise you on what you need to change. The protocol will define how the response is terminated, or it will tell you EXACTLY how many bytes you need to read. Simply specifying 1024 as the number of bytes to read without the correct termination character specified WILL result in the timeout you are seeing. If you continue to provide us with incomplete information, we will not be able to help you.

09-11-2019 06:50 PM

Sorry, I didn't notice this, and I'm sorry for being annoying. The protocol is attached. The LOOP command is defined at the beginning of Part X. The total size is 99 bytes. I set the read count to 1024 because that is the default in the NI VISA test panel, and it worked well there. The termination character is \n or \r for write and \n\r for read. I'm thinking that maybe I can set the termination character to \r so that I can use one TermChar for both write and read. But the problem is that the wake-up command is \n (Part V).

09-11-2019 09:22 PM

Read pages 12 and 13 of your manual.

It says the response to a Loop is the ACK and a 99 byte Loop packet. Sounds like you should be requesting 100 bytes!
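The arithmetic is 1 ACK byte plus 99 packet bytes, so the read should request 100 bytes. Below is a minimal Python sketch of checking and stripping the ACK. The post does not give the ACK value; using 0x06, the standard ASCII ACK code, is an assumption that should be confirmed against the manual. `split_loop_response` is a hypothetical helper. Because of the leading ACK, every byte of the packet sits one position later in the raw 100-byte read than it does within the packet itself.

```python
ACK = 0x06  # assumption: standard ASCII ACK; confirm against the manual
LOOP_PACKET_SIZE = 99
RESPONSE_SIZE = 1 + LOOP_PACKET_SIZE  # ACK byte + LOOP packet = 100 bytes


def split_loop_response(response: bytes) -> bytes:
    """Hypothetical helper: verify the leading ACK and return the 99-byte packet."""
    if len(response) != RESPONSE_SIZE:
        raise ValueError(f"expected {RESPONSE_SIZE} bytes, got {len(response)}")
    if response[0] != ACK:
        raise ValueError(f"expected ACK 0x{ACK:02X}, got 0x{response[0]:02X}")
    return response[1:]


# Hypothetical response: ACK followed by 99 placeholder bytes.
response = bytes([ACK]) + bytes(LOOP_PACKET_SIZE)
packet = split_loop_response(response)
print(len(packet))  # 99
```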

Also, you may want to disable the termination character in the serial configuration (VISA Configure Serial Port). Since you are reading binary data, a byte of hex 0A is just as likely to appear in the middle of a data packet as any other byte, and it does not signal the end of a packet as it would if you were reading ASCII data.
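This Python sketch shows how an ordinary value can produce a 0x0A byte. The 16-bit field holding 10 and the surrounding bytes are hypothetical, not taken from the manual. A read that stops at the termination character would cut this packet off after its third byte.

```python
import struct

# Hypothetical 16-bit field holding the value 10, in either byte order.
little = struct.pack("<h", 10)  # b'\x0a\x00'
big = struct.pack(">h", 10)     # b'\x00\x0a'
print(little.hex(" "), big.hex(" "))  # 0a 00 00 0a

# A hypothetical binary packet that contains such a field in the middle.
packet = bytes([0x01, 0x02]) + little + bytes([0x12, 0x34])
# With termination character 0x0A enabled, a read would stop after this many bytes:
cut = packet.index(b"\n") + 1
print(len(packet), cut)  # 6 3
```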