Convert bytes to integer, trouble with the type cluster

09-09-2019 01:00 PM

Hi,

I'm trying to convert the byte string I get from VISA Read into an integer. The string shown in the indicator is just random, unreadable characters. But from a Python program that runs successfully, I know it should look like this:

`b'\r\x06\x00\x1c\' \x03\xff\x7f\xd0\x02\xce\x02\x00\x00\x00\x00\x85t \x00\x00\x00\xfa\x02A\xff\xff\x00\xff\xff\x00\x00 \x00\xffK\xff\xff\xff\xff\xff\xff\xff\xff\x00\xff\xff\xff\xff\xff\xff\xff\xff\xff\x1c\'!\x03\xd1\x02\xd1\x02\xcf\x02\x00\x00\x00\x00\x84t \x00\x00\x00\xfa\x02AL\xff\x00\xff\xff\x00\x00 \x00\x00K\xff\xff\xff\xff\xff\xff\xff\xff\x00\xff\xff\xff\xff\xff\xff\xff\xff\xff\x1c\'"\x03\xd0\x02\xd1\x02\xd0\x02\x00\x00\x00\x00\x82t!\x00\x00\x00\xfa\x02AL\xff\x00\xff\xff\x00\x00!\x00\x00K\xff\xff\xff\xff\xff\xff\xff\xff\x00\xff\xff\xff\xff\xff\xff\xff\xff\xff\x1c\'#\x03\xd1\x02\xd1\x02\xd1\x02\x00\x00\x00\x00\x83t!\x00\x00\x00\xfa\x02AK\xff\x00\xff\xff\x00\x00!\x00\x00K\xff\xff\xff\xff\xff\xff\xff\xff\x00\xff\xff\xff\xff\xff\xff\xff\xff\xff\x1c\'$\x03\xd2\x02\xd2\x02\xd1\x02\x00\x00\x00\x00\x86t!\x00\x00\x00\xfa\x02AL\xff\x00\xff\xff\x00\x00!\x00\x00K\xff\xff\xff\xff\xff\xff\xff\xff\x00\xff\xff\xff\xff\xff\xff\xff\xff\xff\xff\xff\xff\xff\x0b\x8e\n'`

The value I need is at byte offset 12 and is 2 bytes long; it should decode to a decimal integer. I tried Type Cast and Unflatten From String as described in this topic:

https://forums.ni.com/t5/LabVIEW/Convert-3-Bytes-to-16-Bit-Decimal-Number/m-p/3666285#M1030530

My problem is that I'm confused by the type cluster: there is no description of how to define it. When I wire a numeric constant into it, it automatically shows up as a 32-bit integer (I32), but in that topic the elements are U8 and U16. I need a U16. Also, why are all the outputs zero?
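
A rough Python analogy for the type input, since the reference data came from a Python program: a `struct` format string plays the role of the type cluster, telling the decoder how many bytes each element takes and how to interpret them. The sketch assumes little-endian data (`<`) except where `>` is used for comparison; in LabVIEW, Type Cast always treats data as big-endian, while Unflatten From String has an endianness input. A numeric constant's type is changed from the default I32 through its right-click Representation menu (for example, to U16).

```python
import struct

# First eight bytes of the sample response above, used only for illustration.
sample = b"\r\x06\x00\x1c' \x03\xff"

# '<' = little-endian, 'B' = unsigned 8-bit (U8), 'H' = unsigned 16-bit (U16).
# A cluster of U8, U8, U16 corresponds to the format string '<BBH' (4 bytes).
u8_a, u8_b, u16 = struct.unpack_from("<BBH", sample, 0)

# Same two bytes read as a big-endian U16 ('>'), to show that byte order matters.
(u16_be,) = struct.unpack_from(">H", sample, 2)

# Declaring the field as a signed 32-bit integer ('i', like LabVIEW's I32)
# consumes 4 bytes instead of 2, so the decoded value changes completely.
(i32,) = struct.unpack_from("<i", sample, 2)

print(u8_a, u8_b, u16, u16_be, i32)  # 13 6 7168 28 539433984
```

The element type decides both how many bytes are consumed and how they are combined, which is why an I32 where a U16 was intended gives a very different number.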

09-09-2019 01:06 PM

Attach your actual VI where you attempted this. Be sure your string is saved in it as default data.

09-09-2019 01:19 PM

09-09-2019 03:18 PM

Hi GerdW,

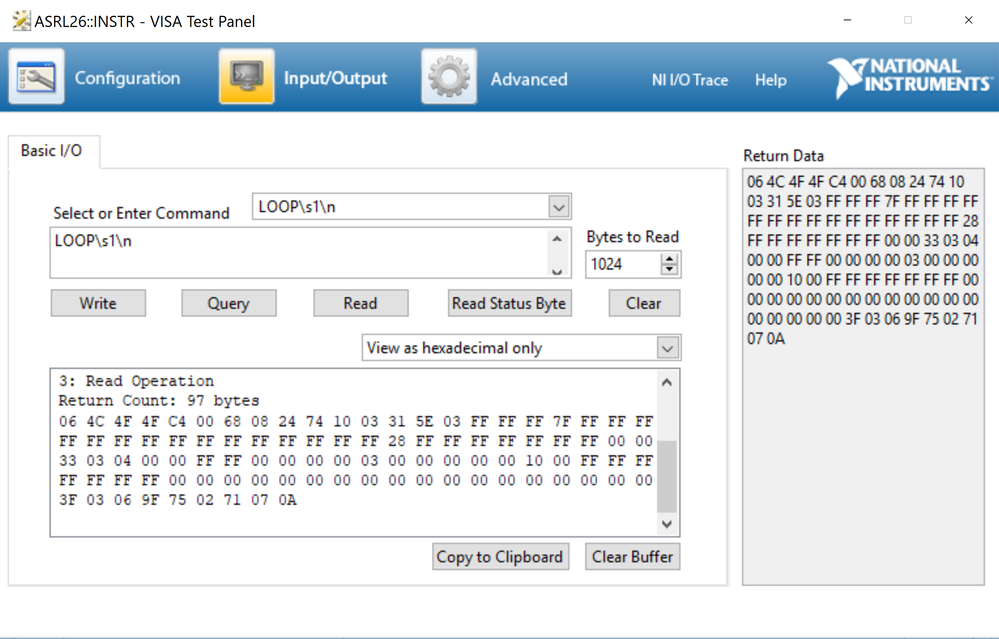

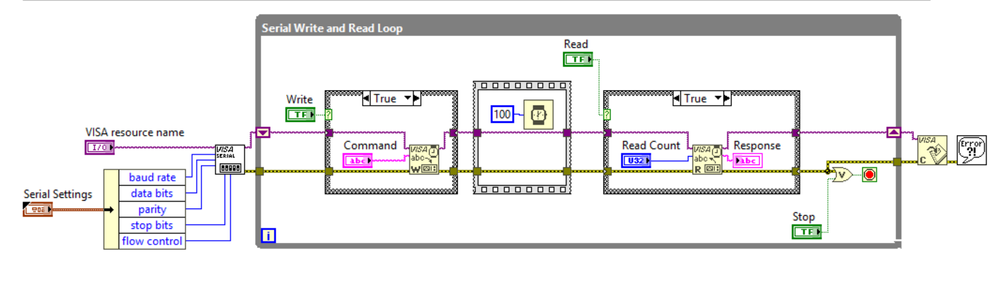

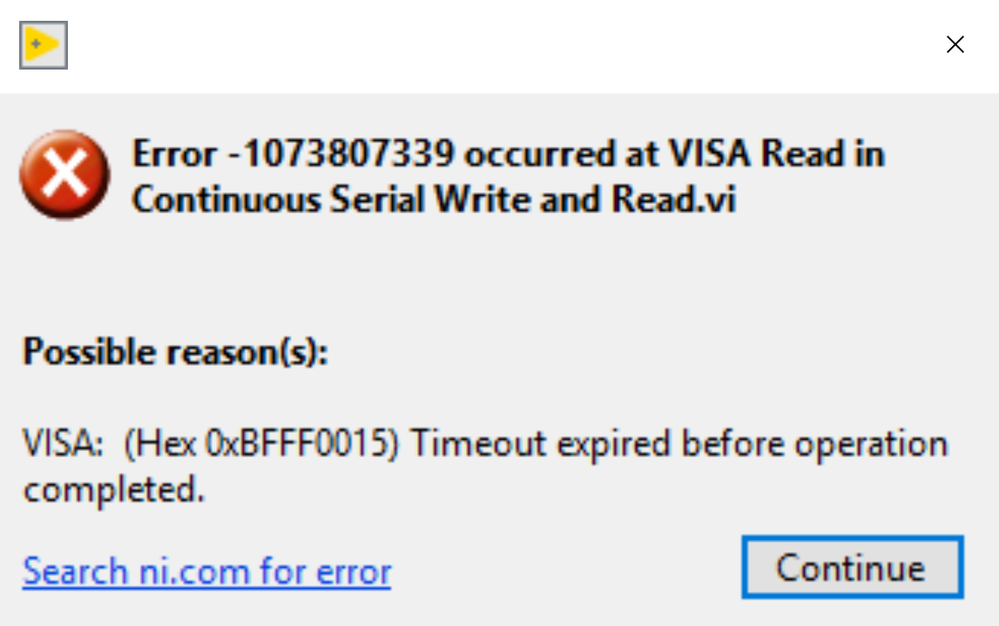

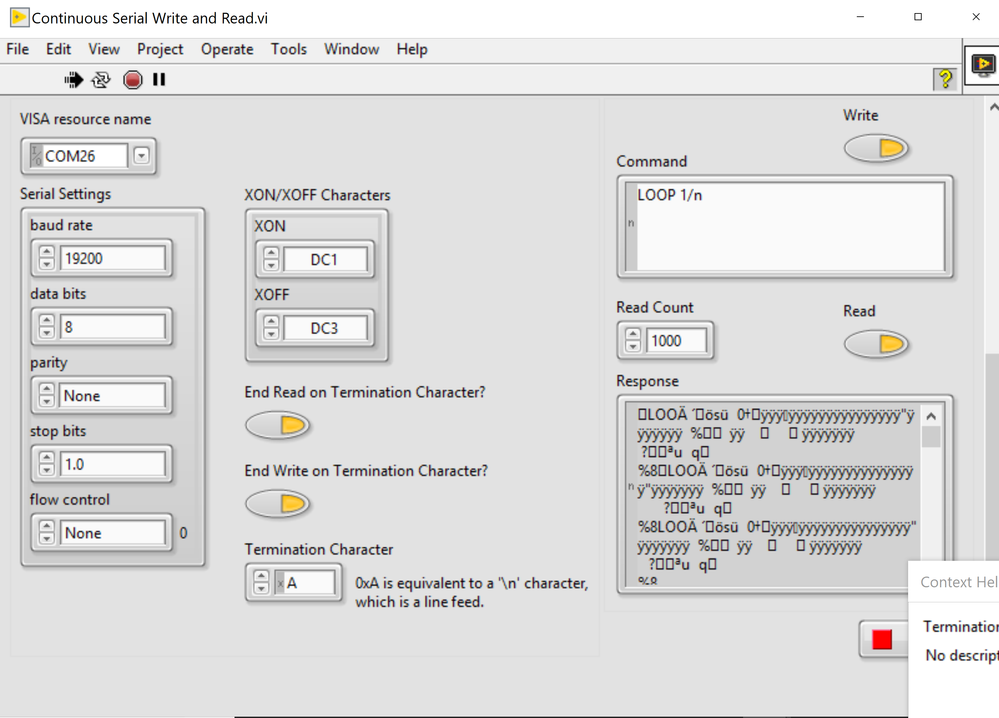

Thanks so much for your reply! I get a timeout error when I use the serial read and write example VI shown in the screenshots. I can communicate with the sensor fine in NI MAX, so I'm guessing nothing is wrong with the hardware. Is there anything I could change?

09-09-2019 03:23 PM

Sorry, I forgot to rename the screenshots. I'm wondering whether the timeout happens because I didn't set the termination character.
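
One way to reason about the timeout is a plain-Python model of when a VISA-style read returns: once the requested byte count has arrived, once the termination character is seen (if termination is enabled), or, failing both, when the timeout expires. `simulated_visa_read` is a hypothetical helper, not part of VISA or any library, and the reply bytes are made up. It also shows why a termination character can be risky for binary data: a 0x0A data byte ends the read early.

```python
def simulated_visa_read(incoming, byte_count, termchar=None):
    """Hypothetical model of when a VISA-style read returns (not the VISA API).

    incoming   -- bytes the instrument actually sent
    byte_count -- number of bytes the read asks for
    termchar   -- a single termination byte, or None when termination is disabled
    """
    received = bytearray()
    for value in incoming:
        received.append(value)
        # With termination enabled, the read ends at the first matching byte.
        if termchar is not None and bytes([value]) == termchar:
            return bytes(received), "termination character"
        # Otherwise it ends once the requested number of bytes has arrived.
        if len(received) == byte_count:
            return bytes(received), "byte count reached"
    # The instrument stopped sending before either condition was met.
    return bytes(received), "timeout"


# Made-up binary reply that happens to contain a 0x0A byte in the middle.
reply = b"\x06\x31\x0a\x93\x0b\x8e\n"
print(simulated_visa_read(reply, 7, termchar=b"\n"))  # stops after 3 bytes
print(simulated_visa_read(reply, 7))                  # all 7 bytes
print(simulated_visa_read(reply, 100))                # asks for more than was sent
```

In this model, asking for more bytes than the device sends (with termination disabled) is what produces a timeout, and so does a device that never answers at all.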

09-09-2019 03:27 PM

@feelsomoon wrote:

Hi GerdW,

Thanks so much for your reply! I get a timeout error when I use the serial read and write example VI shown in the screenshots. I can communicate with the sensor fine in NI MAX, so I'm guessing nothing is wrong with the hardware. Is there anything I could change?

No idea, because you didn't attach your actual VI, just a couple of pictures of a test panel and a block diagram. The block diagram picture doesn't show us what you have typed into that Command control.

Did your Command include the line feed termination character like your test panel shows?

09-09-2019 04:18 PM

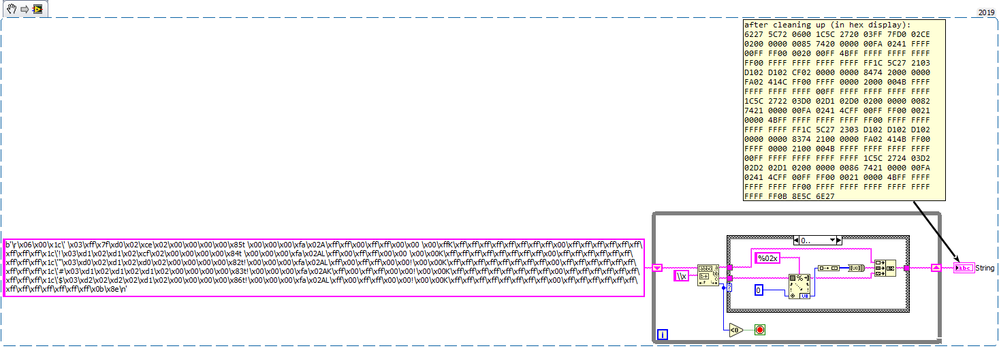

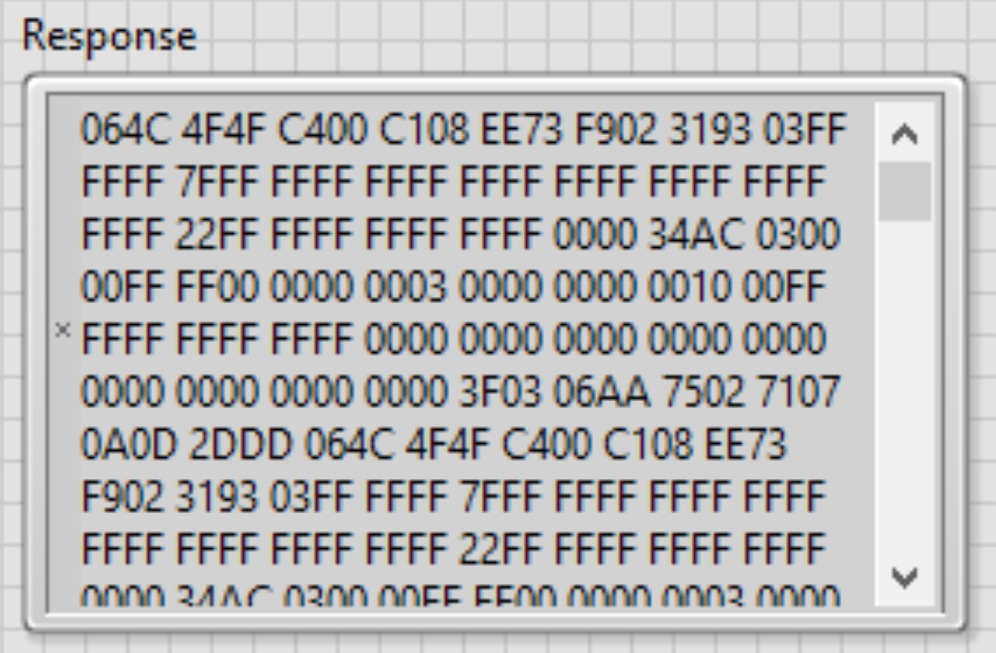

Yes, I did. I tried the original example and it worked, but the data returned in the indicator looks like what is shown in the screenshots. Can I add your code after the serial read VI?

09-09-2019 04:22 PM

Sorry to bother you again. Just to clarify: I'm confused because the data returned in the indicator doesn't look like the data in the NI test panel, so I'm not sure whether I can use Match Pattern like that.

09-09-2019 04:27 PM

Right-click your Response string control and select "Hex Display". Then it will look almost like the display in the VISA Control panel. The only difference is that the VISA Control panel shows each 8-bit value as an independent 2-character hex value, while the LabVIEW string display groups two such 8-bit values into one 4-character hex value, but that is just cosmetic.
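
The point that the two displays differ only cosmetically can be checked in Python with `bytes.hex` (its `sep` and `bytes_per_sep` arguments need Python 3.8 or later): the same bytes printed one per group and two per group, then parsed back to identical bytes. Upper-case digits are chosen here only for readability.

```python
# First eight bytes of the sample response from the question.
response = b"\r\x06\x00\x1c' \x03\xff"

# VISA Control panel style: each byte as its own 2-digit hex value.
per_byte = response.hex(" ").upper()

# LabVIEW "Hex Display" style: two bytes grouped into one 4-digit group.
per_word = response.hex(" ", 2).upper()

print(per_byte)  # 0D 06 00 1C 27 20 03 FF
print(per_word)  # 0D06 001C 2720 03FF

# Both text forms describe exactly the same bytes.
assert bytes.fromhex(per_byte) == bytes.fromhex(per_word) == response
```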

09-09-2019 04:49 PM

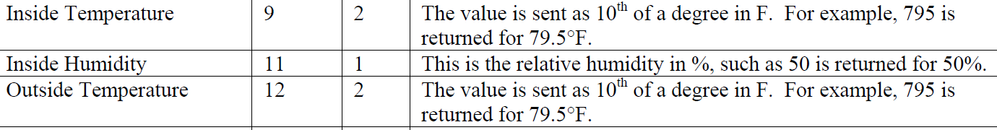

Thank you, rolfk! I never realized this was just a display issue. May I ask another silly question? I'm wondering how 8-bit values combine into one integer. For example, I want the Outside Temperature at offset 12 (size 2), which shows as 3193 in the hex display. Should it be 0x31 = 49 and 0x93 = 147, so 3193 = 49 + 147 = 196? That seems wrong, because the actual temperature is much higher than this.
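
Each byte is one base-256 digit, so two bytes combine as `first * 256 + second` (big-endian) or `second * 256 + first` (little-endian), never as a sum. Which order applies depends on the device's protocol, and any scaling the sensor documentation specifies still has to be applied to the raw value afterwards. A short check with the two bytes from the question:

```python
first, second = 0x31, 0x93   # the two bytes shown as "3193" in the hex display

wrong = first + second                  # 49 + 147: adding bytes loses their place value
big_endian = first * 256 + second       # 0x3193: first byte is the most significant
little_endian = second * 256 + first    # 0x9331: first byte is the least significant

# int.from_bytes performs the same combination for either byte order.
assert big_endian == int.from_bytes(bytes([first, second]), "big")
assert little_endian == int.from_bytes(bytes([first, second]), "little")

print(wrong, big_endian, little_endian)  # 196 12691 37681
```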

Also, in my code, should I split the raw data first and then convert each piece separately? I still don't know which VI to use or how to set the type input of Type Cast and Unflatten From String.
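
Both approaches can work. A Python sketch of the two options, roughly mirroring String Subset (offset 12, length 2) followed by a conversion, versus decoding a U16 directly at the offset. `OFFSET`, `SIZE`, `u16_by_slicing`, `u16_in_place`, and the packet contents are hypothetical, and little-endian is assumed as the default only for illustration.

```python
import struct

OFFSET = 12   # byte offset of the value (from the question)
SIZE = 2      # a U16 occupies 2 bytes


def u16_by_slicing(packet, byte_order="little"):
    # Step 1: cut out the 2 bytes (like String Subset with offset 12, length 2).
    field = packet[OFFSET:OFFSET + SIZE]
    # Step 2: interpret them as one unsigned 16-bit integer.
    return int.from_bytes(field, byte_order)


def u16_in_place(packet, byte_order="little"):
    # One step: read a U16 directly at the offset, without a separate slice.
    fmt = "<H" if byte_order == "little" else ">H"
    (value,) = struct.unpack_from(fmt, packet, OFFSET)
    return value


# Hypothetical packet: 12 filler bytes followed by 0x31 0x93 at offset 12.
packet = bytes(12) + b"\x31\x93"
print(u16_by_slicing(packet), u16_in_place(packet))                # 37681 37681
print(u16_by_slicing(packet, "big"), u16_in_place(packet, "big"))  # 12691 12691
```

One difference worth knowing: if the reply is shorter than expected, slicing silently yields fewer than 2 bytes and `int.from_bytes` still returns a number, whereas `struct.unpack_from` raises `struct.error`. If the reply carries leading bytes that are not part of the record, the offset has to be measured from where the record actually starts.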

Sorry if these questions are too basic; I'm really new to this. Thank you for your patience.