1 Msample DAQmx analog output buffer size limit

Solved! 10-27-2021 07:23 AM

Is there a way around the 1 Msample buffer size limit when generating analog signals with NI DAQmx boards (here specifically the USB-6343)?

From what I could understand from the documentation, the analog output buffers are not stored entirely on the board; LabVIEW streams them on demand from computer memory to smaller buffers on the board. Under these circumstances, and as long as the aggregate throughput of the analog signals stays well below USB 2.0 bandwidth, there should in principle be no hard limit on the buffer size.

10-27-2021 07:45 AM

You've interpreted the docs correctly. There isn't really a hard limit. Have you tried it and had a problem?

Note that larger buffers lead to more lag time between when you write data to the task and when it shows up as an output signal.

There are ways to influence this with DAQmx properties like "Data Transfer Request Condition", and I think there are some special properties specifically for USB devices. But it can get pretty tricky to combine high speed generation with low lag times.

-Kevin P

11-04-2021 07:04 AM

Thanks for your answer! I double-checked everything and the problem actually lay in the default timeout of "DAQmx Wait Until Done": it is 10 s, which at my clock rate of 100 kHz corresponds to exactly 1 Msample.

Regarding lag time on buffer load, I would actually expect that for signal generation the latency should only increase up to the capacity of the on-board buffer and not past this point, since excess data should only be transferred on demand by the driver.
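The arithmetic behind this coincidence is easy to check: a finite generation of N samples per channel at clock rate f lasts N / f seconds, so the wait timeout has to exceed that duration. The sketch below is plain Python and touches no hardware; the helper names (`generation_duration_s`, `wait_timeout_s`) and the 2 s margin are assumptions made for illustration, not part of DAQmx.

```python
def generation_duration_s(n_samples: int, sample_rate_hz: float) -> float:
    """Time needed to clock out n_samples per channel at sample_rate_hz."""
    if sample_rate_hz <= 0:
        raise ValueError("sample_rate_hz must be positive")
    return n_samples / sample_rate_hz


def wait_timeout_s(n_samples: int, sample_rate_hz: float,
                   margin_s: float = 2.0) -> float:
    """Timeout covering the whole generation plus a margin.

    The 2 s default margin is an arbitrary illustrative choice.
    """
    return generation_duration_s(n_samples, sample_rate_hz) + margin_s


if __name__ == "__main__":
    # Values from the thread: 1 Msample at 100 kHz lasts as long as the
    # 10 s default timeout, so anything larger times out.
    print(generation_duration_s(1_000_000, 100_000.0))
    # A 2 Msample generation at the same rate needs a longer timeout.
    print(wait_timeout_s(2_000_000, 100_000.0))
```

The computed value would then be wired to the timeout input of DAQmx Wait Until Done instead of relying on the default.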

However, in practice, I actually see a clear linear increase in loading time (at least in the range 1 to 16 Msamples). Would this loading time be related to the driver allocating computer memory and copying data? Or would the onboard buffers be larger than 16 Msamples? I could not find this information in the user guide of the USB-6343...

11-04-2021 07:57 AM

> Regarding lag time on buffer load I would actually believe that for signal generation the latency should only increase up to the capacity of the on board buffer and not past this point since excess data should only be transferred on demand by the driver.

Well, no, not necessarily. If you write too much data too quickly to your task, the device's hardware FIFO buffer will fill up and then the task buffer will *also* start to fill up because DAQmx can't deliver it to the board until the board has room for it. So you can for sure build up a total latency that's (nearly) the sum of the FIFO size and your task buffer size.

And that probably explains the linear increase in latency you see. Your device is spec'ed for only an 8k AO FIFO, so the vast majority of your samples are getting backlogged in the task buffer.

-Kevin P
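The "sum of FIFO size and task buffer size" estimate above can be turned into numbers. This is a minimal sketch, assuming the "8k" FIFO means 8192 samples and reusing the 100 kHz clock rate and 1 Msample buffer mentioned earlier in the thread; the function name is hypothetical.

```python
def worst_case_latency_s(task_buffer_samples: int, fifo_samples: int,
                         sample_rate_hz: float) -> float:
    """Upper bound on write-to-output latency when both buffers are full.

    Every queued sample must be clocked out before a newly written
    sample reaches the output, so latency = queued samples / rate.
    """
    return (task_buffer_samples + fifo_samples) / sample_rate_hz


if __name__ == "__main__":
    fifo = 8192            # assumption: "8k" read as 8192 samples
    task_buffer = 1_000_000
    rate = 100_000.0       # Hz, clock rate quoted in the thread
    total = worst_case_latency_s(task_buffer, fifo, rate)
    fifo_share = fifo / (task_buffer + fifo)
    print(f"worst-case latency: {total:.3f} s")
    print(f"share held in the on-board FIFO: {fifo_share:.2%}")
```

With these assumed numbers the on-board FIFO accounts for less than 1 % of the queued samples, which is why the latency scales almost linearly with the task buffer size.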

11-04-2021 08:20 AM

11-04-2021 09:22 AM

Sorry, I auto-corrected you when I shouldn't have. You did refer to "loading time". Based on an awful lot of threads where terms are used loosely, carelessly, and wrongly, I did an auto-correct and assumed you were talking about latency from the time you write until the time the signal shows up as output.

Can you post the code in question and clearly identify where you start and stop your measurement of "loading time"? Also, what are some of the times you measure for different size writes? It's one thing to have a linear relationship, but I'm also wondering whether the slope is large or small (thus whether the total time is "significant" or not.)

I also have a vague awareness that USB devices use a 3rd "USB transfer" buffer, but haven't explored or memorized the implications in any detail. Sorry, can't seem to find any useful articles just now on the site.

-Kevin P

11-04-2021 09:40 AM - edited 11-04-2021 09:42 AM

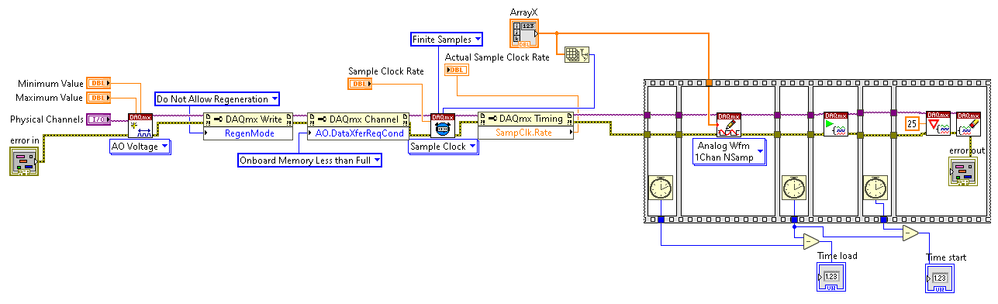

No problem, and thank you for your time and dedication in trying to clarify this! I also suspect that there are several intermediary buffers involved which bloat the total buffer loading time. The loading times I measured are actually quite high for my application. We are talking about 150 ms for a 16 Msample buffer, down to 17 ms for 1 Msample. The minimum loading time I measured (for anything below 500 ksample) is around 8 ms and probably reflects USB driver communication and OS thread latency. Task start time was more or less constant. I have attached a snapshot of the simple setup used for the time measurements.
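To put a slope on the linear trend, one can draw a straight line through the two measurements quoted above (1 Msample in 17 ms, 16 Msample in 150 ms). This is only a rough sketch: two points cannot confirm linearity, and the helper names are hypothetical.

```python
def fit_line(p0, p1):
    """Return (slope, intercept) of the line through two (x, y) points."""
    (x0, y0), (x1, y1) = p0, p1
    slope = (y1 - y0) / (x1 - x0)
    intercept = y0 - slope * x0
    return slope, intercept


def predict_load_ms(n_msamples, slope, intercept):
    """Load time predicted by the two-point linear model, in ms."""
    return intercept + slope * n_msamples


# Measurements from the post: (buffer size in Msample, load time in ms)
slope, intercept = fit_line((1.0, 17.0), (16.0, 150.0))

if __name__ == "__main__":
    print(f"slope:     {slope:.2f} ms per Msample")
    print(f"intercept: {intercept:.2f} ms")
    print(f"model at 8 Msample: {predict_load_ms(8.0, slope, intercept):.1f} ms")
```

The line has a slope of roughly 8.9 ms per Msample and an intercept of about 8.1 ms, close to the roughly 8 ms floor reported for small buffers. The model is crude, though: the post reports a flat 8 ms for anything below 500 ksample, which a single straight line does not reproduce.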

11-04-2021 12:36 PM

I agree with your method for isolating load time. Now let me ask an annoying question. What's the nature of the overall app that makes you sensitive to something on the order of 100 msec? Are you *sure* it matters *really*? I know that other aspects of task config often require something in the 10's of msec, so even 150 msec isn't something I immediately think of as "very high".

If so, here's my educated guess and a possible workaround.

Guess: it has mostly to do with allocating the buffer memory (and possibly initializing values there?).

Workaround: Make an explicit call to DAQmx Configure Output Buffer before sending your array to DAQmx Write. I'd expect the Buffer config to take most of the time and the Write to take quite a bit less than you're seeing now. This approach could help if you're able to know the needed buffer size during a time-insensitive part of your app (such as initialization & config), but not yet able to know the signal content you need to write into it. Then you only deal with the hopefully-faster write-only part during the time-sensitive period.

Alternate workaround: One can also run a finite task that generates more samples than the buffer size. But you have to write incremental data to the task repeatedly to keep it going. So another way to speed up the buffer allocation/loading time is to live with a smaller buffer and a more complex method for feeding data to the task.

-Kevin P
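The alternate workaround (a small buffer fed incrementally) can be sketched independently of the hardware. In the Python sketch below, `write_chunk` is a hypothetical callback standing in for whatever performs the actual driver write (DAQmx Write in LabVIEW); it is assumed to block until the task buffer has room. On real hardware the task would typically also need regeneration disabled so that old data is not replayed, which this sketch does not cover.

```python
from typing import Callable, Iterator, Sequence


def iter_chunks(samples: Sequence[float],
                chunk_size: int) -> Iterator[Sequence[float]]:
    """Yield consecutive slices of at most chunk_size samples."""
    if chunk_size <= 0:
        raise ValueError("chunk_size must be positive")
    for start in range(0, len(samples), chunk_size):
        yield samples[start:start + chunk_size]


def stream_waveform(samples: Sequence[float], chunk_size: int,
                    write_chunk: Callable[[Sequence[float]], None]) -> int:
    """Feed a long waveform to a task in small pieces.

    write_chunk is a hypothetical stand-in for the driver write call and
    is assumed to block until the (small) task buffer can accept data.
    Returns the total number of samples handed over.
    """
    written = 0
    for chunk in iter_chunks(samples, chunk_size):
        write_chunk(chunk)
        written += len(chunk)
    return written


if __name__ == "__main__":
    # Dry run: collect the chunks in a list instead of writing to a device.
    sent = []
    total = stream_waveform([0.0] * 10, 4, sent.append)
    print(total, [len(c) for c in sent])
```

The trade-off is the one described above: the buffer to allocate and preload stays small, but the application has to keep supplying data fast enough that the generation never runs dry.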

11-04-2021 01:06 PM

We are driving four optical components (galvanometers, OTLs) of a camera-based microscope with four tightly synchronized analog signals generated by the board. We need to update the driving signals before each new frame (hopefully predictably but possibly on the fly). Any delay in reloading the buffer will introduce breaks in the acquisition and will limit the achievable frame rate (which is critical for the imaging).

Now that I have verified that there is no hard limit on the buffer size, I guess that a practical approach would be to precompute and load the required control signals for at least tens or even hundreds of frames and only update the buffers when required (and also reduce the clock rate to the strict minimum required to ensure signal integrity).

I will also check your two suggestions. The first one is easy to check but I was totally unaware that the buffer of a running task could be reloaded! Would you have an example illustrating this?

Thanks a lot!

11-05-2021 06:41 AM

So I have tried your first suggestion (calling DAQmx Configure Output Buffer beforehand) but it did not shorten the subsequent buffer loading time (the call to the configuration VI itself was measured at <1 ms, please see the new test VI below).

However, with this setup I tried allocating a 16 Msample buffer while only sending 1 Msample, and from this I could estimate that allocating the (16 Msample) buffer alone takes some 30 ms, with the remaining 120 ms reflecting all other data transfer.
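A quick sanity check on those 120 ms: if they were spent pushing all 16 Msample over the USB link, the implied data rate can be compared with the USB 2.0 High-Speed signaling rate of 480 Mbit/s. The sketch below assumes 1 Msample = 10^6 samples and 2 bytes per sample (a 16-bit DAC code); the thread does not state the actual in-memory sample format, so both are labeled assumptions.

```python
USB2_HIGH_SPEED_BITS_PER_S = 480e6  # nominal USB 2.0 High-Speed signaling rate


def implied_rate_bytes_per_s(n_samples: float, seconds: float,
                             bytes_per_sample: int) -> float:
    """Data rate needed to move n_samples in the given time."""
    return n_samples * bytes_per_sample / seconds


# Numbers from the post: 150 ms total, about 30 ms of it for allocation.
transfer_ms = 150 - 30
n_samples = 16e6          # assumption: 1 Msample = 1e6 samples
bytes_per_sample = 2      # assumption: 16-bit samples

rate = implied_rate_bytes_per_s(n_samples, transfer_ms / 1000, bytes_per_sample)
usb_limit = USB2_HIGH_SPEED_BITS_PER_S / 8  # bytes per second, before overhead

if __name__ == "__main__":
    print(f"implied rate: {rate / 1e6:.1f} MB/s")
    print(f"USB 2.0 ceiling: {usb_limit / 1e6:.1f} MB/s")
```

Under these assumptions the implied rate (about 267 MB/s) is several times the 60 MB/s ceiling of USB 2.0, which suggests that most of the 120 ms is spent on the host side (copying or converting data into the task buffer) rather than on the USB link. That would be consistent with the earlier picture of data being streamed to the device on demand.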

I guess that we will have to live with these transfer times which are, as you rightly pointed out, not that large!