Hard Inline VI

Submitted by SteveChandler on 07-18-2011 12:29 PM

35 Comments

Status: New

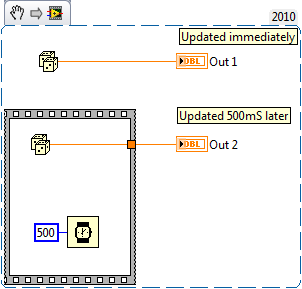

Inlining a subVI is almost, but not quite, the same as placing the contents of the VI directly on the caller's block diagram.

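The difference is that all outputs of a VI (inlined or not) become available at the same time, when the VI completes execution. Code placed directly on the caller's diagram has no such boundary, so each result is available as soon as it is computed.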
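LabVIEW is graphical, so the following is only a text-language analogy, not LabVIEW code. It is a minimal Python sketch of the timing difference, assuming a VI with two independent internal paths that start together; the function names, output names, and latency numbers are all hypothetical and chosen for illustration.

```python
from typing import Dict


def subvi_output_times(start: float, latencies: Dict[str, float]) -> Dict[str, float]:
    """Model a subVI boundary: every output is released when the whole VI finishes.

    Assumption: the internal paths run in parallel, so the VI finishes when
    its slowest path finishes.
    """
    finish = start + max(latencies.values())
    return {name: finish for name in latencies}


def hard_inline_output_times(start: float, latencies: Dict[str, float]) -> Dict[str, float]:
    """Model the proposed "hard inline": each output is released as soon as
    its own path finishes, as if the code sat on the caller's diagram."""
    return {name: start + cost for name, cost in latencies.items()}


# Hypothetical VI: one output takes 1 time unit to compute, the other takes 5.
latencies = {"fast_out": 1.0, "slow_out": 5.0}

print(subvi_output_times(0.0, latencies))
print(hard_inline_output_times(0.0, latencies))
```

In this model, a downstream node wired only to `fast_out` would have to wait for `slow_out` in the subVI case, but could start earlier in the hard-inline case.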

Set the VI above to inlined and use it in another VI.

The idea is an additional inline setting as follows.

Now the VI will truly be inlined, as if it were not a subVI at all.

The name "hard inline" is just a suggestion; the setting could be called something else.

=====================

LabVIEW 2012

