Contact Information

University: University of Texas at Austin

Team Members (with year of graduation):

Aimon Allouache - May 2014

Scott Medellin - Aug 2014

Eric Root - May 2014

Ansel Staton, Project Lead - May 2014

Faculty Advisers:

Dr. Raul Longoria, Professor

Email Address:

Submission Language:

English

Project Information

Title: The Human Aware Autonomous Mobile Robot

Description:

For this project, our team designed, built, and programmed a human-aware autonomous mobile robot (HAAMR). Specifically, our robot was developed with the ability to avoid a person who is crossing its path of motion. This action is carried out autonomously by the HAAMR, which uses depth sensors to recognize the obstacle.

Products:

LabVIEW and myRIO, along with Kinect interface for LabVIEW

The Challenge:

The HAAMR was designed to add to the growing research in the field of human-robot interaction (HRI). This is an active field of research that seeks to give robots behavioral traits that allow their interaction with humans to be more natural and comfortable. Most importantly, autonomous robots need the ability to avoid static and dynamic obstacles such as humans in a safe and repeatable way. Our algorithms allow the robot to navigate to a desired target point while dodging a dynamic obstacle.

The Solution:

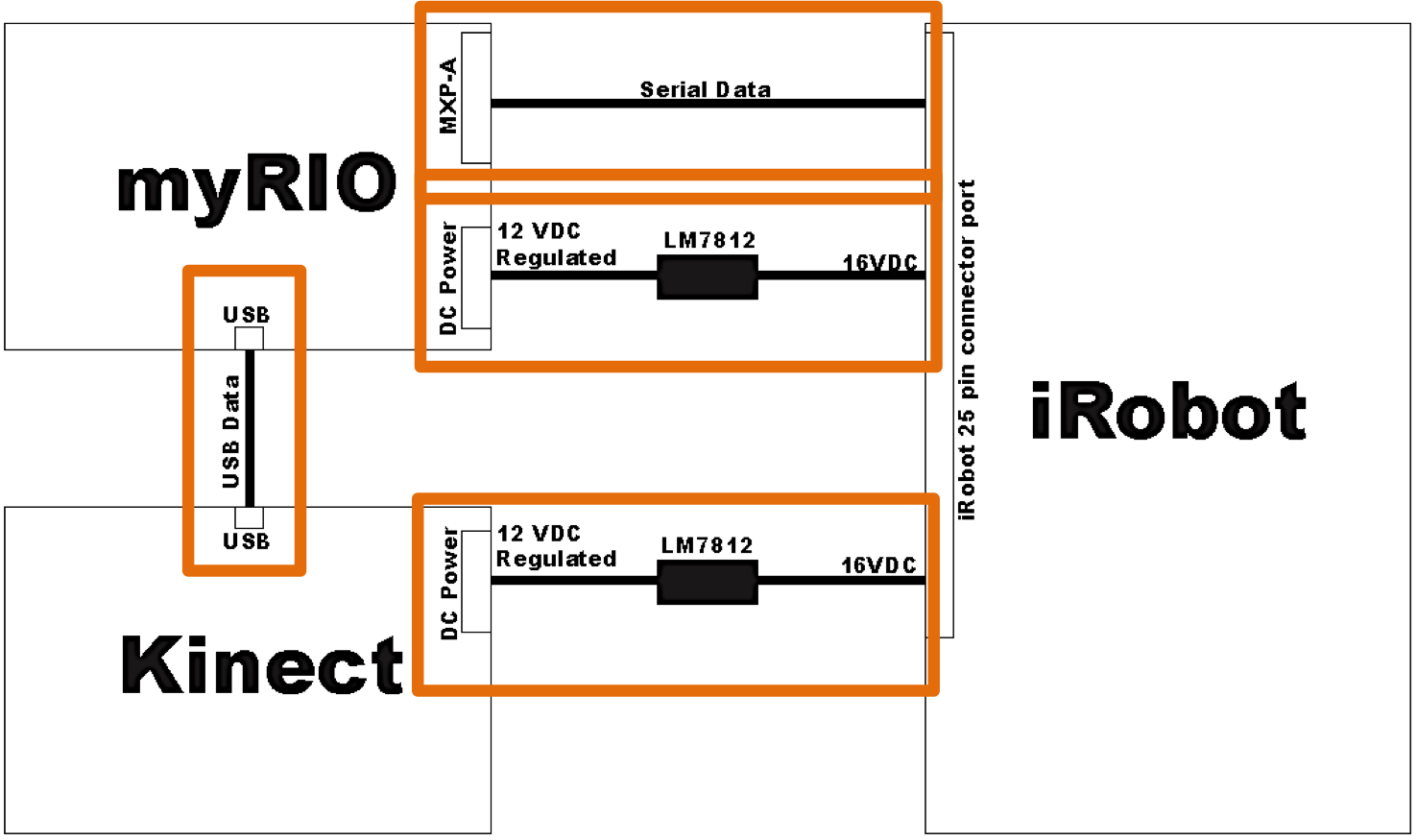

This robot was designed using hardware provided by both National Instruments and our project sponsor, Dr. Raul Longoria. The hardware consists of an iRobot Create differentially steered robot, an Xbox Kinect sensor for gathering visual data, and an NI myRIO, a development board and real-time processor created by National Instruments. The myRIO analyzes the data provided by both the iRobot Create and the Kinect sensor, and executes the algorithms that we designed to decide how the robot acts. A simple electrical system was designed to power the myRIO and the Kinect sensor directly from the iRobot Create's battery; the myRIO communicates with the iRobot Create through the myRIO's UART serial ports.

Figure 1: human-aware autonomous mobile robot Block Diagram

Figure 2: Prototype of the System

While many algorithms for static obstacle avoidance have been developed and studied extensively, dynamic obstacle avoidance remains a less mature problem. Our algorithm estimates the speed at which an object is moving relative to the robot, and turns the robot away from the obstacle at a rate proportional to that speed. We chose to force the robot to always turn in the direction opposite to the object's motion, so that it consistently passes behind the object; this prevents the robot from tripping the person it is interacting with.
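
The proportional turn-away rule described above can be sketched in a few lines of Python (the project itself was written in LabVIEW). This is only an illustration: the function name, the gain, the rate limit, and the sign convention are assumptions and do not come from the team's code.

```python
def avoidance_turn_rate(obstacle_angular_velocity, gain=1.5, max_rate=1.0):
    """Return a turn rate (rad/s) that steers the robot behind a crossing obstacle.

    obstacle_angular_velocity is the rate of change of the obstacle's bearing
    relative to the robot (rad/s). Assumed convention: positive means the
    obstacle is moving counterclockwise (to the robot's left).
    gain and max_rate are hypothetical tuning values.
    """
    # Turn opposite to the obstacle's motion, in proportion to its speed.
    rate = -gain * obstacle_angular_velocity
    # Clamp so a fast obstacle cannot command an unreasonable turn.
    return max(-max_rate, min(max_rate, rate))
```

With this convention, an obstacle drifting to the left produces a turn to the right, so the robot passes behind it rather than cutting in front.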

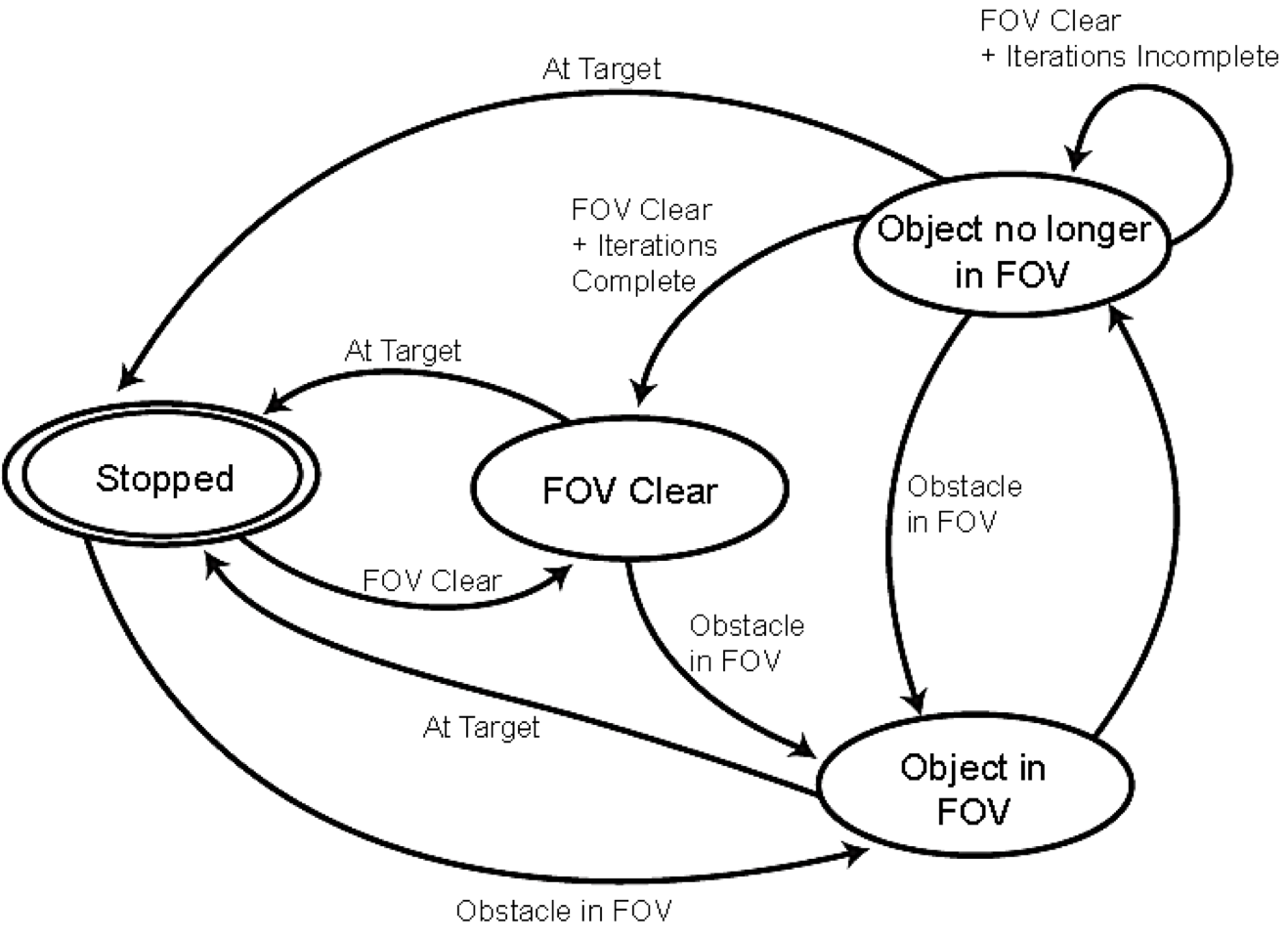

We chose to model our robot's control architecture as a simple finite state machine with four states. The robot begins and ends in a stopped state. When commanded to move to its final target (using the myRIO's external button0 as a trigger), the robot begins moving and turning towards its target destination. If the Kinect's depth sensor detects an obstacle within a threshold distance of the robot, the robot enters an obstacle avoidance state. Once the obstacle has passed out of the robot's field of view, the robot enters a state in which it maintains a memory of the object's existence for a brief time (2.5 s). After this time has elapsed, the robot returns to its earlier state, in which it moves directly to the target.

Figure 3: Finite State Machine
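
The four states and their transitions can be written out as a small Python sketch. The state names, the input flags, and the function signature are hypothetical. The transition from the memory state back to avoidance when an obstacle reappears is an assumption, since the description above does not cover that case.

```python
from enum import Enum, auto


class State(Enum):
    STOPPED = auto()
    GO_TO_TARGET = auto()
    AVOID = auto()
    REMEMBER = auto()


MEMORY_SECONDS = 2.5  # memory duration given in the description


def next_state(state, *, start_pressed=False, obstacle_near=False,
               obstacle_visible=False, at_target=False, memory_elapsed=0.0):
    """Return the next state given the current state and sensor flags."""
    if state is State.STOPPED:
        return State.GO_TO_TARGET if start_pressed else State.STOPPED
    if state is State.GO_TO_TARGET:
        if at_target:
            return State.STOPPED
        return State.AVOID if obstacle_near else State.GO_TO_TARGET
    if state is State.AVOID:
        # Keep avoiding until the obstacle leaves the field of view.
        return State.AVOID if obstacle_visible else State.REMEMBER
    # State.REMEMBER
    if obstacle_near:
        return State.AVOID  # assumption: a reappearing obstacle restarts avoidance
    if memory_elapsed >= MEMORY_SECONDS:
        return State.GO_TO_TARGET
    return State.REMEMBER
```

Keeping the transition logic in a single pure function makes each rule easy to check in isolation, independent of the sensors and the drive hardware.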

This algorithm uses three main subroutines. The first subroutine is an obstacle detection routine using data from the Kinect sensor, the second is a localization routine using the iRobot's odometers, and the third is the robot's actual control routine.

The obstacle detection routine connects to the Kinect sensor using the Kinect for LabVIEW libraries, which can be found on the LabVIEW community website. The routine reads an array of 11-bit depth values from the Kinect (at a resolution of 120 x 160 pixels). It then finds the smallest value in this array and builds a list containing this point and all other pixels within 200 units of this smallest value (along with their array locations), which it treats as the object of interest. The routine then finds the horizontal centroid of these points and determines the angle between the robot's forward direction and this centroid. By tracking the horizontal motion of the centroid, the routine can estimate the obstacle's velocity relative to the robot, and the robot can turn away accordingly.
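
A minimal Python sketch of these detection steps follows. It assumes the depth frame is a plain list of rows, that invalid depth readings have already been filtered out, and that the sensor's horizontal field of view is about 57 degrees (a commonly quoted figure for the original Kinect). The function names and the pinhole-camera conversion from column to angle are illustrative choices, not the team's LabVIEW implementation.

```python
import math


def detect_obstacle(depth, band=200, hfov_deg=57.0):
    """Locate the nearest object in a depth image given as a list of rows.

    Returns (nearest_depth, centroid_column, bearing_rad). A positive bearing
    means the object lies to the right of the image centre.
    """
    cols = len(depth[0])
    nearest = min(min(row) for row in depth)
    # Column index of every pixel within `band` raw units of the nearest pixel.
    columns = [c for row in depth for c, d in enumerate(row) if d - nearest <= band]
    centroid_col = sum(columns) / len(columns)
    # Pinhole model: focal length in pixels from the assumed horizontal FOV.
    focal_px = (cols / 2) / math.tan(math.radians(hfov_deg) / 2)
    bearing = math.atan((centroid_col - (cols - 1) / 2) / focal_px)
    return nearest, centroid_col, bearing


def bearing_rate(previous_bearing, current_bearing, dt):
    """Estimate the obstacle's angular velocity (rad/s) from two bearings."""
    return (current_bearing - previous_bearing) / dt
```

Calling `detect_obstacle` once per control cycle and passing consecutive bearings to `bearing_rate` gives the relative angular velocity that the avoidance behavior needs.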

The localization routine takes odometry data from the iRobot and uses simple trigonometry to determine the robot's change in position relative to its previous location. The accuracy of this routine was limited by the iRobot's internal odometry calculations; we performed a simple statistical analysis to determine whether our robot was able to move as accurately as we desired.
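
The trigonometry involved can be illustrated with a standard dead-reckoning update. This sketch assumes each odometry reading provides the distance travelled and the change in heading since the previous reading; the function name and the midpoint-heading approximation are assumptions rather than details from the report.

```python
import math


def update_pose(x, y, heading, distance, delta_heading):
    """Advance a planar pose (x, y, heading in radians) by one odometry reading.

    distance is the path length travelled since the last reading and
    delta_heading is the change in heading (radians, counterclockwise positive).
    """
    # Approximate the short arc as a straight segment along the mid-step heading.
    mid_heading = heading + delta_heading / 2
    x += distance * math.cos(mid_heading)
    y += distance * math.sin(mid_heading)
    # Wrap the new heading into [-pi, pi).
    heading = (heading + delta_heading + math.pi) % (2 * math.pi) - math.pi
    return x, y, heading
```

Because every update adds to the previous estimate, any error in the odometry readings accumulates over time, which is consistent with the accuracy limitation noted above.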

Finally, the robot's control routine was built upon timed loops, utilizing the myRIO's real-time capabilities. The robot updated its state during each loop iteration; the loop was timed to iterate once every half second. During each loop iteration, the robot checked its field of view (using the first routine described above), updated its position (using the second routine), and updated its state based upon these two routines. Based upon its state, it either differentially steered toward the desired final position, or away from an obstacle in its field of view. To make full use of the myRIO's dual-core processor, we performed all of our depth sensor data processing on one core, and all of our odometry and iRobot communication on the other.
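
As an illustration of the "steer toward the target" branch, the sketch below converts the heading error into left and right wheel speeds for a differential-drive base. The cruise speed and turn gain are hypothetical tuning values, and the 500 mm/s limit reflects the wheel-speed range commonly documented for the iRobot Create; none of these numbers come from the team's report.

```python
import math


def steer_to_target(x, y, heading, target_x, target_y,
                    cruise=200.0, turn_gain=150.0, max_speed=500.0):
    """Return (left, right) wheel speeds in mm/s that steer toward the target."""
    bearing = math.atan2(target_y - y, target_x - x)
    # Signed heading error wrapped into [-pi, pi); positive means turn left.
    error = (bearing - heading + math.pi) % (2 * math.pi) - math.pi
    left = cruise - turn_gain * error
    right = cruise + turn_gain * error

    def clamp(value):
        return max(-max_speed, min(max_speed, value))

    return clamp(left), clamp(right)
```

In a loop running every 0.5 s, as described above, this function would be called only while the state machine is in its move-to-target state; the avoidance states would command the wheels from the obstacle's motion instead.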

We found that our algorithm did allow the robot to avoid a moving obstacle crossing its path and still reach its target position, but the accuracy of our localization routine suffered because we had no external means of verifying the robot's position. A more sophisticated localization algorithm could possibly obtain vision data from the Kinect and use Kalman filtering to localize the robot. We also found that the maximum loop speed of the robot was limited by the serial communication rate between the iRobot Create and the myRIO. A more sophisticated robot would instead drive the iRobot's wheels directly from the myRIO, and obtain encoder data directly from the iRobot's wheels; this, however, would require extensive hardware modification to the iRobot.

Because it uses commercial off-the-shelf products, our design can easily be replicated in the future, and our algorithms could be applied to more advanced robot systems. Further details of our design process and results can be found in the attached PDF report.

Figure 4: Final Design of the human-aware autonomous mobile robot
