Contact Information

Country: Denmark

Year Submitted: 2018

University: Aarhus University School of Engineering

List of Team Members: Ian Stewart Olsen Grant (2018),

Mads Langaa Nielsen (2018),

Morten Lisberg Jørgensen (2018),

Peter Skovsted Østergaard (2018)

Faculty Advisers: Claus Melvad, Professor

Main Contact Email Address: morten.lisberg.iha@outlook.dk

Title: Search and Find Autonomous Underwater Vehicle

Description: An underwater robot for use in clear as well as turbid waters, built to search for and find lost objects and to aid in the search for missing persons.

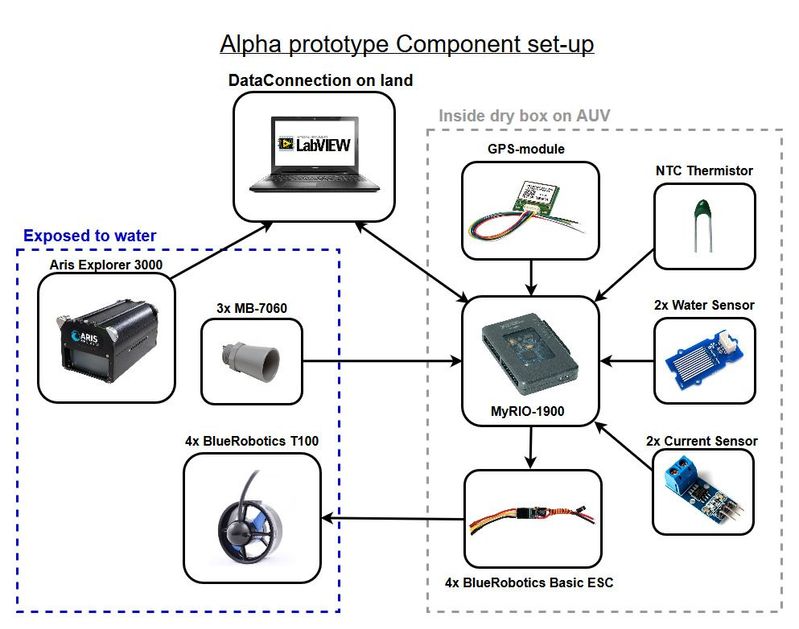

Software: LabVIEW 2017, NI myRIO Toolkit, Vision Assistant 2017

Hardware: NI myRIO-1900

Other: 3x MB7060 XL-MaxSonar-WR, 4x T-100 BlueRobotics Thruster, 4x BlueRobotics Basic ESC, ARIS Explorer 3000, ARIScope Software, VK2828U7G5LF GPS module.

The Challenge:

This project is inspired by Jeanette, the mother of Nick, who disappeared in January 2017. The search for Nick lasted several months. One can imagine the thoughts running through her head: Has he been kidnapped or murdered? Has he left us without saying goodbye? Is he lying somewhere waiting for help? The emotional strain must be without equal. Three months went by before the body was found. Nick had drowned in the harbor.

This story really made an impact on us, and we wanted to do something that could make a difference to people in situations like this.

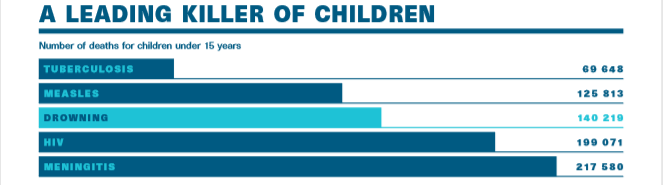

When people go missing in areas with lakes or harbours, there’s a possibility that the missing person has drowned. According to the World Health Organization, “drowning is the 3rd leading cause of unintentional injury death worldwide, accounting for 7% of all injury-related deaths which is an estimate of 372 000 people a year” (Figure 1).

Searches in water are typically carried out by divers. Especially in turbid waters, these tasks are difficult and demand a lot of resources. Sometimes it can take several months before the body is recovered.

Goal:

Our goal was to design a prototype of an Autonomous Underwater Robot that can see through turbid waters using sound imaging. This could be an important tool to support search teams.

The robot should be able to:

- Systematically manoeuvre around in harbours and lakes

- Work in clear as well as turbid waters

- Avoid collisions and getting stuck

- Search for predefined objects

- Log the approximate GPS coordinates and an image of any object of interest it finds, and send this report to the search team for recovery

The Solution:

The solution is a floating robot whose chassis is made of HDPE for increased buoyancy. As this was an alpha prototype, we did not invest in batteries for the AUV, so a tether was used for power and for viewing the data stream.

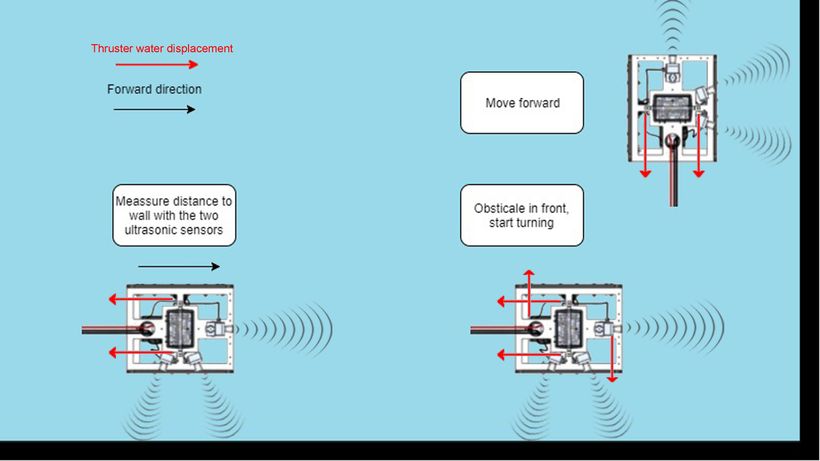

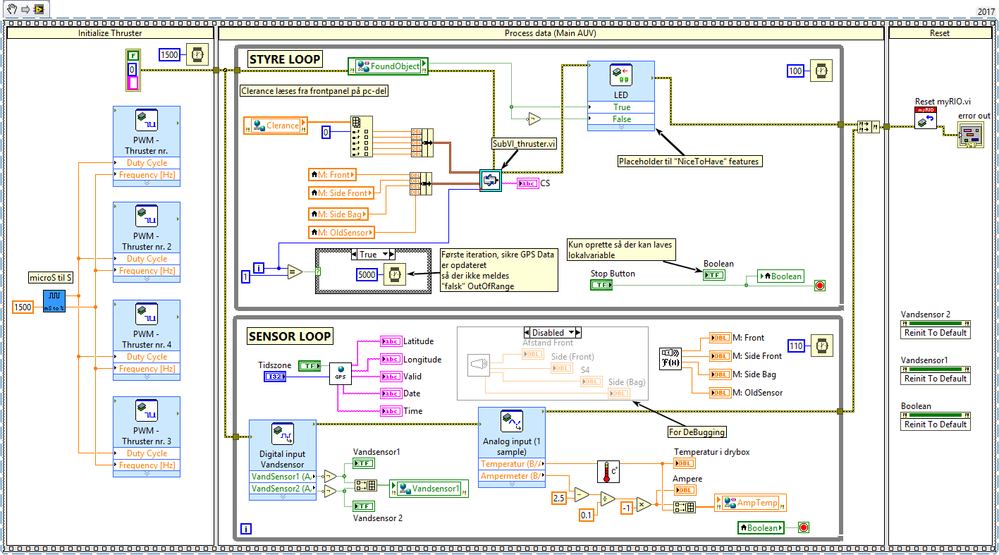

The autonomous steering is implemented as a state machine that shifts between states depending on the distances to objects around the AUV. The distances are measured with ultrasonic sensors (Figure 2).
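The state logic can be illustrated with a minimal text-based sketch in Python, since the LabVIEW block diagram cannot be shown as text. The state names, the front-distance thresholds and the hysteresis margin are assumptions made for illustration, not values from our VI. Only the 7.5 m side limit is taken from the description of the AUV.

```python
from enum import Enum, auto


class State(Enum):
    FORWARD = auto()    # normal operation: follow the harbour wall
    TURN_AWAY = auto()  # obstacle ahead: turn until the path is clear
    SEEK_WALL = auto()  # wall out of range: steer back towards it


# Hypothetical thresholds in metres (illustrative only).
FRONT_MIN_M = 2.0    # enter TURN_AWAY when an obstacle is closer than this
FRONT_CLEAR_M = 3.0  # leave TURN_AWAY only when the path is clear to this range
SIDE_MAX_M = 7.5     # maximum range from the harbour wall


def next_state(state: State, front_m: float, side_m: float) -> State:
    """Choose the next state from the latest ultrasonic distances."""
    if state is State.TURN_AWAY:
        # Hysteresis: keep turning until the path ahead is clear by a margin.
        if front_m < FRONT_CLEAR_M:
            return State.TURN_AWAY
    elif front_m < FRONT_MIN_M:
        return State.TURN_AWAY
    if side_m > SIDE_MAX_M:
        return State.SEEK_WALL
    return State.FORWARD
```

Calling `next_state` once per sensor update keeps the decision logic in one place. The two front thresholds prevent the AUV from flipping rapidly between states when an obstacle sits right at the limit.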

In the main forward state, the robot navigates by following the harbour front. The difference between the distances measured by the two sensors on the side is converted to a voltage that regulates the thruster force, so that a constant distance to the harbour wall can be maintained (Figure 3). The AUV has a maximum range of 7.5 m from the harbour walls.
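A rough Python sketch of this kind of wall following is shown here as a simple proportional controller. It is not a transcription of our LabVIEW code: the gains, the target distance, the normalised thruster range of -1 to 1, the extra distance term and the assumption that the wall is on the starboard (right) side are all chosen for illustration.

```python
def clamp(value: float, low: float = -1.0, high: float = 1.0) -> float:
    """Limit a thruster command to the allowed range."""
    return max(low, min(high, value))


def wall_follow(
    front_side_m: float,    # side sensor nearest the bow
    rear_side_m: float,     # side sensor nearest the stern
    target_m: float = 3.0,  # hypothetical desired distance to the wall
    base: float = 0.5,      # hypothetical forward thrust
    k_angle: float = 0.2,   # hypothetical gain on the sensor difference
    k_dist: float = 0.1,    # hypothetical gain on the distance error
) -> tuple[float, float]:
    """Return (left, right) thruster commands for a wall on the right."""
    # Positive when the bow points away from the wall.
    angle_error = front_side_m - rear_side_m
    # Positive when the AUV is further from the wall than desired.
    dist_error = (front_side_m + rear_side_m) / 2.0 - target_m
    # Positive steer turns the AUV to the right, towards the wall.
    steer = k_angle * angle_error + k_dist * dist_error
    return clamp(base + steer), clamp(base - steer)
```

When both sensors read the target distance the two commands are equal and the AUV sails straight. When the bow sensor reads more than the stern sensor, the left thruster is driven harder and the AUV turns back towards the wall.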

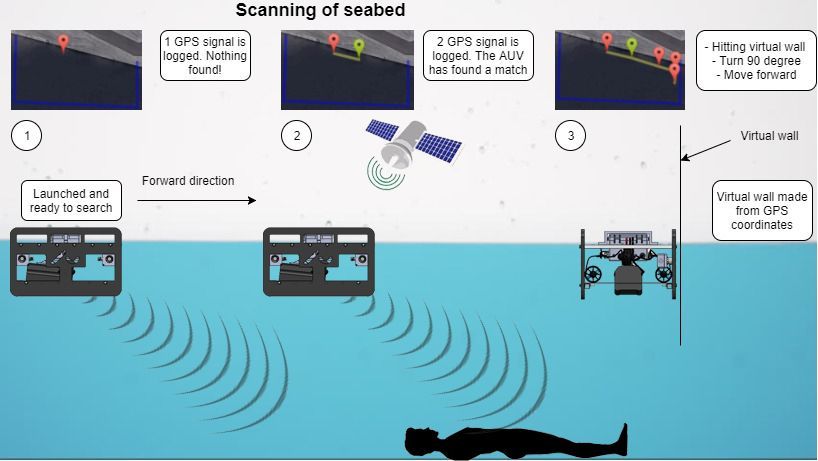

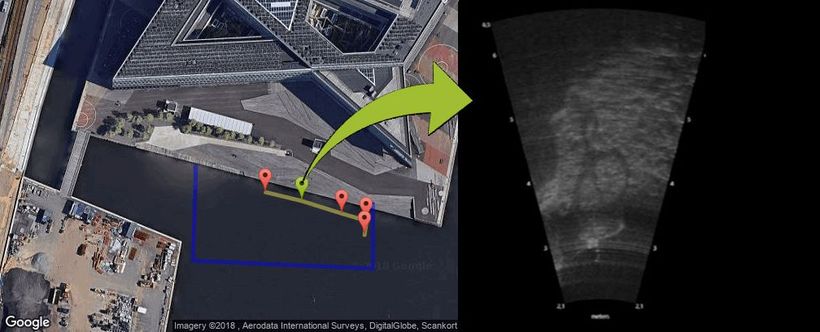

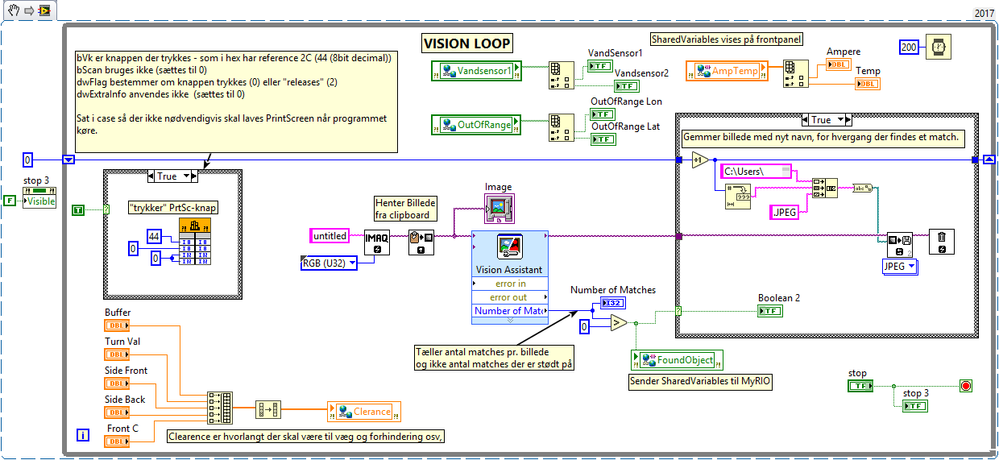

Using GPS coordinates, the user can set boundary points to make sure the robot does not sail too far from the desired search location. The GPS is also used to log the “sailing” route and the approximate location of interest by composing a URL link. A location of interest is identified using computer vision (Figure 4).

For the robot to “see” in all kinds of water, sonar imaging is used. The sonar is an ARIS Explorer 3000. It serves as the eyes for the computer vision, which is implemented in LabVIEW Vision Assistant using feature recognition. A better solution would be machine learning, but training the algorithm demands a comprehensive image library, which we didn’t have.

To test the computer vision with the AUV, it was set to search for pedal bikes (which have a distinct geometry), and the search was successful (Figure 5). A map in a web browser was updated with a color-coded pin at the given location.
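The boundary check and the URL composition could look roughly like the following Python sketch. It treats the boundary points as the corners of a simple latitude/longitude box, which is a simplification, and it assumes a Google Maps search URL as the link format. The class and function names are hypothetical and the coordinates in the usage example are made up.

```python
from dataclasses import dataclass


@dataclass(frozen=True)
class Boundary:
    """Axis-aligned search area in decimal degrees."""
    lat_min: float
    lat_max: float
    lon_min: float
    lon_max: float

    def contains(self, lat: float, lon: float) -> bool:
        return (self.lat_min <= lat <= self.lat_max
                and self.lon_min <= lon <= self.lon_max)


def boundary_from_points(points: list[tuple[float, float]]) -> Boundary:
    """Build the smallest box that encloses the user's boundary points."""
    lats = [lat for lat, _ in points]
    lons = [lon for _, lon in points]
    return Boundary(min(lats), max(lats), min(lons), max(lons))


def map_url(lat: float, lon: float) -> str:
    """Compose a map link for a location of interest (assumed URL format)."""
    return f"https://www.google.com/maps/search/?api=1&query={lat:.6f},{lon:.6f}"


# Usage with made-up coordinates.
area = boundary_from_points([(56.150, 10.210), (56.155, 10.220)])
inside = area.contains(56.152, 10.215)   # True: keep searching
outside = area.contains(56.160, 10.215)  # False: turn back
link = map_url(56.152, 10.215)
```

A bounding box is only a fair approximation for small, roughly rectangular search areas. An irregular harbour basin would need a point-in-polygon test instead.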

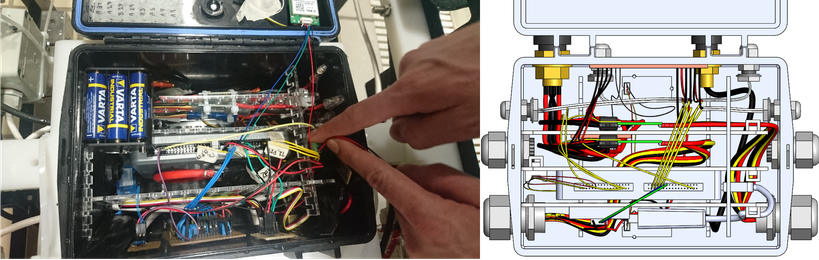

To protect the equipment in case of defects and errors, several sensors were placed inside the drybox (Figure 6): water sensors to detect leakage, a thermistor to detect excessive heat dissipation from the electrical equipment, and a current sensor to shut down the thrusters if they draw too much current, for example when something gets tangled in them.

An overview of the components can be seen in Figure 7.

All in all, the tests of the AUV prototype (Figure 8) were successful. The autonomous navigation worked well: the AUV can follow the harbour walls at a desired distance and avoid objects in front of it, and the ARIS Explorer 3000 can be used with computer vision software to automatically find objects and log their approximate coordinates. The question left unanswered is whether it can be used to detect a dead person’s body in the water. Our tests on a bicycle do not allow a conclusion on this question, but we strongly believe it is possible.
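The protection logic amounts to a few threshold checks, sketched here in Python. The limit values, the field names and the fault labels are assumptions for illustration; the real limits depend on the electronics in the drybox and on the thrusters.

```python
from dataclasses import dataclass

# Hypothetical limits (illustrative only).
MAX_TEMP_C = 60.0
MAX_CURRENT_A = 12.0


@dataclass
class DryboxReading:
    water_detected: bool       # any water sensor triggered
    temperature_c: float       # thermistor reading
    thruster_current_a: float  # current sensor reading


def check_faults(reading: DryboxReading) -> list[str]:
    """Return the list of active faults for one set of sensor readings."""
    faults = []
    if reading.water_detected:
        faults.append("leak")
    if reading.temperature_c > MAX_TEMP_C:
        faults.append("overheat")
    if reading.thruster_current_a > MAX_CURRENT_A:
        faults.append("overcurrent")
    return faults


def must_stop_thrusters(reading: DryboxReading) -> bool:
    """Thrusters are shut down when they draw too much current."""
    return "overcurrent" in check_faults(reading)
```

Keeping the checks separate from the reaction makes it easy to decide per fault what should happen, for example stopping only the thrusters on overcurrent while a leak is reported to the operator.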

The benefits of using LabVIEW and NI tools

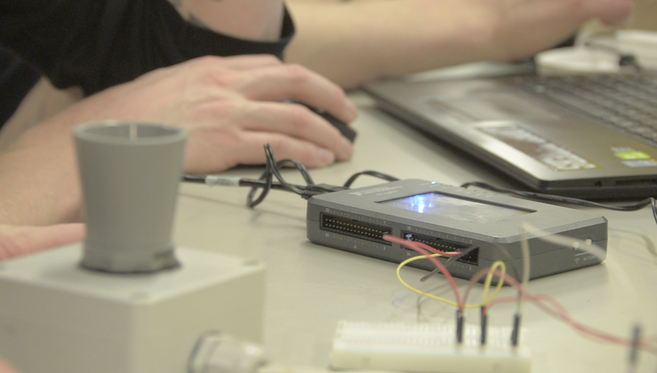

All project members are mechanical engineers, and prior to this project each of us had taken a one-semester course covering the basics of LabVIEW programming. Had it not been for the intuitive block programming and the LabVIEW community, we would never have been able to write all the code needed (Figure 9 & 10) to bring this project to life within the given timeline. The Vision Assistant in particular is a unique toolset; without it, there would be no Search and Find Autonomous Underwater Vehicle project.

The fact that we, as mechanical engineers, can do advanced programming on our own prototypes and test them is just awesome. It makes a world of difference that it’s not just a black box of magic and that we actually know what’s going on. It also gives us a better basis for doing mechanical designs in the first place, since we have some understanding of the electrical systems needed to bring these mechanical designs to life.

VI code snippets

Level of completion:

Alpha prototype

Time to build:

4 months, 10 ECTS per group member

Additional revisions that could be made:

To reach a fully functional beta prototype, the following is needed:

- Design of battery pack

- Embed the ARIScope software, which will make the AUV tether-free

- Add ultrasonic sensors on the second side, so the AUV can sail in both directions

- Add gyroscope to increase sailing stability

- Wireless all-weather communication to remotely access the AUV

- Use Machine Learning instead of Feature Recognition

Special thanks to

- Professor Claus Melvad, for the guidance and inspiration that made this project possible, and for his engagement, open-door policy and commitment to this and all his other mechatronics projects.

- Thanks to Jeanne Dorsey from Ocean Marine Industries (US) and Jake Reed from Reeds Sonar Consultancy LTD (UK) for help with the sonar imaging equipment, the ARIS Explorer 3000 made by SoundMetrics (US).

- Thanks to Dennis Lottrup, Kasper Trabjerg, Ole Erikstrup, Rasmus Lindengren and William Iversen for inspiring the idea behind this project.

- Spektrum Odder Swimming - for providing testing facilities