Contact Information

Country: Denmark

Year Submitted: 2018

University: Aarhus University

List of Team Members (with year of graduation):

Christian Brahe

Christian Sørensen

Johan Krogshave

Robert Søndergaard

Tim Pedersen

(All graduated in February 2018)

Faculty Advisers: Claus Melvad

Main Contact Email Address: timtoernes@gmail.com

Project Information

Title: Autonomous 3D mapping drone

Description:

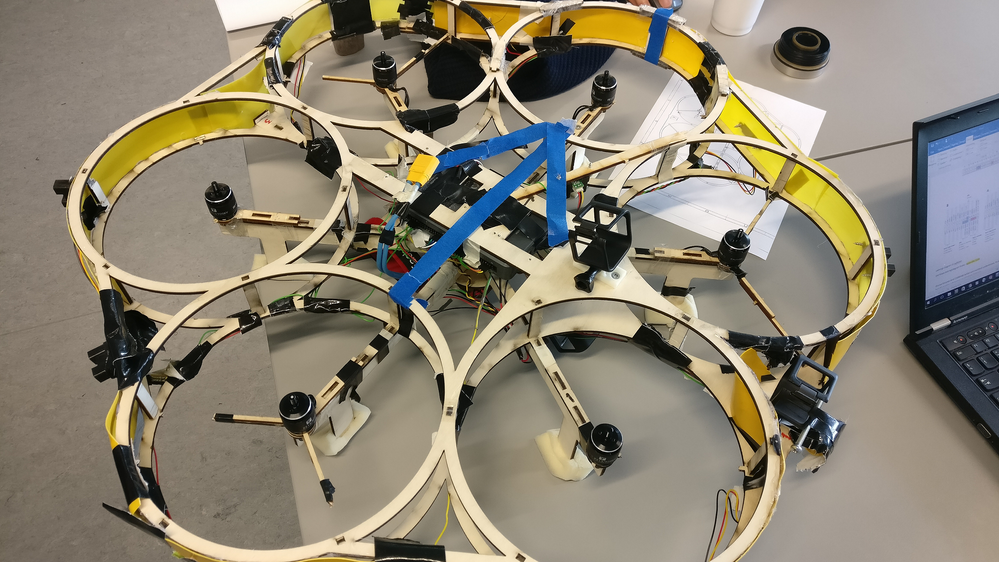

The goal of the project was to create an autonomous drone capable of 3D mapping a room using photogrammetry.

Products:

National Instruments:

myRIO

Other:

TATTU 11000mAh 14.8V 15C 4S2P Lipo battery

Drone Power Distribution Board 5V/12V

Naze32 Rev 6 Flight controller

9 x IR distance sensors – Sharp model: 2y0a02 f 48

3 x GoPro Session

6 x Air Gear 350, Motor and ESC

The Challenge:

In modern engineering, 3D modelling is an important part of the design phase when developing new solutions. 3D models of existing environments are needed when solutions must fit into existing spaces, such as new production facilities.

Currently, 3D scans of rooms are often made using lidar technology, which can be a time-consuming manual process. Lidar equipment is expensive, and because the process is also time-consuming, the total cost of a 3D scan can be very high. By automating the 3D scanning process, time and money can be saved, making 3D models of large rooms more accessible.

We aim to automate 3D scanning of large rooms by making an autonomous drone capable of mapping indoor spaces.

The Solution:

We have made an autonomous drone that can 3D scan a room using photogrammetry. By moving randomly around the room and avoiding obstacles, the drone takes pictures of the entire room with its three GoPro cameras.

Obstacle avoidance:

The drone uses 9 IR distance sensors to avoid obstacles in its path. The sensors are mounted so that together they cover all directions.

One of the sensors points downwards and is used to keep a chosen distance to the ground.

The remaining 8 sensors cover the directions of movement and keep the drone from hitting walls or objects in the room.

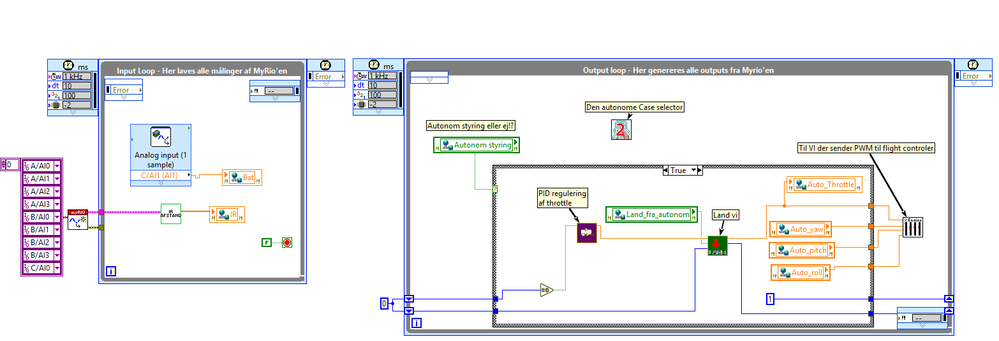
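The project itself was implemented in LabVIEW, so the Python below is only a rough sketch of how readings from the 8 horizontal sensors could be turned into an avoidance direction. It assumes the sensors are spaced evenly at 45° intervals and uses a made-up safety distance; neither the layout (`SENSOR_ANGLES_DEG`) nor the threshold (`SAFE_DISTANCE_CM`) nor the function name comes from the project.

```python
import math

# Assumed layout: 8 sensors at 45 degree steps, 0 = forward, counter-clockwise.
SENSOR_ANGLES_DEG = [i * 45 for i in range(8)]
# Hypothetical safety distance; the real value used on the drone is not stated.
SAFE_DISTANCE_CM = 80.0


def avoidance_vector(distances_cm, safe_cm=SAFE_DISTANCE_CM):
    """Return an (x, y) push away from nearby obstacles, or (0.0, 0.0) if clear.

    Each sensor that reads closer than safe_cm contributes a vector pointing
    away from that sensor, weighted by how far inside the safety distance
    the obstacle is.
    """
    x = y = 0.0
    for angle_deg, distance in zip(SENSOR_ANGLES_DEG, distances_cm):
        if distance < safe_cm:
            weight = (safe_cm - distance) / safe_cm
            angle = math.radians(angle_deg)
            x -= weight * math.cos(angle)
            y -= weight * math.sin(angle)
    return x, y
```

With all eight readings beyond the safety distance the function returns a zero vector, which a controller could treat as "no avoidance needed".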

In this project, a set of Sharp IR distance sensors has been used. They provide an analogue signal, and to achieve optimal results, the sensors have been calibrated and the signal is continuously processed in the myRIO.
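The calibration and processing steps are not described in detail, so the following Python sketch only illustrates one common approach and is not the project's LabVIEW code. It assumes a power-law calibration curve (distance = a · voltage^b, fitted in log-log space) and a sliding median filter to suppress spikes; the names `fit_power_law` and `DistanceSensor` and the window size are illustrative choices.

```python
import math
from collections import deque
from statistics import median


def fit_power_law(voltages, distances_cm):
    """Least-squares fit of distance = a * voltage**b, done in log-log space."""
    xs = [math.log(v) for v in voltages]
    ys = [math.log(d) for d in distances_cm]
    n = len(xs)
    mean_x = sum(xs) / n
    mean_y = sum(ys) / n
    b = (sum((x - mean_x) * (y - mean_y) for x, y in zip(xs, ys))
         / sum((x - mean_x) ** 2 for x in xs))
    a = math.exp(mean_y - b * mean_x)
    return a, b


class DistanceSensor:
    """Converts raw voltages to distances and smooths them with a median filter."""

    def __init__(self, a, b, window=5):
        self.a = a
        self.b = b
        self.samples = deque(maxlen=window)  # most recent converted readings

    def update(self, voltage):
        """Add one voltage sample and return the filtered distance in cm."""
        self.samples.append(self.a * voltage ** self.b)
        return median(self.samples)
```

In use, `fit_power_law` would be run once per sensor on measured (voltage, distance) pairs, and `update` would then be called for every new sample in the acquisition loop.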

3D scanning:

A 3D model of the room is made from pictures taken by the drone's three GoPro cameras. By taking pictures of all surfaces from multiple angles, it is possible to stitch together a 3D model of the room. Software from Agisoft is used for the stitching.

Software architecture:

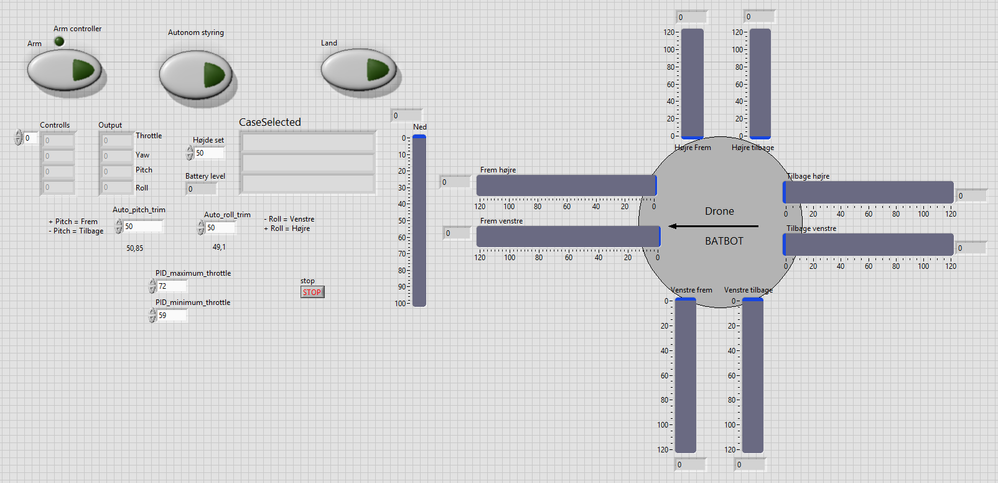

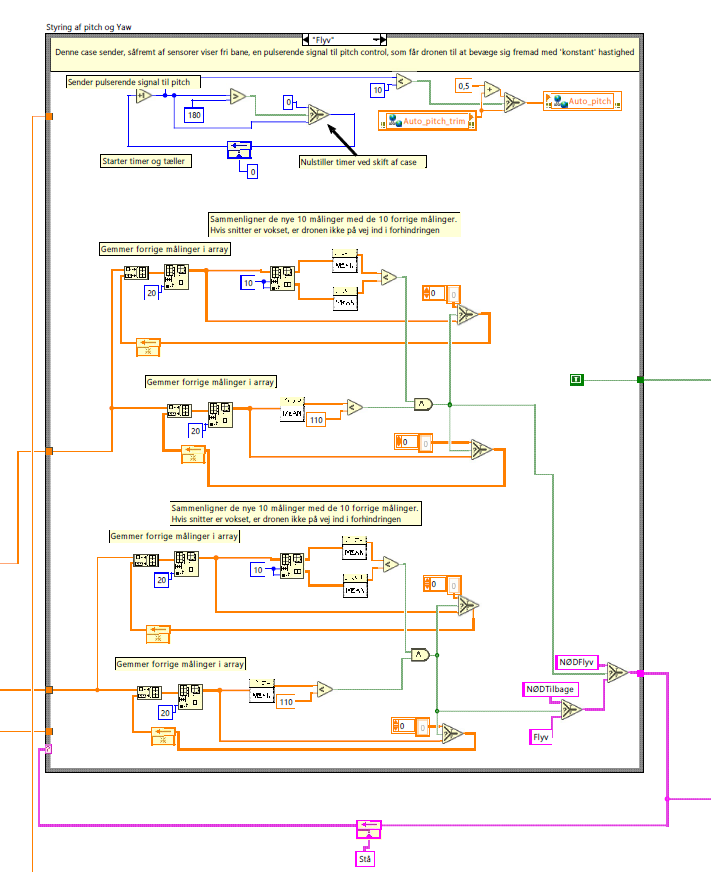

The drone software is built as a state machine that controls the movement. When the drone is airborne, it mainly switches between an idle state and obstacle avoidance states.
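A minimal Python sketch of such a state machine is shown here for illustration; the actual implementation is a LabVIEW state machine whose exact states are not listed. Only the idle and obstacle avoidance states come from the description above. The `LANDED` and `LANDING` states, the single `AVOID` state, and the input names are assumptions, and take-off is skipped for brevity.

```python
from enum import Enum, auto


class State(Enum):
    LANDED = auto()   # assumed: waiting on the ground for a start command
    IDLE = auto()     # airborne, no obstacle nearby
    AVOID = auto()    # airborne, moving away from an obstacle
    LANDING = auto()  # assumed: descending after a land command


def next_state(state, obstacle_near, start_cmd=False, land_cmd=False,
               on_ground=False):
    """Return the state that follows `state` for the given inputs."""
    if state is State.LANDED:
        return State.IDLE if start_cmd else State.LANDED
    if state is State.LANDING:
        return State.LANDED if on_ground else State.LANDING
    # Airborne states: a land command takes priority over avoidance.
    if land_cmd:
        return State.LANDING
    return State.AVOID if obstacle_near else State.IDLE
```

Keeping the transition logic in one pure function makes it easy to check every transition without flying the drone.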

Data acquisition is done in a parallel loop. This makes it possible to run the data acquisition loop very fast and to perform signal processing on the data.
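In LabVIEW, parallel loops are simply drawn side by side. As a rough text-based analogy, the Python sketch below runs acquisition in its own thread and lets a slower control loop fetch the most recent sample. The class name `AcquisitionLoop`, the `read_sensors` callback, and the loop period are all hypothetical.

```python
import threading


class AcquisitionLoop:
    """Reads sensors in a background thread and keeps the latest sample."""

    def __init__(self, read_sensors, period_s=0.001):
        self._read = read_sensors          # hypothetical callback returning one sample
        self._period = period_s            # assumed loop period in seconds
        self._lock = threading.Lock()
        self._latest = None
        self._stop = threading.Event()
        self._first_sample = threading.Event()
        self._thread = threading.Thread(target=self._run, daemon=True)

    def _run(self):
        while not self._stop.is_set():
            sample = self._read()          # filtering could be applied here
            with self._lock:
                self._latest = sample
            self._first_sample.set()
            self._stop.wait(self._period)  # sleeps, but wakes early on stop()

    def start(self):
        self._thread.start()

    def wait_for_data(self, timeout=1.0):
        """Block until the first sample is available; return False on timeout."""
        return self._first_sample.wait(timeout)

    def latest(self):
        """Return the most recent sample (None before the first read)."""
        with self._lock:
            return self._latest

    def stop(self):
        self._stop.set()
        self._thread.join()
```

The control loop would call `latest()` at its own, slower rate, so a slow state transition never holds up the sensor readings.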

A base station is set up, consisting of a laptop running LabVIEW. The scanning operation is initiated from the base station, and the drone can also be landed from here. Furthermore, the base station allows for manual control of the drone for testing. The base station communicates with the myRIO on the drone using Wi-Fi.

Why LabVIEW:

By using LabVIEW, it has been possible to create a working prototype, with an alpha version of the software, very quickly. Furthermore, it has been possible for five engineering students with only a little programming experience to solve a relatively complex task.

Further work:

If another revision of the drone were to be built, a different type of sensor for obstacle avoidance should be considered. Ultrasound range sensors were found to be incompatible due to noise interference from the motors, so lidar would be interesting to test.

Experiments with an indoor tracking system have been made, but as this system was based on ultrasound, it was also disturbed by the motor noise. Motion tracking using video would be interesting to implement, as it would make more advanced path control algorithms possible.

Time to build:

The project was built in a 10 ECTS-point course in Mechatronics. Approximately 300 hours per student were put into the project over half a year.

Video

Link to Video

https://www.youtube.com/watch?v=EiVUn0I7ZM8&feature=youtu.be