Latency (1-2 seconds) in Real-Time DAQ using PXIe-4302

I am using LabVIEW 2020 RT to read continuously from a 32-channel PXIe-4302 at a 100 Hz scan rate. My loop rate is set to 100 ms, and I ask for 10 samples per read. When I monitor the actual loop time, it is indeed 100 ms and I am getting 10 samples per read. However, the voltage values lag the actual voltage input (from a potentiometer connected to the channel). It takes more than 1 second for any signal level change to appear in the data.

The same code (except that I am using a cDAQ-9201 8-channel DAQ module instead of the 32-Ch PXIe-4302) on LabVIEW 2020 (Windows) runs fine and any signal level change appears almost instantaneously in the data.

I have also experimented with the DAQmx Read properties (RelativeTo set to Most Recent Sample, Offset set to -1) but see no improvement on the RT target. The 1 s delay remains.

I did some more tests today, but this time I used the NI MAX Test Panels instead of my VI, with the same settings for Scan Rate (100 Hz) and Samples to Read (10).

With the MAX Test Panels (in RT), there is still the same 1-2 s latency. Does this indicate a driver issue or a PXI card issue? Or are there any special settings needed in the PXI system to minimize the latency?

The DAQmx version on the RT PXI system is NI-DAQmx 21.0.0.

Has anyone seen this noticeable lag in RT and not in Windows?

I am enclosing a stripped-down Main VI and its SubVI for your examination.

Thanks for your time!

LabVIEW Developer since Version 2.0

08-02-2022 08:38 AM

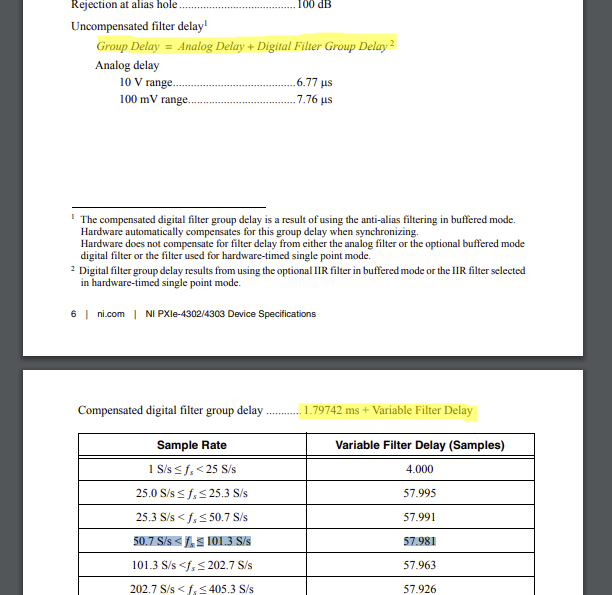

Part of the reason for the delay is the digital filter after the delta-sigma ADC in the instrument. This delay is specified in the datasheet, as shown in the attached image.

You can expect delays on the order of ~582 ms.

Soliton Technologies

New to the forum? Please read community guidelines and how to ask smart questions

Only two ways to appreciate someone who spent their free time to reply/answer your question - give them Kudos or mark their reply as the answer/solution.

Finding it hard to source NI hardware? Try NI Trading Post

Thank you, Santo_13. I was unaware of the filter delay in the ADC. That could very well be the reason.

Can the filter be disabled (using DAQmx Properties in LabVIEW)?

Reading the manual closely, it seems that the digital filter delay is specified in number of samples (i.e., not as a fixed time value such as 50 ms).

So the delay can be reduced just by increasing the scan rate. If I increase the scan rate from 100 Hz to 1000 Hz, I can potentially reduce the delay by a factor of 10. Does this make sense?

08-02-2022 10:10 AM

Yes, you can reduce the absolute time by increasing the sampling rate.

As for the digital filter delay itself, it cannot be removed; the filter is required for a delta-sigma ADC to function as designed.
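
To make the scaling discussed above concrete, here is a minimal Python sketch of the arithmetic. It assumes the filter delay is a fixed number of samples at every rate, which the datasheet may not guarantee across all rate ranges. The value of about 58.2 samples is not from the datasheet; it is simply back-calculated from the ~582 ms figure quoted in this thread for 100 Hz. `filter_delay_seconds` and `ASSUMED_DELAY_SAMPLES` are illustrative names only.

```python
def filter_delay_seconds(delay_samples: float, sample_rate_hz: float) -> float:
    """Convert a filter delay given in samples to seconds at a given rate."""
    if sample_rate_hz <= 0:
        raise ValueError("sample_rate_hz must be positive")
    return delay_samples / sample_rate_hz


# Assumption: roughly 58.2 samples, back-calculated from the ~582 ms
# quoted in this thread at 100 Hz. Check the datasheet for the real value.
ASSUMED_DELAY_SAMPLES = 0.582 * 100.0

# Print the estimated filter delay for the rates mentioned in the thread.
for rate_hz in (100.0, 1000.0, 2000.0):
    delay_ms = 1000.0 * filter_delay_seconds(ASSUMED_DELAY_SAMPLES, rate_hz)
    print(f"{rate_hz:6.0f} Hz -> {delay_ms:6.1f} ms")
```

Under that assumption the estimate drops from roughly 582 ms at 100 Hz to about 58 ms at 1000 Hz and about 29 ms at 2000 Hz.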

08-02-2022 01:03 PM - edited 08-02-2022 01:05 PM

I set the Scan Rate to 2000 Hz. That reduced the latency to an unnoticeable level.

Thank you Santo_13!
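
One side effect worth checking when raising the scan rate: if the loop period stays at 100 ms, the number of samples requested per read probably needs to scale with the rate, otherwise unread samples may pile up in the buffer (unless the read is set to jump to the most recent samples). This is not stated in the thread; it is an assumption based on the loop settings from the first post. A small Python sketch of the arithmetic, with `samples_per_read` as an illustrative helper name:

```python
def samples_per_read(sample_rate_hz: float, loop_period_s: float) -> int:
    """Samples acquired per channel during one loop iteration."""
    if sample_rate_hz <= 0 or loop_period_s <= 0:
        raise ValueError("rate and loop period must be positive")
    return round(sample_rate_hz * loop_period_s)


# Original settings from the first post: 100 Hz scan rate, 100 ms loop.
print(samples_per_read(100.0, 0.1))

# After raising the scan rate to 2000 Hz with the same 100 ms loop.
print(samples_per_read(2000.0, 0.1))
```

With the original settings this gives 10 samples per read, matching the first post; at 2000 Hz and the same loop period it gives 200.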
