What does "DAQmx Read" actually read in terms of synchronization?

04-21-2019 11:57 AM

Hi

I was wondering what data "DAQmx Read" actually reads when it's called. Consider a retriggerable, finite-sample AI task with N samples per trigger.

What happens if, at the time I call "DAQmx Read" to read M*N samples:

a) the trigger hasn't arrived yet

b) the task is in the middle of acquiring the N samples

I would like it to behave as follows:

a) wait for the trigger, then read exactly the N samples the AI task acquires for that trigger, repeat for the next M-1 consecutive triggers, then output the concatenated M*N samples.

b1) read all N samples, including those acquired before the function was called (assuming there is a buffer), then proceed as in a).

b2) ignore the current samples, wait for the next trigger, then proceed as in a).

Both b1 and b2 are acceptable. However, I don't want it to start reading from the middle of the N samples.

- Is this the default behavior of the function? If not, how can I configure it to behave like this?

- Do I need to restart the task before every read?

Thanks in advance!

04-21-2019 05:38 PM

If you configure a finite task for N samples, DAQmx makes a size N buffer. It will not (and cannot) auto-concatenate M*N samples over the course of M triggers.

Your best approach is to call DAQmx Read requesting N samples. (Note: leaving the value unwired uses the default value -1, which does the same thing, but *only* for Finite Sampling tasks.)

Then the call to DAQmx Read will wait until either the timeout expires (10 sec by default), or until all N finite samples accumulate in the buffer, whichever comes first. No samples will accumulate until the trigger arrives.

To accumulate an M*N array, put the DAQmx Read call in an M-iteration For Loop. When you wire the N-sample array out through the For Loop border, the tunnel auto-indexes by default, building the M 1D arrays of N samples into a 2D M*N array.
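The looped-read approach can be sketched in Python (the thread itself uses LabVIEW). This is a hedged illustration, not DAQmx code: `read_m_records` and the `read_n` callable are hypothetical names, and `read_n` stands in for one DAQmx Read of N samples on a retriggerable finite task. Row i holds the i-th record the loop *serviced*, which is only the i-th hardware trigger if no record was lost between reads.

```python
from typing import Callable, List


def read_m_records(read_n: Callable[[int], List[float]], m: int, n: int) -> List[List[float]]:
    """Call an N-sample blocking read M times and stack the results as M rows.

    `read_n` is a hypothetical stand-in for DAQmx Read on a retriggerable
    finite task: each call is assumed to block until N samples are available.
    """
    records: List[List[float]] = []
    for _ in range(m):
        record = read_n(n)
        if len(record) != n:
            # Treat a short read as an error rather than keeping a partial record.
            raise ValueError(f"expected {n} samples, got {len(record)}")
        records.append(record)  # one row per serviced trigger
    return records
```

For example, with a fake reader that returns the trigger number as every sample value, `read_m_records(fake, 3, 4)` returns three rows of four values each, one row per call.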

If the task was configured to be retriggerable, you shouldn't need to restart it after each DAQmx Read. The one thing I'm not totally sure about is what happens if the next trigger arrives before you finish reading the previous N samples. Since retriggering is a hardware function of the board, I suspect the task rearms as soon as it has taken N samples. Moving samples from the board to PC RAM is the responsibility of the DAQmx driver, and your application code is responsible for calling DAQmx Read to clear the old samples out of PC memory in time to make room for new ones. If you don't clear them out in time, I suspect DAQmx will throw an error, but I can't say with 100% certainty.

-Kevin P

04-21-2019 07:46 PM

Thanks for answering.

I know the AI task only holds N samples in its buffer, but what happens if I read M*N samples with "DAQmx Read"? Does it wait for the remaining triggers and collect M triggers' worth of data?

The reason I'm reading M*N samples is that I want M*N CONSECUTIVE samples. If I put "DAQmx Read" in a loop, the next record is already lost by the time the second iteration starts. The trigger runs at 4 kHz.

Is there a way to get all the data out without losing even one sample?

04-21-2019 11:45 PM

@2A91312E wrote:

Is there a way to get all the data out without losing even one sample?

Yes. Trigger once and acquire continuously. Don't reset the trigger, or you will lose samples.

mcduff

04-22-2019 03:34 PM - edited 04-22-2019 03:37 PM

Well, you inadvertently almost solved the problem, but the OP explicitly asked for a retriggerable finite acquisition. The solution is to make a retriggerable counter output task that generates N pulses on each of M triggers, at the sample rate of your choice. Then configure your AI task as continuous, but instead of using OnboardClock as the sample clock, use the counter output terminal. The DAQ task will think it's continuous, but in reality it will be acquiring several finite bursts in its buffer. Fetch N*M samples. As in any master/slave synchronization configuration, start the slave (analog input) first so you don't miss any sample clock ticks from the counter output.
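A minimal sketch of this configuration using NI's `nidaqmx` Python package, assuming an X-series-style device. The device name `Dev1`, counter `ctr0`, channel `ai0`, trigger pin `PFI0`, the internal terminal `/Dev1/Ctr0InternalOutput`, and the 10*M*N buffer size are all assumptions to adapt to your hardware; `acquire_bursts` is a hypothetical helper name, and this has not been run on hardware. The driver import is deferred into the function so the file loads without it.

```python
from typing import List


def acquire_bursts(m: int, n: int, rate_hz: float,
                   device: str = "Dev1", trigger_pfi: str = "PFI0") -> List[float]:
    """Fetch M*N AI samples: N samples per trigger for M triggers (sketch).

    Device, counter, channel and terminal names are assumptions.
    """
    import nidaqmx  # deferred: requires the NI-DAQmx driver and a device
    from nidaqmx.constants import AcquisitionType, Edge

    with nidaqmx.Task() as co_task, nidaqmx.Task() as ai_task:
        # Counter output: N pulses at rate_hz per trigger, re-armed in hardware.
        co_task.co_channels.add_co_pulse_chan_freq(
            f"{device}/ctr0", freq=rate_hz, duty_cycle=0.5)
        co_task.timing.cfg_implicit_timing(
            sample_mode=AcquisitionType.FINITE, samps_per_chan=n)
        co_task.triggers.start_trigger.cfg_dig_edge_start_trig(
            f"/{device}/{trigger_pfi}", trigger_edge=Edge.RISING)
        co_task.triggers.start_trigger.retriggerable = True

        # AI: "continuous" task clocked by the counter output, so it only
        # takes samples while a burst is being generated.
        ai_task.ai_channels.add_ai_voltage_chan(f"{device}/ai0")
        ai_task.timing.cfg_samp_clk_timing(
            rate=rate_hz,  # expected maximum rate of the external clock
            source=f"/{device}/Ctr0InternalOutput",
            active_edge=Edge.RISING,
            sample_mode=AcquisitionType.CONTINUOUS,
            samps_per_chan=10 * m * n)  # buffer size hint

        ai_task.start()  # start the slave (AI) first so no clock tick is missed
        co_task.start()
        # Blocks until M*N samples have arrived or the timeout expires.
        return ai_task.read(number_of_samples_per_channel=m * n, timeout=10.0)
```

Starting `ai_task` before `co_task` mirrors the master/slave ordering described above: the AI task is armed and waiting on the counter's output before the first pulse can occur.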

04-22-2019 03:47 PM

Did not think this was possible. I have never used the triggering options in DAQmx; my only experience has been with a scope.

The OP says his trigger rate is 4 kHz, which is a period of 250 µs. I have never had any luck getting a DAQ device to download data, set up for the next acquisition, and grab data that fast without missing a point.

@croohcifer wrote: "...but in reality it will be acquiring several finite bursts in its buffer."

Doesn't this negate the point of acquiring every point? I guess the OP should define what "every point" means: every single point after the first trigger, or every point in a 100 µs burst after each trigger? I understood the former, not the latter.

mcduff

04-22-2019 03:57 PM - edited 04-22-2019 03:57 PM

My understanding, based on the OP's original statement ("retriggerable finite sample AI task with N samples"), is that the OP wants M "records", each N samples long, with one record acquired on each incoming trigger. (I'm hoping the OP will correct my assumption about the desired acquisition config if I'm wrong.) If you tried to fetch (i.e., DAQmx Read) every single record before the next one was acquired, you'd never be able to keep up before the buffer overflowed, because of the limitations Kevin mentioned. The counter and retriggering logic I described avoids that: you'd use just one DAQmx Read on your AI task per M records you want to fetch. Because DAQmx Read blocks until N*M samples are acquired, you'll never fetch a fractional record. And because the AI task is started before the counter task, the first sample in the AI buffer is guaranteed to be the first sample of an N-sample record corresponding to a specific trigger.
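The alignment argument can be checked with a toy model in Python. This is a simulation under stated assumptions, not DAQmx behavior: each trigger yields exactly N sample-clock ticks, each buffer entry is tagged `(trigger, position)`, and the hypothetical `missed_ticks` parameter models an AI task started after the counter had already emitted some ticks.

```python
from typing import List, Tuple

# (trigger index, position of the sample within its N-sample record)
Sample = Tuple[int, int]


def simulate_buffer(num_triggers: int, n: int, missed_ticks: int = 0) -> List[Sample]:
    """Toy AI buffer clocked by a retriggerable counter: N ticks per trigger.

    `missed_ticks` drops the first ticks, as if AI started after the counter.
    """
    ticks = [(t, k) for t in range(num_triggers) for k in range(n)]
    return ticks[missed_ticks:]


def read_chunks(buffer: List[Sample], m: int, n: int) -> List[List[Sample]]:
    """Consume the buffer in reads of exactly M*N samples (complete reads only)."""
    size = m * n
    return [buffer[i:i + size] for i in range(0, len(buffer) - size + 1, size)]
```

With `simulate_buffer(12, 5)` and reads of 3*5 samples, every chunk starts at position 0 of a record. With `missed_ticks=2`, the first chunk starts at `(0, 2)`, in the middle of a record, which is the failure mode that starting the slave first prevents.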

04-22-2019 04:09 PM

It's a clever way of using the counter, if the DAQ card supports it. Then, after acquisition, divide the data into separate time records. I'll have to remember it in case I need it in the future.

mcduff

04-22-2019 05:57 PM

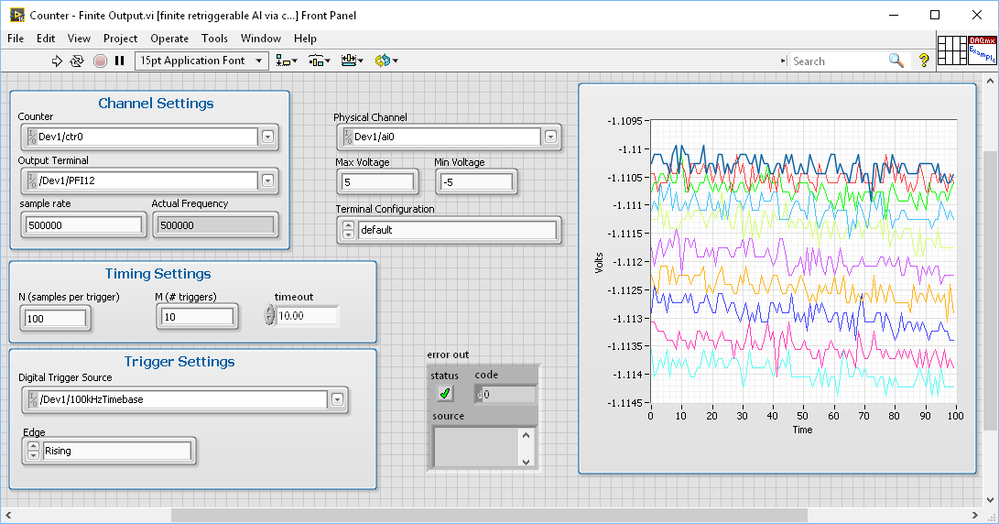

I'd say croohcifer nailed it! I threw together an example to illustrate, copy/pasting heavily from shipping examples. It ran fine on a desktop X-series board.

Notes:

1. I chose Finite sampling for the AI task with a buffer size of M*N samples. croohcifer's recommendation of Continuous sampling certainly can also work, and may be a better choice for your real app, but Finite was easier to use for illustration purposes.

2. I set the counter pulse frequency to 500 kHz with 100 pulses per trigger. Each burst therefore lasts 200 µs, which fits inside the 250 µs trigger interval of your app.

3. I configured the counter to use an internal timebase as the trigger. This let me test it with my DAQ device that isn't cabled to anything. You'll need to choose the PFI pin where the real trigger signal is wired.

4. After reading a 1D array of M*N samples from DAQmx Read, I reshaped the array to be M rows by N columns. Row i holds the N samples taken for the ith trigger. The graph shows M plots of N samples each. Again, the device isn't connected to anything so who knows what it's picking up or where from.
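The arithmetic in note 2 and the reshape in note 4 can be sketched in Python. The helper names are hypothetical, and `fits_in_trigger_period` adds no margin for counter re-arm time, which a real setup would likely need.

```python
from typing import List


def burst_duration_s(pulses_per_trigger: int, pulse_rate_hz: float) -> float:
    """Time the counter needs to emit one burst of sample-clock pulses."""
    return pulses_per_trigger / pulse_rate_hz


def fits_in_trigger_period(pulses_per_trigger: int, pulse_rate_hz: float,
                           trigger_rate_hz: float) -> bool:
    """True if one burst ends before the next trigger is due (no margin added)."""
    return burst_duration_s(pulses_per_trigger, pulse_rate_hz) < 1.0 / trigger_rate_hz


def to_records(flat: List[float], m: int, n: int) -> List[List[float]]:
    """Reshape M*N samples into M rows of N; row i is the record for trigger i."""
    if len(flat) != m * n:
        raise ValueError(f"expected {m * n} samples, got {len(flat)}")
    return [flat[i * n:(i + 1) * n] for i in range(m)]
```

With the numbers from note 2, `burst_duration_s(100, 500e3)` is 200 µs and `fits_in_trigger_period(100, 500e3, 4e3)` is true; 130 pulses at the same rate (260 µs) would not fit.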

-Kevin P

04-23-2019 05:43 AM

@croohcifer wrote:

The solution is to make a retriggerable counter output task that creates N pulses on each of M triggers at the sample rate of your choice.

Thanks for answering.

I'm already using retriggerable counter as you described.

However, my AI task must be triggered as well, because the M sets of samples are all different, i.e. they are not just the same N samples repeated M times. I have another trigger at 4/M kHz that marks when the first of the M sets begins, and the AI task is actually triggered at 4/M kHz.

Also, I'm currently using burst mode, that is, L*M*N samples fetched at a time. All L copies of M*N are the same signal. However, this way I still lose some data after every burst.
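If one burst-mode fetch is laid out as L consecutive frames, each holding M records of N samples (assumed here to be gapless within a single fetch), the flat array can be split as below. `split_bursts` is a hypothetical helper, not part of DAQmx.

```python
from typing import List


def split_bursts(flat: List[float], l: int, m: int, n: int) -> List[List[List[float]]]:
    """Split one L*M*N fetch into L frames, each holding M records of N samples."""
    if len(flat) != l * m * n:
        raise ValueError(f"expected {l * m * n} samples, got {len(flat)}")
    frame = m * n  # samples per frame (one 4/M kHz trigger period)
    return [
        [flat[f * frame + r * n: f * frame + (r + 1) * n] for r in range(m)]
        for f in range(l)
    ]
```

Indexing `split_bursts(data, l, m, n)[f][r]` then gives record r of frame f, so records with the same r can be compared across the L frames.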