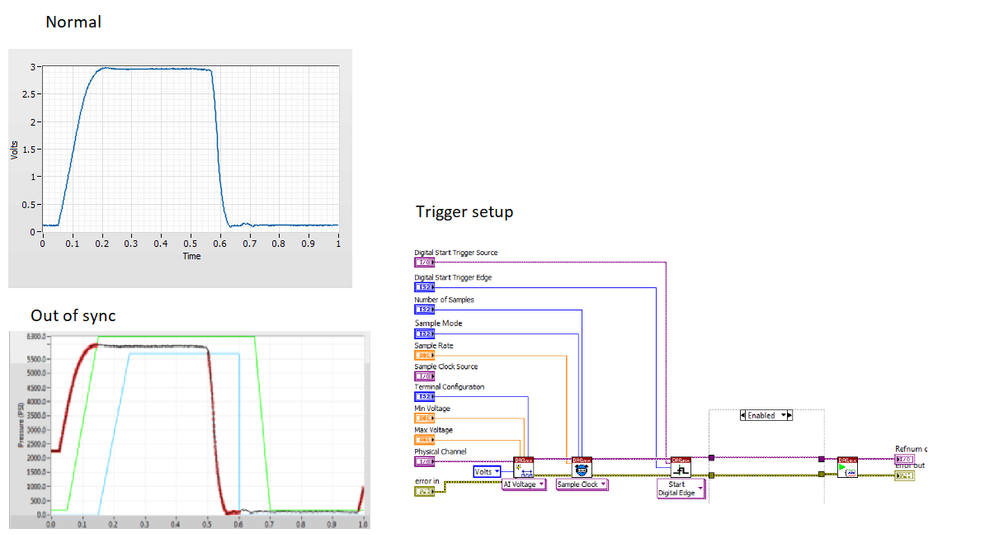

Analog signal acquisition slowly gets out of sync

Solved! 06-25-2018 01:11 PM

Has anyone seen this issue with M-Series DAQs? I am using a digital edge trigger, and the signal skews after running for hours. If I close and reopen the DAQ, it is fine. The most recent loss of sync happened after 4 hours of running continuously. I am reading 1K samples at a 1K sample rate.

Suggestions?

NI Alliance Member

LabVIEW Champion

NI Certified LabVIEW Architect

LabVIEW, LV-RT, Vision, DAQ, Motion, and FPGA

06-25-2018 02:36 PM

Can't tell from the limited info. What are the signals being graphed? Are all of them measurements taken from the same board? Or are they coming from different places (possibly some actual measurements, some expected values)?

If all signals are part of the same continuous hw-timed input task on the same board, their timing should not skew relative to one another. If they're on different boards, or you're comparing DAQmx timestamps to the PC's time-of-day realtime clock, skew should be expected.

DAQ boards are spec'ed to about 50 ppm timebase accuracy (1 part in 20000). 4 hours is 14400 sec. So a given DAQ timebase could have an inaccuracy as high as ~0.7 sec per 4 hours. The visible skew you posted is well within that range.
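The arithmetic above can be checked with a few lines of Python. This is only a sketch of the worst-case budget: the 50 ppm figure and the 4-hour run come from this thread, the 1 kS/s rate is the one from the original post, and the function name is made up for illustration.

```python
def worst_case_drift_seconds(elapsed_s: float, accuracy_ppm: float) -> float:
    """Upper bound on accumulated timing error for a free-running timebase."""
    return elapsed_s * accuracy_ppm * 1e-6


four_hours_s = 4 * 3600                     # 14400 s
drift_s = worst_case_drift_seconds(four_hours_s, 50.0)
drift_samples = drift_s * 1000.0            # expressed in samples at 1 kS/s

print(f"{drift_s:.2f} s, about {drift_samples:.0f} samples")
```

Note that this bounds the error of one timebase against ideal time. Two independent clocks (for example a DAQ board and a PLC) can each be off in opposite directions, so their relative drift can be larger.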

Solving it or compensating for it depends on more info about your setup, where these signals come from, and how their timing info gets correlated.

-Kevin P

06-26-2018 09:59 AM

Kevin,

This one is challenging because it does run for a prolonged period of time before seeing the skewed waveform. To be clear, when I close and reopen the DAQ the signal does go back into synchronization.

The signal is from a pressure gauge. It comes in on AI4 as a differential analog voltage. We have a digital signal from the PLC that indicates when it is applying the pressure. We are using this as the digital trigger. This is using a single M-Series DAQ (NI_USB_6341).

My next step would be changing the digital trigger to an analog trigger. Other suggestions are welcome.

Thanks!

Matt

06-26-2018 10:14 AM - edited 06-26-2018 10:29 AM

This is the expected result if there is no (regular or continuous) sync between the clock sources.

If the time axis is right, you have quite slow pulses (about 1 Hz).

It seems that you want to check boundaries.

Instead of a continuous capture, trigger on each pulse (with pretrigger points) and capture only 950 ms (just less than the period; rearming the trigger needs some time, see the manual/spec).

With absolute timestamping you can still measure the period, if needed.

Or, instead of always capturing 1 s of data, you can adapt the next number of points to the edges found in the last few reads, like a slow PLL. (If you use 1 kSPS, capture chunks of 1k samples, expect the pulse rising edge at 100 ms, and it slowly drifts to 60 ms (mean of the last 10 edges), just read 999 samples until you're back at 100 ms.) No missing data 🙂
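The "slow PLL" idea can be sketched in Python without any hardware. Everything below is a simulation under stated assumptions: the names (`next_chunk_size`, `simulate`), the half-sample dead band, and the 999.9-sample pulse period are invented for illustration, and the model assumes one rising edge per chunk. It only shows the bookkeeping of nudging the read length by one sample based on the mean of the last 10 edge positions.

```python
from collections import deque

NOMINAL_CHUNK = 1000   # samples per read at 1 kSPS
TARGET_EDGE = 100.0    # desired rising-edge index inside each chunk (100 ms)


def next_chunk_size(recent_edges, target=TARGET_EDGE, nominal=NOMINAL_CHUNK):
    """Choose the next read length from the mean of recent edge positions."""
    mean_edge = sum(recent_edges) / len(recent_edges)
    if mean_edge < target - 0.5:
        return nominal - 1   # edge arrives early: a shorter read pushes it later
    if mean_edge > target + 0.5:
        return nominal + 1   # edge arrives late: a longer read pulls it earlier
    return nominal


def simulate(pulse_period_samples, n_chunks=5000):
    """Track the edge index per chunk when the pulse period is not exactly 1000."""
    edge = TARGET_EDGE
    recent = deque([edge], maxlen=10)   # mean of the last 10 edges, as suggested
    history = []
    for _ in range(n_chunks):
        chunk = next_chunk_size(recent)
        # The next edge lands one pulse period later; the next chunk starts
        # `chunk` samples later, so the edge index shifts by the difference.
        edge += pulse_period_samples - chunk
        recent.append(edge)
        history.append(edge)
    return history


history = simulate(pulse_period_samples=999.9)   # hypothetical PLC period
worst_offset = max(abs(e - TARGET_EDGE) for e in history)
print(f"worst edge offset: {worst_offset:.1f} samples")
```

With a fixed 1000-sample read, the same hypothetical period would move the edge by 0.1 sample per chunk, or about 500 samples over these 5000 chunks. Because every sample is still read exactly once, no data is dropped.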

Henrik

LV since v3.1

“ground” is a convenient fantasy

'˙˙˙˙uıɐƃɐ lɐıp puɐ °06 ǝuoɥd ɹnoʎ uɹnʇ ǝsɐǝld 'ʎɹɐuıƃɐɯı sı pǝlɐıp ǝʌɐɥ noʎ ɹǝqɯnu ǝɥʇ'

06-26-2018 11:00 AM

All,

I found this great DAQ finite trigger example code after digging through many threads. I am running it today to see if this resolves my current issue. Thanks to everyone for your suggestions!!

Finite Acquisition with a Software Retriggerable Reference Trigger in DAQmx

06-26-2018 12:31 PM - edited 06-26-2018 12:35 PM

It still isn't clear to me exactly how you define "out of sync". You showed a graph with 3 plots to illustrate "out of sync" but haven't described what those different plots represent.

Between Henrik_Volkers' intuitions and your subsequent attempt to solve things with software re-triggering, I'm suspecting that the essential problem is that the PLC and the DAQ board are independent timing sources. The PLC is pulsing at, maybe, 1 Hz according to its own timebase. The DAQ board has a requested sample rate that it will try to achieve according to its own timebase. If you run the task continuously for hours, but read and split data into "1 second" chunks to compare cycle to cycle changes, then timing skew would definitely be expected.

Some skew comes from the relative inaccuracies of the two timebases. But there could be another contributor if your requested sample rate can't be achieved exactly. The AI subsystem creates a sample clock via integer division of the board's main timebase. Let's say you request a sample rate of 130 kHz on an M-series board with an 80 MHz timebase. The ratio is ~615.4. The actual sample rate will be based on a nearby integer divisor, either 615 or 616 (there are consistent rules for how it "rounds", I just don't remember offhand), leading to an actual sample rate of either ~130081 or ~129870 Hz.

So, if you were to break the AI data into 130000 sample chunks, *believing* that each chunk represented 1.000000 seconds of data, that'd be wrong. They'd represent either a little more or a little less than 1.000000 seconds. Over time, that'd accumulate to look like timing skew.
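To put numbers on the divider example, here is a small Python sketch. It uses only the 80 MHz timebase and the 130 kHz request from the post above. Which divisor the hardware actually picks is not asserted, so both candidates are shown, and the variable names are illustrative.

```python
TIMEBASE_HZ = 80_000_000
REQUESTED_HZ = 130_000

print(f"ideal divisor: {TIMEBASE_HZ / REQUESTED_HZ:.2f}")

results = {}
for divisor in (615, 616):
    actual_hz = TIMEBASE_HZ / divisor
    # True duration of a chunk that is *assumed* to last exactly 1 second.
    chunk_s = REQUESTED_HZ / actual_hz
    # Error accumulated after 3600 such chunks (nominally one hour of data).
    skew_per_hour_s = (chunk_s - 1.0) * 3600
    results[divisor] = (actual_hz, chunk_s, skew_per_hour_s)
    print(f"divisor {divisor}: {actual_hz:.1f} Hz, "
          f"chunk = {chunk_s:.6f} s, skew = {skew_per_hour_s:+.2f} s/hour")
```

Under the same 80 MHz assumption, a requested rate of 1 kHz divides exactly (80 MHz / 1 kHz = 80000), so this particular contributor would vanish at that rate and only the timebase inaccuracy would remain.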

Your latest approach is to repeatedly iterate in software over a short finite triggered task. This will re-establish sync for each finite set of data, and will eliminate any accumulation of both kinds of skew mentioned above. If your original problem is fairly similar to things Henrik and I have speculated about, you should be well on your way to having things solved.

-Kevin P

06-26-2018 01:07 PM

You are correct. It is an accumulating skew in the time domain. It shouldn't skew when connected to a digital trigger but seems to be happening in my case. I will post my results later this week. I do believe that using the finite trigger will fix this issue.

Matt

01-08-2019 06:27 AM

Hi,

I am using a 6211 DAQ device and I need to find the channel skew. In MATLAB, the legacy interface has the command chskew = get(ai, 'ChannelSkew');. Is there an equivalent of this command in the session-based interface, or another way to find it?

Thank you. I appreciate any feedback.

01-08-2019 07:45 AM

Looks like my solution was to use an NI example of a finite trigger with pre-trigger samples. I start and stop the acquisition every time I acquire the signal.

Hope that helps,

Matt
