timed average data

02-13-2015 06:15 PM

Hi,

I have configured my LabVIEW program to write data to an Excel sheet, but it produces too much data for the experiment I am conducting. I would like my program to take data continuously but only report the average of the data taken every 10 minutes. What would be the best way to do this?

Any help is appreciated!

02-13-2015 06:41 PM

Mike...

Certified Professional Instructor

Certified LabVIEW Architect

LabVIEW Champion

"... after all, He's not a tame lion..."

For help with grief and grieving.

02-14-2015 02:58 PM

If this is a serious program that you (and others) need to use and maintain, you really need to learn better LabVIEW Style, including how to break things up into sub-VIs for the purpose of "hiding the messy details". Peter Blume wrote an excellent book on LabVIEW Style, which (when I was starting out in LabVIEW) made a tremendous impression on me, and almost overnight made my LabVIEW code an order of magnitude "better". One good rule of thumb is "The entire block diagram should fit on a standard screen, e.g. 1024 x 768". [I have a colleague who cheated -- his LabVIEW Block Diagrams didn't fit on two "big" monitors, so he bought two "bigger" ones -- it didn't help, spaghetti code is still spaghetti code, with or without tomato sauce].

One of the best ways to debug your code is to ask someone why it doesn't work. They do not need to know LabVIEW for this to be helpful -- just walk them through your Block Diagram, explaining what is going on. The graphical nature of LabVIEW makes this possible, even for non-programmers to follow (don't try this with your young children, unless they're truly exceptional, but almost any college student with a smattering of mathematics should be able to "ask intelligent questions" that make you say "Ohh, I missed that ..."). Of course, if your code is disorganized, all bets are off ...

Did I say "Style is Important"?

Bob Schor

02-14-2015 03:40 PM

(Extra points if the duck actually understands what you told it...)

Mike...

02-20-2015 05:01 PM

Thank you for your reply!

I do intend to clean everything up once I have finished the program but for me it is easier to see it all in one program as I am changing and reworking it. The final product will be much easier to view though.

In terms of my question, I figure that reporting every 10 minutes requires me to change the sampling rate and number of samples in my DAQmx task. The highest rate I am able to sample at is 41 Hz, though. Any thoughts on why it won't go higher? I don't know a lot about programming, but I'm assuming that if I want data every 10 minutes, I would just multiply the rate by the number of seconds (600 seconds = 10 minutes), and that number would be the number of samples I input?
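The multiplication itself is right: one channel sampled at F Hz produces F × 600 samples in a 10-minute window. A tiny Python sketch of that arithmetic (the function name is just for illustration, not part of any DAQmx API):

```python
def samples_per_window(rate_hz: float, window_s: float) -> int:
    """Number of samples one channel produces in window_s seconds at rate_hz."""
    return round(rate_hz * window_s)

TEN_MINUTES_S = 10 * 60  # 600 s

print(samples_per_window(41, TEN_MINUTES_S))    # 24600
print(samples_per_window(1000, TEN_MINUTES_S))  # 600000
```

Whether to request all of those samples in a single read is a separate question from how many samples go into each average.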

02-20-2015 06:18 PM

Just bumping up the number of requested samples is a poor way of running for an extended period. It is better to use a For Loop or While Loop in which the number of samples requested per read is less than or equal to the rate. For example, if your rate is 1000 S/s, don't request more than 1000 samples per read. A common choice is 1/10 of the rate (100 samples per read at 1000 S/s, i.e. one read about every 0.1 s). With the large number of samples you suggest, your GUI would be totally unresponsive.
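Since a LabVIEW diagram can't be pasted as text, here is a rough Python sketch of the loop described above: read about rate/10 samples per iteration, keep a running sum, and emit one average each time a full window (rate × window seconds) has been read. `read_chunk` is a hypothetical stand-in for a DAQmx Read call and returns simulated data; on real hardware each read would block until the samples arrive.

```python
import random
from typing import Callable, Iterator, List

def read_chunk(n: int) -> List[float]:
    """Hypothetical stand-in for a DAQ read of n samples from one channel."""
    return [random.gauss(5.0, 0.1) for _ in range(n)]

def windowed_averages(read: Callable[[int], List[float]],
                      rate_hz: int,
                      window_s: int,
                      n_windows: int) -> Iterator[float]:
    """Read in chunks of about rate/10 samples; yield one mean per window."""
    chunk = max(1, rate_hz // 10)        # roughly 0.1 s of data per read
    per_window = rate_hz * window_s      # samples that go into one average
    for _ in range(n_windows):
        total, count = 0.0, 0
        while count < per_window:
            need = min(chunk, per_window - count)  # never read past the window
            data = read(need)
            total += sum(data)
            count += len(data)
        yield total / count

if __name__ == "__main__":
    # With the simulated reader this returns at once; on hardware at
    # 1000 S/s one 600 s window would take 10 real minutes.
    for avg in windowed_averages(read_chunk, rate_hz=1000, window_s=600, n_windows=1):
        print(f"10-minute average: {avg:.3f}")
```

Only the running sum and count are kept, so memory use stays small no matter how long the window is.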

02-21-2015 06:16 PM

> I do intend to clean everything up once I have finished the program but for me it is easier to see it all in one program as I am changing and reworking it. The final product will be much easier to view though.

Many beginners who know very little about programming would agree with your statement; almost all experienced programmers would say you are 100% wrong.

The best way to start a non-trivial program is to Write the Documentation (first!). Among other things, this forces you to put down the parameters of the problem and to segment it in ways that might suggest Good Programming Practices. Had you done that and shared this with us, some of the questions (What is your sampling rate? How much data do you want to take? What do you want to do with the data? How will you save the data? What are you using to take data?) would be answered, and we could be more helpful.

I'm going to take a stab at what might be in such a document, and suggest how this would lead to "clear thinking" about how to program it. It might not apply to your situation, so feel free to modify.

Until I say to stop, do the following:

- Sample N channels of (analog) data at a rate of F Hz (almost any system worth its salt can sample at F = 1000 Hz).

- Stream all of the data (without losing any) to a (binary, TDMS, etc.) file on disk.

- Simultaneously, accumulate an average over M (points, minutes, you specify) and write the average to an Excel Spreadsheet.
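Writing the specification down as data makes the derived numbers explicit. A small Python sketch, assuming the averaging window M is given in seconds and using illustrative values (4 channels, 1000 Hz, 10 minutes); the class and field names are hypothetical:

```python
from dataclasses import dataclass

@dataclass(frozen=True)
class AcquisitionSpec:
    """The 'write the documentation first' parameters, written down as data."""
    channels: int          # N: number of analog channels
    rate_hz: int           # F: sample rate per channel
    average_window_s: int  # M: averaging window, assumed here to be in seconds

    @property
    def samples_per_read(self) -> int:
        # about 0.1 s of data per read (1/10 of the rate)
        return max(1, self.rate_hz // 10)

    @property
    def samples_per_average(self) -> int:
        # samples per channel that go into one averaged value
        return self.rate_hz * self.average_window_s

spec = AcquisitionSpec(channels=4, rate_hz=1000, average_window_s=600)
print(spec.samples_per_read, spec.samples_per_average)  # 100 600000
```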

Now think about this. You've specified 3 (or 4) actions that need to take place simultaneously -- sampling (at, say, 1 kHz), writing to disk (in such a way as not to lose samples), accumulating averages, and writing to Excel. Trust me, you don't want to try to make a huge Block Diagram with all the details of this on one picture.

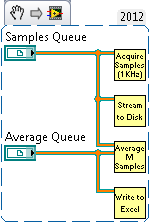

But if you step back a bit and think about it, the following might suggest itself: Split the task into four parallel tasks. One samples data (at, say, 1 kHz) and puts the data into a Queue (a data structure useful for passing data around to other functions on a First In, First Out basis), the second takes the data from the Queue and writes it to disk, the third takes the data from the Queue and constructs an average, which it puts on an "Averaged Queue", and the fourth writes the Averaged data to Excel.

There it is! You can glance at this and see what the entire program is supposed to do, all at once! Now, some details are missing, like how to do each of the sub-tasks, but you can do a further "divide and conquer" there as well.
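For readers who think in text, here is one way to sketch those four parallel tasks in Python, with `threading` standing in for LabVIEW's parallel loops and `queue.Queue` for LabVIEW Queues. The data is simulated, one list stands in for the disk file and another for the Excel sheet, and all names are hypothetical. Rather than letting two consumers dequeue from the same Queue, the producer here puts every chunk on a separate queue for each consumer, so both see all of the data.

```python
import queue
import threading
from typing import List

STOP = None  # sentinel telling a consumer that acquisition has ended

def acquire(outs: List[queue.Queue], n_chunks: int, chunk: int) -> None:
    """Producer: simulated DAQ; sends every chunk to each consumer's queue."""
    value = 0.0
    for _ in range(n_chunks):
        data = [value + i for i in range(chunk)]
        value += chunk
        for q in outs:              # one queue per consumer, nobody steals data
            q.put(data)
    for q in outs:
        q.put(STOP)

def stream_to_disk(q: queue.Queue, sink: List[float]) -> None:
    """Consumer 1: 'writes' every sample (a list stands in for the file)."""
    while (data := q.get()) is not STOP:
        sink.extend(data)

def average(q: queue.Queue, out: queue.Queue, per_avg: int) -> None:
    """Consumer 2: averages per_avg samples and puts each mean on out."""
    total, count = 0.0, 0
    while (data := q.get()) is not STOP:
        for x in data:
            total += x
            count += 1
            if count == per_avg:
                out.put(total / count)
                total, count = 0.0, 0
    out.put(STOP)                   # pass the stop signal downstream

def write_averages(q: queue.Queue, rows: List[float]) -> None:
    """Consumer 3: stands in for the Excel writer."""
    while (avg := q.get()) is not STOP:
        rows.append(avg)

raw_q, avg_in_q, avg_out_q = queue.Queue(), queue.Queue(), queue.Queue()
disk: List[float] = []
excel: List[float] = []
threads = [
    threading.Thread(target=acquire, args=([raw_q, avg_in_q], 10, 100)),
    threading.Thread(target=stream_to_disk, args=(raw_q, disk)),
    threading.Thread(target=average, args=(avg_in_q, avg_out_q, 500)),
    threading.Thread(target=write_averages, args=(avg_out_q, excel)),
]
for t in threads:
    t.start()
for t in threads:
    t.join()
print(len(disk), excel)  # 1000 [249.5, 749.5]
```

Each function is small enough to read at a glance, which is the point of splitting the job into sub-VIs.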

If you understand LabVIEW's DataFlow model, you will recognize that all four of these sub-VIs are designed to run at the same time, in parallel. Think about how "data flows" in this routine. The first VI produces data at a fixed rate (here, 1 kHz). The second consumes this data and writes it to disk -- as long as the "wire" (a Queue) connecting them can "expand" a bit if necessary, and disk writing is faster than 1000 samples/second (I think even a floppy disk is at least this fast!), no data should be lost. The third VI also gets the samples and averages M of them, sending the average out on another Queue, which goes to the final VI that writes the data to Excel.
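The "no data lost" condition is simple arithmetic. Assuming each sample is stored as an 8-byte double, the writer must sustain channels × rate × 8 bytes per second; if it consumes samples more slowly than they are produced, the queue's backlog grows by the difference every second. A Python sketch with illustrative numbers (the function names are hypothetical):

```python
def stream_bytes_per_second(channels: int, rate_hz: float,
                            bytes_per_sample: int = 8) -> float:
    """Raw data rate the disk writer must sustain (8 bytes = one double)."""
    return float(channels * rate_hz * bytes_per_sample)

def backlog_after(seconds: float, produce_rate: float, consume_rate: float) -> float:
    """Samples left waiting in the queue if the consumer is slower than the producer."""
    return max(0.0, float((produce_rate - consume_rate) * seconds))

print(stream_bytes_per_second(1, 1000))  # 8000.0 bytes/s
print(stream_bytes_per_second(8, 1000))  # 64000.0 bytes/s
print(backlog_after(600, 1000, 900))     # 60000.0 samples after 10 minutes
print(backlog_after(600, 1000, 1200))    # 0.0, the writer keeps up
```

A queue can absorb a temporary slowdown, but a writer that is consistently slower than the producer only delays the problem.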

Now before my LabVIEW Expert Colleagues start yelling at me about the Stupid Error I Made with Queues (I have both the second and third VI taking data off the Queue -- a no-no), the point here isn't that this Very Simple Routine is 100% correct, but that it is clear and "gives you the Courage to Do what Needs to be Done". [If I were doing this "for real", I'd put the Averaging routine "inside" the Streaming routine, and let the Streaming Routine export the Average Queue].
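The fix described here, putting the averaging inside the streaming routine so that only one consumer ever dequeues the raw data, might look like this as a Python sketch. A list stands in for the file, `None` is an assumed stop sentinel, and the function name is hypothetical:

```python
import queue
import threading
from typing import List

def stream_and_average(raw_q: queue.Queue, avg_q: queue.Queue,
                       sink: List[float], per_avg: int) -> None:
    """Sole consumer of raw_q: streams every sample and exports an
    average every per_avg samples on avg_q."""
    total, count = 0.0, 0
    while (data := raw_q.get()) is not None:  # None = acquisition finished
        sink.extend(data)                     # stand-in for the file write
        for x in data:
            total += x
            count += 1
            if count == per_avg:
                avg_q.put(total / count)
                total, count = 0.0, 0
    avg_q.put(None)                           # pass the stop signal along

raw_q, avg_q = queue.Queue(), queue.Queue()
disk: List[float] = []
worker = threading.Thread(target=stream_and_average, args=(raw_q, avg_q, disk, 4))
worker.start()
for chunk in ([1.0, 2.0], [3.0, 4.0], [5.0, 6.0], [7.0, 8.0]):  # simulated reads
    raw_q.put(chunk)
raw_q.put(None)
worker.join()

averages = []
while (a := avg_q.get()) is not None:
    averages.append(a)
print(len(disk), averages)  # 8 [2.5, 6.5]
```

The Excel writer then reads only from the Average Queue and never touches the raw data.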

But, hey, feel free to continue to put all of your code "in one basket" (I have a colleague who persists in doing this, even after two years of working with him, "because I'm just testing to see if it works" -- I get called when he can't figure out why it doesn't work, and I'm fond of saying "First tell me what you want to do (a.k.a. Write the Documentation) and then write multiple VIs to do Big Tasks"). However, if you ask for help, (a) first tell us what you want to do, and (b) try not to give us everything in one huge diagram.

Bob Schor

P.S. -- Please don't take this personally. Even though I had several decades of programming (at least 5 languages) when I started with LabVIEW, my first LabVIEW programs were pretty messy, too. However, within a month of starting to learn/use LabVIEW, I bought Peter Blume's book, and haven't looked back.

03-04-2015 06:47 PM

I am new to programming, so I am unsure how to fix this problem. How would I determine whether I am using single-point acquisition, and if I am, what would I do to change it?

03-04-2015 09:57 PM

03-06-2015 04:54 PM

I have my DAQmx Read set for N Channels and N Samples. I have a control set up for number of samples and have always been using numbers larger than 1.

Any other ideas how I could speed up my rate?