simultaneous read/write a binary file

11-04-2018 05:12 AM

Hi,

In my test sequencer I have an exe running in parallel that samples a DIO and writes the status into a binary file, according to the following logic:

1. Read results are placed in a binary file containing a cluster with 3 2d arrays.

2. If a field in that binary file was changed by the sequencer, the engine writes that change to the hardware.

At first, each sequencer step that requested a read/write opened an STM TCP client request to the engine in the exe, but this was very slow.

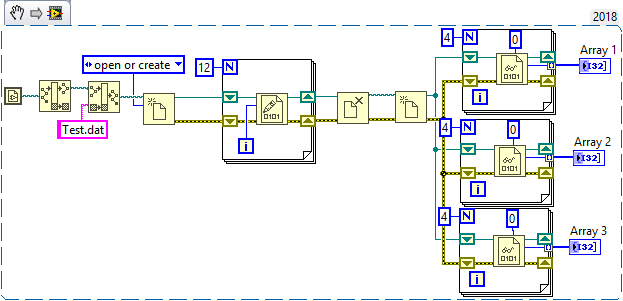

The file mechanism works great, but I wanted to check it for potential collisions, as you can see in the attached file (it simulates several exes accessing the same binary file at the same time).

The VI runs with no error at first but if you change the 0 input to the index you'll run into error 116 for example.

I tried deny access, I tried using the same ref or different refs, I tried flushing, I tried using file size yet nothing worked.

I think the issue is the file growing in size in parallel with a read, since I made one of the fields grow in bytes.

Why doesn't deny access prevent this?

I would expect a file access violation which I would then ignore and try again until success.

Thanks in advance

11-04-2018 12:11 PM

You don't seem to understand the Principle of Data Flow, nor how Files work. You are reading one file in parallel several times -- Data Flow should tell you that the results that you read will be "unpredictable" from time to time. Here's a Snippet of a routine you could try and "see for yourself" -- each time you run it, the results in the three Output Arrays will (probably) be different, and may not include all of the numbers in the original created file.

Redesign (and rethink) your routine.

Bob Schor

11-04-2018 02:23 PM

A file is a serial device and you cannot do parallel operations in any case (You don't have a read head for each bit, right?)

All you can do is open the file for read and write outside the loops and then hammer it in the various loops as fast as the computer allows as you currently do. Seems rather pointless! Whatever writes last wins. Your fields are also variable length, making the entire operation relatively inefficient. Also, since you constantly close and reopen the file, the file position is not retained.

It might be better to keep the file contents in an action engine in memory. The action engine could occasionally write the contents to disk if that's needed.
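As a rough text-language analogue of that idea (the OP mentions Python), here is a minimal sketch of state kept in memory behind a lock and only occasionally written to disk. The class name `StateEngine`, the JSON format and the `.tmp` suffix are assumptions made for illustration; this is not LabVIEW's action engine mechanism, and it only serializes access within one process.

```python
import json
import threading
from pathlib import Path


class StateEngine:
    """Hypothetical in-memory holder, loosely analogous to an action engine."""

    def __init__(self, backing_file: Path):
        self._lock = threading.Lock()      # serializes every access to the state
        self._state = {}                   # the "file contents", kept in memory
        self._backing_file = backing_file

    def write(self, key, value):
        with self._lock:
            self._state[key] = value

    def read(self, key, default=None):
        with self._lock:
            return self._state.get(key, default)

    def flush(self):
        """Persist a snapshot; this is the only method that touches the disk."""
        with self._lock:
            snapshot = json.dumps(self._state)
        # Write to a temporary file first, then swap it in, so a reader
        # never sees a half-written file (assumes both are on one volume).
        tmp = self._backing_file.with_suffix(".tmp")
        tmp.write_text(snapshot)
        tmp.replace(self._backing_file)
```

Callers would use `write` and `read` as often as they like and call `flush` only when a copy on disk is actually needed.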

(You should also replace all your value property nodes with local variables.)

11-04-2018 02:32 PM

@altenbach wrote:

A file is a serial device and you cannot do parallel operations in any case (You don't have a read head for each bit, right?)

Just curious here, wondering if that will change with the proliferation of SSDs. Can they read/write in parallel, there is no head reading a spinning disk? Maybe the whole OS needs to change to allow parallel access if possible with SSDs.

@OP

altenbach and Bob Schor are correct, everything for the file system gets queued up and operated on individually not in parallel, maybe with the exception of different files on different disk drives, rolfk probably has a greater insight to this.

11-04-2018 02:37 PM

@mcduff wrote:

Just curious here, wondering if that will change with the proliferation of SSDs. Can they read/write in parallel, there is no head reading a spinning disk? Maybe the whole OS needs to change to allow parallel access if possible with SSDs.

The interface connector is the critical section and it has fewer pins than you have storage bits on the device. It is a shared resource. Writing to an SSD is still significantly slower than any memory operation.

11-04-2018 02:41 PM

11-04-2018 02:53 PM

There is still a limited number of bus lines on the motherboard and on the CPU(s).

(Maybe in 50 years with quantum computers, holographic memory and alien Area 51 technology :D)

11-05-2018 02:27 AM - edited 11-05-2018 02:39 AM

@mcduff wrote:

@altenbach wrote:

A file is a serial device and you cannot do parallel operations in any case (You don't have a read head for each bit, right?)

Just curious here, wondering if that will change with the proliferation of SSDs. Can they read/write in parallel, there is no head reading a spinning disk? Maybe the whole OS needs to change to allow parallel access if possible with SSDs.

@OP

altenbach and Bob Schor are correct, everything for the file system gets queued up and operated on individually not in parallel, maybe with the exception of different files on different disk drives, rolfk probably has a greater insight to this.

An SSD doesn't make any difference in this respect. The problem is not the speed of the interface. Even if it were infinitely fast you would still have a problem with a scheme like this.

Sharing a file between two applications is VERY tricky. You can do it if you have a way to guarantee that both applications always write to completely different locations in the file so that they NEVER overlap. That requires designing a layout, determining offsets into the file that are safe to write, and then sticking to them stringently. This is done, for instance, with memory-mapped files to get very fast inter-application communication. Without memory mapping, the transfer back and forth is severely limited by the disk I/O interface even when using SSDs (the new NVMe SSDs would probably get somewhat closer to mapped-memory performance, though still not the same).
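To make the "fixed layout, never overlap" idea concrete, here is a minimal Python sketch using the standard `mmap` module. The layout (`REGION_A`, `REGION_B`, four 32-bit integers per region) and the function names are invented for illustration; a real layout would have to be agreed on by every application that touches the file.

```python
import mmap
import struct

# Hypothetical fixed layout: each side owns one non-overlapping region.
REGION_A = (0, 16)    # (offset, size) written only by application A
REGION_B = (16, 16)   # (offset, size) written only by application B
FILE_SIZE = 32


def create_shared_file(path):
    """Pre-size the file so it never grows while someone else reads it."""
    with open(path, "wb") as f:
        f.write(b"\x00" * FILE_SIZE)


def write_region(path, region, values):
    """Write four 32-bit integers into one region and nowhere else."""
    offset, size = region
    payload = struct.pack("<4i", *values)
    assert len(payload) == size
    with open(path, "r+b") as f, mmap.mmap(f.fileno(), FILE_SIZE) as m:
        m[offset:offset + size] = payload
        m.flush()


def read_region(path, region):
    """Read the four integers stored in one region."""
    offset, size = region
    with open(path, "r+b") as f, mmap.mmap(f.fileno(), FILE_SIZE) as m:
        return struct.unpack("<4i", m[offset:offset + size])
```

Because the file has a fixed size and each writer stays inside its own region, a write by one side does not move or resize the data the other side is reading. It does not by itself stop a reader from seeing a region that is only partly updated.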

But communicating like this between two applications is very tricky and requires very stringent discipline to make sure that you are not writing to the same location from both sides. With LabVIEW file functions you will need to use the Get/Set File Position functions a lot to get that kind of control over where exactly to write in a file.

Without Get/Set File Position the LabVIEW File Write function works fully sequentially: it starts either at offset 0 when the file is opened, or at the End of File position when you requested to open the file in append mode, and then advances the file offset by the number of bytes written on every write. Everything else you have to do explicitly and VERY VERY carefully.

And if you want to simulate that in one LabVIEW application you have to open the file twice. The current file position offset is maintained per file handle (LabVIEW file refnum).
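The same per-handle behaviour can be seen in other environments. The following Python sketch (the function name and file contents are made up for the demonstration) opens one file twice and uses `seek`, roughly the counterpart of Set File Position, to show that each handle keeps its own offset.

```python
def demo_independent_positions(path):
    """Open the same file twice and show that each handle has its own offset."""
    with open(path, "wb") as f:
        f.write(b"0123456789")

    # buffering=0 keeps Python from caching writes, so each call goes to the OS.
    a = open(path, "r+b", buffering=0)
    b = open(path, "r+b", buffering=0)
    try:
        a.seek(4)        # position handle A explicitly
        b.seek(8)        # handle B is moved independently of A
        b.write(b"XY")   # written at offset 8
        a.write(b"ab")   # still written at offset 4, unaffected by B
        positions = (a.tell(), b.tell())
    finally:
        a.close()
        b.close()

    with open(path, "rb") as f:
        return positions, f.read()
```

Each write lands where its own handle was positioned, and each handle advances only by the bytes it wrote itself.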

An analogy, although maybe not completely correct, would be handshaking through digital lines between two machines. You can't just use one digital line in bidirectional mode to let machine A tell machine B that it needs to do something and then let machine B acknowledge when it is done. You need either separate digital lines for each signal and direction (separate, distinct areas in the file) or a protocol that allows both machines to communicate over that single line (a carefully designed access scheme in the file with discretely implemented semaphores, notifiers and all that kind of stuff, and yes, if that sounds complicated, that is because it is).

11-05-2018 06:54 AM

Thanks for the replies,

This code is broken to show the problem.

I tried to solve it using Deny Access, hoping that it would bring some data flow back into this unpredictable simulation.

It didn't or I don't know how to use it correctly.

The question is actually: how do you serialize the access to the file from different EXEs? Speed and order are not important.

I don't even mind reading and writing the entire file each time with open+close.

However, a generic solution that I can use from both Python and LabVIEW is important.

When I try to open an Excel file that is already open I get a "read only" alert. I thought Deny Access does exactly that and would allow me to:

1. Try to get write permission

2. Lock the file if you got the write permission till read/write ends

3. Release the file for the next operation
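One language-neutral way to get these three steps is a separate lock file that is created exclusively, rather than relying on Deny Access. Here is a minimal Python sketch, assuming every participating program agrees on the hypothetical `<data file>.lock` naming convention. It is advisory only: it protects nothing against a program that ignores the lock file, and a crashed process can leave a stale lock behind.

```python
import os
import time
from contextlib import contextmanager


@contextmanager
def exclusive_access(data_path, timeout=5.0, poll=0.01):
    """Hold a hypothetical '<data_path>.lock' file while the body runs."""
    lock_path = str(data_path) + ".lock"
    deadline = time.monotonic() + timeout
    while True:
        try:
            # Step 1: O_CREAT | O_EXCL fails if the lock file already exists,
            # so only one process at a time can create it.
            fd = os.open(lock_path, os.O_CREAT | os.O_EXCL | os.O_WRONLY)
            break
        except FileExistsError:
            if time.monotonic() >= deadline:
                raise TimeoutError(f"could not lock {data_path}")
            time.sleep(poll)  # someone else holds it: wait and try again
    try:
        yield  # Step 2: the caller reads/writes the data file here
    finally:
        os.close(fd)
        os.remove(lock_path)  # Step 3: release for the next operation
```

A caller would wrap each whole read or write of the data file in `with exclusive_access("status.bin"):`, where the file name is only a placeholder. On the LabVIEW side the same convention could presumably be followed by creating the lock file with an open mode that returns an error when the file already exists, then deleting it when done.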

11-05-2018 10:24 AM

Or … you only have 1 exe write to the file and have them communicate via TCP/IP to each other. 🙂

That way you can send the data you want written to the other exe and it'll write it.
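A minimal Python sketch of that single-writer arrangement, using only the standard library. The newline-terminated record format, the `OK` acknowledgement and the names `WriterHandler`, `start_writer` and `send_record` are assumptions made for illustration; this is not the STM protocol mentioned earlier in the thread.

```python
import socket
import socketserver
import threading


class WriterHandler(socketserver.StreamRequestHandler):
    """Appends each received line to the file owned by the server."""

    def handle(self):
        for line in self.rfile:
            # Only the server process ever opens the data file for writing.
            with self.server.file_lock:
                with open(self.server.data_path, "ab") as f:
                    f.write(line)
            self.wfile.write(b"OK\n")  # acknowledge that the record was stored


def start_writer(data_path, host="127.0.0.1", port=0):
    """Start the single writer; port 0 lets the OS pick a free port."""
    server = socketserver.ThreadingTCPServer((host, port), WriterHandler)
    server.data_path = data_path
    server.file_lock = threading.Lock()
    threading.Thread(target=server.serve_forever, daemon=True).start()
    return server


def send_record(address, record: bytes):
    """Client side: ask the writer process to store one record."""
    with socket.create_connection(address) as sock:
        sock.sendall(record + b"\n")
        with sock.makefile("rb") as reply:
            return reply.readline().strip()
```

This sketch opens a new connection per record for simplicity. Since the OP found per-step connections slow, a client that keeps one connection open and sends many records over it would likely be the better fit.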

/Y