multicore toolkit

12-09-2017 06:05 PM - last edited on 01-08-2026 09:05 AM by Content Cleaner


Hi,

I am using the "Multicore Analysis and Sparse Matrix" (MASM) toolkit. Does this toolkit replace the parallel For Loop?

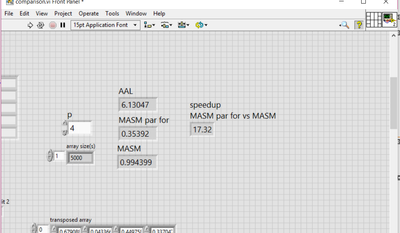

I used the parallel For Loop with this toolkit in a simple matrix multiplication example, and I got a higher speedup than with MASM alone. I have a Core i7-7700HQ with 8 logical cores. Could anyone explain these result values? The maximum speedup should be 8x (is that right?).

In the NI manual I found this note: "National Instruments does not recommend using the Multicore Analysis and Sparse Matrix VIs within thread control structures, such as the parallel For Loop, Timed Loop, or Timed Sequence. Using thread control structures might cause thread scheduling conflicts and diminish the performance benefit of a multicore system. In general, avoid running other computationally intensive tasks at the same time as the Multicore Analysis and Sparse Matrix VIs."

12-09-2017 06:58 PM


Know nothing about the note, but does your processor have hyperthreading? Then 8*2 = 16 which is about the speedup you saw.

mcduff

12-10-2017 01:59 AM - edited 12-10-2017 02:37 AM


We need to see how you are testing.

The front panel in your picture is completely meaningless (you don't even show all dimensions). And no, a hyper-threaded core (= 2 virtual cores) does not give a 2x speedup over a non-hyper-threaded core. You have four hyper-threaded cores, so a 4x speedup over a single core is about what you can expect.

What functions are you testing? The MASM functions operate on matrices directly, so why do you even need a loop? What are you doing with it? How is the parallel FOR loop configured? What are the execution properties of the testing VI? How do you measure the execution time?

We can compare the following:

- A multiplication of two large matrices using the regular matrix multiplication of the linear algebra palette

- A multiplication of two large matrices using the matrix multiplication of the MASM toolkit

- Maybe a homemade explicit matrix multiplication using simple primitives and comparing parallel/regular FOR loops.

Please show us your entire benchmarking code. Something is clearly wrong here.
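
As a rough text illustration of that comparison (LabVIEW diagrams can't be pasted as text), here is a minimal Python sketch. Everything in it is an assumption for illustration: `matmul_loops` and `matmul_columns` are hypothetical stand-ins for a "homemade" and an alternative implementation, not the LabVIEW or MASM VIs. The point is only the procedure: check that both variants agree, time each one, then report the ratio as the speedup.

```python
import time


def matmul_loops(a, b):
    """Explicit triple-loop product of two matrices given as lists of rows."""
    rows, inner, cols = len(a), len(b), len(b[0])
    c = [[0.0] * cols for _ in range(rows)]
    for i in range(rows):
        for j in range(cols):
            total = 0.0
            for k in range(inner):
                total += a[i][k] * b[k][j]
            c[i][j] = total
    return c


def matmul_columns(a, b):
    """Same product, written with pre-extracted columns of b and sum()."""
    cols = list(zip(*b))
    return [[sum(x * y for x, y in zip(row, col)) for col in cols] for row in a]


def time_call(func, *args):
    """Return (elapsed seconds, result) for a single call of func(*args)."""
    start = time.perf_counter()
    result = func(*args)
    return time.perf_counter() - start, result


def speedup(t_reference, t_candidate):
    """Speedup of the candidate relative to the reference (> 1 means faster)."""
    return t_reference / t_candidate


if __name__ == "__main__":
    # Hypothetical test data: a 4 x 4 matrix times a 4 x 2000 matrix.
    a = [[float(i + j) for j in range(4)] for i in range(4)]
    b = [[float((i * j) % 7) for j in range(2000)] for i in range(4)]
    t_ref, c_ref = time_call(matmul_loops, a, b)
    t_new, c_new = time_call(matmul_columns, a, b)
    assert c_ref == c_new  # timings mean nothing unless the results agree
    print(f"speedup: {speedup(t_ref, t_new):.2f}x")
```

A single timed call like this is noisy; it is only meant to show what "speedup" is being measured against.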

12-10-2017 02:41 AM


@mcduff wrote:

Know nothing about the note, but does your processor have hyperthreading? Then 8*2 = 16 which is about the speedup you saw.

mcduff

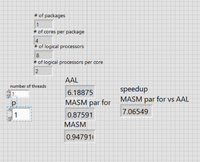

This is the CPU information (4 cores, 2 threads each).

When P = 1 and the number of threads = 1, the resulting speedup is about 8, which is what I expected.

When I change the number of threads while keeping P = 1, there is no change.

12-10-2017 02:46 AM


Your math makes no sense.

Again, show us the diagram, not the front panel (best, attach the actual VI)

12-10-2017 08:01 AM - edited 12-10-2017 08:14 AM


Hi altenbach,

Sorry for the late reply.

I understand my mistake: using the parallel loop is meaningless with MASM AxB because these functions operate on matrices directly, as you said. I used the For Loop to increase CPU utilization with a small matrix size.

I compared the MASM and ALL VIs. The default number of threads is 4, but when I set it to 3 or 2 there is no significant change in speedup. I multiply a 4x4 matrix by a 4xv matrix, where v varies from 100 to about 10,000,000.

The question now is: how can I optimize this operation, and how can I reach a 4x speedup? I tried adding dummy rows and columns. The speedup increased, but the calculated delay time for my actual matrices is undetermined in this case, and I can't multiply large sizes.

12-10-2017 11:01 AM - edited 12-10-2017 01:33 PM


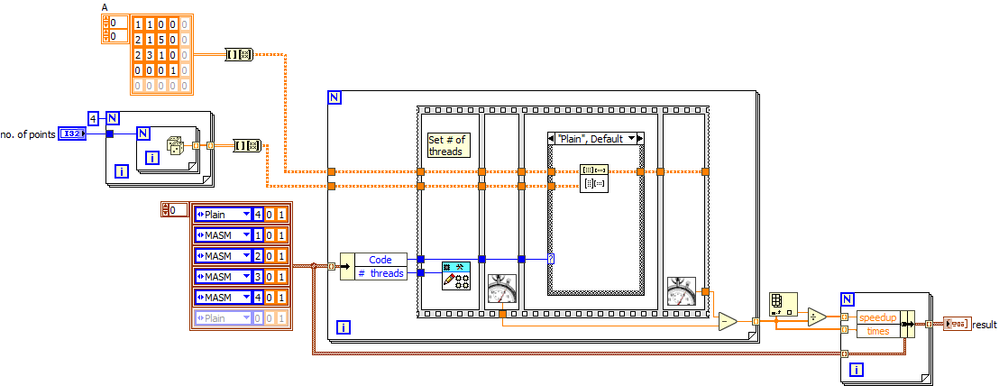

I'm going to install the MASM toolkit and do some tests, but as we discussed before you might not get as much speed increase because you only have so few rows. A multiplication of two matrices is not necessarily trivial to parallelize (start here) while other matrix operations possibly benefit more.
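
One possible way to see why so few rows may limit the gain (this reasoning is an assumption added for illustration, not something measured in this thread) is to estimate how much arithmetic is done per byte of data moved. The sketch below assumes 8-byte double-precision elements and that each matrix is read or written exactly once; the function names are hypothetical.

```python
def matmul_flops(m, k, n):
    """Approximate floating-point operations for an (m x k) by (k x n) product."""
    return 2 * m * k * n  # one multiply and one add per inner-product term


def matmul_bytes(m, k, n, bytes_per_element=8):
    """Minimum bytes touched: read A and B once, write C once.

    Assumes 8-byte doubles unless bytes_per_element says otherwise.
    """
    return bytes_per_element * (m * k + k * n + m * n)


def arithmetic_intensity(m, k, n):
    """Floating-point operations per byte moved, under the assumptions above."""
    return matmul_flops(m, k, n) / matmul_bytes(m, k, n)


if __name__ == "__main__":
    # The 4 x 4 by 4 x v shapes discussed in the thread.
    for v in (100, 10_000, 10_000_000):
        print(v, round(arithmetic_intensity(4, 4, v), 3))
    # A square case for contrast.
    print(1000, round(arithmetic_intensity(1000, 1000, 1000), 1))
```

For the 4x4 by 4xv case the ratio approaches 0.5 operations per byte as v grows, while a 1000x1000 square product is above 80. With so little arithmetic per byte, extra threads may end up waiting on memory rather than computing, which would be consistent with the thread count making little difference here.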

In the meantime, try to rewrite your benchmark code in a little more reasonable way and avoid all this duplicate code and that long worm of a sequence structure. Use a FOR loop with a case structure to do the various tests. Only the code that is different belongs inside the case structure, the rest can be shared. You don't need the repetition loops. Auto-index the elapsed times at the right boundary to create an array of times. This should all easily fit on a postcard! You also need to be very careful because your matrix function outputs are not wired to anything. The compiler could decide to eliminate the entire matrix multiplication as dead code (especially if you disable debugging). Why calculate a result if it is never used?? You should also convert the A array to a matrix datatype to avoid the coercion dots (probably makes no difference in performance, but still...).
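
In text form, the structure described above might look like the following Python sketch. It is only an analogy to the LabVIEW diagram: the case functions (`case_plain`, `case_chunked`) are hypothetical examples, the list of cases plays the role of the case structure inside the FOR loop, appending to `times` plays the role of auto-indexing at the loop boundary, and the checksum stands in for wiring the output to something so the work cannot be discarded.

```python
import time


def multiply(a, b):
    """Plain matrix product on lists of rows."""
    cols = list(zip(*b))
    return [[sum(x * y for x, y in zip(row, col)) for col in cols] for row in a]


def case_plain(a, b):
    """Test case 0: multiply in one go."""
    return multiply(a, b)


def case_chunked(a, b, chunk=256):
    """Test case 1 (hypothetical variant): multiply b in column chunks."""
    out = [[] for _ in a]
    for start in range(0, len(b[0]), chunk):
        block = [row[start:start + chunk] for row in b]
        for out_row, part in zip(out, multiply(a, block)):
            out_row.extend(part)
    return out


CASES = [case_plain, case_chunked]  # only the code that differs lives here


def run_benchmark(a, b, cases=CASES):
    """Time every case with shared code; return (times, checksums)."""
    times, checksums = [], []
    for case in cases:
        start = time.perf_counter()      # shared timing code, written once
        result = case(a, b)
        times.append(time.perf_counter() - start)
        # Consume the result so the computation is actually used.
        checksums.append(sum(map(sum, result)))
    return times, checksums


if __name__ == "__main__":
    a = [[float(i + j) for j in range(4)] for i in range(4)]
    b = [[float((i + j) % 5) for j in range(600)] for i in range(4)]
    times, checksums = run_benchmark(a, b)
    print(times, checksums)
```

Adding another test then means adding one function to `CASES`, and matching checksums give a cheap sanity check that every case computed the same product. CPython does not remove unused results the way an optimizing compiler might, so the checksum here mainly mirrors the wiring advice.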

12-10-2017 02:36 PM - edited 12-10-2017 02:40 PM


OK, I am trying to rewrite my code, taking your advice and tips into account.

@altenbach wrote:

You don't need the repetition loops.

The repetition loops are used in the NI example (AxB performance benchmarks.vi): the smaller the matrix size, the higher the iteration count?!

@altenbach wrote:

your matrix function outputs are not wired to anything. Why calculate a result if it is never used??

This VI is just a sample for testing; in my complete work I do need the result.

Waiting for your reply...

12-10-2017 03:01 PM - edited 12-10-2017 03:05 PM


@ssara wrote:

OK, I am trying to rewrite my code, taking your advice and tips into account.

Here's something to get you started. (Sorry, I don't have a serious CPU here, will try on Monday). Compare the size with your code 😄 (fully scalable, e.g. adding higher numbers of threads only requires changing a diagram constant!)

12-10-2017 05:15 PM - edited 12-10-2017 05:15 PM


Your code example may actually have a flaw. The unwired result matrix could be optimized away completely in extreme cases! I'm not saying that this happens, but there is a real chance that it can. The LabVIEW optimizer has become pretty smart in recent years, which makes creating performance test VIs an even more complicated task.