Why is RT matrix multiplication so much slower than Windows?

Solved! 11-23-2022 02:09 PM

Hello,

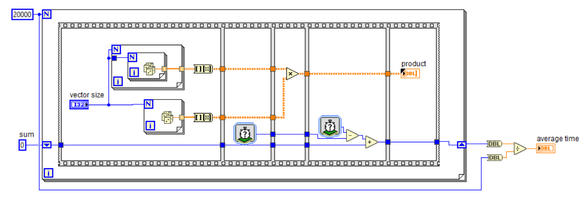

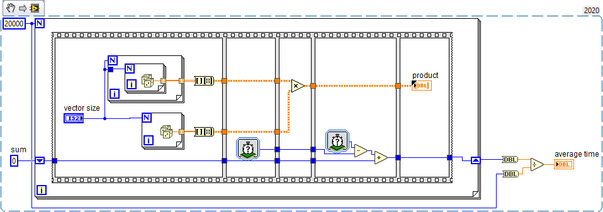

I ran the attached VI on both a cRIO-9035 and my Windows machine (a Lenovo ThinkPad) running LabVIEW 2020. All it does is find the average time cost of multiplying a random vector by a random square matrix over 20,000 iterations. With a vector size of 32, the average time was 21.88 microseconds on the cRIO and 2.04 microseconds on my Windows machine. Does anyone know why there's such a huge disparity between the two times, or whether there's any way to speed up matrix multiplication on the RT target? The cRIO-9035 has a clock speed of 1.33 GHz while my Windows machine has a clock speed of 2.60 GHz, so certainly it isn't just the clock speeds.
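For readers without LabVIEW, the measurement described above can be sketched in plain Python. This is only an illustration of the benchmark's structure, not the attached VI: the function names are hypothetical, and regenerating the random inputs on every iteration (outside the timed region) is an assumption about how the VI works. Absolute timings from interpreted Python are not comparable to the LabVIEW numbers.

```python
import random
import time


def matvec(matrix, vector):
    """Multiply a square matrix (a list of rows) by a vector."""
    return [sum(a * x for a, x in zip(row, vector)) for row in matrix]


def average_matvec_time(n=32, iterations=20_000, seed=0):
    """Return the mean time in seconds of one n x n matrix-vector product."""
    rng = random.Random(seed)
    total = 0.0
    for _ in range(iterations):
        # Assumption: fresh random inputs each iteration, built outside
        # the timed region so only the multiplication is measured.
        matrix = [[rng.random() for _ in range(n)] for _ in range(n)]
        vector = [rng.random() for _ in range(n)]
        start = time.perf_counter()
        matvec(matrix, vector)
        total += time.perf_counter() - start
    return total / iterations
```

Calling `average_matvec_time()` and multiplying the result by 1e6 gives the average in microseconds, the same unit as the figures quoted above.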

Thanks,

Daniel

11-23-2022 02:23 PM

Hi Daniel,

@dncoble wrote:

The cRIO-9035 has a clock speed of 1.33 GHz while my Windows machine has a clock speed of 2.60 GHz, so certainly it isn't just the clock speeds.

Yes, it's not just clock speed!

Which CPU does your Windows computer use? Which kind/type/spec of RAM?

The cRIO uses an old Atom E3825 with just two cores…

11-23-2022 05:00 PM

Thanks for the response!

To answer your question, the Windows machine has an Intel i7-6600U (2 cores), with 16 GB of DDR3 RAM. I expected that the laptop would be faster, but I doubt that hardware could account for a 10x difference in speed.
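To put the figures quoted in this thread on a per-cycle footing, the short calculation below separates the clock-speed difference from everything else. It is a rough sketch under stated assumptions: both CPUs are taken at the nominal clocks given above (turbo is ignored), and one 32 x 32 matrix-vector product is counted as 32 * 32 multiply-adds. The measured times also include call and loop overhead, so the per-multiply-add cycle counts are upper bounds, not instruction latencies.

```python
# Figures quoted in this thread.
CRIO_TIME_US = 21.88     # cRIO-9035, average time per multiply
LAPTOP_TIME_US = 2.04    # Windows laptop, average time per multiply
CRIO_CLOCK_GHZ = 1.33    # nominal clock (assumption: no turbo)
LAPTOP_CLOCK_GHZ = 2.60  # nominal clock (assumption: no turbo)
N = 32                   # vector length; the matrix is N x N


def cycles_per_multiply_add(time_us, clock_ghz, n):
    """Nominal clock cycles spent per multiply-add in one n x n product."""
    cycles = time_us * 1e-6 * clock_ghz * 1e9
    return cycles / (n * n)


time_ratio = CRIO_TIME_US / LAPTOP_TIME_US
clock_ratio = LAPTOP_CLOCK_GHZ / CRIO_CLOCK_GHZ
# Slowdown that remains after accounting for clock speed alone.
per_cycle_ratio = time_ratio / clock_ratio

print(f"time ratio:      {time_ratio:.1f}x")
print(f"clock ratio:     {clock_ratio:.2f}x")
print(f"per-cycle ratio: {per_cycle_ratio:.1f}x")
print(f"cRIO cycles per multiply-add:   "
      f"{cycles_per_multiply_add(CRIO_TIME_US, CRIO_CLOCK_GHZ, N):.1f}")
print(f"laptop cycles per multiply-add: "
      f"{cycles_per_multiply_add(LAPTOP_TIME_US, LAPTOP_CLOCK_GHZ, N):.1f}")
```

With these inputs the time ratio is about 10.7x and the clock ratio about 1.95x, which leaves roughly a 5.5x gap in work done per clock cycle.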

I'm interested in improving the performance on the real-time system, and it doesn't seem like the Intel Atom E3825 on the cRIO-9035 is performing as fast as I expect it could. So I guess my question is whether there is some library or setting in LabVIEW for Windows that accelerates matrix multiplication and doesn't exist in LabVIEW Real-Time. If so, is there a way I can change the real-time target to improve its performance, maybe by installing some module or changing some setting, or is this type of slowdown inherent to the real-time system?

Thanks,

Daniel

11-23-2022 10:59 PM - edited 11-23-2022 11:04 PM

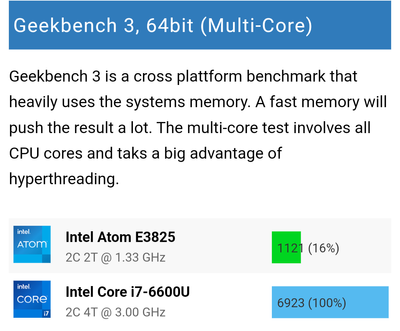

Atom processors are simpler and optimized for low power, and in this case the Atom has only 25% of the cache.

Comparing the two shows the huge difference:

While I don't have benchmarks for exactly these CPUs, here is my list of benchmarks showing the dramatic differences.

11-29-2022 03:53 PM

11-29-2022 04:31 PM - edited 11-29-2022 04:45 PM

The CPU in the cRIO is a low-power Atom chip and quite old (launched in 2013). Clock speed is mostly useful for comparing CPUs of the same family, or successors, to see how instructions per cycle changed.

Atom CPUs lack a lot of the Core family CPU features, most notably AVX. If you want fast matrix multiplications you need vector instructions.

So until you run your benchmark on a Windows PC with a similar CPU and show that it is faster than the cRIO, I will believe the CPU is to blame.

11-29-2022 05:40 PM

Did you forget your last query with the same title that you made last week? https://forums.ni.com/t5/LabVIEW/Why-is-RT-matrix-multiplication-so-much-slower-than-Windows/m-p/426...

The facts are still the same!

11-30-2022 11:03 AM - edited 11-30-2022 11:08 AM

Thankfully somebody combined the duplicate threads.

There is nothing magic going on; one of the processors is simply much more powerful. Intel learned the hard way back in the day that clock speed is just a meaningless marketing term. When they came out with the 3 GHz P4, people were wowed by the GHz, but performance was not that great. Later they came out with the Core architecture, which was significantly more powerful at lower clocks. (Analogy with cars: you cannot say that a 50cc motorcycle at 12000 rpm is 3x more powerful than a 7-liter seventies muscle car at 4000 rpm.)

A modern processor takes about 7 CPU cycles for a multiply. But there is much more to it. My benchmarks have a column "S/GHz" which shows the single core performance normalized to the clock speed.

- An old Intel Atom N450 can do about 3

- An Intel P4 can do about 5.5

- Older Intel Core can do about 12-20

- Modern Intel and AMD processors can do over 30!
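As a rough illustration of how an "S/GHz" figure combines with clock speed, the sketch below multiplies the two to get an estimated single-core score and compares two machines. The function names are hypothetical, and mapping the CPUs in this thread onto rows of the list is an assumption: the Atom row is for an N450, not the E3825, and treating the laptop's i7-6600U as an "older Intel Core" at 12-20 is a guess. Treat the result as an order-of-magnitude check only.

```python
def estimated_single_core_score(score_per_ghz, clock_ghz):
    """Clock-normalized score scaled back up by the actual clock speed."""
    return score_per_ghz * clock_ghz


def estimated_speedup(fast_per_ghz, fast_ghz, slow_per_ghz, slow_ghz):
    """Ratio of the estimated single-core scores of two CPUs."""
    fast = estimated_single_core_score(fast_per_ghz, fast_ghz)
    slow = estimated_single_core_score(slow_per_ghz, slow_ghz)
    return fast / slow


# Assumed inputs: Atom-class CPU at about 3 S/GHz and 1.33 GHz versus an
# older Core-class CPU at 12 to 20 S/GHz and 2.60 GHz.
low = estimated_speedup(12, 2.60, 3, 1.33)
high = estimated_speedup(20, 2.60, 3, 1.33)
print(f"estimated speedup: {low:.1f}x to {high:.1f}x")
```

Under those assumptions the estimate comes out at roughly 8x to 13x, the same order of magnitude as the difference measured earlier in the thread.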