UDP Error 61 on cRIO - but controller memory usage is <~70%

Solved! 08-26-2014 11:10 AM

Hi All,

I'm having a problem with a program running on a cRIO-9074.

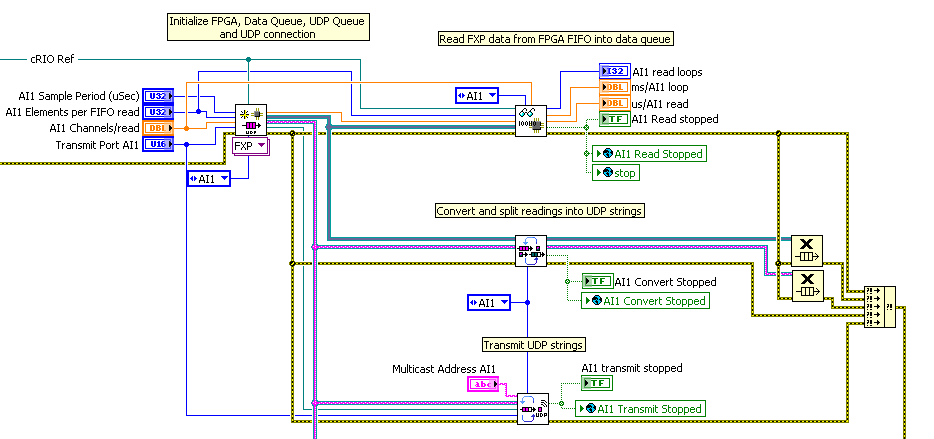

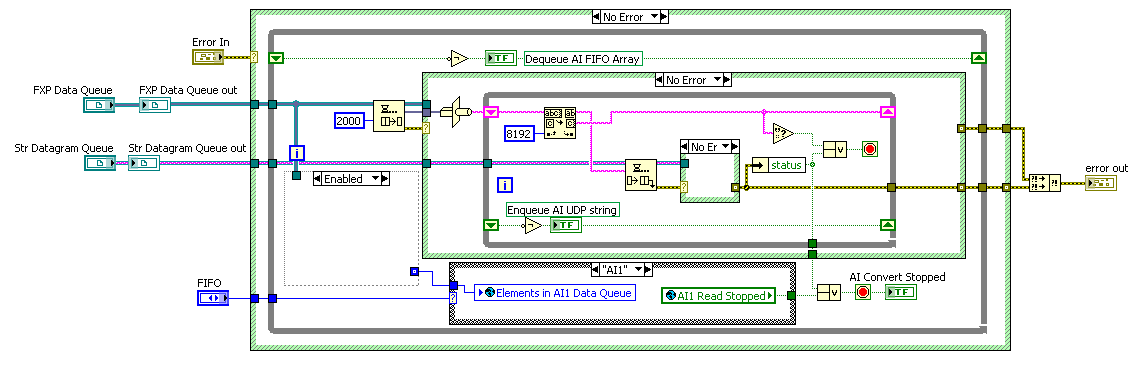

My controller code has 3 parallel processes (for 3 banks in my controller) that each contain 3 while loops. One reads an array of FXP values from an FPGA DMA FIFO and pushes that array into a queue. The next loop then reads the array from the queue, casts it into a string, splits that string into UDP sized chunks and places those substrings into a second queue. The final loop reads a substring from the second queue and transmits it via UDP.

Basically a producer - consumer/producer - consumer architecture.
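For readers who think in text rather than block diagrams, the three-loop chain described above can be sketched roughly as follows. This is only an illustrative Python analogue, not the LabVIEW code from the project: the names `acquire`, `convert`, `transmit`, `SENTINEL` and `CHUNK_SIZE` are hypothetical, the FPGA DMA FIFO read is replaced by a plain list of sample blocks, and the UDP write is replaced by appending to a list.

```python
import queue
import threading

SENTINEL = None  # hypothetical end-of-stream marker
CHUNK_SIZE = 4   # hypothetical, deliberately tiny "UDP-sized" chunk


def acquire(samples, raw_q):
    """Loop 1: stands in for the FPGA DMA FIFO read; pushes each block."""
    for block in samples:
        raw_q.put(block)
    raw_q.put(SENTINEL)


def convert(raw_q, chunk_q):
    """Loop 2: consumer of raw_q and producer for chunk_q."""
    while True:
        block = raw_q.get()
        if block is SENTINEL:
            chunk_q.put(SENTINEL)
            return
        payload = bytes(block)  # "cast to string"
        for i in range(0, len(payload), CHUNK_SIZE):
            chunk_q.put(payload[i:i + CHUNK_SIZE])


def transmit(chunk_q, sent):
    """Loop 3: stands in for the UDP write; here it only records chunks."""
    while True:
        chunk = chunk_q.get()
        if chunk is SENTINEL:
            return
        sent.append(chunk)


def run_pipeline(samples):
    raw_q, chunk_q, sent = queue.Queue(), queue.Queue(), []
    threads = [
        threading.Thread(target=acquire, args=(samples, raw_q)),
        threading.Thread(target=convert, args=(raw_q, chunk_q)),
        threading.Thread(target=transmit, args=(chunk_q, sent)),
    ]
    for t in threads:
        t.start()
    for t in threads:
        t.join()
    return sent
```

Calling `run_pipeline([[1, 2, 3, 4, 5, 6], [7, 8]])` pushes two blocks through both queues and returns the chunks in the order they would have been transmitted.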

Now this all works fine in my spaghetti-code development version with just one top-level vi. To make things more readable though I compacted things into subvis, using global variables when necessary to link them.

My code runs, but I'm getting frequent errors when transmitting the UDP strings:

"UDP Error -61: The system could not allocate the necessary memory. "

I've checked the memory usage using NI Distributed System Manager and my cRIO memory has a fairly decent amount of headroom. Reducing the sampling rate - and corresponding memory usage - doesn't seem to make much difference to the time the system can go before errors. Even after reducing the sampling rate right down and disabling all but one loop (i.e. one bank) I'm still getting the errors.

How could I debug this?

08-26-2014 11:12 AM

One other thing to note: my initial approach when introducing subvis into my code was to use references and property nodes (this is my first RT/FPGA project so I'm still not sure what's best). I didn't get any errors, but the code ran much slower, so I could only run at >1/2x the previous sampling rate, hence the change to globals.

08-27-2014 06:12 AM

Hi marc1uk,

Have you looked at the status of your FIFO? Is the data ever overflowing?

What address are you sending the UDP packets to? I would first send them to localhost.

I would first build a simple UDP project on LabVIEW and make sure that works before deploying it to your cRIO.

Lucas

Applications Engineer

National Instruments

08-27-2014 03:44 PM - edited 08-27-2014 03:47 PM

Hi Lucas, thanks for helping. 🙂

I am monitoring the status of my queues and they're not overflowing - I haven't actually set limits on the number of elements but it doesn't go very high - generally 0 in both queues, with a brief flurry in the UDP transmit string queue when data comes in from the FPGA FIFO. I can't even see the spike in the conversion queue, so that must happen pretty quickly.

I'm sending the UDP packets to one of the standard UDP example addresses - my cRIO is directly connected to the dev machine (nothing else on the network, no router/switch, just an ad-hoc connection) and the UDP code has evolved from an example vi (exactly as you suggested). I'm reading the transmitted data on the dev machine fine, when it's transmitting. In fact, as I say, the whole program works fine with everything in the top level, no sub-vi's.

Do sub-vi's perhaps get a limited amount of memory to work with? Maybe the cRIO isn't full but the sub-vi's allocated memory space is?

08-28-2014 04:25 AM

Hi marc1uk

I looked up the error code 61 and it can also be caused by a "Serial port parity error". It appears that this is caused by not closing down the connection or having a timeout selected on the UDP read function perhaps.

Is your problem solved if you close all the VIs, quit LabVIEW and then try it again?

If this doesn't solve your problem, it would help me troubleshoot if you uploaded your code, if you wouldn't mind doing that.

Have a great day!

Lucas

Applications Engineer

National Instruments

08-28-2014 07:48 AM - last edited on 05-20-2024 05:14 PM by Content Cleaner

Hi Lucas,

I expect the 'Serial port parity error' is just another interpretation of that particular error code; the networking error page here lists the error code interpretations for TCP/UDP/Datasocket/Bluetooth/IrDA functions, so I expect it's what's relevant. The serial port on the cRIO isn't being used, and UDP connections are, I believe, only being closed on exit. Later today I'll double check that I don't have something odd happening that re-runs the transmit after the connection has closed, which could be hiding the real error. I'll also upload my code, which I'm happy to do. Perhaps you could even give some pointers on other flaws - my RT programming is probably terrible.

Unfortunately closing LabVIEW/rebooting/power cycling either cRIO or dev machine (or both) doesn't fix the issue.

Best regards, and thanks again

Marcus

08-28-2014 09:29 AM

Hi Marcus,

It appears you may be filling up the buffers allocated for TCP/UDP communication. Your error can occur when the preallocated network communication buffers on the RT target are full. These buffers will fill up quickly if TCP/UDP packets are sent to the localhost but not read. When the buffers are full, the network communication will stop until there is space in the network communication buffers. If the buffers continue to be full, the target will hang.

To solve this problem, either read the TCP/UDP packets being transmitted or close the communication sockets to free up the buffers.

I hope this helps.

Lucas

Applications Engineer

National Instruments

08-28-2014 12:33 PM

Hi Lucas,

It's an interesting theory, but there are a few things that don't seem to tie up with this for me. The first is that I'm using UDP rather than TCP explicitly because I may have one, many, or no receivers. I believe UDP, unlike TCP, does not establish a link between the sender and receiver, so does not check whether packets have been read or not. This seems to be backed up by the fact that I can run my old code (no subvis) to transmit data indefinitely, without running the vi that receives the data. It's only when I use sub-vi's that I have issues.

Secondly even when running my receiver vi, I still get trips. The receiver can clearly read at a suitable rate, it hasn't changed. In fact the time-to-trip doesn't appear to bear any correlation to whether the receiver is listening or not.

Finally, reducing the sampling rate should also reduce the rate at which the buffers fill, and so lengthen the time to trip. Again, there doesn't seem to be a correlation there.
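The first point above, that a UDP sender does not wait for (or even need) a receiver, can be illustrated on a desktop OS with a few lines of Python. This is only a sketch under stated assumptions: the helper name `send_without_listener` is hypothetical, it uses the standard `socket` module on the loopback interface, and it says nothing about how the network stack on the cRIO's real-time OS manages its own buffers.

```python
import socket


def send_without_listener(payload: bytes, count: int = 100) -> int:
    """Send `count` datagrams to a local port with no listener.

    Returns the total number of bytes the OS accepted for sending.
    """
    # Find a local UDP port that nothing is bound to: bind, note it, close.
    probe = socket.socket(socket.AF_INET, socket.SOCK_DGRAM)
    probe.bind(("127.0.0.1", 0))
    port = probe.getsockname()[1]
    probe.close()

    sent = 0
    # Unconnected UDP socket: no handshake and no delivery confirmation.
    with socket.socket(socket.AF_INET, socket.SOCK_DGRAM) as tx:
        for _ in range(count):
            sent += tx.sendto(payload, ("127.0.0.1", port))
    return sent
```

On a typical desktop stack, every `sendto` call returns as soon as the datagram is handed to the OS, whether or not anything ever reads it.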

I still don't have access to the program code so will upload it later.

Thanks for your suggestions, I really hate to be shooting down ideas like this, I don't mean any offence!

08-28-2014 07:17 PM

I've attached my project code; the main vi is "NI 9223 User-Controlled IO Sampling (Host) and transmit TC + AI subvid.vi", while the old non-subvi'd version is "NI 9223 User-Controlled IO Sampling (Host) and transmit TC + AI.vi". The old version is a rather huge and messy program, so apologies if it's a pain to interpret! There are 3 copies of each set, one for each bank, so once you understand 1/3rd of it the rest is just duplicates!

LabVIEW crashed a few times while trying to create a library from these files, so I ended up just putting the source files for all subvis into a .zip file. I looked through the vi hierarchy so hopefully all the necessary files are included, but let me know if there's anything missing.

Hopefully this can shed some light on the matter.

08-28-2014 07:37 PM

For the visually minded (such as myself) here are some screenshots depicting the basic problematic vi.

The top level vi has an initialization, then three vi's that run in parallel, each containing a loop that only exits on error or when stopped by the user.

From top to bottom the sub-vis are as follows:

The top vi reads from the FPGA DMA FIFO and pushes the FXP array into a queue:

The middle vi reads from this FXP array queue, converts each FXP array into a string and splits that string into UDP-packet sized substrings. Each substring then goes into another queue:

The bottom vi then reads a (sub)string from the above queue and transmits it over UDP.

That's the basic (in fact, complete) operation. I have a 1000ms timeout on the UDP transmit, but don't see any reason the UDP transmit should take >1000ms to complete. If that's happened, the vi has effectively already failed - 1000ms should be a sufficient timeout for any normal operation.
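The conversion step in the middle vi (FXP array to string, then split into packet-sized substrings) can be sketched in Python as below. The details are assumptions for illustration only: the fixed-point values are stored as 32-bit signed integers with a hypothetical 16 fractional bits, packed big-endian, and `FRACTION_BITS`, `MAX_PAYLOAD` and the function names do not come from the attached project.

```python
import struct

FRACTION_BITS = 16  # hypothetical fixed-point format: 16 fractional bits
MAX_PAYLOAD = 512   # hypothetical per-datagram payload size in bytes


def fxp_to_bytes(values):
    """Scale each value to a 32-bit signed integer and pack big-endian."""
    raw = [round(v * (1 << FRACTION_BITS)) for v in values]
    return struct.pack(f">{len(raw)}i", *raw)


def split_chunks(payload, size=MAX_PAYLOAD):
    """Split a byte string into consecutive chunks of at most `size` bytes."""
    return [payload[i:i + size] for i in range(0, len(payload), size)]


def bytes_to_fxp(payload):
    """Receiver side: unpack the integers and undo the scaling."""
    count = len(payload) // 4
    raw = struct.unpack(f">{count}i", payload)
    return [r / (1 << FRACTION_BITS) for r in raw]
```

A receiver that concatenates the chunks in order and calls `bytes_to_fxp` recovers the original values, provided no datagram was lost or reordered, which UDP itself does not guarantee.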