Timing architecture when spawning multiple daemon instances

09-30-2014 05:36 PM

This is a partially theoretical question that may also have some practical impact.

I'm expecting an app to spawn a bunch of data-generating processes. By nature they are independent and asynchronous, but many of them will be producing data packets at the same rate. I'd like to spread out the CPU hit, and a simple way to do that is to come up with a scheme that randomizes the phase of a bunch of loops that all have the same period.

I've done this in the past when I just used a few Timed Loops, but this time I neither need nor particularly want the elevated priority they come with. On the other hand, a simple 'wait until next msec multiple' primitive doesn't provide a way to manipulate the phase of multiple processes running at the same period: a bunch of daemons set to loop at the same rate would all wake up and do their work simultaneously.

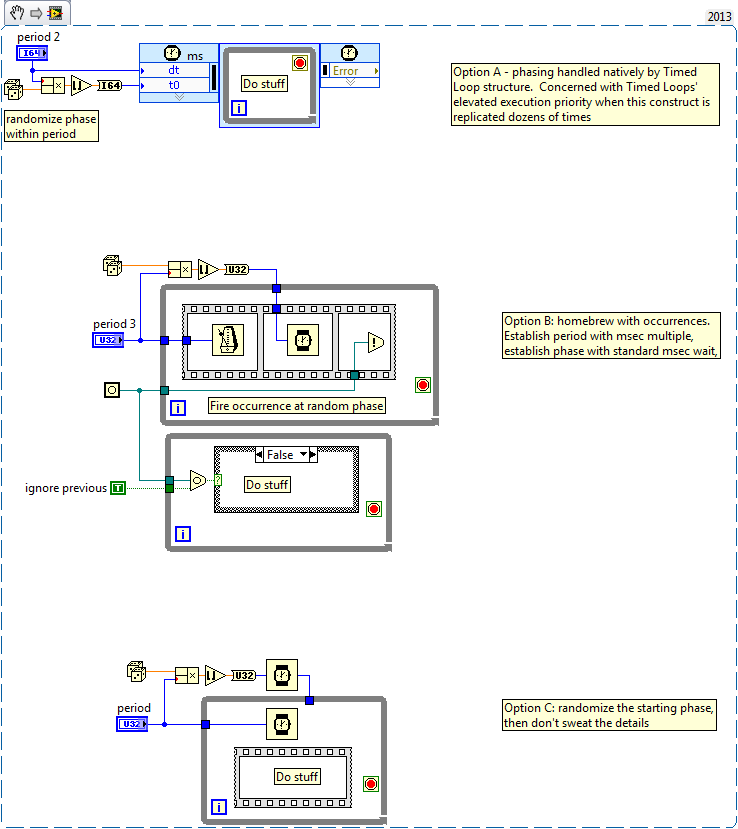
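To make the wake-up clustering concrete, here is a minimal Python model. It assumes the 'wait until next msec multiple' primitive sleeps until the next integer multiple of the period on a clock shared by all instances, and waits a full period when called exactly on a boundary. `next_multiple_wake` is a hypothetical name used only for illustration.

```python
import math


def next_multiple_wake(now_ms: float, period_ms: float) -> float:
    """Model of a 'wait until next ms multiple' wait: return the absolute time
    (on a shared millisecond clock) at which the wait would end."""
    # The wake time depends only on the shared clock and the period, not on
    # when the caller started, so every instance lands on the same boundary.
    return (math.floor(now_ms / period_ms) + 1) * period_ms


if __name__ == "__main__":
    # Three hypothetical daemons that begin waiting at different moments.
    start_times_ms = [3.0, 41.5, 87.2]
    wakes = [next_multiple_wake(t, 100.0) for t in start_times_ms]
    print(wakes)  # [100.0, 100.0, 100.0]
```

No matter how the start times are scattered within one period, every instance wakes on the same boundary, which is why this primitive alone can't stagger phases.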

The snippet below shows 3 timing schemes I've thought of for randomizing the phase of a whole bunch of spawned processes that are designed to loop at the same rate.

Option A is based on Timed Loops. This might be fine, but should I worry about their elevated priority and the possibility of starving my data consumers?
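Python has no Timed Loop, but the period/offset idea behind Option A can be sketched by sleeping to absolute deadlines `t0 + offset + k*period`. This is an assumption-laden analogue: it mimics only the phase-locked scheduling, not the Timed Loop's priority or its configurable handling of late iterations. `run_phase_locked` and `random_phase` are hypothetical helpers.

```python
import random
import time


def random_phase(period_s: float, rng: random.Random) -> float:
    """Pick a per-instance phase offset uniformly in [0, period]."""
    return rng.uniform(0.0, period_s)


def run_phase_locked(work, period_s, offset_s, iterations, t0=None):
    """Call work() at t0 + offset_s + k*period_s for successive k.

    Sleeping to absolute deadlines (rather than sleeping period_s after each
    iteration) keeps the phase locked: a slow iteration delays only itself.
    """
    if t0 is None:
        t0 = time.monotonic()
    k = 0
    for _ in range(iterations):
        deadline = t0 + offset_s + k * period_s
        delay = deadline - time.monotonic()
        if delay > 0:
            time.sleep(delay)
        work()
        k += 1
        # If work() overran one or more deadlines, skip them instead of
        # running back-to-back iterations to catch up.
        now = time.monotonic()
        while t0 + offset_s + k * period_s <= now:
            k += 1


if __name__ == "__main__":
    rng = random.Random()
    period = 0.1
    offset = random_phase(period, rng)  # each spawned instance gets its own
    run_phase_locked(lambda: print(f"{time.monotonic():.3f}"), period, offset, 5)
```

Skipping missed deadlines (rather than bursting) is one design choice among several; it keeps a late instance from piling its work onto other instances' time slices.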

Option B is a simple scheme with occurrences and regular While Loops that won't have their priorities elevated. It should function much like the Timed Loop, where an initial phase can be set and the process stays locked at that phase. It adds the complication of having two loops to terminate, but that'd be solved easily enough by bumping up from occurrences to notifiers. (Plus, I do believe it demonstrates a legitimate use of a multi-frame sequence structure!)
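A rough Python analogue of Option B splits the work across two ordinary threads: a timing loop that fires a signal at a locked phase, and a worker loop that waits for that signal. Here `threading.Event` stands in for the occurrence, and a second shared `Event` plays the role of the notifier-style stop signal that ends both loops. All function names are hypothetical, and this is a sketch of the idea, not a translation of the original snippet.

```python
import threading
import time


def timing_loop(tick, stop, period_s, offset_s, t0):
    """Fire `tick` at t0 + offset_s + k*period_s (the phase-locked part)."""
    k = 0
    while not stop.is_set():
        delay = t0 + offset_s + k * period_s - time.monotonic()
        # stop.wait() doubles as the sleep and returns True early on shutdown.
        if delay > 0 and stop.wait(delay):
            break
        tick.set()  # analogous to Set Occurrence
        k += 1
        now = time.monotonic()
        while t0 + offset_s + k * period_s <= now:
            k += 1  # skip deadlines that have already passed


def worker_loop(tick, stop, work, poll_s=0.1):
    """Plain loop that does its work each time `tick` fires."""
    while not stop.is_set():
        # An Event does not queue: ticks that fire while the worker is
        # still busy are merged into one.
        if tick.wait(timeout=poll_s):
            tick.clear()
            if not stop.is_set():
                work()


def run_demo(period_s=0.05, offset_s=0.01, run_s=0.3):
    stop, tick = threading.Event(), threading.Event()
    calls = []
    t0 = time.monotonic()
    threads = [
        threading.Thread(target=timing_loop, args=(tick, stop, period_s, offset_s, t0)),
        threading.Thread(target=worker_loop,
                         args=(tick, stop, lambda: calls.append(time.monotonic()))),
    ]
    for t in threads:
        t.start()
    time.sleep(run_s)
    stop.set()  # one shared flag ends both loops
    for t in threads:
        t.join()
    return calls


if __name__ == "__main__":
    print(len(run_demo()), "iterations")
```

The worker polls with a timeout so it notices the stop flag even when no tick arrives, which addresses the "two loops to terminate" complication.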

Option C is the "hey, don't overthink this" option. A random initial phase is kinda set. Thereafter, phase isn't locked (nor is period, really), but there's also no reason to expect the different instances to get in lockstep with each other.
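Option C can be sketched in a few lines: one random initial delay, then an ordinary relative wait, loosely analogous to a plain Wait (ms) in the loop. `run_random_start` is a hypothetical name, and the random number generator is passed in as an assumption so it can be seeded for testing.

```python
import random
import time


def run_random_start(work, period_s, iterations, rng):
    """Random initial phase, then a plain relative wait each iteration."""
    time.sleep(rng.uniform(0.0, period_s))  # random starting phase
    for _ in range(iterations):
        work()
        # Relative wait: the real period is period_s plus the time spent in
        # work() plus any sleep overshoot, so the phase drifts over time.
        time.sleep(period_s)


if __name__ == "__main__":
    run_random_start(lambda: print(f"{time.monotonic():.3f}"), 0.1, 5, random.Random())
```

Because each instance drifts by its own work time and scheduling noise, there's no mechanism pulling the instances back into lockstep, which is the intuition behind the "don't overthink this" option.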

What are your thoughts on these (or maybe other) approaches? Again the goal is to have many independent asynchronous data publishers that loop at the same rate, but spread their CPU usage around rather than all waking and working during the same time slice of that interval. (The snippet below is just enough to illustrate each option, not looking for a code review.)

-Kevin P

09-30-2014 06:27 PM

Before I strain my brain thinking about this, what is the range of values for the common period?

Lynn

10-01-2014 07:26 AM

I'm planning on a loop period in the neighborhood of 100 msec, give or take. There's no hard real-time requirement, though my thought process is admittedly influenced by past real-time work. Having a bunch of processes that wake up and work at the same time can cause timing jitter, so for stuff that's asynchronous anyway (instrument communication, etc.), I just want to level out the CPU workload a bit to make the loop timing more consistent.

These processes are mainly acting as data generators. Each process wakes up about every 100 msec to publish its new data (if any) as the payload of a User Event. The 100 msec period is mainly driven by the needs of the data subscribers -- GUI displays, fault monitoring code, etc.

I figure I'm not the first person to bump into this kind of timing contention, so I'm looking for a sanity check. Is it really worthwhile to stagger the phasing of these processes that loop with the same period? Is there a standard approach that I don't happen to be aware of? If so, what does it look like?

-Kevin P

10-01-2014 12:33 PM

Tap, tap, tap...

Hello? Anybody?

Is this thing on?

<bump>

-Kevin P

10-01-2014 02:52 PM - edited 10-01-2014 02:52 PM

How many of those "daemons" do you spawn? You've asked for a sanity check, so here it is: do they really have a big enough impact on the other loops in the application that it's worth complicating this? Wouldn't simply leaving the problem to the LV/OS schedulers be the easiest and best option, option D?

There is also option E: change the priority of the VIs containing your daemons. But I don't really like changing VI priorities. VI priority is in effect a hidden parameter (i.e., you can't see it anywhere in the code and must explicitly check it) that can seriously affect the code, and it's hard to track down that a little "above normal priority" VI is the one making trouble.

10-01-2014 08:36 PM

Yeah, I'd just let the OS handle it. If it's on a Windows system, you have so little control over when your code actually runs that it just doesn't seem worth worrying about. When you throw LabVIEW into the mix, where Loop A may spend half its time on thread 3 but then swap over to thread 1 for the other half, except for the occasional period where Loop B is trying to swap over to thread 1 at the same time, so LabVIEW automatically shifts A over to 5 and then oh nooooooo....

The above is (maybe?) an exaggeration but hopefully it makes sense 🙂

If you want an actual answer, I'd say maybe use a semi-limited semaphore (for 10 threads, make a size-4 semaphore, for example), where I believe LabVIEW will preserve the order of each request to unlock the semaphore, so each loop will get a fair opportunity to do its work. Plus, if the other threads aren't fast enough, you get a timeout and can still make sure data updates occur.
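The semaphore suggestion can be sketched in Python with `threading.BoundedSemaphore` and `acquire(timeout=...)`: 10 hypothetical publishers share 4 slots, and a publisher that times out does its work anyway so its data update still happens. Python's semaphore makes no promise about the order in which blocked threads wake, so this illustrates only the concurrency cap and the timeout, not the ordering the poster believes LabVIEW's semaphores provide. `Probe`, `publisher`, and `run` are illustrative names, and `time.sleep(work_s)` is a stand-in for real work.

```python
import threading
import time


class Probe:
    """Tracks how many publishers are inside their work section at once."""

    def __init__(self):
        self._lock = threading.Lock()
        self.active = 0
        self.peak = 0
        self.timeouts = 0

    def enter(self):
        with self._lock:
            self.active += 1
            self.peak = max(self.peak, self.active)

    def leave(self):
        with self._lock:
            self.active -= 1

    def note_timeout(self):
        with self._lock:
            self.timeouts += 1


def publisher(sem, probe, period_s, iterations, acquire_timeout_s, work_s):
    for _ in range(iterations):
        got = sem.acquire(timeout=acquire_timeout_s)
        if not got:
            probe.note_timeout()  # proceed anyway so the update still occurs
        probe.enter()
        try:
            time.sleep(work_s)  # stand-in for generating/publishing data
        finally:
            probe.leave()
            if got:
                sem.release()
        time.sleep(period_s)


def run(n_publishers=10, slots=4, period_s=0.02, iterations=3,
        acquire_timeout_s=1.0, work_s=0.005):
    sem = threading.BoundedSemaphore(slots)
    probe = Probe()
    threads = [
        threading.Thread(target=publisher,
                         args=(sem, probe, period_s, iterations,
                               acquire_timeout_s, work_s))
        for _ in range(n_publishers)
    ]
    for t in threads:
        t.start()
    for t in threads:
        t.join()
    return probe


if __name__ == "__main__":
    p = run()
    print("peak concurrent:", p.peak, "timeouts:", p.timeouts)
```

Note the trade-off built into the timeout: whenever a publisher gives up waiting and works anyway, more than `slots` publishers can be active at once, so the cap holds only as long as no timeouts occur.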