Timeout influence at Create Network Stream Endpoint

01-19-2017 04:15 AM

There's one thing about Network Streams that is unclear to me: how exactly does the timeout value affect endpoint creation?

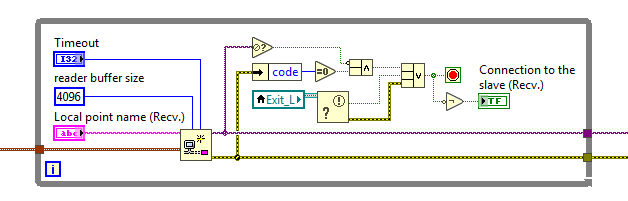

The question is not as silly as it seems. I have one program with an input and an output network stream running on PC1, and a second one with the same streams running on PC2. If I set the timeout to 150 ms or less, the connection between reader and writer in some cases isn't established at all. I tested this on two pairs of PCs: one pair with Windows 7 64-bit + LV 2016 64-bit on both machines, and one pair with Windows 7 64-bit + LV 2016 64-bit on one machine and Windows XP 32-bit + LV 2011 32-bit on the other. In the latter case the connection was established only one way, from XP with LV 2011 to Windows 7 with LV 2016. But when I set the timeout to 160 ms or above, everything works. The init loop on the master side is pretty simple:

The slave's loop is almost the same; it just has the URL input wired in the form "//IP-address/endpoint name". On both sides the writer endpoint connects to the reader endpoint by its IP address.

Following the docs on the subject, I have done these things:

- NI PSP Service Locator (lkads.exe) and LabVIEW are both added to Windows firewall exceptions;

- Windows firewall service is switched off and disabled completely;

- No 3rd party firewalls or AV on the machines;

- All networks on W7 are set to "Home";

- Pings go fine between both PCs;

- Shared folders work just fine;

- When trying to create reader/writer endpoints on both sides I can see active incoming / outgoing packet exchange in network adapter's parameters (the same is shown in tools like TCPView).

So, why does the timeout need to be higher than 150 ms? Why can't I set it to 50-100 ms (as in my other loops)?

01-19-2017 08:23 AM

Well, in my LabVIEW RT Application where I'm establishing 4 Network Streams between my PC Host and a Remote RT Target, I use a timeout of 15000 ms, with the proviso that if the Streams do not connect, they simply re-try. I wouldn't think to quibble if it were two orders of magnitude faster ... Note that, depending on what else is going on (and, I presume, one of your Endpoints is on a Windows OS, which is not so deterministic), a 10 msec difference (between 150 and 160 ms) is "in the noise" (so to speak).

Bob Schor

01-19-2017 08:31 AM

It could still be your network. If there is a lot of congestion on the network, it will take longer to establish the connection (lost packets have to be resent, collisions, etc).

01-20-2017 12:07 AM - last edited on 12-17-2025 05:08 PM by Content Cleaner

@Bob_Schor wrote:

Well, in my LabVIEW RT Application where I'm establishing 4 Network Streams between my PC Host and a Remote RT Target, I use a timeout of 15000 ms, with the proviso that if the Streams do not connect, they simply re-try. I wouldn't think to quibble if it were two orders of magnitude faster ...

Well, I'm using the same approach: if a connection attempt fails, both endpoints try to connect again and again. But I'm also using a Stop mechanism based on a notifier: when my Stop button is pressed, the notifier is set to True, all the loops must stop, and the whole app must shut down. So the shorter the timeout, the faster the app can react to Stop.
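A minimal sketch of this retry-plus-Stop pattern, in Python rather than LabVIEW. Here `try_connect` is a hypothetical stand-in for the Create Network Stream Endpoint call (assumed to return `None` when it times out), and `threading.Event` plays the role of the notifier; neither is an NI API.

```python
import threading
from typing import Callable, Optional, TypeVar

T = TypeVar("T")


def connect_with_retry(
    try_connect: Callable[[float], Optional[T]],  # hypothetical connect call
    stop: threading.Event,                        # stands in for the notifier
    attempt_timeout_s: float = 1.0,
    pause_s: float = 0.05,
) -> Optional[T]:
    """Retry try_connect until it succeeds or stop is set.

    Returns the connected endpoint, or None if stop was requested first.
    """
    while not stop.is_set():
        endpoint = try_connect(attempt_timeout_s)
        if endpoint is not None:
            return endpoint
        # Short pause between attempts; wakes up early if stop is set.
        stop.wait(pause_s)
    return None
```

With this structure the worst-case reaction time to Stop is roughly one attempt timeout plus one pause, which is why a short per-attempt timeout is attractive, as long as it is still long enough for a connection to complete.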

@Bob_Schor wrote:

Note that, depending on what else is going on (and, I presume, one of your Endpoints is on a Windows OS, which is not so deterministic), a 10 msec difference (between 150 and 160 ms) is "in the noise" (so to speak).

All my endpoints run on Windows. And that's really strange: I checked a dozen times, and when the timeout is 153 ms (to be very precise), no connection is made at all. But when I add just 1 ms to the timeout, it suddenly starts to work!

I could certainly just forget about this issue. But I've been working with LabVIEW for a while, and I'm interested in the details. It seems to me that it's somehow related to the way Network Streams work. In TCPView I see a huge number of TCP ports being allocated when trying to connect (starting from 59110, according to this). Since, as far as I know, NI-PSP uses the Logos engine, I tried setting these keys in Logos.ini (the article [article no longer available]):

    [logos]
    Global.DisableLogos=True
    Global.DisableLogosXT=False

and these two (in LogosXT.ini):

    [LogosXT]
    LogosXT_PortBase = 59110
    LogosXT_NumPortsToCheck = 100

It doesn't seem to affect the timing issue. I'm not sure exactly how Logos works internally, though.
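One way to see which ports in that range are already taken on a machine is to try binding each of them. The Python sketch below assumes the range from the `LogosXT_PortBase` / `LogosXT_NumPortsToCheck` keys (59110, 100 ports) and treats any failed bind as "in use". It does not show which process holds a port, and whether LogosXT really uses this range is taken from the settings above, not verified.

```python
import socket
from typing import List


def ports_in_use(base: int, count: int, host: str = "0.0.0.0") -> List[int]:
    """Return the TCP ports in [base, base + count) that cannot be bound.

    A failed bind is treated as "in use"; a permission error would be
    counted the same way, so read the result as a hint only.
    """
    busy = []
    for port in range(base, base + count):
        with socket.socket(socket.AF_INET, socket.SOCK_STREAM) as probe:
            try:
                probe.bind((host, port))
            except OSError:
                busy.append(port)
    return busy
```

Calling `ports_in_use(59110, 100)` before and during a connection attempt, and comparing the two lists, should roughly match what TCPView shows for that range.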

@crossrulz wrote:

It could still be your network. If there is a lot of congestion on the network, it will take longer to establish the connection (lost packets have to be resent, collisions, etc).

I think this is unlikely, because I tried it with several local networks, each made of two dedicated Gigabit network adapters and a fairly short piece of 8-wire cable. There are no other devices on those networks, so there is no congestion.
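To put a number on the network's share of the delay, the time of a plain TCP connect between the two PCs can be measured directly. This is only a rough Python sketch: the host and port are whatever listening TCP service is reachable on the other machine (an assumption, not something Network Streams provides), and a bare TCP handshake is at best a lower bound on what endpoint creation does.

```python
import socket
import time
from typing import List


def connect_times_ms(
    host: str, port: int, attempts: int = 20, timeout_s: float = 1.0
) -> List[float]:
    """Time `attempts` TCP connects to (host, port), in milliseconds.

    Failed or timed-out attempts are skipped, so a shorter result list
    than `attempts` is itself a sign of connection problems.
    """
    samples = []
    for _ in range(attempts):
        start = time.perf_counter()
        try:
            with socket.create_connection((host, port), timeout=timeout_s):
                samples.append((time.perf_counter() - start) * 1000.0)
        except OSError:
            pass  # refused, unreachable, or timed out
    return samples
```

If the samples on a direct Gigabit link come out far below 150 ms, the handshake itself is unlikely to be what needs the extra time, and the threshold more probably comes from the steps around it.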