Split 2D array into 25 2D arrays

Solved! 10-08-2018 07:44 AM

I'm trying to split a large (2045x1080) 2D array into 25 smaller arrays. Split array 1 would be elements 0, 5, 10, etc. of row 0, then skip rows 1, 2, 3 and 4 before getting elements 0, 5, 10, etc. again on row 5. Split array 2 would be elements 1, 6, 11, etc. of row 0, then skip rows 1, 2, 3 and 4 before doing the same again on row 5. Skipping a few to keep this example short, split array 6 would be elements 0, 5, 10, etc. of row 1, skipping rows 0, 2, 3, 4 and 5 and continuing on row 6.

Attached is an image of what I mean: each square is an array element, and I want to make 25 arrays that each contain every pixel labeled with the same number in the image. So split array 1 would contain all pixels labeled '1' (blue in the image), split array 2 all pixels labeled '2', etc. I am imaging with a hyperspectral camera that has a large CMOS chip with 2045 by 1080 pixels, but is covered in a large grid of repeating 5x5 filters, hence the 25 arrays.

Thanks in advance. If it isn't clear, please ask what you want me to clarify as I am having trouble properly explaining this using just text and an image.

10-08-2018 07:52 AM - edited 10-08-2018 07:52 AM

10-08-2018 08:09 AM

I have no idea how to start with this. Normally I'd just pick every nth element from a row using a for loop, but since my data isn't aligned by row but in squares spanning 5 rows, that won't work. An added problem is that the image processing is done in real time at 3 fps, and if I go ahead and run 25*2 for loops on 6.6 million array elements per second, it will probably get bogged down.

10-08-2018 08:24 AM

Hi Willem,

Normally I'd just pick every nth element from a row using a for loop, but since my data isn't aligned by row but in squares spanning 5 rows, that won't work.

Why not?

Pick every 5th row, pick every 5th column.

What's the problem with using two loops?
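To make the "every 5th row, every 5th column" suggestion concrete, here is a minimal sketch in Python rather than LabVIEW (the thread's own code is graphical). It assumes the image is held as a list of rows; the names `split_mosaic`, `TILE`, and the small 10x15 demo image are hypothetical and only for illustration.

```python
TILE = 5  # the filter mosaic repeats every 5 pixels in both directions


def split_mosaic(image, tile=TILE):
    """Return tile*tile sub-images from a list-of-rows image.

    Sub-image k holds the pixels at mosaic position
    (row offset k // tile, column offset k % tile).
    """
    subsets = []
    for row_offset in range(tile):          # outer loop: which row of the 5x5 tile
        for col_offset in range(tile):      # inner loop: which column of the 5x5 tile
            # Take every 5th row starting at row_offset,
            # and within each of those every 5th element starting at col_offset.
            subsets.append(
                [row[col_offset::tile] for row in image[row_offset::tile]]
            )
    return subsets


# Demo image: each pixel stores its 1-based filter number, as in the question's picture.
rows, cols = 10, 15
image = [[(r % TILE) * TILE + (c % TILE) + 1 for c in range(cols)]
         for r in range(rows)]

subsets = split_mosaic(image)
```

In this demo every pixel in `subsets[k]` carries the label `k + 1`, which is the grouping the question asks for. Whether two nested loops are fast enough at 3 fps would still need to be measured on the real data.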

10-08-2018 09:39 AM - edited 10-08-2018 10:06 AM

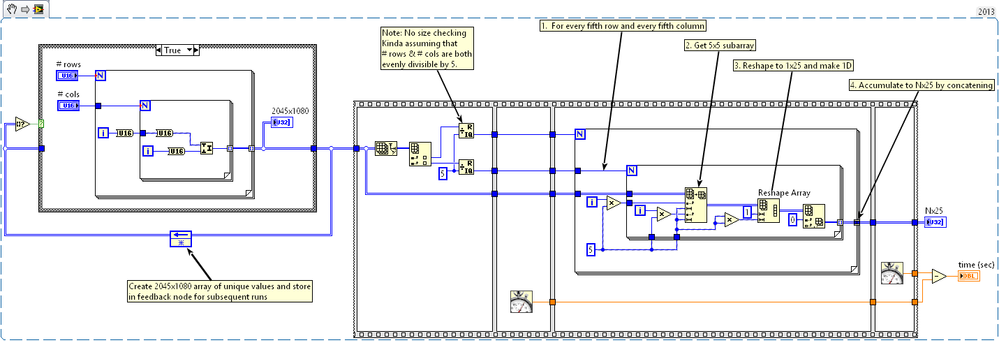

My first thought was to group those 5x5 subarrays of unique filter id's and work from there.

There are probably more optimal ways to approach this problem, but the code I tried (shown in the image) ran in ~50 msec on a several-year-old desktop PC. [Edit: changed display format of array contents to hex since values were generated in a way that makes more sense to see that way]

-Kevin P

10-08-2018 10:14 AM

So you have a large 2D array and want 25 smaller 2D arrays. Is this just for processing or do you want to have 25 2D array indicators? Why not create a 3D array with 25 planes to keep things together?

You could start thinking of it as reshaped to a 1D array, because in memory the elements that belong together (equally numbered in your image) are all equally spaced, and it is trivial to, e.g., create a new 2D array where each row holds the equally numbered elements reshaped as a 1D array. It could even be parallelized.
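As a rough illustration of the 1D view, the sketch below assumes the image is stored row-major in a flat list, so pixel (r, c) sits at index `r * width + c`. It is written in Python rather than LabVIEW, and `split_flat` and the 15x10 demo size are hypothetical names and values chosen for the example.

```python
TILE = 5  # size of the repeating filter tile


def split_flat(flat, width, height, tile=TILE):
    """Build a (tile*tile) x N array from a row-major flat image.

    Row k of the result holds, as a 1D list, every pixel at mosaic
    position (k // tile, k % tile).
    """
    planes = []
    for k in range(tile * tile):
        row_offset, col_offset = divmod(k, tile)
        plane = []
        for r in range(row_offset, height, tile):
            start = r * width + col_offset   # first matching pixel in image row r
            end = (r + 1) * width            # one past the last pixel of image row r
            plane.extend(flat[start:end:tile])  # equally spaced: stride of `tile`
        planes.append(plane)
    return planes


# Demo: a 15-wide, 10-high image whose pixel values equal their flat index.
width, height = 15, 10
flat = list(range(width * height))
planes = split_flat(flat, width, height)
```

Each value of `k` is computed independently of the others, which is what would make it possible to split the 25 subsets across parallel workers.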

What is the datatype of the array? Is each pixel just a few bits (e.g. 8)?

What do you really need to do with the subsets? Just process them into some derived data? Do some convolution? Extract some features? Just save them? Is this running on Windows, an RT system or an FPGA?

10-08-2018 12:12 PM

A couple followup thoughts:

1. The code I posted ended with an Nx25 2D array. Each column should represent one of the 25 subsets you want. Or you could transpose it to 25xN because then you could auto-index a For loop over these 25 subsets.

2. For your real app, it may be more efficient to pre-process the *pattern* of this rearrangement into an "index mapping" array. Then the real-time processing isn't trying to create and reshape 2D arrays; it just maps data elements from the original layout into the layout you want.

Let's say:

I is the 2045x1080 array of raw Image data

M is a 25xN array of mapping indices (here N = 88344 to account for every element)

D is the 25xN array of image data after rearranging.

The mapping indices found in M would let you do D(i,j) = I( M(i,j) ).

Then you could auto-index 2 nested For loops with the pre-defined M array and auto-index D on their output tunnels.
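The mapping idea above could be sketched as follows, in Python rather than LabVIEW. This assumes I is flattened row-major so that each entry of M is a single flat index; `build_index_map`, `apply_index_map`, and the 15x10 demo size are hypothetical and not taken from the posted VI.

```python
TILE = 5  # size of the repeating filter tile


def build_index_map(width, height, tile=TILE):
    """Compute M once, ahead of time.

    M[k][j] is the flat (row-major) index in the raw image of the
    j-th pixel belonging to subset k.
    """
    index_map = [[] for _ in range(tile * tile)]
    for r in range(height):
        for c in range(width):
            k = (r % tile) * tile + (c % tile)   # which of the 25 subsets
            index_map[k].append(r * width + c)   # where that pixel lives in I
    return index_map


def apply_index_map(flat_image, index_map):
    """Per-frame step: D[i][j] = I[M[i][j]], a pure lookup."""
    return [[flat_image[i] for i in row] for row in index_map]


# Demo: a 15-wide, 10-high image whose pixel values equal their flat index.
width, height = 15, 10
index_map = build_index_map(width, height)   # done once
flat_image = list(range(width * height))     # stands in for one frame
rearranged = apply_index_map(flat_image, index_map)

# For the full 2045x1080 sensor, each of the 25 rows of M has
# (2045 // 5) * (1080 // 5) = 88344 entries, matching N above.
```

Only `apply_index_map` would run per frame; whether that beats the reshape-based approach is something to benchmark rather than assume.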

I'd expect this to be faster, but you ought to check it out rather than assume like me. (Plus, someone else may post a different and altogether more efficient solution).

-Kevin P