- Subscribe to RSS Feed

- Mark Topic as New

- Mark Topic as Read

- Float this Topic for Current User

- Bookmark

- Subscribe

- Mute

- Printer Friendly Page

Speed Showdown: Linear Array vs Variant Attribute for Data Storage/lookup

03-23-2018

03:04 PM

- last edited on

12-16-2024

08:33 AM

by

![]() Content Cleaner

Content Cleaner

- Mark as New

- Bookmark

- Subscribe

- Mute

- Subscribe to RSS Feed

- Permalink

- Report to a Moderator

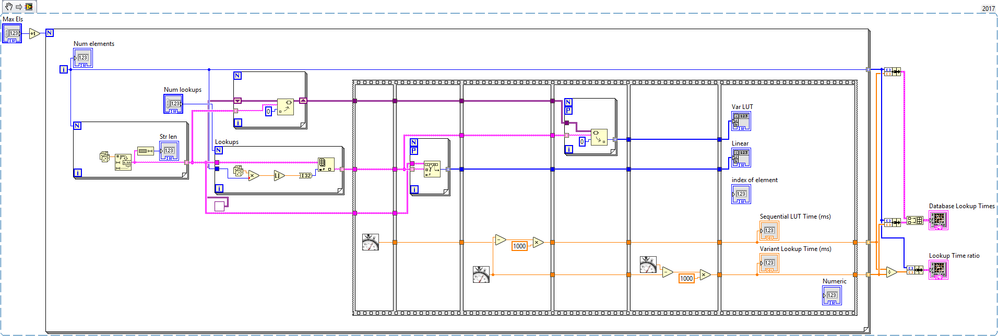

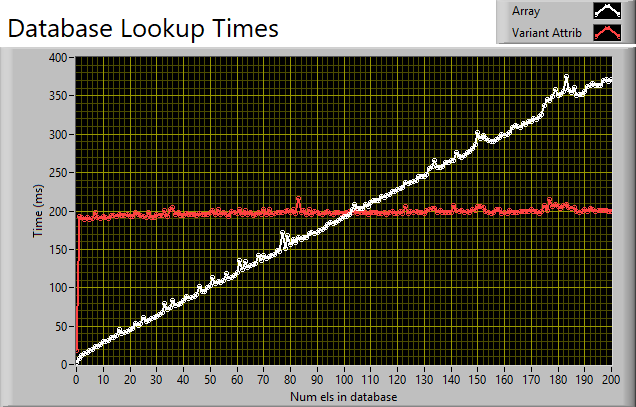

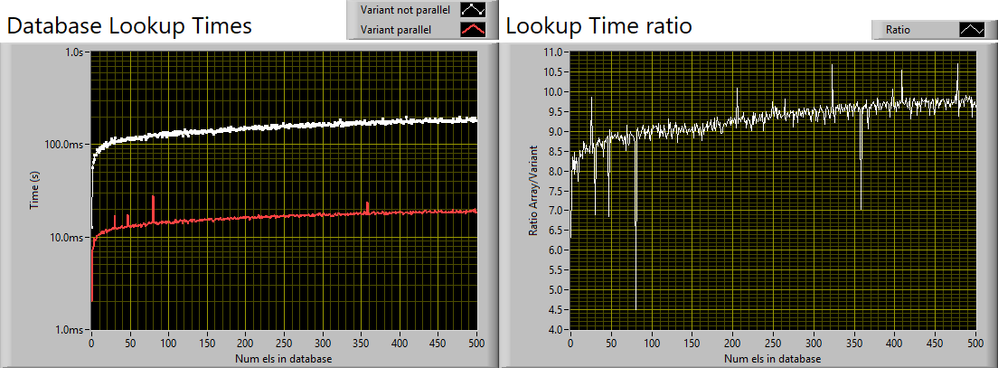

NI provides some guidance (http://www.ni.com/newsletter/51495/en/ tip 5) suggesting the use of variant attributes for key/value pair lookup. I wondered what size the data needed to be before the variant attribute performance was better than a simple linear search through an array.

While I don't claim what is below to be comprehensive, the answer is about 28 items. If you have fewer than this, and lookup performance might matter to your application, you might not want to use the Variant Attributes as a data store. The difference is most apparent at around 4-8 elements.

Comments, criticism, and extensions welcomed.

Data and snippets follow

03-23-2018 03:33 PM

- Mark as New

- Bookmark

- Subscribe

- Mute

- Subscribe to RSS Feed

- Permalink

- Report to a Moderator

I'll have a look on the weekend. I use variant attributes extensively for temporary results caching during fitting.

(details, ~4k entries, keys are 368byte binary strings and attribute is a simple I32, used as index in fixed sized arrays containing the actual spectra and age, etc. "Age" is needed for expiration where the oldest entry is always replaced with newest. Without expiration I would get slower and slower and eventually run out of memory 😄 Performance is great!)

03-23-2018

04:21 PM

- last edited on

12-16-2024

08:34 AM

by

![]() Content Cleaner

Content Cleaner

- Mark as New

- Bookmark

- Subscribe

- Mute

- Subscribe to RSS Feed

- Permalink

- Report to a Moderator

@AdamZeroK wrote:

Comments, criticism, and extensions welcomed.

- It typically helps to put reasonable default values into all controls before saving the snippet. We don't know what's reasonable!

- You should use "high resolution relative seconds" instead of the ticker (better units and better resolution)

- Make sure that all indicators are after the sequence frame.

- There is no need for the shift register in the variant lookup loop because the data is not modified.

- I would probably multiply [i] by two for the lookup generation to have 50% lookup failures later (failures are more expensive for the search because all elements need to be inspected)

- Your times drop to zero when disabling debugging because you don't use the outputs. I would always add outputs after the toplevel loops (last value is sufficient).

- Both lookup loops can be parallelized for better performance (after you remove the shift register in the variant lookup loop. Dual xeon here, 16 hyperthreaded cores total).

- My crossover is around 18-22, probably CPU dependent.

- ...

03-23-2018 04:36 PM - edited 03-23-2018 04:37 PM

- Mark as New

- Bookmark

- Subscribe

- Mute

- Subscribe to RSS Feed

- Permalink

- Report to a Moderator

wrote:

- ... can be parallelized for better performance (after you remove the shift register in the variant lookup loop.

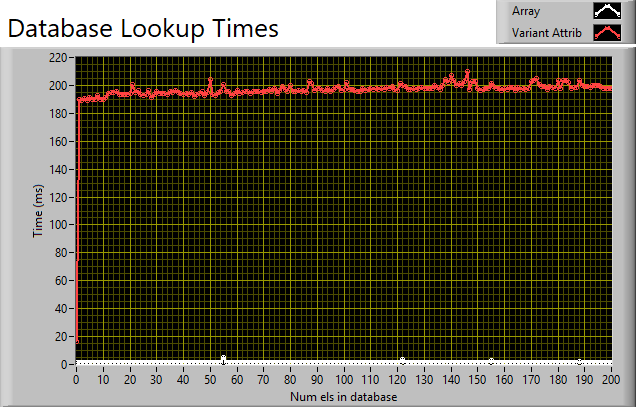

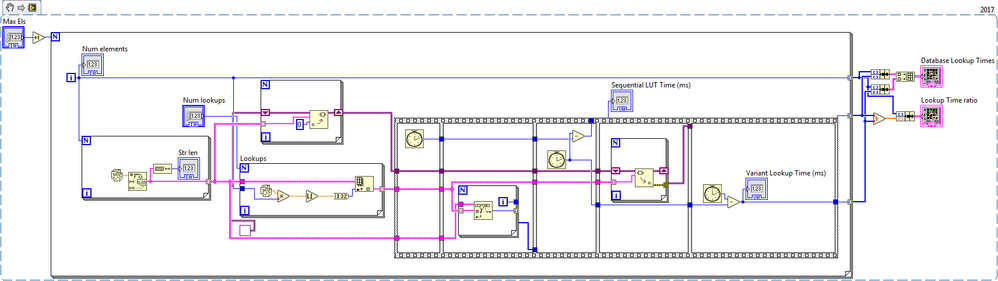

Here is comparing the same variant method with and without parallelization of the FOR loop. I get a ~9-10x speedup! This is for 1M lookup operations)

03-23-2018 05:38 PM

- Mark as New

- Bookmark

- Subscribe

- Mute

- Subscribe to RSS Feed

- Permalink

- Report to a Moderator

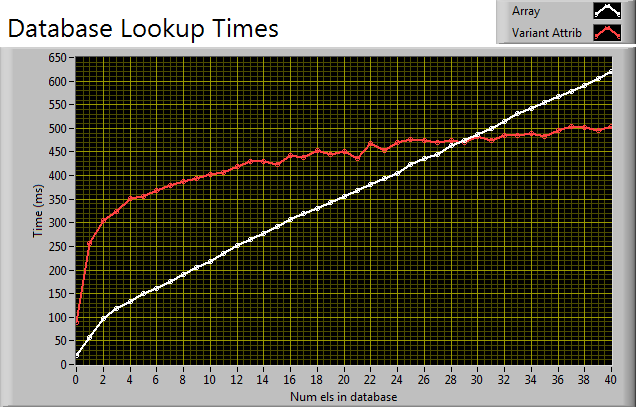

I noticed something else... there's no "payload" that is actually being searched for. This is just a "Is this string in a list" search, but it seems like the Variant attribute is much better suited for a key-data pair since it has a "Value" input, so I made a modified version where there's a chunk of data stored in the Variant attribute "value" and the array search instead operates on an array of clusters, where each cluster is the key string plus the chunk of data. Doing this seemed to make the crossover point shift to a lower number, but that might be just because using two FOR loops plus an unbundle is less efficient than a simple "Search 1D array" node.

Additionally, there is a small overhead associated with populating the Variant attribute in the first place. If you do a lower amount of lookups on a larger list of elements it can become a lot more significant portion of the total time. So possibly it needs to be put into the timing loop instead of left out of it?

03-23-2018 06:31 PM

- Mark as New

- Bookmark

- Subscribe

- Mute

- Subscribe to RSS Feed

- Permalink

- Report to a Moderator

I was about to comment on the same "lack of payload" that Kyle97330 just mentioned.

I'm going by memory now, but this seemed to be a similar flaw in some prior benchmarks results I'd seen for variant attributes so I rolled my own some time back. In my benchmark, I not only looked up the variant attribute value, I also *converted* it to its native type. This seemed an important step of realism for simulating actual lookup usage.

I don't recall exactly what datatype(s) I tried, but I recall the crossover point being about an order of magnitude larger (hundreds rather than tens of elements). I'm pretty sure I *didn't* approach it like altenbach suggested where ~50% of the lookups were manipulated to fail on purpose but I like that as a good additional factor to consider for benchmarking these lookup methods.

Note: as I recall, my array lookup was based on separate 1D arrays for the keys and values. I used the Search 1D Array primitive on the string array of Keys and then extracted the corresponding element from the 1D array of Values. Methods based on a single 1D array of clusters containing a Key-Value pair seemed intuitively less efficient to search.

-Kevin P

03-23-2018 07:30 PM

- Mark as New

- Bookmark

- Subscribe

- Mute

- Subscribe to RSS Feed

- Permalink

- Report to a Moderator

Thanks for feedback.

I used 4M lookups per run and it finished fairly quickly, but took enough time for the precision to not be an issue.

Parallelization:

As I usually the case with these benchmarks, the results are use-case dependent. In the use case I had in mind when setting this up (single queries with some time in between) parallelization wouldn't make sense.

Aside from this, parallelism speeds up both loops, and what I was after here is a comparison, right? In my test it parallelization relatively speeds up the linear search more than the variant (default parallelization options)

I am a bit confused however. If I use just last value of the loop in both cases the linear search time isn't rising, which seems clearly wrong; maybe some undesirable optimization occurring under the hood? OTOH I don't like using the indexing version because we might be getting allocations giving misleading results.

Data and updated snippet below.