Remove NaN

Solved! 05-07-2012 05:57 AM

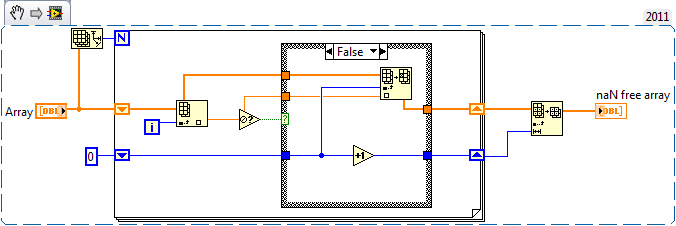

Thanks, I'm only now starting to learn these things. Will this be OK?

The best solution is the one you find it by yourself

05-07-2012 06:00 AM

05-07-2012 06:02 AM - edited 05-07-2012 06:07 AM

Hi Anand,

It gets better.

- It would be better to compare two operations that do the same thing. As of now you compare your "replace" algorithm with TiTou's "delete" algorithm...

- Updating indicators (like "iteration count") in the tested loops will hurt performance a lot. Don't do that for benchmarking...

- Even now the compiler might be too smart for you. It might "know" your test-array is built from NaNs and might precompile (part of) the loops...
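
The three points above can be sketched outside LabVIEW. This is a minimal Python illustration, not the VIs from this thread: both candidates do the same job (replacing NaNs with zero), nothing is displayed or updated inside the timed region, and the test data is generated at run time so it is not a constant. The function names and the 1 % NaN ratio are assumptions made for the example.

```python
import math
import random
import time

def replace_nans_new_list(values):
    """Build a new list in which every NaN is replaced by 0.0."""
    return [0.0 if math.isnan(v) else v for v in values]

def replace_nans_overwrite(values):
    """Copy once, then overwrite only the positions that hold a NaN."""
    out = list(values)
    for i, v in enumerate(out):
        if math.isnan(v):
            out[i] = 0.0
    return out

def time_once(func, data):
    """Time a single call; no printing or progress updates inside the timed region."""
    start = time.perf_counter()
    result = func(data)
    elapsed = time.perf_counter() - start
    return elapsed, result

# Test data is generated at run time, so it cannot be treated as a constant.
rng = random.Random(2012)
data = [float("nan") if rng.random() < 0.01 else rng.random() for _ in range(100_000)]

t_new, r_new = time_once(replace_nans_new_list, data)
t_over, r_over = time_once(replace_nans_overwrite, data)

# Both candidates must give the same result, otherwise the timing comparison is meaningless.
assert r_new == r_over
print(f"new list: {t_new:.6f} s, overwrite: {t_over:.6f} s")
```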

05-07-2012 06:09 AM

05-07-2012 06:10 AM

Looks cool. Learnt a new thing today 🙂

The best solution is the one you find it by yourself

05-07-2012 06:17 AM

@GerdW wrote:

Uf... it's a bit unfair to fill the array with only NaNs... I mean, when I tested my algo I used the kind of data I had, that was arrays between 100k and 2M samples with a max of 10 NaNs for each 100k samples...
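
Test data of the kind described here (large arrays with at most 10 NaNs per 100k samples) could be generated along these lines. This is a hedged Python sketch; the helper name `make_sparse_nan_data`, its parameters, and the seed are assumptions for illustration only.

```python
import math
import random

def make_sparse_nan_data(n_samples, max_nans_per_block=10, block=100_000, seed=0):
    """Return random samples with at most max_nans_per_block NaNs in each block."""
    rng = random.Random(seed)
    data = [rng.random() for _ in range(n_samples)]
    for start in range(0, n_samples, block):
        end = min(start + block, n_samples)
        # Pick how many NaNs this block gets, then choose distinct positions for them.
        n_nans = rng.randint(0, min(max_nans_per_block, end - start))
        for idx in rng.sample(range(start, end), n_nans):
            data[idx] = float("nan")
    return data

data = make_sparse_nan_data(200_000)
nan_count = sum(math.isnan(v) for v in data)
print(f"{len(data)} samples, {nan_count} NaNs")
```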

We have two ears and one mouth so that we can listen twice as much as we speak.

Epictetus

05-07-2012 06:23 AM

05-07-2012 06:29 AM

That's what I was expecting.

What I'm wondering now is what happens memory-wise. Is LabVIEW smart enough to do Anand's loop in place?

We have two ears and one mouth so that we can listen twice as much as we speak.

Epictetus

05-07-2012 06:31 AM - edited 05-07-2012 06:36 AM

From looking into the algorithms (and their differences), I would guess that TiTou's IS indeed slower than Anand's for increasing numbers of NaNs to be replaced.

But I have to point out that with increasing array size, TiTou's will outperform Anand's since it reuses memory (works on the original array "in place") whereas Anand's creates a completely new array.

Norbert

EDIT: Argh, I misread the code twice, sorry....

EDIT 2: In order to get proper benchmark results, you have to execute the VI at least two times without unloading it in between (or doing other performance-wasting stuff). The first run gives incorrect values because of "organization stuff" like allocating memory.
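
The same idea, sketched in Python rather than LabVIEW: run the code once as a warm-up, discard that run, and only then take timings. The `benchmark` helper and its `warmup` and `repeats` parameters are assumptions made for this example, not part of any VI in the thread.

```python
import math
import time

def remove_nans(values):
    """Return a new list without the NaN elements."""
    return [v for v in values if not math.isnan(v)]

def benchmark(func, data, warmup=1, repeats=5):
    """Run func a few times untimed, then time it repeats times."""
    for _ in range(warmup):
        func(data)  # warm-up run, result and duration are discarded
    timings = []
    for _ in range(repeats):
        start = time.perf_counter()
        func(data)
        timings.append(time.perf_counter() - start)
    # The minimum is the run least disturbed by other activity on the machine.
    return min(timings), timings

data = [float("nan") if i % 1000 == 0 else float(i) for i in range(100_000)]
best, timings = benchmark(remove_nans, data)
print(f"best of {len(timings)} runs: {best:.6f} s")
```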

----------------------------------------------------------------------------------------------------

CEO: What exactly is stopping us from doing this?

Expert: Geometry

Marketing Manager: Just ignore it.

05-07-2012 06:49 AM - edited 05-07-2012 06:53 AM

A comment first on the comparison:

You cannot really do the timing-test that way:

- The two methods you are comparing do not do the same job: TiTou is removing the NaN elements, while you, Anand, replace them with zeroes.

- Timing-wise, running both methods in parallel will cause them to interfere with each other.

- It only tests an extreme case where all the elements are NaNs.

Now, to the suggested solutions:

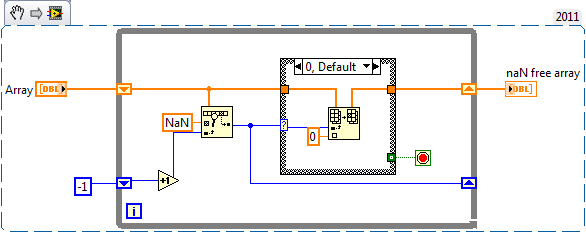

TiTou's solution for NaN removal trades memory for speed by creating what could be a huge array of booleans, and then potentially wastes all that speed (and additional memory) by resizing every time a NaN is found. If the arrays are small and/or contain very few NaNs the penalty is limited, but if that is not the case it can be severe. In general repeated use of any array resizing function should be avoided/minimized.

I have not spent much time on finding an optimal solution (in fact I'm quite sure this is not the optimal one), but the following code would definitely win a comparison test in cases where the task will in fact involve some work (i.e. large array with at least some NaNs):
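
The block diagram from this post is not reproduced here. As a rough textual stand-in, the following Python sketch contrasts deleting each NaN as it is found (one resize per NaN) with a single pass that compacts the array in place and truncates it once. It illustrates the general technique under the assumption that this is the kind of approach meant; it is not a transcription of the original diagram, and both function names are made up for the example.

```python
import math

def remove_nans_by_deleting(values):
    """Delete each NaN as it is found; every deletion shifts the rest of the list."""
    out = list(values)
    i = 0
    while i < len(out):
        if math.isnan(out[i]):
            del out[i]  # resize on every hit
        else:
            i += 1
    return out

def remove_nans_single_pass(values):
    """Compact the valid elements towards the front, then resize once at the end."""
    out = list(values)
    write = 0
    for v in out:
        if not math.isnan(v):
            out[write] = v  # write index never overtakes the read position
            write += 1
    del out[write:]  # the only resize
    return out

sample = [1.0, float("nan"), 2.0, float("nan"), float("nan"), 3.0]
print(remove_nans_by_deleting(sample))   # [1.0, 2.0, 3.0]
print(remove_nans_single_pass(sample))   # [1.0, 2.0, 3.0]
```

Both functions return the same result; the difference is that the single-pass version moves each element at most once, while the deleting version shifts the tail of the array for every NaN it finds.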

As for replacing NaNs with zeroes, Anand's code is fine, but yes, using the search function instead of comparing the elements one by one is quicker, so a better approach would be like this:
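
The diagram for this is likewise not reproduced here. The control flow of a search-driven replacement might look like the Python sketch below: search for the next NaN, overwrite it, and continue searching from the following index. `find_nan` is a hypothetical helper standing in for a built-in array search; in Python it is still an element-by-element loop, so the sketch only shows the structure and says nothing about the speed gain seen in LabVIEW.

```python
import math

def find_nan(values, start=0):
    """Hypothetical search helper: index of the first NaN at or after start, or -1."""
    for i in range(start, len(values)):
        if math.isnan(values[i]):
            return i
    return -1

def replace_nans_with_zero(values):
    """Overwrite NaNs with 0.0 in place; the list is never resized."""
    idx = find_nan(values)
    while idx != -1:
        values[idx] = 0.0
        idx = find_nan(values, idx + 1)  # resume the search after the last hit
    return values

samples = [1.5, float("nan"), 2.5, float("nan")]
replace_nans_with_zero(samples)
print(samples)  # [1.5, 0.0, 2.5, 0.0]
```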