Real-Time Digital I/O - Hardware/Software selection.

12-12-2022 05:46 AM

Dear colleagues,

I have a short question about proper hardware selection for my task (I know this is a hardware question, but this forum is the most active one).

What I need is a "LabVIEW-controlled black box" with one TTL input and three outputs.

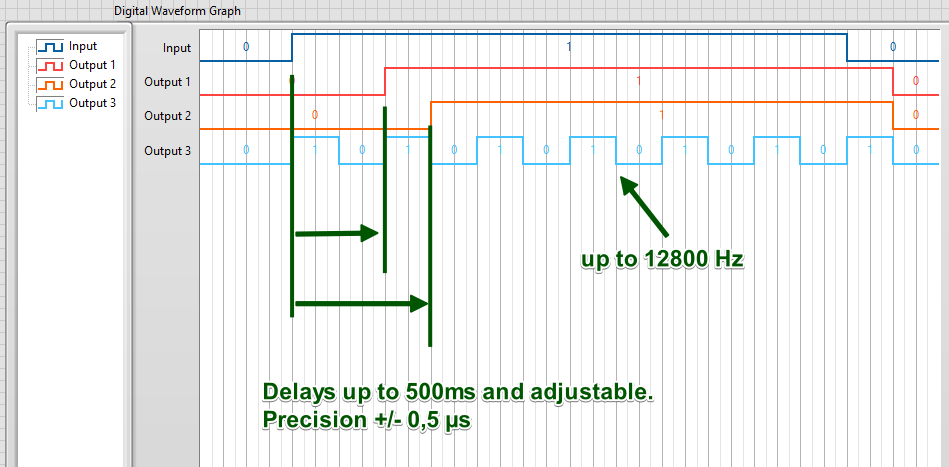

The logic: the device should wait for a high level on the input and, once it is detected, set outputs 1 and 2 to the high level after some delay. Output 3 should start generating pulses at a frequency of up to 12800 Hz.

Something like this, a kind of "delayed triggering":

This magic box should be connected to Ethernet, and I should be able to adjust the desired delays and frequency programmatically from a LabVIEW app running on the host PC (it doesn't matter how: simple TCP commands, network variables, etc.).

The jitter between input and output is important: ideally, the propagation delay should be better than half a microsecond. Outputs 1 and 2 should be able to generate a digital pattern with the desired high/low durations.

Currently I think I should take a cRIO-9053 together with an NI-9401 (a 100 ns C Series digital module), but I'm not sure:

Do I then need the LabVIEW FPGA Module, or is it possible to achieve my goal with NI-DAQmx only?

Any better idea of which hardware I need? Maybe CompactDAQ?

I have some experience with NI PCI/USB digital I/O, but not with CompactRIO/CompactDAQ/PXI.

Thank you in advance,

Andrey.

12-12-2022 05:56 AM

I don't think you could reliably trigger outputs with 0.5us precision on ordinary LabVIEW. You have delays there with minimum time of 1ms and even that is not that accurate. 0.5us sounds like a RT thing.

12-12-2022 06:01 AM

@AeroSoul wrote:

I don't think you could reliably trigger outputs with 0.5us precision on ordinary LabVIEW. You have delays there with minimum time of 1ms and even that is not that accurate. 0.5us sounds like a RT thing.

Yes, sure, I understand this; that is why I would like to cover it with a Real-Time CompactDAQ, which has an FPGA on board.

I do not want to use LabVIEW Real-Time on the Windows host PC.

12-12-2022 07:07 AM

If Kevin pops in here, he may have a set DAQmx solution.

To get the response you are after, you will pretty much need to be down in the FPGA. I have a current application that is somewhat similar. I went with a cRIO-9053 or cRIO-9040 (I had to switch between them due to the chip shortage issues over the last year) with an NI-9402. The 9402 is LVTTL and BNC, but it has a shorter propagation delay (55 ns). It is compatible with most things that need TTL.

12-12-2022 11:03 AM

I'd first want to explore whether this whole scheme could be DAQmx-able, without needing RT or FPGA. The portion of the signal timeline that's *visible* definitely could be done purely in DAQmx as a one-shot kind of deal. Where it could get trickier (and maybe not feasible) depends on answers to questions not presently visible.

1. How often might the input signal present a rising edge to react to?

2. For how long should output 3 be generated?

3. What's the shortest expected time from the end of output 1,2, or 3 until the next rising edge on the input?

4. What are the consequences of "missing" an input rising edge entirely?

A few notes on where my thoughts are heading:

A. I see output 3 as a counter pulse train. The "Initial Delay" should be set as near 0 as possible so that the first rising edge of output 3 can *nearly* coincide with the input rising edge.

B. Outputs 1 and 2 could either be single counter pulses or DO lines in a common task.

C. I don't know the cRIO line but would expect that anything from the past ~8 years would support retriggering for these output tasks. So once you set up the tasks, they'll repeatedly respond according to the timing you configured until your host app decides the timing parameters need to change.

D. Note however that under DAQmx, the ability to change pulse timing parameters "on the fly" is only available for continuous pulse trains. And only the high and low times at that, not the initial delay property.

Similarly, if outputs 1 & 2 are DO lines, you'd need to stop the task to be able to write new data to the buffer that represents the new timing needs.

E. So it seems that a DAQmx solution would require time to stop the task, change timing parameters, and start it again before the next time it's *necessary* to react to an input rising edge. Under Windows I'd plan on a minimum of maybe 5-10 msec, but with no guarantees it won't take 100 or more once in a while.

Under RT, you can expect the reprogramming time to be more regular, especially if outputs 1 & 2 are counter pulses. (If they're DO lines, you might be getting into memory allocation if the DO buffer size needs to change.)

F. If you can't afford any "misses" and the needed time for reprogramming exceeds what's available (from question #3 above), then I'm more doubtful that a purely DAQmx approach will be feasible.
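As a rough illustration of note A, here is a minimal Python sketch of how a counter pulse train at the requested 12800 Hz could map onto whole timebase ticks. The 80 MHz timebase, the 50% duty cycle, and the function name `pulse_train_ticks` are assumptions for illustration only; this is plain arithmetic, not a DAQmx call.

```python
def pulse_train_ticks(freq_hz, duty_cycle=0.5, timebase_hz=80_000_000):
    """Split one pulse period into whole timebase ticks (high, low).

    timebase_hz is an assumed counter timebase; check the chassis specs.
    """
    period_ticks = round(timebase_hz / freq_hz)
    high_ticks = round(period_ticks * duty_cycle)
    low_ticks = period_ticks - high_ticks
    # The frequency actually produced once the period is a whole tick count.
    actual_freq_hz = timebase_hz / (high_ticks + low_ticks)
    return high_ticks, low_ticks, actual_freq_hz


high, low, actual = pulse_train_ticks(12_800)
print(high, low, actual)  # 3125 3125 12800.0
```

With these assumed numbers, 12800 Hz divides the timebase evenly (6250 ticks per period), so no frequency error is introduced. Other frequencies may land slightly off target.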

-Kevin P

12-13-2022 03:13 PM

Thank you so much, Kevin, for such a detailed explanation!

Does "DAQmx-able" mean that I can achieve this with a suitable NI PCI I/O card running under Windows?

Technically, the diagram above is just a "single-shot experiment" invoked by the user. The delays, timing, and frequencies will be selected manually from presets prior to the test and then written to the I/O device (cRIO). When the cRIO is ready (waiting for the trigger), the operator will hit the "Start" button and the experiment will start. When it is finished, another preset will be selected and everything is repeated. The time between experiments (the shortest time from the end of output 1, 2, or 3 until the next rising edge on the input) is a few minutes, so there is more than enough time to prepare the I/O and restart the tasks with new parameters. During each experiment the input is triggered only once. Once the trigger is detected, the system will run fully autonomously, without any further changes to the timing "on the fly".

The pulse train must already be active before the moment the outputs are triggered. In theory it can be started together with the input trigger; no strict synchronization is required here. Output 3 shall be generated for a few seconds, but it must also be possible to generate the train continuously (like a continuously running signal generator).

Importantly, the consequences of "missing" an input rising edge entirely are very severe. This should never happen (as long as the hardware is OK, of course) and is wholly unacceptable under any circumstances. By the way, what is the shortest input pulse that the NI 9401/9402 modules can detect with a 100% guarantee?

The diagram above is the "Minimum Viable Product". As a next step, I may need several pulses on the outputs with irregular durations, as in the diagram below, where all t0...t7 are slightly different (typically around 20...250 ms):

Would it be possible to configure such a task in DAQmx? It looks like I should prepare a waveform with this signal and then use DAQmx Timing / DAQmx Write to generate such a train, shouldn't I?

Andrey.

12-13-2022 03:32 PM

I do digital outputs like that all the time with DAQmx alone. I don't know the latency between a trigger signal and the first pulse coming out, but I do know those triggers are very fast. I also don't know the minimum pulse width it'll detect, but again I'm sure it's very fast.

For reference, I have to bitbang a serial signal using DAQmx digital writes running at ~1 MS/s and it works fine. I can stream several minutes' worth of constant serial data that way without using much in the way of hardware resources. A 12.8 kHz signal is WELL within the range of DAQmx's DIO, especially if you don't need to change anything on the fly. You will need to confirm that your specific modules meet your timing requirements, but so far they don't seem too bad. 500 ns corresponds to 2 MHz, which is faster than what I've done, but the base clocks running in most of these things are 20 or 80 MHz, so I think this is entirely doable from a DAQmx standpoint.
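To put the 500 ns figure next to the 20 and 80 MHz base clocks mentioned above, a small Python sketch of the arithmetic follows. The helper name `ticks_in_window` is hypothetical, and the calculation only covers clock resolution; it says nothing about module propagation delay.

```python
def ticks_in_window(window_s, timebase_hz):
    """Return (whole ticks that fit in the window, tick period in ns)."""
    tick_ns = 1e9 / timebase_hz
    return round(window_s * timebase_hz), tick_ns


for timebase_hz in (20_000_000, 80_000_000):
    ticks, tick_ns = ticks_in_window(500e-9, timebase_hz)
    print(f"{timebase_hz / 1e6:.0f} MHz: {tick_ns} ns per tick, {ticks} ticks in 500 ns")
```

Under these assumptions a 500 ns window spans 10 ticks of a 20 MHz clock or 40 ticks of an 80 MHz clock, so the clock resolution itself leaves a fair amount of margin.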

From a 9401 module standpoint (datasheet here) you can see that the max switching frequency for 3 outputs is 10 MHz (more than enough). Kevin could correct me here but I think the I/O Propagation Delay of 100 ns is the value you're looking for for triggering.

BTW, I'd strongly consider cDAQ instead of cRIO for this. cDAQ means you can run your software on any host computer. cRIO means you'll need a separate program running on the cRIO chassis with another connection program running on a host computer. Alternatively I think they have chassis that support displays, but your control software here isn't very resource-intensive so I'd go with a 1-slot cDAQ chassis and call it a day.

12-13-2022 05:31 PM

I agree with BertMcMahan's comments. With the long time and the need for user interaction between triggering events, you need not worry about "missing" a trigger.

I have only minimal experience with cDAQ hardware, so I can't offer much in the way of details. I *did* check out the spec sheets for the 9171 and 9181 single-slot chassis. Based on their max internal timebase of 80 MHz, they may not support the option to perform your variable-interval outputs t0-t7 as a counter task. (Buffered counter output was introduced with the STC3 timing chip and X-series MIO devices circa 2011. The STC3 *also* bumped the max internal timebase to 100 MHz from the previous 80 MHz found in the STC2 and NI-TIO chips. So I suspect but do not know that a single slot chassis won't support buffered counter output.)

Anyway, as Bert described, you can still certainly define those output signals as part of a DO task. There's just a little more work to be done to come up with a clock rate that hits all your transition times as precisely as you need, and then create the digital data buffer that toggles the right bits at the right time *at* that sample rate.
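A minimal Python sketch of building such a buffer for one DO line is shown here. It assumes the intervals alternate between low and high, starting low; the thread's diagram is not available, so that ordering, the 1 kHz example rate, and the name `durations_to_samples` are all assumptions. The resulting list would still have to be written to the task, for example with DAQmx Write.

```python
def durations_to_samples(durations_s, rate_hz, start_level=False):
    """Expand alternating-level durations into one boolean per sample clock tick."""
    samples = []
    level = start_level
    edge_time_s = 0.0
    for duration_s in durations_s:
        edge_time_s += duration_s
        # Place each transition at the nearest sample index measured from t = 0,
        # so rounding errors do not accumulate from interval to interval.
        end_index = round(edge_time_s * rate_hz)
        samples.extend([level] * (end_index - len(samples)))
        level = not level
    return samples


# Example: 20 ms low, 50 ms high, 30 ms low at an assumed 1 kHz sample clock.
buffer = durations_to_samples([0.020, 0.050, 0.030], rate_hz=1000)
print(len(buffer), buffer[19], buffer[20], buffer[69], buffer[70])
```

For two DO lines in a common task, one such list per line would be generated at the same sample rate and combined sample by sample.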

Note that your actual sample rate may differ from your requested rate because the device can only divide its internal timebase down by integers. You can find out your *actual* sample rate by calling a DAQmx Timing property node to *query* the sample rate after you call DAQmx Timing to request one. You may need to iterate over this process until you find an *actual* rate that lets you land sufficiently close to every transition time for both DO lines.
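The iteration described above can be previewed offline with a little Python arithmetic. This sketch assumes an 80 MHz timebase and that the device picks the nearest integer divisor; the real rounding behavior may differ, so the value queried from the DAQmx Timing property node remains the authority. Both function names are hypothetical.

```python
def actual_rate(requested_hz, timebase_hz=80_000_000):
    """Rate produced by the nearest integer divisor of the (assumed) timebase."""
    divisor = max(1, round(timebase_hz / requested_hz))
    return timebase_hz / divisor


def worst_edge_error_s(edge_times_s, rate_hz):
    """Largest distance between a desired edge and its nearest sample instant."""
    return max(abs(round(t * rate_hz) / rate_hz - t) for t in edge_times_s)


# A 3 MHz request is not an integer division of 80 MHz, so the rate shifts.
print(actual_rate(3_000_000))   # about 2.963 MHz (80 MHz / 27)
print(actual_rate(1_000_000))   # 1000000.0 (80 MHz / 80)

# Compare candidate rates against the desired transition times (in seconds).
edges = [0.020, 0.070, 0.1003]
for requested in (10_000, 100_000, 1_000_000):
    rate = actual_rate(requested)
    print(rate, worst_edge_error_s(edges, rate))
```

Scanning a few candidate rates this way gives a starting point before confirming the actual rate on the hardware.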

All this stuff gets simpler if you can use a chassis that supports buffered counter output. Then outputs 1 & 2 are separate counter tasks, each with a small buffer containing the actual pulse timing parameters t0-t7. And you'll be able to hit your transition timing targets to within 1 cycle of the 80 MHz timebase. (Technically, the propagation delay alone is longer than that, but if you compensate the very first interval to account for such things, the subsequent intervals should prove extremely precise.)
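For the buffered counter approach, the conversion from intervals to timebase ticks, with compensation applied only to the first interval, could look like the following Python sketch. The 80 MHz timebase and the 100 ns trim (borrowed from the 9401 propagation delay figure quoted in this thread) are assumptions; the real compensation value would need to be measured. The name `intervals_to_ticks` is hypothetical.

```python
def intervals_to_ticks(intervals_s, timebase_hz=80_000_000, first_interval_trim_s=0.0):
    """Convert each interval to whole timebase ticks, shortening only the first."""
    ticks = []
    for index, interval_s in enumerate(intervals_s):
        if index == 0:
            # Compensate fixed latency (e.g. propagation delay) once, up front.
            interval_s -= first_interval_trim_s
        ticks.append(round(interval_s * timebase_hz))
    return ticks


# Example: a 20 ms first interval trimmed by an assumed 100 ns, then 250 ms.
print(intervals_to_ticks([0.020, 0.250], first_interval_trim_s=100e-9))
# [1599992, 20000000]
```

Each later interval is quantized to within half a tick (6.25 ns at the assumed 80 MHz), which is in line with the "within 1 cycle" precision described above.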

Summary: this should be do-able with just DAQmx tasks running on a Windows PC connected to a 1-slot cDAQ chassis containing a simple DIO module like the 9401 or 9402. Even easier with a chassis that supports buffered counter output (which *might* include the 9171 or 9181, but I suspect not based on using the max timebase clock as a clue.)

-Kevin P