Reading a binary file of numerics with varying datatypes

Solved! 08-08-2018 06:03 AM

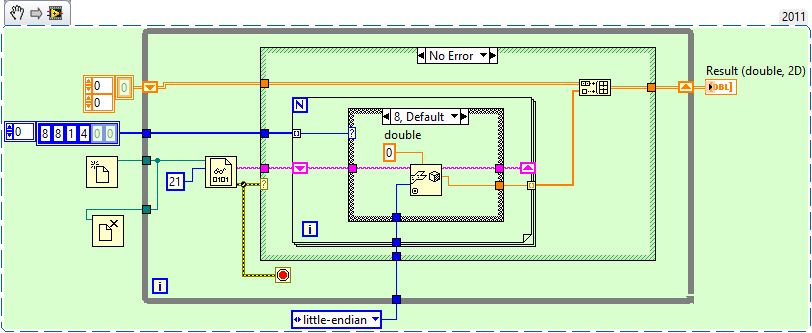

I think GerdW already sketched a solution, here is my version:

This example uses the byte pattern 8, 8, 4, 1. Take care with data interpretation: a 4-byte field, for example, can be a single-precision float SGL (as in my example), a signed integer I32, or an unsigned integer U32.
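
The interpretation point can be illustrated outside LabVIEW. The following is a minimal Python sketch, not the VI from the post: it assumes big-endian byte order (LabVIEW's default for binary files) and assumes that the 8-byte fields are DBL and the 1-byte field is U8, which the post does not state.

```python
import struct

# Assumption: big-endian byte order (">"), LabVIEW's default for binary files.
raw = struct.pack(">f", -1.5)  # the four bytes BF C0 00 00

as_sgl = struct.unpack(">f", raw)[0]  # SGL: single-precision float
as_i32 = struct.unpack(">i", raw)[0]  # I32: signed 32-bit integer
as_u32 = struct.unpack(">I", raw)[0]  # U32: unsigned 32-bit integer

print(as_sgl, as_i32, as_u32)  # -1.5 -1077936128 3217031168

# One record with the byte pattern 8, 8, 4, 1, read here as DBL, DBL, SGL, U8
# (the DBL and U8 choices are assumptions for illustration).
record = struct.Struct(">ddfB")
print(record.size)  # 21
```

The same four bytes give three different numbers, so the byte count alone is not enough to decode a field.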

-------------------

LV 7.1, 2011, 2017, 2019, 2021

08-08-2018 09:29 PM

Well, my Bright Idea was only "half-bright". I was working on allowing the user to create an array that describes the data (e.g. Double Precision, Double Precision, I32, I32, U8, I32, Double Precision), then use LabVIEW Scripting to create a TypeDef that defines a Cluster that exactly corresponds to this description. A little tricky, but definitely do-able. What I couldn't figure out was how, in the same program, to use this newly-created TypeDef to do the Quick-and-simple Read As An Array of Cluster solution that I posted earlier.
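
For comparison, in a text-based language the "describe the record as an array of types" idea does not need a generated TypeDef. Here is a rough Python sketch of that approach; the `TYPE_CODES` table, the function names, and the big-endian default are all hypothetical choices made for illustration, not something taken from the LabVIEW code discussed here.

```python
import struct

# Hypothetical mapping from LabVIEW-style type names to struct format codes.
TYPE_CODES = {
    "DBL": "d", "SGL": "f",
    "I32": "i", "U32": "I",
    "I16": "h", "U16": "H",
    "I8": "b", "U8": "B",
}

def make_record_struct(type_names, byte_order=">"):
    """Build a struct.Struct for one record from a list of type names."""
    codes = "".join(TYPE_CODES[name] for name in type_names)
    return struct.Struct(byte_order + codes)

def read_records(data, record):
    """Decode bytes into a list of tuples, one per complete record."""
    usable = len(data) - len(data) % record.size  # ignore a trailing partial record
    return [record.unpack_from(data, off) for off in range(0, usable, record.size)]

# The layout given as an example in the post above.
layout = ["DBL", "DBL", "I32", "I32", "U8", "I32", "DBL"]
record = make_record_struct(layout)

# Two synthetic records, packed and decoded again.
data = record.pack(1.0, 2.5, -3, 4, 255, 6, 7.25) * 2
rows = read_records(data, record)
print(record.size, rows[0])
```

With an explicit byte-order prefix, `struct` adds no padding, so the record size is the plain sum of the field sizes.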

I'd actually done this earlier, but had the luxury of having the format "relatively fixed" (until we changed the Program to handle additional parameters). So much for the "automatic" routine.

So I'll go back to my original suggestion -- if you know the format of the data record, create the appropriate Cluster to define the precise data types (including not only the number of bytes, but also the distinction between Floats, Integers, Unsigned Integers, and even more exotic numeric types such as Complex and Fixed Point). If you have 2 or 3 "versions", you can create corresponding Clusters for each and then ask the User to "Choose the Format". If your various formats differ in "byte size" (for example, the example in the first paragraph was 37 bytes, while 8 Dbls would be 64 bytes), you might be able to determine the Cluster to use by examining the file size and seeing whether it is a multiple of 37 or 64 ...
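
The file-size check can be sketched in a few lines of Python. This is only an illustration of the idea: the candidate names, the `header_size` parameter, and the big-endian layout are assumptions, and the function takes a size in bytes (for a real file that could come from `os.path.getsize`).

```python
import struct

# Candidate record layouts (hypothetical names). No padding is added
# because the byte order is given explicitly.
CANDIDATES = {
    "mixed": struct.Struct(">ddiiBid"),  # 8+8+4+4+1+4+8 = 37 bytes
    "eight_dbl": struct.Struct(">8d"),   # 8 * 8 = 64 bytes
}

def matching_formats(file_size, header_size=0):
    """Return the names of all layouts whose record size divides the payload."""
    payload = file_size - header_size
    if payload <= 0:
        return []
    return [name for name, rec in CANDIDATES.items() if payload % rec.size == 0]

print(matching_formats(370))      # ['mixed']
print(matching_formats(640))      # ['eight_dbl']
print(matching_formats(37 * 64))  # ['mixed', 'eight_dbl']
```

The last call shows the limit of the trick: a size that is a common multiple of both record lengths cannot be resolved this way, so the result is a list rather than a single answer.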

Bob Schor

08-09-2018 02:49 AM

@Bob_Schor wrote:

if you know the format of the data record, create the appropriate Cluster to define the precise data types

I don't know the format until I've read the header, so hard coding a cluster is not an option.

@pincpanther: Your solution is similar to my previous post; however, that still gives me back incorrect values... I have now tried out your exact program (and even replaced the "deserialise string vi" with a typecast) without luck. The output I get from this routine is whole integers (represented as DBLs) throughout the array...

08-09-2018 03:00 AM

Hi doug,

I have now tried out your exact program (and even replaced the "deserialise string vi" with a typecast) without luck. The output I get from this routine is whole integers (represented as DBLs) throughout the array...

DeserializeString should be fine (with more options than TypeCast).

Can you also attach your data file? (A smaller version of it with just a few kB of data would be nice.)

(StringToDecimalNumber is nonsense when your data is saved in "binary"…)

08-09-2018 03:11 AM

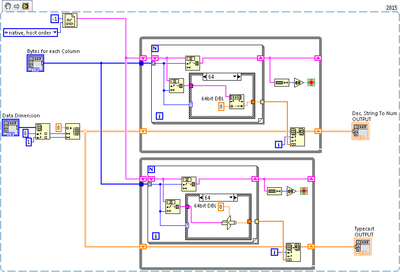

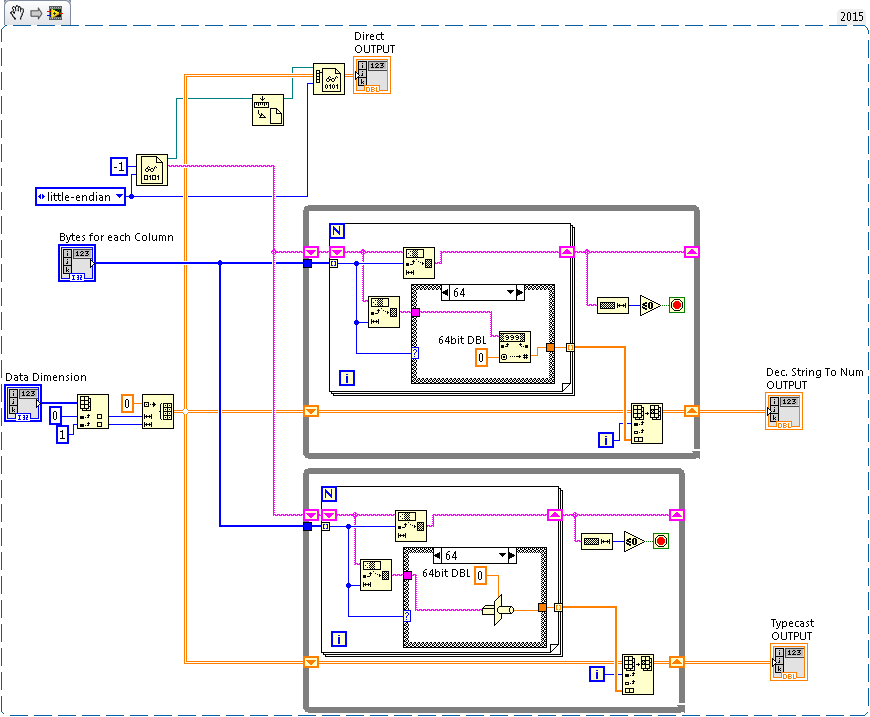

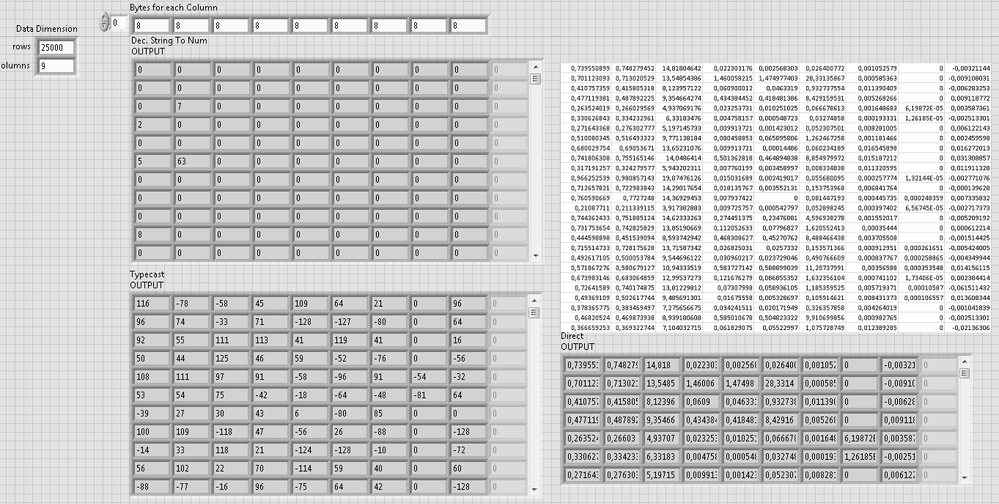

I've attached the data file again (1.7 MB, zipped because of the .bin extension) and also an updated version of the previous pictures, in which I now also use the 'Preallocated Read from Binary File' vi, which works great!

(Again, why don't I just use that? Because for the example file provided, all the data was originally 64-bit DBL. But for future uses I can't make that assumption.)
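
For the all-DBL case, the read reduces to "interpret every 8 bytes as a double and cut the result into rows". A minimal Python sketch of that special case follows; it is not the 'Preallocated Read from Binary File' VI itself, and it assumes big-endian data, no header, and a known column count (nine here, matching the nine 8-byte columns mentioned later in the thread).

```python
import struct

def read_dbl_table(data, n_columns, byte_order=">"):
    """Decode bytes as a table of 64-bit floats with n_columns per row.

    Trailing bytes that do not fill a complete row are ignored.
    """
    n_rows = (len(data) // 8) // n_columns
    flat = struct.unpack_from(f"{byte_order}{n_rows * n_columns}d", data)
    return [list(flat[r * n_columns:(r + 1) * n_columns]) for r in range(n_rows)]

# Synthetic data: two rows of nine doubles, 0.0 .. 17.0.
sample = struct.pack(">18d", *(float(i) for i in range(18)))
table = read_dbl_table(sample, 9)
print(len(table), table[1][0])  # 2 9.0
```

This shortcut stops working as soon as one column has a different width or type, which is the concern raised above.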

08-09-2018 03:26 AM

Hi Doug,

you defined this "Bytes for each column" as an array of 9× the value "8".

In the conversion routine you convert an item of "8 bytes" into a U8 value - why do you wonder you get just U8 values???

You should rename your "bytes for each column" into "bits for each column" and input "64" instead of "8"…

08-09-2018 03:42 AM - edited 08-09-2018 03:49 AM

@GerdW wrote:

why do you wonder you get just U8 values???

I can't follow you...

That array only holds the number of ASCII characters to be read from the string. Its datatype should be irrelevant! An '8' in that array only means "read 8 characters (aka a 64-bit DBL) from the string". I've just tried out changing the datatype of that array and it has no impact on the program (as it should be, since the 'String Subset' vi expects an INT anyway).

08-09-2018 03:54 AM

08-09-2018 04:05 AM

Thank you! That was indeed one issue. That is now fixed, but I am still getting wrong numbers (but at least they look like floats now, albeit with very large exponents...)
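
The thread does not say what caused the remaining wrong values. One common cause of floats with implausible exponents is a byte-order mismatch between writer and reader, so it may be worth checking. The Python sketch below only demonstrates that effect on synthetic values; it is an assumption that this applies to the file discussed here.

```python
import struct

values = [0.1, 2.5, 1234.5]

for value in values:
    stored = struct.pack(">d", value)        # written big-endian
    right = struct.unpack(">d", stored)[0]   # read with the same byte order
    wrong = struct.unpack("<d", stored)[0]   # read with the opposite byte order
    # Reversing the byte order of the raw bytes recovers the original value.
    recovered = struct.unpack("<d", stored[::-1])[0]
    print(value, right, wrong, recovered)
```

If the decoded numbers become plausible after swapping the byte order of each field, the byte-order setting of the read or unflatten step is the place to look.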

@GerdW wrote:

but your case structure is expecting a value of "64" to call the case for DBL conversion!
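
The mismatch described in this quote can be reproduced in a few lines. This Python sketch is a stand-in for the case structure, not a copy of the block diagram: the table contents, the `convert` helper, and the behaviour of the "8" case (reading a single U8) are assumptions chosen to mirror the symptom of getting whole numbers.

```python
import struct

# Conversion table keyed by field width in BITS, like a case structure
# with cases labelled 64, 32 and 8 (hypothetical contents).
CONVERTERS_BY_BITS = {64: ">d", 32: ">f", 8: ">B"}

def convert(field, selector):
    """Decode the start of `field` using the case chosen by `selector`."""
    fmt = CONVERTERS_BY_BITS[selector]
    return struct.unpack_from(fmt, field)[0]

field = struct.pack(">d", 3.14)  # one 8-byte DBL field

wrong = convert(field, len(field))      # selector 8: lands in the U8 case
right = convert(field, len(field) * 8)  # selector 64: lands in the DBL case

print(wrong, right)  # 64 3.14
```

The slicing by byte count is fine in both calls; only the value used to pick the conversion has to be in the same unit as the case labels.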

08-09-2018 04:07 AM
