Reading a binary file of numerics with varying datatypes

Solved! 08-02-2018 05:50 AM - edited 08-02-2018 05:51 AM

I am reading numeric data from a binary file. The catch: the data is not consistent in the datatype.

Specifically, the file may contain:

64bit DBL | 64bit DBL | 32bit SGL | 64bit DBL | 8bit U8 | 32bit SGL | 64bit DBL

This pattern would then repeat, say, 10,000 times, giving (in this case) a 10,000-by-7 array. Naturally, each column would have to be converted to a 64bit DBL in order to be inserted into the array.

I have attached a zip with a sample file that contains 25,000*9 datapoints and needs to be loaded into a 25,000-by-9 array of DBLs, along with a VI that should do this, but doesn't.

Any ideas how to go about this?

In the provided file, all points were originally 64bit DBL, making this trivial, but I need to generalise this to handle various numeric datatypes.

Many thanks

08-02-2018 06:04 AM

Hi doug,

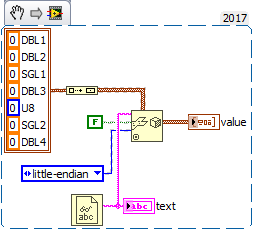

try something like this:

Your datastructure in the cluster MUST match the data in your file - right now it doesn't seem to be correct…

See here:

The text is shown in hex display: there are two DBL values (each 8 bytes) at the start, followed by 8 bytes of unknown content, then again 4 DBL values…

08-02-2018 06:12 AM

Create a cluster containing the desired numeric controls in the exact order (7 members in your example), make an array of such clusters, create an array constant from it.

Wire the constant to the data type input of the Read Binary File function, et voilà... in a single read.
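For readers who want a text-language analogue of the cluster approach, here is a minimal Python sketch using the standard `struct` module. The format string `"<ddfdBfd"` is an assumption that mirrors the 7-member layout from the question (DBL, DBL, SGL, DBL, U8, SGL, DBL) in little-endian order with no padding; the sample bytes are built in memory rather than taken from the attached file.

```python
import struct

# Assumed record layout from the question: DBL, DBL, SGL, DBL, U8, SGL, DBL.
# "<" means little-endian with no padding; use ">" for a big-endian file.
RECORD_FORMAT = "<ddfdBfd"

def read_records(raw: bytes, fmt: str = RECORD_FORMAT) -> list:
    """Decode every fixed-size record in `raw` in a single pass."""
    return list(struct.iter_unpack(fmt, raw))

# Build two sample records in memory instead of reading a real file.
sample = struct.pack(RECORD_FORMAT, 1.0, 2.0, 0.5, 3.0, 7, 0.25, 4.0)
sample += struct.pack(RECORD_FORMAT, 5.0, 6.0, 1.5, 7.0, 9, 0.75, 8.0)

records = read_records(sample)
print(len(records), records[0])
```

The format string plays the role of the cluster constant: it fixes the order and width of every member, so the whole buffer decodes in one call.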

Your VI is wrong for another reason: if you read a binary value into a string, you get... a binary representation of the value, not a text representation.

Decimal String to Number deals with text representations. You would need to cast your string to a numeric value, but you may run into byte order troubles. The byte order setting has no effect when you read the data as a string.
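The difference between a text representation and a binary representation can be sketched in Python as follows. This is only an illustration: the value `0.8125` and the helper `parses_as_text` are made up for the example, and little-endian byte order is assumed.

```python
import struct

value = 0.8125

text_bytes = str(value).encode("ascii")   # b"0.8125": six characters of text
binary_bytes = struct.pack("<d", value)   # 8 raw bytes of an IEEE 754 double

def parses_as_text(data: bytes) -> bool:
    """Return True if `data` can be parsed as a decimal number string."""
    try:
        float(data.decode("ascii"))
        return True
    except (UnicodeDecodeError, ValueError):
        return False

# The binary form has to be reinterpreted (cast), not parsed as text.
(decoded,) = struct.unpack("<d", binary_bytes)

print(parses_as_text(text_bytes))    # True
print(parses_as_text(binary_bytes))  # False
print(decoded)                       # 0.8125
```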

-------------------

LV 7.1, 2011, 2017, 2019, 2021

08-02-2018 01:14 PM

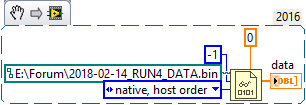

The key is that if you know the structure of the (binary) data written to the file, you can read it. Suggestion -- do not give the file the extension "txt", as too many programs will think it is text that they can open! I changed the extension to "bin" and wrote the following little routine:

I didn't originally have Mode wired, and it defaulted to Big Endian, and my "data" looked pretty screwy, so I specified Host Order, and got 225,000 reasonable-looking Dbl values (typically near, but less than, 1, with some values of 0).
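The effect of reading with the wrong byte order is easy to reproduce in a few lines of Python. This sketch assumes the writer was a little-endian machine (typical for a PC); the value `0.75` is just an example, not taken from the file.

```python
import struct
import sys

value = 0.75
raw = struct.pack("<d", value)        # bytes as a little-endian writer stores them

(as_little,) = struct.unpack("<d", raw)
(as_big,) = struct.unpack(">d", raw)  # same bytes, wrong byte order

print(sys.byteorder)   # byte order of the machine running this script
print(as_little)       # 0.75
print(as_big)          # a tiny, meaningless number
```

If plausible values only appear with one of the two settings, that is a strong hint about how the file was written.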

As has already been noted, if you replace the "Data Example" (the Dbl "0" wired into the top of the Read function) with a Cluster that represents the "mixed format of Array data", you can read and auto-parse any format file you choose. The "-1" says to do multiple reads of the same thing and build it into an Array -- if, however, what was written was a LabVIEW Array (with the Array header that provides the "shape" information for the Array), you'd make the Example an Array of Cluster and only do a single Read (assuming the file consisted of a single Array). Of course, if the file had multiple identically-sized Arrays, you could have an Array Example and specify -1 to read in "all the rows" ...

Bob Schor

08-07-2018 02:09 AM

Thanks for all the answers so far.

I don't think I've made myself clear at the beginning: the example provided is kinda trivial in that all numerics in the file originally were 64bit DBL.

I am having trouble GENERALISING this to any datatype. At the beginning of each file will be Keyword-Value pairs telling me the datatype stored in each 'column'. So for example: the very first example I mentioned would be:

Datatype1: 64, Datatype2: 64, Datatype3: 32, Datatype4: 64, Datatype5: 8, Datatype6: 32, Datatype7: 64

whereas at the beginning of the original file I provided (the file I provided is only the DATA segment and I have cut off this metadata part) would be:

Datatype1: 64, Datatype2: 64, Datatype3: 64, ..., Datatype8: 64, Datatype9: 64

I can extract these numbers no problem, and these numbers may change from file to file. That's how I always know how to interpret the bits in the DATA segment of the binary file.

Here's my pseudocode that I was hoping to implement:

    extract numeric datatypes into a U8 array    # length of this = number of columns
    get the DATA segment of the file             # provided in the .txt file
    initialise an array of 64bit DBL             # the converted values will be inserted into this

    r = 0
    for number of rows:
        c = 0
        for number of columns:
            datatype = datatypeArray[c]          # any of the possible bit lengths for numerics: 8, 32, 64 etc.
            read datatype-many bits from file    # i.e. for 32bit SGL, read 32 bits; for 64bit DBL, read 64 bits etc.
            convert read number of bits to DBL
            insert into array at position [r][c]
            c++
        r++
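A direct Python rendering of this pseudocode might look like the sketch below. It assumes the bit width alone identifies the type (64 is DBL, 32 is SGL, 8 is U8) and that the data is little-endian; `read_table` and `FORMAT_BY_BITS` are hypothetical names, and an in-memory buffer stands in for the DATA segment.

```python
import io
import struct

# Assumption: bit width alone identifies the type (64 -> DBL, 32 -> SGL, 8 -> U8).
FORMAT_BY_BITS = {64: "d", 32: "f", 8: "B"}

def read_table(stream, bit_widths, byte_order="<"):
    """Read records field by field and return rows of Python floats (DBL)."""
    rows = []
    while True:
        row = []
        for bits in bit_widths:
            chunk = stream.read(bits // 8)
            if len(chunk) < bits // 8:
                # End of data; a partially read row is discarded.
                return rows
            (value,) = struct.unpack(byte_order + FORMAT_BY_BITS[bits], chunk)
            row.append(float(value))
        rows.append(row)

# In-memory stand-in for the DATA segment of a file.
widths = [64, 64, 32, 64, 8, 32, 64]
data = struct.pack("<ddfdBfd", 1.0, 2.0, 0.5, 3.0, 7, 0.25, 4.0) * 3
table = read_table(io.BytesIO(data), widths)
print(len(table), table[0])
```

Reading one field at a time keeps the code close to the pseudocode, at the cost of many small reads.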

08-07-2018 02:28 AM - edited 08-07-2018 02:30 AM

Hi doug,

"I don't think I've made myself clear at the beginning"

I think it seems so… 😄

"I am having trouble GENERALISING this to any datatype."

You already know which datatypes to expect, so you can write a generic routine to read your data.

I would parse the header once to create an array of "data size". Your example

Datatype1: 64, Datatype2: 64, Datatype3: 32, Datatype4: 64, Datatype5: 8, Datatype6: 32, Datatype7: 64

would be converted to an array datasize = [8, 8, 4, 8, 1, 4, 8].

Then I would read blocks of sum(datasize) = 41 bytes and convert them to their values in a second subVI (fewer file operations, more in-memory computation; it's usually faster).
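As a rough illustration of this idea in Python: parse the header into byte sizes, then cut the data into blocks of sum(datasize) bytes and decode each block in memory. The header syntax is taken from the post above; the mapping from size to type (8 is DBL, 4 is SGL, 1 is U8), the little-endian byte order, and the names `parse_datasize` and `decode_block` are assumptions for the example.

```python
import re
import struct

# Assumption: 8 bytes -> DBL, 4 bytes -> SGL, 1 byte -> U8.
FORMAT_BY_SIZE = {8: "d", 4: "f", 1: "B"}

def parse_datasize(header: str) -> list:
    """Turn 'Datatype1: 64, Datatype2: 32, ...' into byte sizes [8, 4, ...]."""
    return [int(bits) // 8 for bits in re.findall(r":\s*(\d+)", header)]

def decode_block(block: bytes, datasize, byte_order="<") -> list:
    """Split one record-sized block at the field offsets and convert to floats."""
    row, offset = [], 0
    for size in datasize:
        field = block[offset:offset + size]
        (value,) = struct.unpack(byte_order + FORMAT_BY_SIZE[size], field)
        row.append(float(value))
        offset += size
    return row

header = ("Datatype1: 64, Datatype2: 64, Datatype3: 32, Datatype4: 64, "
          "Datatype5: 8, Datatype6: 32, Datatype7: 64")
datasize = parse_datasize(header)
record_size = sum(datasize)

# Two sample records built in memory stand in for the DATA segment.
data = struct.pack("<ddfdBfd", 1.0, 2.0, 0.5, 3.0, 7, 0.25, 4.0) * 2
rows = [decode_block(data[i:i + record_size], datasize)
        for i in range(0, len(data), record_size)]
print(datasize, record_size, rows[0])
```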

But one big problem remains: you cannot define datatypes at runtime. So you have the option to read all data according to their definitions (DBL, SGL, U8), but store them as DBL internally. A DBL value can hold SGL and all integer values (up to 32 bits) accurately. (You cannot store every U64/I64 value in a DBL wire; beyond 53 bits of precision you get rounding issues.) That's the way I go with my Key-Value-Pair implementation…

Or you put the read data into variants - but then you need to parse those variants later on.
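The precision limit for integers stored as DBL can be checked quickly in Python, whose `float` is an IEEE 754 double on common platforms. The helper name `survives_dbl` is made up for this sketch.

```python
def survives_dbl(n: int) -> bool:
    """Return True if integer `n` converts to a 64-bit float and back unchanged."""
    return int(float(n)) == n

print(survives_dbl(2**32 - 1))   # True: largest U32
print(survives_dbl(2**53))       # True
print(survives_dbl(2**53 + 1))   # False: needs 54 bits of precision
print(survives_dbl(2**64 - 1))   # False: largest U64
```

So 8-bit, 16-bit and 32-bit integers are safe to store as DBL, while 64-bit integers are safe only while their magnitude stays within 2**53.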

08-07-2018 10:01 AM

I think that you, GerdW, and I are all saying pretty much the same thing, just varying in how we implement it. The part that is still vague and unclear is what data, precisely, are contained in the file. You talk about having an array of DBL, yet some of your data appear to be 1 byte wide, which pretty much makes it either a Boolean array or a (very) short Integer.

When the data were written to the file, they were written representing (I was going to say "real", but a less ambiguous word is) actual data. One possibility is Dbl, Dbl, I32, Dbl, U8, I32, Dbl. If I were doing this, I'd do the following:

- Create a Cluster that describes the Native Data, and save it as a TypeDef (called Native Data.ctl, why not?).

- Use the code example I posted to read the entire file into an Array of these Clusters.

- Now, if you want to transform your data into a 2D array of Dbl, simply use a For loop to pass each Row in, unbundle the Cluster, convert those values that are not Dbls into a Dbl, build an Array of 7 Dbls, and pass the Row out (through the indexing tunnel) to make a 2D array of Dbls.

Bob Schor

08-07-2018 10:09 AM

He can't use the cluster because he needs to manage the format dynamically. In each file there is a header specifying the record structure.

08-07-2018 09:21 PM

Paolo,

Thank you for reminding me -- I'd forgotten that part of the problem was creating the solution more-or-less "on the fly".

I just had a wild idea about how to do this. It's crazy, but might work. I'm going to get some sleep and see if I can whip something up tomorrow, and if I can, I'll post it here.

Bob Schor

08-08-2018 05:22 AM - edited 08-08-2018 05:22 AM

Thank you pincpanter! That's right, I need to dynamically read a certain number of bits. How many, I extract from the header of the file.

I've once again attached a vi and sample binary file. The VI reads x amount of bytes and then interprets the read bytes depending on how many have been read (using a case structure).

The .bin file is the same as before.

Again, the file I am providing contains only 64bit DBLs, thus the 'Bytes for each Column Array' contains only 8s. This makes this exercise kinda pointless; however, given a different file with a different header, I will need to interpret the bytes as specified in the header. So a different file may specify that the data is stored as:

64bit | 64bit | 32bit | 32bit | U8 | 32bit | 64bit

in which case the 'Bytes for each Column Array' would be: 8, 8, 4, 4, 1, 4, 8.
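One way to handle a layout that is only known at run time, sketched here in Python instead of LabVIEW, is to build the record description from the 'Bytes for each Column Array' and then decode the whole DATA segment with it. The size-to-type mapping (8 is DBL, 4 is SGL, 1 is U8), the little-endian default, and the names `build_record_struct` and `load_table` are assumptions for this example.

```python
import struct

# Assumption: 8 bytes -> DBL, 4 bytes -> SGL, 1 byte -> U8.
CODE_BY_SIZE = {8: "d", 4: "f", 1: "B"}

def build_record_struct(bytes_per_column, byte_order="<"):
    """Compile a record layout from a list of column widths in bytes."""
    fmt = byte_order + "".join(CODE_BY_SIZE[n] for n in bytes_per_column)
    return struct.Struct(fmt)

def load_table(raw: bytes, bytes_per_column, byte_order="<"):
    """Decode all complete records in `raw` into rows of floats (DBL)."""
    record = build_record_struct(bytes_per_column, byte_order)
    usable = len(raw) - len(raw) % record.size   # ignore a trailing partial record
    return [[float(v) for v in fields]
            for fields in record.iter_unpack(raw[:usable])]

columns = [8, 8, 4, 4, 1, 4, 8]
# Two sample records plus one stray byte, built in memory for the demo.
sample = struct.pack("<ddffBfd", 1.0, 2.0, 0.5, 1.5, 7, 0.25, 4.0) * 2 + b"\x00"
table = load_table(sample, columns)
print(len(table), table[0])
```

Because a 4-byte or 8-byte column could also hold an integer type, a real header would need to say more than the width; the mapping above only covers the three types mentioned in this thread.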

Many thanks again for all your help