Queues - Enqueue / Dequeue Element Scheduling

03-21-2013 04:26 PM

Hi All,

I am looking at using a single-element queue (SEQ) as a mechanism for sharing access to a resource. Clients of that resource block on a Dequeue Element call (with a timeout for good measure), and once a client has acquired the element it enqueues it back to the SEQ when it no longer needs the resource. This is a pretty typical pattern for controlling access to a "singleton" resource, and it minimises race conditions.
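For comparison with text-based languages, here is a minimal Python sketch of the SEQ locking pattern just described, assuming `queue.Queue(maxsize=1)` as a stand-in for a LabVIEW single-element queue. `use_resource` is a hypothetical helper, not a LabVIEW API.

```python
import queue
import time

# A single-element queue (SEQ) used as a lock: the one token in the queue
# represents permission to use the shared resource.
seq: "queue.Queue[str]" = queue.Queue(maxsize=1)
seq.put("token")  # the resource starts out available


def use_resource(timeout_s: float) -> bool:
    """Acquire by dequeuing the token, release by enqueuing it back."""
    try:
        token = seq.get(timeout=timeout_s)  # blocks until the token is free
    except queue.Empty:
        return False  # timed out waiting for access
    try:
        time.sleep(0.01)  # stand-in for exclusive work on the resource
        return True
    finally:
        seq.put(token)  # hand the token back so the next waiter can proceed
```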

But it's not race conditions I am concerned about, it's starvation. If some clients bombard the SEQ for access, they may prevent other clients from accessing the resource in a timely fashion, or at all, and a simple example suggests this might be the case. Is there any documentation on how LabVIEW chooses which pending Dequeue Element (or Enqueue Element) call proceeds next when an element becomes available (or the queue has room), if multiple threads are calling the primitives? My guess is that the selection of the next caller is effectively random, based entirely on the LabVIEW execution scheduler, much like other synchronisation constructs in other languages such as "lock" in .NET or AutoResetEvent/ManualResetEvent, but I would appreciate any clarification.

I could always implement a scheduler for this purpose (such as a queue of requests for the resource), but why complicate things if it's not necessary? That would involve a running asynchronous process to handle the requests and notify clients when exclusive access was obtained, rather than static VI calls based only on state.
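A rough sketch of that scheduler alternative, in Python and under stated assumptions: a background thread (the "running async process") takes requests from a FIFO queue and notifies each client through its own reply queue. `Arbiter` and `access` are hypothetical names. Timeouts and cancellation are deliberately left out to keep it short; a client that gave up waiting would otherwise leave the arbiter waiting for a release that never comes.

```python
import queue
import threading
from contextlib import contextmanager
from typing import Iterator, Optional


class Arbiter:
    """Hypothetical scheduler: one background thread grants exclusive access
    to one client at a time, in the order the requests arrive."""

    def __init__(self) -> None:
        # Each request carries the client's private reply queue; None = stop.
        self._requests: "queue.Queue[Optional[queue.Queue]]" = queue.Queue()
        self._releases: "queue.Queue[None]" = queue.Queue()
        self._thread = threading.Thread(target=self._serve, daemon=True)
        self._thread.start()

    def _serve(self) -> None:
        while True:
            reply = self._requests.get()  # oldest outstanding request
            if reply is None:
                return  # shutdown requested
            reply.put(True)  # notify that client: access granted
            self._releases.get()  # wait until the client is done

    @contextmanager
    def access(self) -> Iterator[None]:
        reply: "queue.Queue[bool]" = queue.Queue(maxsize=1)
        self._requests.put(reply)  # join the back of the line
        reply.get()  # block until access is granted
        try:
            yield
        finally:
            self._releases.put(None)  # let the arbiter serve the next request

    def shutdown(self) -> None:
        self._requests.put(None)
        self._thread.join()
```

Each bus transaction would be wrapped in `with arb.access(): ...`. The trade-off mentioned in the post is visible here: correctness now depends on an extra thread that has to be started and shut down.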

Thanks for any clarification.

03-21-2013 04:48 PM - edited 03-21-2013 04:48 PM

Have you considered using a DVR instead of the SEQ? DVRs have better performance.

Mark Yedinak

Certified LabVIEW Architect

LabVIEW Champion

"Does anyone know where the love of God goes when the waves turn the minutes to hours?"

Wreck of the Edmund Fitzgerald - Gordon Lightfoot

03-21-2013 04:52 PM

AFAIK, the order is determined solely by the order of arrival (i.e. a VI arrives at the DeQ prim and enters a queue of users waiting to dequeue). In theory, you could probably come up with a use case where the VI doesn't even get to the dequeue prim because it's in a low-priority thread and higher-priority threads keep stealing its CPU time (although I think only subroutine priority can do that, but I'm not well versed on the details). If you have such problems, though, I'm not sure other mechanisms will necessarily help you.
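To make "order of arrival" concrete, a ticket lock is one common way to get strict FIFO ordering in a text-based language. This Python sketch is an illustration only, not a description of how LabVIEW queues are implemented internally; `TicketLock` is a hypothetical class, and callers that time out simply forfeit their place in line.

```python
import threading
from typing import Optional, Set


class TicketLock:
    """Sketch of a FIFO ("fair") lock: callers are granted access strictly
    in the order they arrive."""

    def __init__(self) -> None:
        self._cond = threading.Condition()
        self._next_ticket = 0  # ticket for the next caller to arrive
        self._now_serving = 0  # ticket currently allowed to hold the lock
        self._abandoned: Set[int] = set()  # tickets whose callers timed out

    def acquire(self, timeout: Optional[float] = None) -> bool:
        with self._cond:
            ticket = self._next_ticket
            self._next_ticket += 1
            if self._cond.wait_for(lambda: self._now_serving == ticket, timeout):
                return True
            # Timed out: mark the ticket so release() skips over it.
            self._abandoned.add(ticket)
            return False

    def release(self) -> None:
        with self._cond:
            self._now_serving += 1
            # Skip any waiters that already gave up.
            while self._now_serving in self._abandoned:
                self._abandoned.discard(self._now_serving)
                self._now_serving += 1
            self._cond.notify_all()
```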

As a side point, since LabVIEW 2009 there is the DVR (data value reference), which does the same thing as the SEQ, but which I find easier to use. It doesn't have the timeout option, but in principle that shouldn't be required at all if everyone is playing by the rules.
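A DVR accessed through an In Place Element structure behaves roughly like a value guarded by a lock that is held for the duration of the structure. The Python analogue below is a hypothetical sketch (`ValueRef` and `in_place` are illustrative names, not a LabVIEW API); like the In Place Element structure, it blocks without a timeout.

```python
import threading
from typing import Callable, Generic, TypeVar

T = TypeVar("T")


class ValueRef(Generic[T]):
    """Rough analogue of a DVR: the value is only touched while its lock is held."""

    def __init__(self, value: T) -> None:
        self._value = value
        self._lock = threading.Lock()

    def in_place(self, fn: Callable[[T], T]) -> T:
        # Blocks (no timeout) until the lock is free, applies fn, and writes
        # the result back before unlocking, like an In Place Element structure.
        with self._lock:
            self._value = fn(self._value)
            return self._value
```

For example, `counter = ValueRef(0)` followed by `counter.in_place(lambda v: v + 1)` from any number of threads gives a race-free increment.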

___________________

Try to take over the world!

03-21-2013 05:12 PM

Replying to @tst:

I have looked at using a DVR. In my case the shared resource is a network-bus system with a single "Master", which is actually a collation of multiple client calls to many slaves, so I figured the timeout option might be advantageous to avoid blocking a client indefinitely. When everyone is playing by the rules both options work fine, but as soon as I skew one of the clients (say, five clients polling at 50 ms and a single client at 2000 ms), the slower-polling client barely manages a single successful check-out over a 30-second period. All are running in the same execution system (Same as Caller) at Normal priority. In this case it seems scheduling would help guarantee that clients won't be starved if someone "abuses" the resource.

Out of interest, I'm wrapping this all up in two classes (generalising):

- A "Network" class that is an abstraction of the shared network connection. It contains private data such as the VISA reference, the SEQ reference, etc., and public methods to Create, Destroy, Reserve the Network and Release the Network. Reserve blocks on dequeuing the SEQ; Release enqueues it back. The reason I am using methods for this (rather than wrapping an In Place Element structure (IPE) around every client call) is that I figured it would be useful for the Network object to track who has it "checked out", as well as potentially how long it has been checked out. This should be private data not visible to the clients.

- A "Device" class that maintains a DVR to the Network object the Device belongs to. There is a method in this class that calls the Reserve network function using the DVR, performs its various activities (polling a real device) then calls the network Release function. The actual act of checking-in and out is not visible to this object, including any of that Network object private data I talked about.
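The two classes could be sketched in Python roughly as follows. This is a hypothetical translation, assuming `queue.Queue(maxsize=1)` plays the role of the SEQ and a `threading.Lock` protects the check-out bookkeeping. "Create" maps to the constructor, "Destroy" is left to the garbage collector, the Device holds a plain object reference where the original uses a DVR, and the returned reading is a placeholder for real bus traffic.

```python
import queue
import threading
import time
from typing import Optional, Tuple


class Network:
    """Hypothetical "Network": an SEQ token guards the bus, and the class
    privately records who has it checked out and since when."""

    def __init__(self, name: str) -> None:  # "Create"
        self.name = name
        self._seq: "queue.Queue[bool]" = queue.Queue(maxsize=1)
        self._seq.put(True)  # the bus starts out free
        self._meta = threading.Lock()  # guards _holder and _since
        self._holder: Optional[str] = None
        self._since = 0.0

    def reserve(self, client: str, timeout_s: float) -> bool:
        try:
            self._seq.get(timeout=timeout_s)  # dequeue = check out
        except queue.Empty:
            return False  # gave up waiting
        with self._meta:
            self._holder, self._since = client, time.monotonic()
        return True

    def release(self, client: str) -> None:
        with self._meta:
            if self._holder != client:
                raise RuntimeError(f"{client} does not hold {self.name}")
            self._holder = None
        self._seq.put(True)  # enqueue = check in

    def status(self) -> Tuple[Optional[str], float]:
        """Current holder (or None) and how long it has held the bus."""
        with self._meta:
            if self._holder is None:
                return None, 0.0
            return self._holder, time.monotonic() - self._since


class Device:
    """Hypothetical "Device": uses its Network without seeing its internals."""

    def __init__(self, name: str, network: Network) -> None:
        self.name = name
        self.network = network

    def poll(self, timeout_s: float = 3.0) -> Optional[str]:
        if not self.network.reserve(self.name, timeout_s):
            return None  # timed out waiting for the bus
        try:
            return f"{self.name}: reading"  # placeholder for real bus traffic
        finally:
            self.network.release(self.name)
```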

Appreciate any thoughts. I can always post some code for review as well.

03-22-2013 06:16 AM

Yes, code would be helpful. At face value, what I understand of your explanation indicates that it should work, but that probably means I'm not understanding something about how the code is actually implemented and where the actual bottleneck is. I'm assuming it's in the VISA call, where you wait for a reply, but it's unclear to me exactly how the connection to the users works.

03-24-2013 01:46 PM - edited 03-24-2013 01:56 PM

Replying to @tst:

Here's some code I'm currently playing around with. The RS485 Testing VI creates a single network and two devices on that network. It then has three loops: one to poll the network status (showing whether it's reserved, who by, etc.) and the other two to poll the two devices. The VI is intended to test how the network is being "shared". The Time 1 and Time 2 controls set how long FlowMeter:Read Random Data takes to mimic communication activity, and the Repeat controls set the poll rate. Ignore the local variable usage; this is just a test VI.

I'm also enqueuing to reserve and dequeuing to release. This lets me put the identity of whoever reserved the resource into the queue as a single atomic operation, which is the opposite of what I described in my previous post. I figure there's no problem with this (besides the contrast with the check-out/check-in concept), since both operations have Timeout terminals.
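The enqueue-to-reserve variant can be sketched in Python as follows, assuming a `queue.Queue(maxsize=1)` that starts empty; `network_a`, `reserve` and `release` are hypothetical names. Because the element *is* the holder's name, reserving and recording the holder happen in a single `put`. Python's `queue.Queue` has no documented equivalent of Preview Queue Element, so this sketch only checks the holder on release.

```python
import queue

# The queue starts EMPTY; when it holds an element, that element is the
# name of the client that currently has the network reserved.
network_a: "queue.Queue[str]" = queue.Queue(maxsize=1)


def reserve(client: str, timeout_s: float) -> bool:
    try:
        network_a.put(client, timeout=timeout_s)  # blocks while someone holds it
        return True
    except queue.Full:
        return False  # timed out: still held by another client


def release(client: str) -> None:
    holder = network_a.get_nowait()  # raises queue.Empty if not reserved
    if holder != client:
        network_a.put_nowait(holder)  # restore the real holder's reservation
        raise RuntimeError(f"{client} tried to release a reservation held by {holder}")
```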

Where I come unstuck is:

- Setting the values to the defaults is fine, the reserve / release process works ok.

- Setting one device's timing to exceed the reserve timeout of 3000 ms does not cause the other device to time out or abort its attempt to reserve the network. Instead, that call simply blocks (potentially forever), blocking the caller. To demonstrate this, set Time 1 to 4000 ms and note that the second loop (2) blocks in the Reserve Network VI and the Timed Out? 2 output is never set. In this test that's not a problem, but I am anticipating multi-threaded access to this network, hence the sharing idea. This also shows in the "Devices Waiting for Network A" network status variable: it is always 0, whereas in this case I would have expected it to be 1, indicating that Loop 2 is waiting to insert into the queue.
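For reference, here is the behaviour one would expect, sketched in Python with the times scaled down: device 1 holds the network longer than device 2's reserve timeout, so device 2's enqueue should give up after roughly `timeout_s`. The `threading.Timer` stands in for Loop 1 releasing the network; `demo` and its parameters are hypothetical.

```python
import queue
import threading
import time
from typing import Tuple


def demo(hold_s: float = 0.4, timeout_s: float = 0.3) -> Tuple[bool, float]:
    """Device 1 holds the network for hold_s; device 2 tries to reserve it
    with a shorter timeout. Returns (device 2 timed out?, seconds waited)."""
    net: "queue.Queue[str]" = queue.Queue(maxsize=1)
    net.put("device 1")  # device 1 has reserved the network
    releaser = threading.Timer(hold_s, net.get)  # device 1 releases after hold_s
    releaser.start()
    start = time.monotonic()
    try:
        net.put("device 2", timeout=timeout_s)  # device 2: enqueue to reserve
        timed_out = False
    except queue.Full:
        timed_out = True
    waited = time.monotonic() - start
    releaser.join()
    return timed_out, waited
```

With `hold_s` larger than `timeout_s`, device 2 should report a timeout after about `timeout_s` seconds rather than blocking until device 1 releases.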

There's probably a race condition in here somewhere, or a VI call that isn't reentrant, or something like that. Maybe it's something really simple; I feel as if I've been staring at it too long! I'd appreciate any help. I'm really looking to get the Timeout feature working, otherwise I'll just go back to DVRs (perhaps with a Send Receive VI in the abstract class to reduce the burden on concrete classes).

Thanks

EDIT 1: Sorry, forgot to mention. I'm not implementing the actual VISA calls at this stage; I think that's a trivial add-on once the framework is up and going. I'm using a simple activity timer to mimic them.

EDIT 2: Another forgetful moment - I'm using 2012f5. Still waiting for the 2013 DS1 discs.

03-24-2013 04:04 PM - edited 03-24-2013 04:04 PM

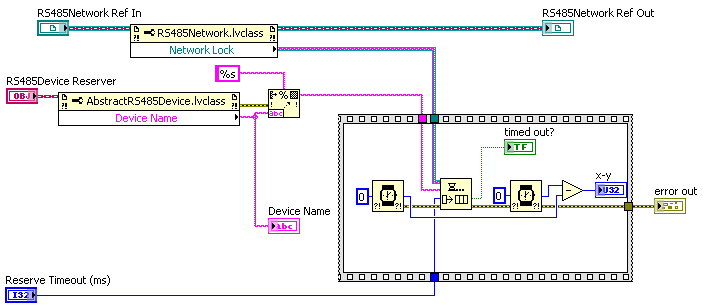

As far as I can tell, this is a bug in LabVIEW. I modified the internal subVI to look like this (and now that I look at it, I see that the Format Into String is redundant):

Basically, it measures how long the enqueue takes to execute. If I set up a long wait on A, then B takes longer to execute than its timeout input (and it doesn't time out, as you pointed out). I'm pretty sure that's never supposed to happen.
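The instrumentation described above amounts to wrapping the enqueue in a stopwatch. A Python sketch, where `timed_enqueue`, `EnqueueResult` and the `slack_s` tolerance for scheduler jitter are assumptions rather than anything from the original VI:

```python
import queue
import time
from typing import NamedTuple


class EnqueueResult(NamedTuple):
    timed_out: bool
    elapsed_s: float
    overran: bool  # True if the call blocked noticeably longer than its timeout


def timed_enqueue(q: queue.Queue, item: object, timeout_s: float,
                  slack_s: float = 0.05) -> EnqueueResult:
    """Time an enqueue that has a timeout. A well-behaved primitive should
    never block much longer than timeout_s, whether or not it succeeds."""
    start = time.monotonic()
    try:
        q.put(item, timeout=timeout_s)
        timed_out = False
    except queue.Full:
        timed_out = True
    elapsed = time.monotonic() - start
    return EnqueueResult(timed_out, elapsed, elapsed > timeout_s + slack_s)
```

If `overran` ever comes back True, the primitive blocked past its timeout, which is the symptom being chased in this thread.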

I'm not sure what the exact combination required for this is, but I would guess it has to do with the timeout on an enqueue in a reentrant VI. It's relatively rare to put a timeout on an enqueue, so that would explain how such a thing could be missed. Certainly your case is different from common SEQs in that you enqueue to lock, where people usually dequeue.

I would suggest you report this to NI in an orderly fashion and they can investigate it further.

03-24-2013 06:31 PM - edited 03-24-2013 06:43 PM

Thanks for looking into this tst!

Replying to @tst:

I agree, the enqueue-then-dequeue method of obtaining sequential access is different from normal; I'm abusing it to get that atomic operation for supplying additional network information. Interestingly, this example VI does work as expected (see attachment): the timeout functions correctly here, which indicates some other issue is at work...

I'll talk to our local NI support and get them to dig into this. I'll post back here with any information that I find out or a CAR reference. Thanks again for your help.

03-26-2013 03:23 PM

CAR 305402 notes the existence of a queue starvation issue when you have multiple concurrent writers.

03-26-2013 03:39 PM

Replying to @AristosQueue (NI):

Hm...sounds like AQ put a bug in LabVIEW