Python Dictionary ported to LabVIEW

04-03-2018 06:20 AM

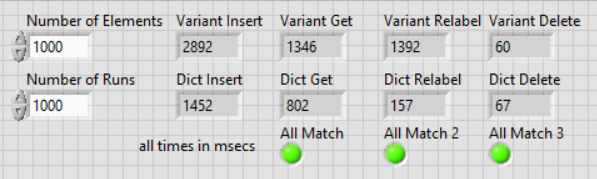

@crabstew wrote: On average, inserts and gets are much faster than variant attributes, relabels are orders of magnitude faster, and deletes are a tad slower.

Try it out and see if you can squeeze any more performance out of it. I'm not super familiar with the intricacies of the LabVIEW compiler so I'm sure there is something I've overlooked performance-wise but it was pretty fun nonetheless and I'm quite happy with the results.

Your first timing includes the time to create the arrays; if you wire the created array through the first frame, the timing changes considerably.
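As a rough illustration of that point in text form (a Python sketch, not the LabVIEW test VI; `make_keys` and `time_inserts` are hypothetical names), the input data is built before the timer starts, so only the inserts are measured:

```python
import random
import string
import time


def make_keys(n, length=8, seed=0):
    """Build n random string keys (hypothetical helper; duplicates are possible)."""
    rng = random.Random(seed)
    return ["".join(rng.choices(string.ascii_lowercase, k=length)) for _ in range(n)]


def time_inserts(keys):
    """Time only the inserts; the keys already exist when the timer starts."""
    table = {}
    start = time.perf_counter()
    for i, key in enumerate(keys):
        table[key] = i
    elapsed = time.perf_counter() - start
    return elapsed, table


keys = make_keys(10_000)  # setup, deliberately outside the timed region
elapsed, table = time_inserts(keys)
print(f"{len(keys)} inserts took {elapsed * 1e3:.3f} ms")
```

If `make_keys` were called inside the timed region instead, the reported number would mix data generation with the operation under test.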

Also, it seems variants stored as variant attributes are slow. If you set the integers as the data instead of the converted variant (fix the wire to the first frame), this is what happens:

The relabel is much slower on the variant attribute, but the other operations are noticeably faster!

/Y

04-03-2018 06:25 AM

04-03-2018 09:18 AM

What LabVIEW version are you running, Yamaeda?

I ran your corrected test in LabVIEW 2017, both in the IDE and in a fully optimized application (no debugging enabled in the build VIs, and optimization set to max), and the gets are still much quicker than in the variant-based solution. Inserts are slightly slower. Another thing to test is updates of existing keys; there the hash table beats the variant by a huge margin, especially on Linux RT targets.

One nice thing you can do with the hash map is avoid variants altogether (if you only need a single data type, for example), which moves the performance to a whole new level.

04-03-2018 12:32 PM

I also ran 2017, but didn't compile it. Those variant attributes are pretty well optimized. You can 'hash' variant attributes so you don't have everything in one variant; that should be interesting when the lists start to grow (>10k?).
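One way to read the 'hash' idea is to spread the keys over several smaller containers, picking the container from a hash of the key. The Python sketch below only illustrates that reading; `ShardedDict`, the shard count of 16, and the use of CRC32 are assumptions, not anything taken from the LabVIEW code:

```python
import zlib


class ShardedDict:
    """Spread string keys across several dicts, chosen by a hash of the key."""

    def __init__(self, shard_count=16):
        self._shards = [dict() for _ in range(shard_count)]

    def _shard_for(self, key):
        # CRC32 is used here only as a cheap, stable hash for string keys.
        index = zlib.crc32(key.encode("utf-8")) % len(self._shards)
        return self._shards[index]

    def set(self, key, value):
        self._shard_for(key)[key] = value

    def get(self, key, default=None):
        return self._shard_for(key).get(key, default)

    def delete(self, key):
        """Remove key if present; return True when something was removed."""
        shard = self._shard_for(key)
        if key in shard:
            del shard[key]
            return True
        return False

    def __len__(self):
        return sum(len(shard) for shard in self._shards)


store = ShardedDict()
store.set("alpha", 1.0)
print(store.get("alpha"), len(store))
```

Whether sharding pays off depends on how the underlying container scales with size, so it would have to be measured rather than assumed.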

/Y

04-03-2018 01:21 PM

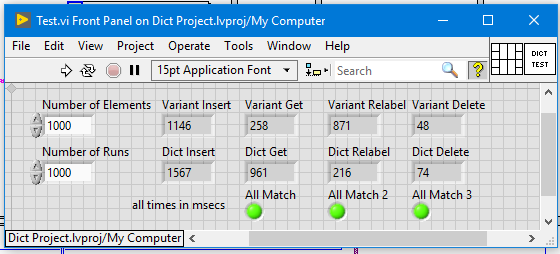

Just tested at home, on a better computer than the one at work. Parallelized all loops (except the comparison ones, since they couldn't be). This is a comparison between the IDE and compiled results.

/Y

04-03-2018 04:16 PM

Can you post your test.vi?

04-04-2018 02:49 AM

Not right now. I went on to try to 'hash' the variant by using an array of variants instead of one; that didn't turn out well. 🙂

/Y

04-04-2018 03:07 AM

I found a copy. I noticed a strange effect of variants in the Get Library case, which I've worked around and commented in the code.

/Y

04-04-2018 06:48 AM - edited 04-04-2018 06:54 AM

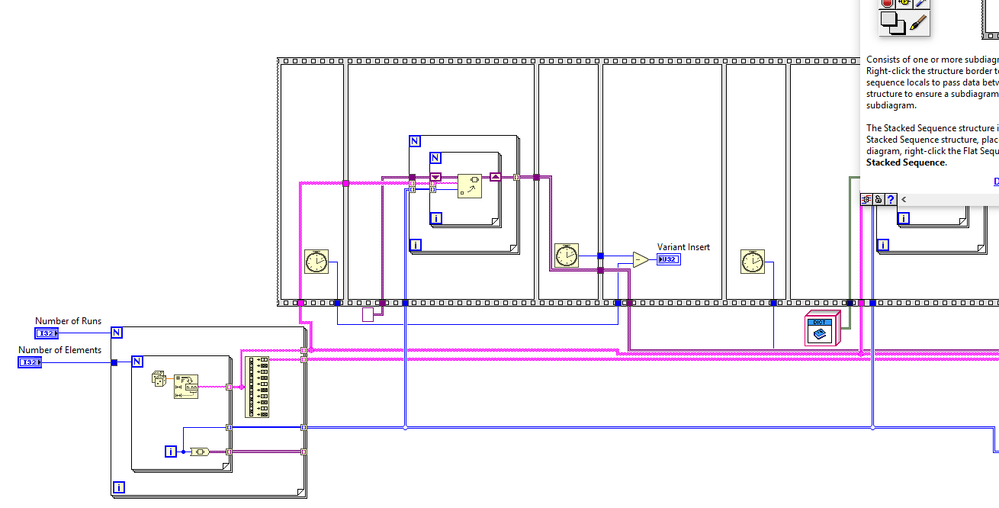

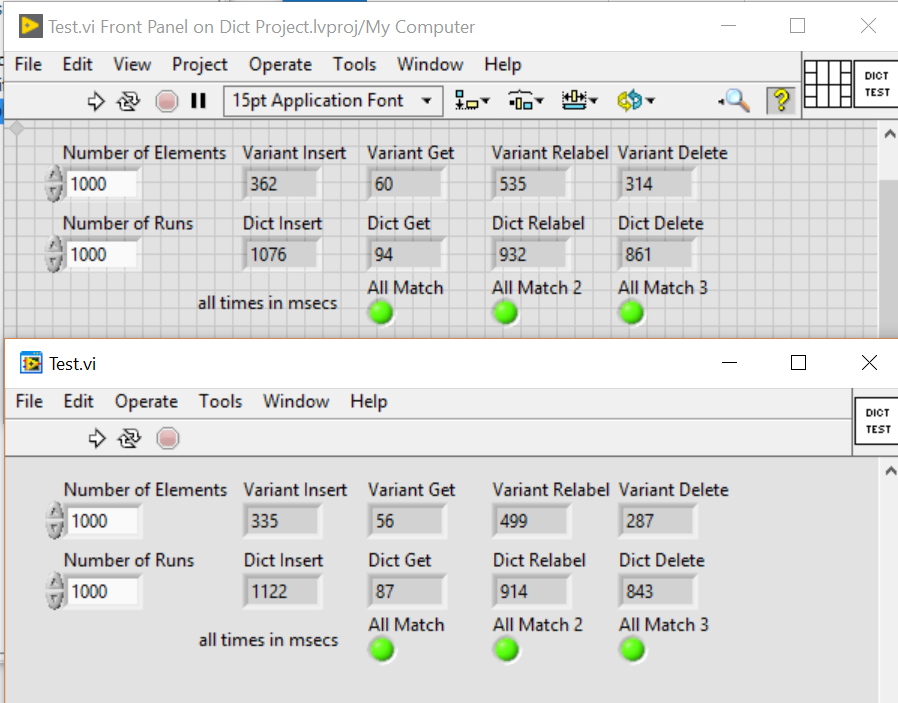

Looking closer at the test code (both the original and the modified), I think there is a lot to improve. For example, building arrays of the variant (and dictionary references) for each run, instead of operating on the same one throughout the run, seems a bit odd to me. The key names are not guaranteed to be unique either; I typically get half a percent collisions (50 per 10,000 keys). The modified version introduces another variable: parallelism. I would say that adds a somewhat irrelevant complexity (unless, of course, you would access it like that in the actual application), when all we really want from the repeated runs is to average out noise in the numbers.
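To make the duplicate-key issue concrete, here is a small Python sketch (not the LabVIEW test code). It counts duplicates among independently drawn random keys and shows one way to draw keys without repeats. The key-space size of 1,000,000 is an assumption, picked because drawing n keys from N possibilities gives roughly n²/(2N) duplicates, which is about 50 for n = 10,000; the helper names are hypothetical.

```python
import random


def count_duplicate_keys(keys):
    """Number of keys that repeat an earlier key in the list."""
    return len(keys) - len(set(keys))


def unique_random_keys(n, key_space, seed=0):
    """Draw n distinct keys by sampling the key space without replacement."""
    rng = random.Random(seed)
    return [f"key{value}" for value in rng.sample(range(key_space), n)]


KEY_SPACE = 1_000_000  # assumed size, not taken from the test VI
N_KEYS = 10_000

rng = random.Random(1)
naive = [f"key{rng.randrange(KEY_SPACE)}" for _ in range(N_KEYS)]
unique = unique_random_keys(N_KEYS, KEY_SPACE)

print("duplicates with independent draws:", count_duplicate_keys(naive))
print("duplicates with sampling without replacement:", count_duplicate_keys(unique))
```

With duplicates in the key list, some of the timed "inserts" are really updates of existing keys, which blurs the per-operation numbers.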

After rebuilding the test code to repeat the whole train of operations on each run, working on a single variant/dictionary ref, the results are more in line with the good results I got when using the hash map in a realistic scenario (based on storing doubles only) on a slower target (cRIO-9030). I find the hash table to be 2 times faster than the variant on writes on my PC, but 3 times faster on a cRIO-9030 (Linux RT). The inserts and reads are pretty much identical on the PC, but reads are 30% faster on the Linux RT target. The hash table is extremely sluggish when it comes to deletes though, as it is right now.
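The structure of such a test can be sketched in Python as follows. This is an analogy for the benchmark layout only, using a plain `dict` of floats as a stand-in; it is not the rebuilt VI, and `run_once` and `benchmark` are hypothetical names. Each run performs the full sequence of operations on the same table, and the per-operation times are averaged across runs:

```python
import statistics
import time


def run_once(table, keys):
    """Run insert, update, get and delete once on the same table; return seconds per phase."""
    timings = {}

    start = time.perf_counter()
    for i, key in enumerate(keys):
        table[key] = float(i)
    timings["insert"] = time.perf_counter() - start

    start = time.perf_counter()
    for key in keys:
        table[key] = -1.0  # overwrite existing keys
    timings["update"] = time.perf_counter() - start

    start = time.perf_counter()
    for key in keys:
        _ = table[key]
    timings["get"] = time.perf_counter() - start

    start = time.perf_counter()
    for key in keys:
        del table[key]  # leaves the table empty for the next run
    timings["delete"] = time.perf_counter() - start

    return timings


def benchmark(keys, runs=20):
    """Repeat the whole sequence on one table and average each phase over the runs."""
    table = {}
    samples = [run_once(table, keys) for _ in range(runs)]
    return {op: statistics.mean(sample[op] for sample in samples) for op in samples[0]}


keys = [f"key{i}" for i in range(10_000)]  # unique by construction
for op, seconds in benchmark(keys).items():
    print(f"{op:>6}: {seconds * 1e3:.3f} ms")
```

Keeping one table alive across runs means the repeats only average out noise, instead of also measuring container creation on every run.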

04-04-2018 07:29 AM

The point of the parallelism test is to see how fast it can go and how well it scales (it seems variants scale slightly better). I'd say that's interesting for real-world implementations, but first and foremost you should optimize the individual parts.

/Y
