Preallocated Reentrant VI within Parallelized For Loop

Solved! 01-30-2015 07:31 AM

@altenbach wrote:

@Kevin_Price wrote:

No combination of auto-indexing, or indexing by iteration # or parallel instance # seemed to preserve correspondence between a given station array index and a specific reentrant instance of my processing vi.

You can configure the parallel For loop to output the parallel instance. Right-click the P terminal to change it, then wire it to a case structure.

Yeah, I did config the P terminal that way, but still no joy. I'm *hoping* this is still just me being thick-headed though b/c I'd *like* there to be a more straightforward solution. Let me describe in more detail:

1. I have a few banks of test stations, multiple stations per bank.

2. My data structures involve an array of "station data containers". Data inside each station's container includes arrays of measured data for the most recent interval.

3. Each station is streaming its data in packets at pretty regular intervals. Data processing is performed in pseudo real time, one packet at a time.

4. Data processing includes the need for functions that must retain state between subsequent calls in order to treat consecutive packets as a continuous data stream (integrators, debounce, etc.) Filters are another excellent example of this kind of need.

5. What I *want* to do is feed my array of station data into a For loop. I want to place a reentrant data processing function inside that loop. And I want there to be a distinct *instance* of that reentrant, state-preserving processing function for each *iteration* of the For loop. Further (and I think this is where my problem is), I need to know that a given "instance #" (or "iteration #") of the For loop will always correspond to the *same* reentrant function instance.

6. I tried setting the For loop up for parallelization by wiring in the array size to both the N and P terminals.

7. It *did* appear to result in N distinct instances of the processing function. (Note: at the time I was running with N=8 on a system with 8 apparent cores -- 4 real and 4 virtual. Even if things had worked out for that case, my approach would also need to work when # stations is larger than the # apparent cores.)

8. Regardless of whether I used For loop auto-indexing, or explicit indexing with either the 'P' instance # or the 'i' iteration #, a specific iteration # didn't always call the same reentrant instance. Occasionally, a couple of them would seem to exchange places so that iteration 3 seemed to act on index 4's data and vice versa. I observed this by seeing brief glitches in 2 channels at a time where they seemed to swap each other's data then return to their normal trend. A while later, another pair would swap.
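For readers who think better in text code, the requirement in items 4 through 8 can be sketched in Python. This is only an analogy, not LabVIEW: `Integrator` and `process_all` are hypothetical names standing in for the state-retaining reentrant VI and the For loop. The point is that state is looked up by station index, so a given index always reaches the same instance.

```python
class Integrator:
    """Hypothetical stand-in for one state-preserving processing instance."""

    def __init__(self):
        # State retained between calls, like an uninitialized shift register.
        self.total = 0.0

    def process(self, packet):
        # Treat consecutive packets as one continuous data stream.
        self.total += sum(packet)
        return self.total


def process_all(instances, packets):
    # Station i always uses instances[i], whatever the execution order.
    return [inst.process(pkt) for inst, pkt in zip(instances, packets)]


stations = [Integrator() for _ in range(3)]  # one instance per station
first = process_all(stations, [[1, 2], [10], [100, 100]])
second = process_all(stations, [[3], [10, 10], [0]])
print(first)   # [3.0, 10.0, 200.0]
print(second)  # [6.0, 30.0, 200.0]
```

The second call continues each station's running total from the first, which is the continuity that a swapped instance would break.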

At the time, I tried making a little test program to poke & prod at parallelized for loop behavior. I got just far enough with it to decide not to pursue use of the parallelization feature. I'll dig it up, clean it up, and post it in hopes that I did a bad test or formed a wrong conclusion.

-Kevin P

01-30-2015 08:46 AM

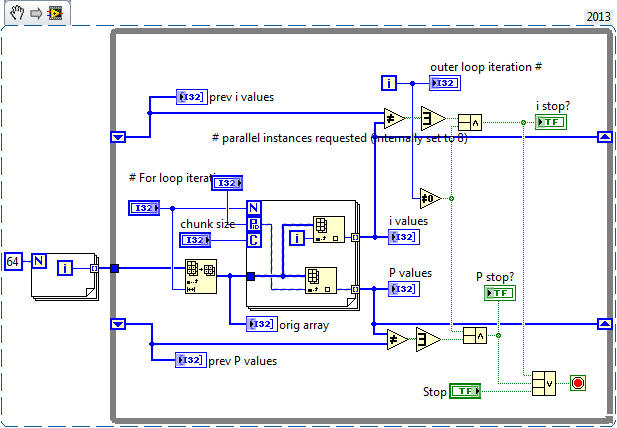

Ok, here goes. This doesn't directly address the issue of reentrant instances, it simply illustrates that the parallel instance # P in a parallelized For loop acts in ways I have no explanation for. The anomaly is somewhat consistent with my observations when trying to call specific reentrant instances -- occasional glitches where the "wrong" reentrant instance gets called. Here, the occasional glitch is that indexing an unchanging array with the parallel instance # P doesn't always index to the same place. Perhaps more importantly, it *never* seems to index to the expected / correct place. Compare to the result using the iteration terminal i for indexing.
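One possible way to picture this is a toy scheduling model in Python. It is an assumption made for illustration and says nothing definite about how LabVIEW actually partitions iterations: each iteration simply goes to whichever worker frees up first. Here the worker ID plays the role of the parallel instance # P and the list position plays the role of the iteration terminal i; `assign_workers` is a hypothetical helper.

```python
import heapq


def assign_workers(durations, num_workers):
    """Return the worker ID that runs each iteration when every iteration
    goes to the worker that becomes free first (ties go to the lowest ID)."""
    free_at = [(0, w) for w in range(num_workers)]  # (time free, worker ID)
    heapq.heapify(free_at)
    owners = []
    for duration in durations:
        time_free, worker = heapq.heappop(free_at)
        owners.append(worker)
        heapq.heappush(free_at, (time_free + duration, worker))
    return owners


# Same loop, two runs that differ only in how long each iteration takes.
run_a = assign_workers([3, 1, 1, 1], num_workers=2)
run_b = assign_workers([1, 3, 1, 1], num_workers=2)
print(run_a)  # [0, 1, 1, 1]
print(run_b)  # [0, 1, 0, 0]
```

In this model, iterations 2 and 3 land on different workers from one run to the next, so a worker ID is not a stable key for per-channel data, while the iteration index is.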

Snippet and screencap from a run below. Sorry, multiline labels got reformatted by snippet-ing.

-Kevin P

02-02-2015 09:22 AM

*bump*, just this once to try for some post-weekend attention...

-Kevin P

02-02-2015 10:11 AM

Can't you change the subVI / AE to use DVRs? The Init case creates a DVR, and the following iterations can work on those DVRs. Guaranteed unique data area.
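One reading of this suggestion, sketched as a Python analogy rather than LabVIEW code: the processing function holds no state of its own, and each station's state lives behind its own reference. `StateRef` and `integrate` are hypothetical names, and the lock is only an assumed stand-in for the serialized access a DVR provides.

```python
import threading
from concurrent.futures import ThreadPoolExecutor


class StateRef:
    """Hypothetical stand-in for a DVR: one private data area plus a lock."""

    def __init__(self):
        self.lock = threading.Lock()
        self.total = 0.0


def integrate(ref, packet):
    # The function keeps no state; everything lives behind the reference.
    with ref.lock:
        ref.total += sum(packet)
        return ref.total


refs = [StateRef() for _ in range(4)]  # "Init": one reference per station
packets = [[i, i] for i in range(4)]   # station i receives [i, i] each round

with ThreadPoolExecutor(max_workers=2) as pool:
    for _ in range(3):  # three rounds of packets
        results = list(pool.map(integrate, refs, packets))

print(results)  # [0.0, 6.0, 12.0, 18.0]
```

Whichever thread runs a given call, station i only ever touches `refs[i]`, so the totals do not depend on scheduling.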

/Y

02-02-2015 10:52 AM

With preallocated clones you get 1 clone for each instance of the VI on the block diagram. Your examples prove this is in fact the behavior, since you have 4 instances (one for each case) in the lower loop and 1 in the upper loop.

It does seem strange in a parallel loop, but we really don't know how many parallel threads there will be (we can only request a maximum).

"Should be" isn't "Is" -Jay

02-02-2015 03:13 PM

Yamaeda: I don't use DVR's a lot so maybe you mean something different than I'm picturing, but I think DVR's would solve a different kind of problem than the one I'm having.

Jeff: thanks for the confirmation. Looks like For loop parallelization isn't gonna be (and in fairness, never *claimed* to be) the magic bullet that solves this. I've gotta say, though, that it still "feels like" there should be a more elegant solution than what I'm doing with VI Server.

What's the better way that I'm missing? What do others do when they have a size-N array of waveforms and they want to calculate, say, a cumulative integration on each waveform individually? I *want* there to be a way to auto-index the array and then have each loop iteration call a unique instance of a common integration routine. (The need for unique instances is that the processing function, here illustrated as integration, must maintain state separately for each of the N waveforms.)

-Kevin P

02-02-2015 03:43 PM - edited 02-02-2015 03:54 PM

OH! Why didn't you say so? THAT'S EASY!

Open an array of strictly typed refs to the VI, with the flags set to 0xC0 (? I'll try to confirm the flag), INSIDE a loop. The array of refs coming out points to specific clone instances. Preallocate your clone pool and wham-bam. Launch your fleet of clones in a parallelized For loop. Even have fun passing in an index to see which clones complete first (or just queue out a clone instance name).

[EDIT: Found the thing] I've got an example lying around here that I shared a bit ago. Try a search for preallocated clone pool.

"Should be" isn't "Is" -Jay

02-03-2015 08:06 AM

Well, yeah, that's pretty much what I settled on doing -- opening multiple clone instances of the processing VI with VI Server refs, then using Call by Ref to execute the distinct instances. I guess I just don't share your opinion that this requirement to open, store, and later close all the VI refs constitutes "Easy". To me it seems like a pain in the neck when I can end up with more code tied up in managing all the VI refs than I had in the original processing algorithm.

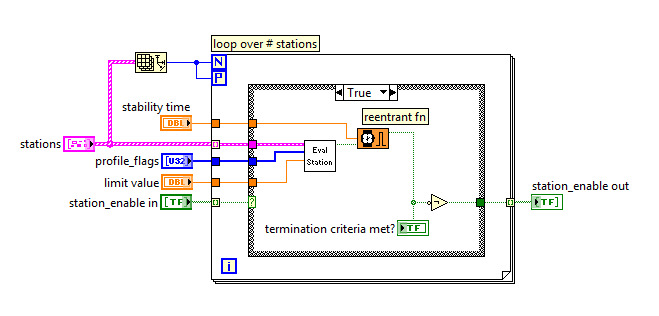

To illustrate, here's what I'd *like* to be able to do, make a direct vi call with some way to specify that I'd like to use a unique reentrant instance of my function for each *iteration* of the For loop:

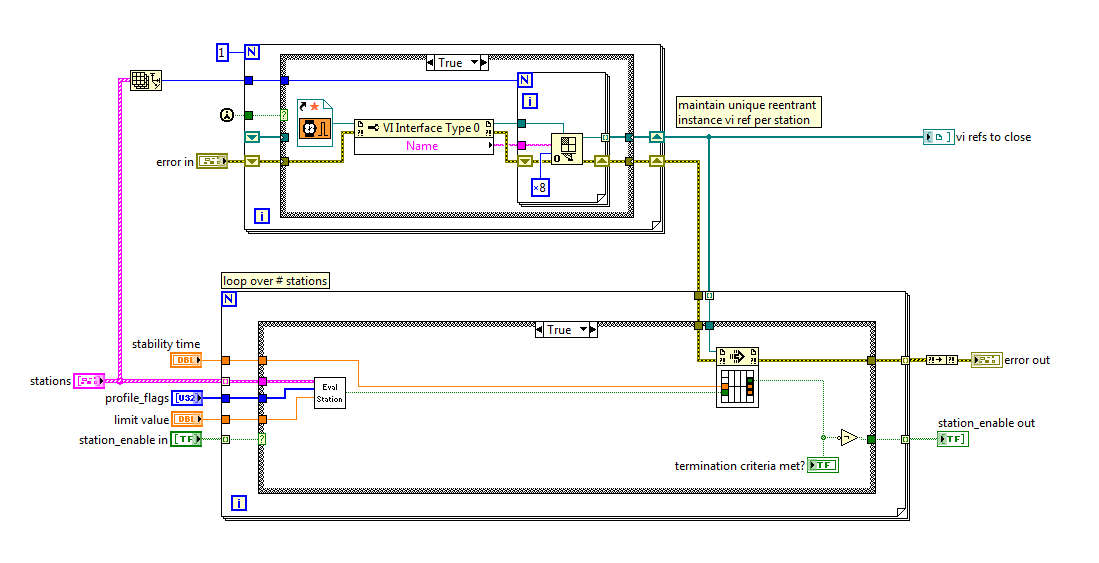

However, what follows below is what I feel *stuck* with. I'll live with it, b/c what choice do I have? But the handling of the VI refs feels like clunky overhead.

-Kevin P

02-03-2015 12:13 PM - edited 02-03-2015 12:16 PM

The benefit is in being able to use the preallocate clones method to create the dataspaces during initialization, rather than pay the time penalty of creating dataspaces on the fly when you try to check out a clone and find the pool has no unused clones in it. Plus you gain some additional info in the caller, since you have VI Server refs to each clone instance. But be warned: properties and methods that you wire those refs to act on the parent VI, not the clone instance! (That explains why the refs compare as equal despite having unique values.)

And your flag 0x08 is not correct. Set 0x140 for simultaneous call and collect, or 0xC0 for simultaneous fire-n-forget. Setting 0x08 and opening refs in a loop just gets multiple refs to the reentrant original. You don't want that at all!
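As a loose text-language analogy for those two calling styles (Python's `concurrent.futures`, not LabVIEW; `process` is a hypothetical function): "call and collect" starts every call and later waits for each result, while "fire and forget" starts a call and never asks for its result.

```python
from concurrent.futures import ThreadPoolExecutor


def process(station, packet):
    # Placeholder processing: sum the packet for this station.
    return station, sum(packet)


packets = {0: [1, 2], 1: [3, 4], 2: [5, 6]}

with ThreadPoolExecutor(max_workers=3) as pool:
    # "Call and collect": start all calls, then wait on each one in turn.
    futures = [pool.submit(process, s, p) for s, p in packets.items()]
    collected = [f.result() for f in futures]

    # "Fire and forget": start the call and ignore whatever it returns.
    pool.submit(process, 0, [0])

print(collected)  # [(0, 3), (1, 7), (2, 11)]
```

Results come back in the order the calls were started, because each future is waited on by position rather than by completion time.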

The link I pointed out earlier contains several other sources. This was the first. Read (2) and (5) first (the circled numbered bullets under the "What to Do" heading).

"Should be" isn't "Is" -Jay

02-03-2015 03:46 PM

RE: the 0x08 flag

I wanna go through this carefully to make sure I understand. I chose 0x08 because I'm doing a regular old "Call by Reference". The function in question is a simple data processor, get in get out fast kind of thing. It's *not* a free-running loop I'm looking to launch with an explicit *asynchronous* designation. I thought those explicit Async Call by Ref functions were primarily useful for vi's with a longer or unpredictable execution time, while the plain old "Call by Ref" was fine for fast bounded-time calls to functions with wired inputs and outputs.

I'm not convinced yet that the 0x08 flag is incorrect. The code screenshot I posted does in fact show every sign of opening distinct instances with distinct internal dataspaces. The reentrant vi contains USR's to retain state between calls. If this dataspace were being shared among several stations, it would make pretty obvious discontinuities in the calculated results.

The online help suggests that I'd only designate the 0x40 bits when also OR'ing them with either the 0x80 or 0x100 bits, both referring to different ways to prepare for explicit "async" calls. It also states that both 0x80 and 0x100 are incompatible with 0x08.

I think my only choices would be either 0x08 or 0x140 since I need to receive the output values. 0x08 seems to work fine with the code I showed, 0x140 would require separate calls to "Start Async Call..." and "Wait on Async Call..." as illustrated in your recent link. Of the two, the code I showed with the 0x08 flag and the standard "Call by Ref" seems simpler.
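The flag arithmetic in this exchange can be spelled out in a short Python sketch. The hex values and the two compatibility rules are taken from the posts above; the member names and the `is_consistent` helper are labels made up for illustration and are not an NI API.

```python
from enum import IntFlag


class OpenVIRefOption(IntFlag):
    # Names are hypothetical labels; values are the ones discussed in the thread.
    REENTRANT_RUN = 0x08
    SIMULTANEOUS_CALLS = 0x40
    CALL_AND_FORGET = 0x80
    CALL_AND_COLLECT = 0x100


def is_consistent(flags):
    """Apply the two rules quoted from the online help in the post above."""
    async_bits = OpenVIRefOption.CALL_AND_FORGET | OpenVIRefOption.CALL_AND_COLLECT
    has_async = bool(flags & async_bits)
    if flags & OpenVIRefOption.REENTRANT_RUN and has_async:
        return False  # 0x08 is incompatible with 0x80 and 0x100
    if flags & OpenVIRefOption.SIMULTANEOUS_CALLS and not has_async:
        return False  # 0x40 is only OR'ed with 0x80 or 0x100
    return True


fire_and_forget = OpenVIRefOption.SIMULTANEOUS_CALLS | OpenVIRefOption.CALL_AND_FORGET
call_and_collect = OpenVIRefOption.SIMULTANEOUS_CALLS | OpenVIRefOption.CALL_AND_COLLECT
print(hex(fire_and_forget), hex(call_and_collect))  # 0xc0 0x140
print(is_consistent(OpenVIRefOption.REENTRANT_RUN))  # True
print(is_consistent(OpenVIRefOption.REENTRANT_RUN | OpenVIRefOption.CALL_AND_COLLECT))  # False
```

So 0xC0 is 0x40 OR'ed with 0x80, 0x140 is 0x40 OR'ed with 0x100, and 0x08 stands alone under the rules as quoted.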

What I don't know for sure is whether my 0x08 & "Call by Ref" approach supports true parallelism where distinct instances can run on distinct cores in a truly simultaneous way. That wasn't what I was mainly looking for though. My attempt & desire to use For loop parallelism was more about economy of syntax than about a need for true multi-core parallel execution.

Thanks for sticking with this...

-Kevin P