Parsing binary data

09-22-2016 04:06 PM

Hello!

I have an ADC that sends data in a binary format like this:

1st byte: (resVal & 0x1F) | 0xA0

2nd byte: (resVal >> 5) & 0x1F

resVal is a 10-bit ADC value. The first byte carries the low five bits of resVal prefixed with 101, and the second byte carries the high five bits prefixed with 000. (I can change this part if there is a better way to send U16 data; it was just the first scheme that came to mind.)
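To test the LabVIEW side without hardware, it can help to reproduce the framing in a few lines of host code. The Python sketch below assumes exactly the two expressions above; `encode_sample` is a hypothetical name, not part of any existing library.

```python
def encode_sample(res_val: int) -> bytes:
    """Pack a 10-bit ADC value into the two-byte frame described above."""
    if not 0 <= res_val <= 0x3FF:
        raise ValueError("res_val must fit in 10 bits (0..1023)")
    first = (res_val & 0x1F) | 0xA0  # 101AAAAA: low five bits plus the 101 marker
    second = (res_val >> 5) & 0x1F   # 000BBBBB: high five bits plus the 000 marker
    return bytes([first, second])


print(encode_sample(37).hex())  # a501
```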

So I need to read this in LV through VISA, process it (graph it, calculate things like frequency and amplitude, etc.) and save it to a file.

I'm a newbie in LV, so my VI isn't great. It works at low speed, but at baud rates of 500k and above the program slows down and almost all of the data is lost.

So I don't know how to do it right, but I think there are at least two things to improve: a better way to convert the 10-bit number to binary data and the binary data back to numbers, and a way to optimize the saving process.

Sorry if these are newbie questions.

Thx.

09-22-2016 04:34 PM

It sounds like you have a very old-fashioned device that sends byte data through a VISA port (generally meant for text, not binary, streams). These are a bit tricky to program (I know, I've done it). When sending a byte stream, is there an indication of which is the "first" byte?

For example, one instrument I used sent 14-bit quantities over a serial line. Bit 7 was reserved for "Start of stream of 12 bytes", which were sent low-byte first. The second byte was therefore the high byte, with bit 7 necessarily cleared (otherwise it would become "Start of stream") and bit 6 becoming the "sign" bit. Needless to say, putting these bytes together to yield 6 signed "14-bit" integers (which we saved as 16-bit signed integers) was a minor challenge.

Bob Schor

09-22-2016 04:55 PM

Yes. If I use only one channel, the sequence is just 2 bytes: the first byte is 101AAAAA (U8 >= 160) and the second byte is 000BBBBB (U8 <= 31). I need to combine them into the 10-bit number BBBBBAAAAA.
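In Python terms, the combination described above is a mask of each byte with 0x1F (31) followed by a left shift of the high part. This is a minimal sketch; `decode_pair` is a hypothetical helper, and the pattern check assumes the 101/000 markers from the original post.

```python
def decode_pair(first: int, second: int) -> int:
    """Combine 101AAAAA and 000BBBBB into the 10-bit value BBBBBAAAAA."""
    if (first & 0xE0) != 0xA0 or (second & 0xE0) != 0x00:
        raise ValueError("bytes do not match the 101xxxxx / 000xxxxx pattern")
    low = first & 0x1F    # AAAAA
    high = second & 0x1F  # BBBBB
    return (high << 5) | low


print(decode_pair(0xA5, 0x01))  # 37
```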

09-22-2016 06:31 PM - edited 09-22-2016 06:31 PM

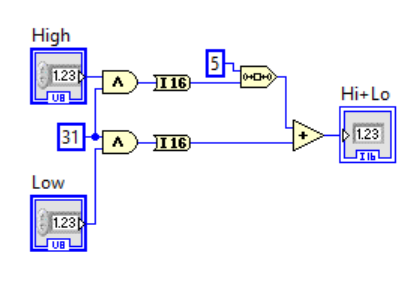

Masking, bit shift, join, bit shift
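The screenshot is not reproduced here, so the following is only one plausible reading of the four steps, written in Python: mask the first byte to AAAAA and shift it up by 3, join it with the second byte as the high byte of a U16, then shift the result down by 3. `join_numbers` is a hypothetical stand-in that imitates LabVIEW's Join Numbers for two U8 inputs.

```python
def join_numbers(hi: int, lo: int) -> int:
    """Imitate Join Numbers for two U8 values: returns (hi << 8) | lo as a U16."""
    return ((hi & 0xFF) << 8) | (lo & 0xFF)


def decode_mask_shift_join_shift(first: int, second: int) -> int:
    low = (first & 0x1F) << 3         # mask to AAAAA, then shift up: AAAAA000
    high = second & 0x1F              # mask to 000BBBBB
    joined = join_numbers(high, low)  # 000BBBBB AAAAA000 as one U16
    return joined >> 3                # shift down: BBBBBAAAAA


print(decode_mask_shift_join_shift(0xBF, 0x1F))  # 1023
```

Shifting the low part up by 3 before the join lines its five bits up directly under the high part, so a single right shift afterwards produces the packed 10-bit value.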

09-22-2016 08:04 PM

Note that you have to make sure you are "in synch" with the Stream, so be prepared to "throw away" bytes until you come to the one that has "101" as the high three bits (I say this based on what you've described about the stream).
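A minimal resynchronizing parser, sketched in Python under the assumption that the stream contains only the 101xxxxx / 000xxxxx pairs described in this thread. Any byte that does not fit the expected position is discarded, and decoding restarts at the next header byte. `parse_stream` is a hypothetical name.

```python
from typing import Iterable, List, Optional


def parse_stream(data: Iterable[int]) -> List[int]:
    """Decode 10-bit samples, discarding bytes until a 101xxxxx header appears."""
    samples: List[int] = []
    header: Optional[int] = None  # low five bits of a pending header byte, if any
    for b in data:
        if (b & 0xE0) == 0xA0:            # 101xxxxx: start of a new pair
            header = b & 0x1F             # a newer header replaces an unpaired one
        elif (b & 0xE0) == 0x00 and header is not None:
            samples.append(((b & 0x1F) << 5) | header)
            header = None                 # pair complete, wait for the next header
        else:
            header = None                 # unexpected byte: drop it and resync
    return samples


# The leading 0x01 arrives mid-pair and is thrown away.
print(parse_stream(bytes([0x01, 0xA5, 0x01, 0xBF, 0x1F])))  # [37, 1023]
```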

Bob Schor

P.S. -- did you understand how RavensFan's algorithm works?

09-27-2016 02:47 AM - edited 09-27-2016 02:53 AM

Yes, thanks, I understand it. But my VI already uses mask = 31 = 00011111. Simplified, my VI looks like this:

Maybe the type conversion is a little bit slower, but I don't think that's the main problem. Am I right? Any ideas how I can speed up the saving process? Or any other improvements to how I parse the binary string? Can I use arrays to speed something up?

Thx.

P.S. And yes, you're right: I'm looking for "101" as the high three bits; I just compare the byte with 150 (any number between 31 and 160 would do). Since 10100000 = 160, every byte with 101 in the high three bits is 160 or more, and since 00011111 = 31, every byte with 000 in the high three bits is 31 or less.
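Comparing against 150 works because valid header bytes lie in 160..191 and valid data bytes in 0..31. A mask test on the high three bits gives the same answer for those bytes, but it also rejects corrupted bytes that match neither pattern. A small Python sketch of the difference (both function names are hypothetical):

```python
def is_header_threshold(b: int, threshold: int = 150) -> bool:
    return b > threshold        # the comparison described above


def is_header_mask(b: int) -> bool:
    return (b & 0xE0) == 0xA0   # explicit check for 101 in bits 7..5


# On well-formed bytes the two tests agree...
valid = [0xA0 | v for v in range(32)] + list(range(32))
assert all(is_header_threshold(b) == is_header_mask(b) for b in valid)

# ...but only the mask test rejects a byte such as 0xC0 (110xxxxx).
print(is_header_threshold(0xC0), is_header_mask(0xC0))  # True False
```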

09-27-2016 05:43 AM

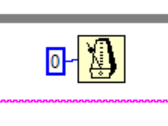

OMG! I found the solution! I couldn't understand why my loop could process 20,000 numbers/s but not 40,000 numbers from the string; that's not that much. I tried building a string buffer, splitting it and then type-casting, and I tried making an array of bytes and then splitting it. And the problem was so simple:

I just deleted this piece from the loop, and now it's as fast as I want.

But what is the right way to parse my string? Should I use a string buffer or a U8 array buffer, and type-cast 1-character strings or 1-element U8 arrays? Or is there another good way?
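One way to read the "U8 array" option, sketched in Python: convert each read to a byte array once (the LabVIEW analog would be String To Byte Array), index it directly instead of splitting it into 1-character strings, and carry an unpaired trailing byte over to the next read so that a pair split across two reads is not lost. `PairDecoder` is a hypothetical class, and skipping one byte at a time to resync is an assumption about how errors should be handled.

```python
from typing import List


class PairDecoder:
    """Decode each read as a whole byte array, carrying any unpaired byte over."""

    def __init__(self) -> None:
        self.pending = b""  # leftover bytes from the previous read (0 or 1)

    def feed(self, chunk: bytes) -> List[int]:
        buf = self.pending + chunk
        out: List[int] = []
        i = 0
        while i + 1 < len(buf):
            first, second = buf[i], buf[i + 1]
            if (first & 0xE0) == 0xA0 and (second & 0xE0) == 0x00:
                out.append(((second & 0x1F) << 5) | (first & 0x1F))
                i += 2  # consumed a full pair
            else:
                i += 1  # out of sync: skip one byte and try again
        self.pending = buf[i:]  # keep a trailing half-pair for the next read
        return out


d = PairDecoder()
print(d.feed(bytes([0xA5, 0x01, 0xBF])))  # [37]; 0xBF is held back
print(d.feed(bytes([0x1F])))              # [1023]
```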

09-27-2016 04:33 PM

I would consider separating the file processing from your data acquisition loop. Disk activity can significantly impact your performance. Also, the Wait (0 ms) should not have had that big an effect on your performance. Be aware that without the Wait you could starve other tasks of CPU cycles.
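In LabVIEW this separation is usually done with the producer/consumer pattern and a queue. The same idea, sketched in Python with a thread and `queue.Queue`: the chunk list stands in for VISA reads, and `acquire`, `log_to_file` and `run` are hypothetical names. The acquisition side only enqueues data, and a separate consumer does the file writes.

```python
import io
import queue
import threading
from typing import BinaryIO, Iterable, Optional


def acquire(q: "queue.Queue[Optional[bytes]]", reads: Iterable[bytes]) -> None:
    """Producer: stands in for the VISA read loop. It only reads and enqueues."""
    for chunk in reads:  # hypothetical source; a real loop would read the port here
        q.put(chunk)
    q.put(None)          # sentinel telling the consumer to stop


def log_to_file(q: "queue.Queue[Optional[bytes]]", sink: BinaryIO) -> None:
    """Consumer: performs the slow disk writes outside the acquisition loop."""
    while True:
        chunk = q.get()
        if chunk is None:
            break
        sink.write(chunk)


def run(reads: Iterable[bytes], sink: BinaryIO) -> None:
    q: "queue.Queue[Optional[bytes]]" = queue.Queue()
    writer = threading.Thread(target=log_to_file, args=(q, sink))
    writer.start()
    acquire(q, reads)  # unbounded queue: acquisition never blocks on the writer
    writer.join()      # wait for the consumer to drain the queue


if __name__ == "__main__":
    out = io.BytesIO()  # a real program would pass a file opened with "wb"
    run([b"\xa5\x01", b"\xbf\x1f"], out)
    print(out.getvalue().hex())  # a501bf1f
```

Because the queue is unbounded, the producer never waits on the disk; if the writer falls behind for a long time, memory use grows, so a real program might monitor the queue size.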

Mark Yedinak

Certified LabVIEW Architect

LabVIEW Champion

"Does anyone know where the love of God goes when the waves turn the minutes to hours?"

Wreck of the Edmund Fitzgerald - Gordon Lightfoot

09-27-2016 04:59 PM

@Iluso wrote: ...I just deleted this piece from the loop, and now it's as fast as I want.

Yeah, that 0 ms Wait forces the loop to release its thread and re-arbitrate for the OS's attention. Also make sure you disable debugging on that subVI.

"Should be" isn't "Is" -Jay